Digital Instrument Building and the Laptop Orchestra

Recommended publications

UBC High Notes Newsletter of the School of Music at the University of British Columbia

Fall 2012. Director’s Welcome. Welcome to the fourteenth edition of High Notes, celebrating the recent activities and major achievements of the faculty and students in the UBC School of Music! I think you will find the diversity and quality of accomplishments impressive and inspiring. A major highlight for me this year is the opportunity to welcome three exciting new full-time faculty members. Pianist Mark Anderson, with an outstanding international reputation, gave a brilliant first recital at the School in October. Jonathan Girard, our new Director of the UBC Symphony Orchestra and Assistant Professor of Conducting, led the UBC Symphony Orchestra before a full house of delighted audience members at the Chan Centre on November 9th. Musicologist Hedy Law, a specialist in 18th-century French opera and ballet, has already established herself well with students and faculty in the less public sphere of our academic activities. See page 4 to meet these new faculty members who are bringing wonderful new artistic and scholarly energies to the School. It is exciting to see the School evolve through its faculty members! Our many accomplished part-time instructors are also vital to the success and profile of the School. This year we welcome to our team several UBC music alumni who have won acclaim as artists and praise as educators: cellist John Friesen, composer Jocelyn Morlock, film and television composer Hal Beckett, and composer-critic-educator David Duke. They embody the success of our programs, and the impact of the UBC School of Music on the artistic life of our province and nation.

Computer Music

THE OXFORD HANDBOOK OF COMPUTER MUSIC. Edited by ROGER T. DEAN. OXFORD UNIVERSITY PRESS. Oxford University Press, Inc., publishes works that further Oxford University's objective of excellence in research, scholarship, and education. Oxford New York Auckland Cape Town Dar es Salaam Hong Kong Karachi Kuala Lumpur Madrid Melbourne Mexico City Nairobi New Delhi Shanghai Taipei Toronto. With offices in Argentina Austria Brazil Chile Czech Republic France Greece Guatemala Hungary Italy Japan Poland Portugal Singapore South Korea Switzerland Thailand Turkey Ukraine Vietnam. Copyright © 2009 by Oxford University Press, Inc. First published as an Oxford University Press paperback. Published by Oxford University Press, Inc. 198 Madison Avenue, New York, New York 10016 www.oup.com Oxford is a registered trademark of Oxford University Press. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of Oxford University Press. Library of Congress Cataloging-in-Publication Data: The Oxford handbook of computer music / edited by Roger T. Dean. p. cm. Includes bibliographical references and index. ISBN 978-0-19-979103-0 (alk. paper) 1. Computer music--History and criticism. I. Dean, R. T. Printed in the United States of America on acid-free paper. CHAPTER 12: SENSOR-BASED MUSICAL INSTRUMENTS AND INTERACTIVE MUSIC. ATAU TANAKA. Musicians, composers, and instrument builders have been fascinated by the expressive potential of electrical and electronic technologies since the advent of electricity itself.

The Evolution of the Performer Composer

CONTEMPORARY APPROACHES TO LIVE COMPUTER MUSIC: THE EVOLUTION OF THE PERFORMER COMPOSER. BY OWEN SKIPPER VALLIS. A thesis submitted to the Victoria University of Wellington in fulfillment of the requirements for the degree of Doctor of Philosophy. Victoria University of Wellington, 2013. Supervisory Committee: Dr. Ajay Kapur (New Zealand School of Music), Supervisor; Dr. Dugal McKinnon (New Zealand School of Music), Co-Supervisor. © OWEN VALLIS, 2013. NEW ZEALAND SCHOOL OF MUSIC. ABSTRACT. This thesis examines contemporary approaches to live computer music, and the impact they have on the evolution of the composer performer. How do online resources and communities impact the design and creation of new musical interfaces used for live computer music? Can we use machine learning to augment and extend the expressive potential of a single live musician? How can these tools be integrated into ensembles of computer musicians? Given these tools, can we understand the computer musician within the traditional context of acoustic instrumentalists, or do we require new concepts and taxonomies? Lastly, how do audiences perceive and understand these new technologies, and what does this mean for the connection between musician and audience? The focus of the research presented in this dissertation examines the application of current computing technology towards furthering the field of live computer music. This field is diverse and rich, with individual live computer musicians developing custom instruments and unique modes of performance. This diversity leads to the development of new models of performance, and the evolution of established approaches to live instrumental music. This research was conducted in several parts. The first section examines how online communities are iteratively developing interfaces for computer music.

Third Practice Electroacoustic Music Festival Department of Music, University of Richmond

University of Richmond UR Scholarship Repository. Music Department Concert Programs, Music, 11-3-2017. Third Practice Electroacoustic Music Festival. Department of Music, University of Richmond. Follow this and additional works at: https://scholarship.richmond.edu/all-music-programs Part of the Music Performance Commons. Recommended Citation: Department of Music, University of Richmond, "Third Practice Electroacoustic Music Festival" (2017). Music Department Concert Programs. 505. https://scholarship.richmond.edu/all-music-programs/505 This Program is brought to you for free and open access by the Music at UR Scholarship Repository. It has been accepted for inclusion in Music Department Concert Programs by an authorized administrator of UR Scholarship Repository. For more information, please contact [email protected].

Christopher Chandler, Acting Director. WELCOME to the 2017 Third Practice Electroacoustic Music Festival at the University of Richmond. The festival continues to present a wide variety of music with technology; this year's festival includes works for traditional instruments, glass harmonica, chin, pipa, laptop orchestra, fixed media, live electronics, and motion sensors. We are delighted to present Eighth Blackbird as ensemble-in-residence and trumpeter Sam Wells as our featured guest artist. Third Practice is dedicated not only to the promotion and creation of new electroacoustic music but also to strengthening ties within our community.

… festival presents works by students from schools including the University of Mary Washington, University of Richmond, University of Virginia, Virginia Commonwealth University, and Virginia Tech. Festivals are collaborative affairs that draw on the hard work, assistance, and commitment of many. I would like to thank my students and colleagues in the Department of Music for their engagement, dedication, and support; the staff of the Modlin Center for the Arts for their energy, time, and encouragement; and the Cultural Affairs Committee and the Music Department for finan…

Concerto for Laptop Ensemble and Orchestra

Louisiana State University, LSU Digital Commons. LSU Doctoral Dissertations, Graduate School, 2014. Concerto for Laptop Ensemble and Orchestra: The Ship of Theseus and Problems of Performance for Electronics With Orchestra: Taxonomy and Nomenclature. Jonathan Corey Knoll, Louisiana State University and Agricultural and Mechanical College, [email protected] Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Part of the Music Commons. Recommended Citation: Knoll, Jonathan Corey, "Concerto for Laptop Ensemble and Orchestra: The Ship of Theseus and Problems of Performance for Electronics With Orchestra: Taxonomy and Nomenclature" (2014). LSU Doctoral Dissertations. 248. https://digitalcommons.lsu.edu/gradschool_dissertations/248 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please contact [email protected]. CONCERTO FOR LAPTOP ENSEMBLE AND ORCHESTRA: THE SHIP OF THESEUS AND PROBLEMS OF PERFORMANCE FOR ELECTRONICS WITH ORCHESTRA: TAXONOMY AND NOMENCLATURE. A Dissertation Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Philosophy in The School of Music by Jonathan Corey Knoll, B.F.A., Marshall University, 2002; M.M., Bowling Green State University, 2006. December 2014. This dissertation is dedicated to the memory of Dr. Paul A. Balshaw (1938-2005). ACKNOWLEDGEMENTS. First and foremost, I would like to thank Dr. Stephen David Beck, not only for his help with this dissertation, but for the time and effort put into my education and the opportunities he opened for me at LSU.

Hypothesis for the Foundation of a Laptop Orchestra

Proceedings of the International Computer Music Conference 2011, University of Huddersfield, UK, 31 July - 5 August 2011. HYPOTHESIS FOR THE FOUNDATION OF A LAPTOP ORCHESTRA. Marco Gasperini, Meccanica Azione Sonora, [email protected]

ABSTRACT. In this article some theoretical issues about the foundations of a laptop orchestra will be presented, prompted by the actual involvement in the design of the S. Giorgio Laptop Ensemble in Venice. The main interest is in the development of an eco-systemic [10] kind of communication/interaction between performers. First some phenomenological features of orchestra and laptop will be reviewed, followed by a brief summary of the history of laptop orchestras. A coherent system according to the premises will then be developed defining what will be an orchestral player and what will be a conductor and how they will be musically interacting. Technical issues regarding the set-up of the orchestra and the means of communication between the elements of the orchestra will then be approached.

… been, but as for the use of the word, up to our days (at least the last two centuries), it is possible to trace some constant features (among many others) which are worth considering in the present perspective:
• it is a relatively wide instrumental group (there are no voices);
• it is divided in sections of instruments of similar timbres or of similar playing techniques (strings, woods, brass, percussions, etc.);
• it is generally conducted.
Starting from these considerations it can be said that an orchestra is characterized by a specific sound quality which depends on the mix of all the instrumental sounds produced by the musicians, that is to say by all the different timbres of the instruments, by their distribution in the performance place and, last but not least, by the …

A NIME Reader Fifteen Years of New Interfaces for Musical Expression

CURRENT RESEARCH IN SYSTEMATIC MUSICOLOGY. Alexander Refsum Jensenius, Michael J. Lyons, Editors. A NIME Reader: Fifteen Years of New Interfaces for Musical Expression. Current Research in Systematic Musicology, Volume 3. Series editors: Rolf Bader, Musikwissenschaftliches Institut, Universität Hamburg, Hamburg, Germany; Marc Leman, University of Ghent, Ghent, Belgium; Rolf Inge Godoy, Blindern, University of Oslo, Oslo, Norway. More information about this series at http://www.springer.com/series/11684 Editors: Alexander Refsum Jensenius, Department of Musicology, University of Oslo, Oslo, Norway; Michael J. Lyons, Department of Image Arts and Sciences, Ritsumeikan University, Kyoto, Japan. ISSN 2196-6966, ISSN 2196-6974 (electronic). Current Research in Systematic Musicology. ISBN 978-3-319-47213-3, ISBN 978-3-319-47214-0 (eBook). DOI 10.1007/978-3-319-47214-0 Library of Congress Control Number: 2016953639 © Springer International Publishing AG 2017. This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use.

Comp Prog Info BM 8-20

FLORIDA INTERNATIONAL UNIVERSITY SCHOOL OF MUSIC. Bachelor of Music, Composition. The Florida International University Music Composition Undergraduate Program: Philosophy and Mission Statement. The FIU Music Composition Undergraduate Program is designed to give students a strong background in the techniques and languages of a variety of musical styles ranging from common practice music to the most experimental contemporary approaches. Combining writing with analysis, the program is designed to produce composers who are proficient in a variety of musical languages while at the same time allowing for the evolution of an individual’s personal compositional craft and approach. An emphasis on musicianship, including the performance and conducting of a student's works, further enhances the student composer’s development as a competent musician. Numerous opportunities to have students’ music performed by excellent performers and ensembles, as well as hands-on experience in the use of new technologies including computer music, film scoring, video, and interactive and notational software, are an integral part of the curriculum. Many of our graduates have continued studies at other prestigious schools in the US and abroad and have been the recipients of ASCAP and BMI Student Composition awards and other important prizes. The four-year music composition program at FIU prepares students either for continued graduate studies in composition or for work as skillful composers in a variety of related occupations. For more information regarding the program contact:

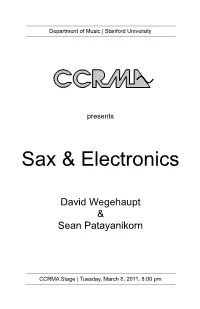

Sax & Electronics

Department of Music | Stanford University presents Sax & Electronics. David Wegehaupt & Sean Patayanikorn. CCRMA Stage | Tuesday, March 8, 2011, 8:00 pm

CONCERT PROGRAM: a Stanford/Berkeley exchange concert
Chopper (2009), by Chris Chafe (*)
Três Fontes (2008), by Bruno Ruviaro
Let It Begin With Me (2010-2011), by Liza White
is the same... is not the same (2004), by Rob Hamilton
Métal Re-sculpté II (2010), by Heather Frasch
David Wegehaupt and Sean Patayanikorn, saxophones
(*) Special participation: Matthew Burtner and Justin Yang playing saxophones over the network from Virginia and Belfast.
Please turn off all your electronic devices.

PROGRAM NOTES. Chopper (2009): This is a portrait in sound to remember: a still space in time, flying in a helicopter over a raging natural disaster, sitting in my kitchen at a laptop. Extended at least an hour, it was utterly quiet save for the background from the ventilation in our apartment. Raw news stream, global access, great lens, Santa Barbara, Banff, satellites... now it's a chance to add rotors in retrospect and slow down again to the pace of that unfolding. In this portrait, also a reflection on those who already work this way, every day. Chris Chafe is a composer, improvisor, cellist, and music researcher with an interest in computers and interactive performance. He has been a long-term denizen of the Center for Computer Research in Music and Acoustics where he is the center's director and teaches computer music courses. Three year-long periods have been spent at IRCAM, Paris, and The Banff Centre making music and developing methods for computer sound synthesis.

Stanford Laptop Orchestra (SLOrk)

International Computer Music Conference (ICMC 2009). STANFORD LAPTOP ORCHESTRA (SLORK). Ge Wang, Nicholas Bryan, Jieun Oh, Rob Hamilton. Center for Computer Research in Music and Acoustics (CCRMA), Stanford University. {ge, njb, jieun5, rob}@ccrma.stanford.edu

ABSTRACT. In this paper, we chronicle the instantiation and adventures of the Stanford Laptop Orchestra (SLOrk), an ensemble of laptops, humans, hemispherical speaker arrays, interfaces, and, more recently, mobile smart phones. Motivated to deeply explore computer-mediated live performance, SLOrk provides a platform for research, instrument design, sound design, new paradigms for composition, and performance. It also offers a unique classroom combining music, technology, and live performance. Founded in 2008, SLOrk was built from the ground-up by faculty and students at Stanford University's Center for Computer Research in Music and Acoustics (CCRMA). This document describes 1) how SLOrk was built, 2) its initial performances, and 3) the Stanford Laptop Orchestra as a classroom. We chronicle its present, and look to its future.

1. INTRODUCTION. The Stanford Laptop Orchestra (SLOrk) is a large-scale, computer-mediated ensemble that explores cutting-edge technology in combination with conventional musical contexts - while radically transforming both.

Figure 1. SLOrk classroom; SLOrk speaker arrays.

2. AESTHETIC AND RELATED WORK. The Stanford Laptop Orchestra embodies an aesthetic that is also central to the Princeton Laptop Orchestra [8, 9, 10, 12], or PLOrk – the first ensemble of this kind, while fully leveraging CCRMA’s natural intersection of disciplines (Music, Electrical Engineering, Cognition, Physical Interaction Design, Performance, and Computer Science, etc.) to explore new possibilities in research, musical performance, and education. Recently, more laptop-mediated ensembles have emerged, including CMLO: Carnegie Mellon's Laptop Orchestra [3], MiLO: the Milwaukee Laptop Orchestra [1], …

A Managed Risk: Mediated Musicianships in a Networked Laptop Orchestra

Edinburgh Research Explorer. A managed risk. Citation for published version: Valiquet, P 2018, 'A managed risk: Mediated musicianships in a networked laptop orchestra', Contemporary Music Review, vol. 37, no. 5-6, pp. 646-665. https://doi.org/10.1080/07494467.2017.1402458 Digital Object Identifier (DOI): 10.1080/07494467.2017.1402458 Link: Link to publication record in Edinburgh Research Explorer. Document Version: Peer reviewed version. Published In: Contemporary Music Review. General rights: Copyright for the publications made accessible via the Edinburgh Research Explorer is retained by the author(s) and / or other copyright owners and it is a condition of accessing these publications that users recognise and abide by the legal requirements associated with these rights. Take down policy: The University of Edinburgh has made every reasonable effort to ensure that Edinburgh Research Explorer content complies with UK legislation. If you believe that the public display of this file breaches copyright please contact [email protected] providing details, and we will remove access to the work immediately and investigate your claim. Download date: 25. Sep. 2021.

A managed risk: Mediated musicianships in a networked laptop orchestra. Patrick Valiquet, Institute of Musical Research, Royal Holloway, University of London. Forthcoming in Contemporary Music Review 36 (5-6) 2017. ‘Mediation’ is perhaps one of the most powerful terms in contemporary music studies, but also among the most accommodating. While we can sometimes discern genealogical or conceptual convergences among its various analytical articulations, at the same time one of the term’s most interesting features is its persistent multiplicity. It is eminently relational, but also slippery.

Building Web Based Interactive Systems with Csound PNaCl and WebSockets

Ashvala Vinay and Dr. Richard Boulanger. Building Web Based Interactive Systems. BUILDING WEB BASED INTERACTIVE SYSTEMS WITH CSOUND PNACL AND WEBSOCKETS. Ashvala Vinay, Berklee College of Music, avinay [at] berklee [dot] edu. Dr. Richard Boulanger, Berklee College of Music, rboulanger [at] berklee [dot] edu. This project aims to harness WebSockets to build networkable interfaces and systems using Csound’s Portable Native Client binary (PNaCl) and Socket.io. There are two methods explored in this paper. The first method is to create an interface to Csound PNaCl on devices that are incapable of running the Native Client binary, for example, by running Csound PNaCl on a desktop and controlling it with a smartphone or a tablet. The second method is to create an interactive music environment that allows users to run Csound PNaCl on their computers and use musical instruments in an orchestra interactively. In this paper, we will also address some of the practical problems that exist with modern interactive web-based/networked performance systems: latency, local versus global, and everyone controlling every instrument versus one person per instrument. This paper culminates in a performance system that is robust and relies on web technologies to facilitate musical collaboration between people in different parts of the world. Introduction. At the 2015 Web Audio Conference, Csound developers Steven Yi, Victor Lazzarini and Edward Costello delivered a paper about the Csound Emscripten build and the Portable Native Client binary (PNaCl). The PNaCl binary works exclusively on Google Chrome and Chromium browsers. It allows Csound to run on the web with very minimal performance penalties.