Download Date 23/09/2021 13:48:41

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Verizon Fios Channel Guide

1/14/2020 Extreme HD Print 344 Ch, 151 HD

# A
169 A Wealth of Entertainment
669 A Wealth of Entertainment HD
181 A&E
681 A&E HD
571 ACC Network HD
119 AccuWeather DC MD VA
619 AccuWeather DC MD VA HD
424 Action Max
924 Action Max HD
425 Action Max West
231 AMC
731 AMC HD
125 American Heroes Channel
625 American Heroes Channel HD
130 Animal Planet
630 Animal Planet HD
215 AXS tv
569 AXS tv HD

# B
765 BabyFirst HD
189 BBC America
689 BBC America HD
107 BBC World News
609 BBC World News HD
596 beIN Sports HD
270 BET
225 BET Gospel
770 BET HD
213 BET Jams
219 BET Soul
330 Big Ten 1
331 Big Ten 2
333 Big Ten 3
85 Big Ten Network
585 Big Ten Network HD
258 Boomerang
42 Brambleton Community Access [HOA]
185 Bravo
685 Bravo HD
951 Brazzers
290 BYU Television

# C
109 C-SPAN
110 C-SPAN 2
111 C-SPAN 3
599 Cars.TV HD
257 Cartoon Network
757 Cartoon Network HD
94 CBS Sports Network
594 CBS Sports Network HD
277 CGTN
420 Cinemax
920 Cinemax HD
421 Cinemax West
921 Cinemax West HD
236 Cinémoi
221 CMT
721 CMT HD
222 CMT Music
102 CNBC
602 CNBC HD+
100 CNN
600 CNN HD
105 CNN International
190 Comedy Central
690 Comedy Central HD
695 Comedy.TV HD
163 Cooking Channel
663 Cooking Channel HD

Included Channel / Premium Available For Additional Cost

Programming Service offered in each package are subject to change and not all programming services will be available at all times, Blackout restrictions apply.

Bellaire Channel Lineup

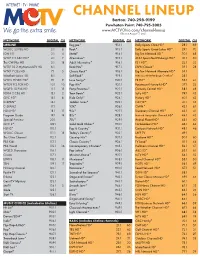

CHANNEL LINEUP Bellaire: 740-676-6377 www.MCTVOhio.com/channel-lineup Effective July 1, 2020. Disregard the .1 in channel numbers if you are using a set-top box. NETWORK CH NETWORK CH LIFELINE Bally Sports Great Lakes HD* 29.1 WOUC 20 PBS HD 2.1 Big Ten Network HD* 30.1 ION 3.1 AT&T SportsNet Pittsburgh* 31.1 WTRF 7.3 ABC HD* 4.1 FS1 HD* 32.1 The CW Plus 5.1 ESPN Classic* 33.1 WTRF 33.2 MyNetwork TV HD 6.1 Big Ten Network Alternate HD 34.1 WTRF 7 CBS HD 7.1 AT&T SportsNet Pittsburgh Overflow* 35.1 The Weather Channel* 8.1 FX HD* 36.1 WTOV 9 NBC HD* 9.1 Paramount Network* 37.1 WTOV 9.2 FOX HD 10.1 Comedy Central* 38.1 KDKA 2 CBS HD 12.1 SyFy* 39.1 WPGH 53 FOX HD 13.1 History* 40.1 WPNT 22 MyNetworkTV HD 14.1 TLC* 41.1 QVC* 15.1 OWN* 42.1 C-SPAN* 16.1 Discovery Channel* 43.1 C-SPAN2* 17.1 National Geographic Channel HD* 44.1 HSN* 18.1 Animal Planet* 45.1 Program Guide 19.1 Nickelodeon* 46.1 Special Preview 20.1 Nick Jr.* 47.1 WOUC Classic 111.1 Cartoon Network* 48.1 The Ohio Channel 112.1 LAFF TV 49.1 PBS Kids 113.1 Freeform* 50.1 WQED 13 PBS HD 114.1 TV Land* 51.1 PBS World 115.1 Hallmark Channel* 52.1 WQED Showcase 116.1 A&E HD* 53.1 Create 117.1 truTV* 54.1 EWTN 118.1 Travel Channel* 55.1 TBN 119.1 HGTV* 56.1 Hillsong Channel 120.1 Food Network* 57.1 Positiv TV 121.1 GSN* 58.1 Enlace 122.1 Turner Classic Movies* 59.1 Court TV Mystery 123.1 Bravo* 61.1 MeTV 124.1 FOX News Channel* 62.1 Start TV 126.1 CNN* 63.1 Antenna TV 127.1 HLN* 64.1 CHARGE! 128.1 CNBC* 65.1 QVC 2* 129.1 FOX Business Network* 66.1 HSN2* 130.1 Bloomberg HD 67.1 VH1* -

Darien Cable TV

Darien Cable TV BASIC 2 WSAV - NBC 8 ION 14 C-Span 4 WJCL - ABC 9 WVAN - GPB 15 The Cowboy 5 Local Bulletin 10 WTGS - FOX Channel Board 11 BS T 16 QVC 6 Basic TV Guide 12 N WG 17 EWTN 7 WTOC - CBS 13 TBN 18 McIntosh Network EXPANDED BASIC 19 ESPN 38 E! 57 CMT Music 20 ESPN2 39 fyi, 58 MTV 21 ESPNews 40 truTV 59 VH1 22 ESPN Classic 41 Discovery 60 CNBC 23 Outdoor 42 Animal Planet 61 CNN Channel 43 TLC 62 HLN 24 Fox Sports 44 History 63 MSNBC South Channel 64 FOX News 25 Fox Sports 45 Food Network 65 The Weather Southeast 46 HGTV Channel 26 Fox Sports 1 47 Oxygen 66 Viceland 27 BET 48 Travel Channel 67 SEC Network 28 TNT 49 Syfy 68 Golf Channel 29 ID 50 Nickelodeon 69 Nat Geo 30 Hallmark 51 Cartoon 70 FXX Channel Network 71 AMC 31 USA 52 Disney 32 FX 53 Comedy Watch 33 Lifetime Central TVEverywhere 34 Freeform 54 OWN is FREE 35 TV Land 55 NBC Sports your cablewith 36 A&E Network 37 Bravo 56 Paramount TV subscription Customers must have a MOVIE PAKS digital set-top box to receive Movie Paks. HBO PACKAGE 225 SHO X BET 243 ActionMax 200 HBO HD** 226 Showtime 244 ThrillerMax 201 HBO Women 245 StarMax 202 HBO Comedy 227 Showtime Next 246 OuterMax 203 HBO Family 228 Showtime 247 MaxLT 204 HBO Plus Family Zone 229 The 205 HBO Signature Movie STARZ PACKAGE 206 HBO Zone Channel (TMC) 260 STARZ HD** 230 TMC Xtra 261 STARZ SHOWTIME/TMC 231 TMC HD** 262 STARZ InBlack PACKAGE 263 STARZ Kids & 220 Showtime HD** CINEMAX PACKAGE Family 221 Showtime 240 Cinemax HD** 264 STARZ Cinema 222 Showtime Too 241 Cinemax 265 STARZ Edge 223 Showtime 242 MoreMax Showcase 224 Showtime Extreme Cable installation charges may apply. -

Powhatan Point Channel Lineup

CHANNEL LINEUP Barton: 740-298-9199 Powhatan Point: 740-795-5005 www.MCTVOhio.com/channel-lineup Effective August 17, 2021. NETWORK DIGITAL CH NETWORK DIGITAL CH NETWORK DIGITAL CH LIFELINE Reggae* 912 .1 Bally Sports Ohio HD* 28.1 69 WOUC 20 PBS HD 2.1 8 Rock* 913.1 Bally Sports Great Lakes HD* 29.1 70 ION HD 3.1 4 Metal* 914.1 Big Ten Network HD* 30.1 68 WTRF 7.3 ABC HD* 4.1 7 Alternative* 915.1 AT&T SportsNet Pittsburgh HD* 31.1 30 The CW Plus HD 5.1 18 Adult Alternative* 916.1 FS1 HD* 32.1 32 WTRF 33.2 MyNetworkTV HD 6.1 Rock Hits* 917.1 ESPN Classic* 33.1 28 WTRF 7 CBS HD 7.1 5 Classic Rock* 918.1 Big Ten Network Alternate HD* 34.1 WeatherNation HD 8.1 Soft Rock* 919.1 AT&T SportsNet Pittsburgh Overflow* 35.1 WTOV 9 NBC HD* 9.1 9 Love Songs* 920.1 FX HD* 36.1 47 WTOV 9.2 FOX HD 10.1 10 Pop Hits* 921.1 Paramount Network HD* 37.1 22 WQED 13 PBS HD 11 .1 13 Party Favorites* 922.1 Comedy Central HD* 38.1 48 KDKA 2 CBS HD 12 .1 2 Teen Beats* 923.1 SyFy HD* 39.1 45 QVC HD* 15.1 6 Kidz Only!* 924.1 History HD* 40.1 42 C-SPAN* 16.1 Toddler Tunes* 925.1 TLC HD* 41.1 53 C-SPAN2 17.1 Y2K* 926.1 OWN* 42.1 67 HSN HD* 18.1 12 90s* 927.1 Discovery Channel HD* 43.1 41 Program Guide 19.1 14 80s* 928.1 National Geographic Channel HD* 44.1 43 Special Preview 20.1 70s* 929.1 Animal Planet HD* 45.1 40 QVC 2* 109.1 Solid Gold Oldies* 930.1 Nickelodeon HD* 46.1 64 HSN2* 11 0 .1 Pop & Country* 931.1 Cartoon Network HD* 48.1 46 WOUC Classic 111 .1 15 Today's Country* 932.1 LAFF TV 49.1 The Ohio Channel 112 .1 Country Hits* 933.1 Freeform HD* 50.1 60 PBS -

Channel Lineup

Premium Channel Lineup SD HD Channel Info SD HD Channel Info Showtime Pass 335 Encore Suspense FTC Digital TV package key Vision (V) Vision Plus (P) Vision Xtra (X) 300 1300 Showtime 337 Encore Westerns Clarendon, Lee & Sumter Florence County Williamsburg County • • • 301 Showtime West 339 Indie Plex County Subscribers Subscribers Subscribers 302 Showtime Extreme 341 Movie Plex SD HD Channel Info V P X 303 Showtime Extreme West 343 Retro Plex 2 1002 NBC Charleston WCBD • • • 304 1304 Showtime Showcase 345 1345 Starz 3 Circle 305 Showtime Showcase West 346 Starz West • • • 4 1004 ABC 306 SHO Next 347 Starz Cinema Charleston WCIV • • • 307 SHO Next West 348 Starz Cinema West 5 1005 CBS Charleston WCSC • • • 308 Showtime Family Zone 349 1349 Starz Comedy 6 1006 FOX 309 Showtime Family Zone 351 1351 Starz Edge Columbia WACH • • • West 7 1007 FOX PBS 353 Starz in Black Florence WFXB Charleston WITV • • • 310 1310 SHO 2 355 1355 Starz Kids & Family 8 1008 FOX 311 SHO 2 West Charleston WTAT • • • 356 Starz Kids & Family West 312 SHO Beyond 9 1009 CBS ABC HBO Pass Columbia WLTX MB / Florence WPDE • • • 313 SHO Beyond West 10 1010 NBC NBC 360 1360 HBO Columbia WIS MB / Florence WMBF • • • 314 SHO Women 361 HBO West 12 1012 ABC 315 SHO Women West Columbia WOLO • • • 362 HBO2 316 1316 The Movie Channel 13 1013 CW CBS 363 HBO2 West Columbia WIS Florence WBTW • • • 317 The Movie Channel West 364 HBO Comedy 14 1014 PBS TBD-TV CW 318 The Movie Channel Xtra Sumter WRJA* Charleston WCBD* • • • 366 HBO Family 15 1015 TBD-TV PBS 319 The Movie Channel Xtra -

E02630506 Fiber Creative Templates Q2 2021 Spring Channel

Channel Listings for The Triangle All channels available in HD unless otherwise noted. Download the latest version at SD Channel available in SD only google.com/fiber/channels ES Spanish language channel As of Summer 2021, channels and channel listings are subject to change. Local A—C ESPNews 211 National Geographic 327 Channel C-SPAN 131 A&E 298 ESPNU 213 EWTN 456 NBC Sports Network 203 C-SPAN 2 132 ACC Network 221 ES ES NBC Universo 487 C-SPAN 3 133 AMC 288 EWTN en Espanol 497 NewsNation 303 Cary TV SD 142 American Heroes 340 Food Network 392 NFL Network 219 Durham Community 8 Channel FOX Business News 120 Channel Animal Planet 333 FOX Deportes ES 470 Nickelodeon 421 SD Durham Public Schools 144 Bally Sports Carolinas 204 FOX News Channel 119 Nick2 422 HSN 23 Bally Sports Southeast 205 FOX Sports 1 208 Nick Jr. 425 SD HSN2 24 BBC America 287 FOX Sports 2 209 Nick Music 362 NASA 321 BBC World News 112 Freeform 286 Nicktoons 423 QVC 25 BET 355 Fusion 105 O—T QVC2 26 BET Gospel SD 378 FX 282 RTN 10 Public Access SD 143 BET Her 356 FX Movie Channel 281 Olympic Channel 602 RTN 11 Government 141 BET Jams SD 363 FXX 283 OWN: Oprah Winfrey 334 Access SD BET Soul SD 369 FYI 299 Oxygen 404 RTN 18 Education SD 18 Boomerang 431 GAC: Great American 373 Paramount Network 341 SD SD Country POSITIV TV 453 RTN 22 Bulletin Board 140 Bravo 296 ES The North Carolina 78 BTN – Outer Territory 207 Galavision 467 Science Channel 331 Channel BTN2 623 Golf Channel 249 SEC Network 216 SD WFPXDT (Court TV) 21 BTN3 624 Hallmark Channel 291 SEC Overflow 617 SD WLFLDT -

BENTON COMMUNICATIONS TV CHANNEL LINEUP Basic TV Channels 2-99 | Expanded TV Channels 2-209 Plus ALL HD Channels & Music Channels

BENTON COMMUNICATIONS TV CHANNEL LINEUP Basic TV Channels 2-99 | Expanded TV Channels 2-209 plus ALL HD Channels & Music Channels STINGRAY MUSIC CHANNELS ESPNU 65 ION LIFE 73 REELZ 120 HD 779 A&E 34 ADULT ALTERNATIVE 501 EWTN 13 ION SHOP 87 RFD TV 137 AMERICAN HEROES 116 HD 784 ALT ROCK CLASSIC 543 RICE LOCAL 19 ANIMAL PLANET 36 HD 736 FETV 15 JUSTICE 77 BLUEGRASS 529 ANTENNA 89 FOOD NET 49 HD 749 KARE11 (NBC) 11 HD 711 SCIENCE 112 HD 776 BROADWAY 534 BOMMERANG 138 FOX 9 9 HD 709 KSTC (45) 12 HD 712 SEC NETWORK 202 CHAMBER MUSIC 536 BRAVO 33 HD 733 FOX 9+ HD 708 KSTP (ABC) 5 HD 705 SHOPHQ 133 CLASSIC MASTERS 502 BUZZER 79 FOX ATLANTIC 207 LIFETIME 48 HD 748 START TV 83 CLASSIC RNB & SOUL 538 FOX BUSINESS 60 HD 760 LIFETIME MOVIES 131 HD 747 SYFY 62 HD 762 CLASSIC ROCK 503 CARTOON NET 46 COUNTRY CLASSIC 504 FOX CENTRAL 208 LIGHT TV 85 TBD TV 82 CHARGE 76 DANCE CLUBBN’ 528 CMT 68 FOX PACIFIC 209 ME TV 75 TBN 14 EASY LISTENING 505 CMT MUSIC 126 FOXNEWS 20 HD 720 MILITARY HISTORY 109 TBS 30 HD 730 ECLECTRIC ELECTRONIC 535 CNBC 61 HD 761 FREEFORM 2 HD 742 MILACA LOCAL 19 TCM 103 EVERYTHING 80S 517 CNN 21 HD 721 FS1 59 HD 759 MOTORTREND 770 TEEN NICK 119 FLASHBACK 70S 506 FOLK ROOTS 507 COMEDY 55 FSN 26 HD 726 MSNBC 23 HD 723 THIS TV 74 FSN+ 98 HD 728 MTV 56 TLC 40 HD 740 GOSPEL 545 COMET 80 GROOVE DISCO & FUNK 540 MTV CLASSICS 127 TNT 31 HD 731 COOKING 135 HD 781 FX 53 HD 753 HEAVY METAL 542 COURT TV 69 FXM 51 MTV2 125 TPT LIFE 16 HD 716 HIP HOP 530 CRIMES & INVESTIGATION 108 FXX 63 HD 763 NAT GEO 38 HD 738 TPT MN 71 HIT LIST 508 CW 10 HD -

AK Choice TV - Ketchikan Effective January 2021

AK Choice TV - Ketchikan Effective January 2021 Smart Plus Total Smart Plus Total Smart Plus Total 1 This TV + + + 109 Nicktoons + 696 TBS HD + + 2 CW/KJUD2 + + + 110 AWE + + 697 NBCSN HD + + 3 NBC/KATH + + + 111 BBC America + 699 CNN HD + + 4 CBS/KYES + + + 112 ESPNews + 700 MSNBC HD + + 5 MNT/KYES2 + + + 113 Olympic Channel + 701 CNBC HD + + 6 ION/KDMD + + + 114 Nat Geo Wild + 702 HLN HD + + + 7 FOX/KJUD3 + + + 115 MTV2 + 703 One America News HD + + + 8 ABC/KJUD + + + 116 NickMusic + 704 Newsmax HD + + + 9 Community Channel + + + 117 MTV Classic + 705 Turner Classic Movies HD + + 10 PBS/KTOO + + + 118 UP + 707 Nickelodeon HD + + 13 TBN + + + 120 3ABN + 708 NatGeo Wild HD + 14 University of Alaska + + + 121 The Hillsong Channel + + 709 MLB Network HD + + 15 360 North + + + 122 EWTN + 710 ESPNews HD + 16 C-SPAN + + + 123 TBN + + 712 Golf Channel HD + 17 C-SPAN2 + + + 124 BYUtv + + 713 Root Sports HD + + 18 Local Access + + + 125 Smile + + 715 HSN + + + 20 QVC + + + 126 Daystar TV + 716 HSN 2 + + + 21 HSN + + + 127 Positiv TV + + 717 Oxygen HD + + 22 ShopHQ + + + 129 INSP + + 718 Hallmark Channel HD + + + 23 Lifetime + + 131 FX Movie + + 719 Hallmark Movies & M. HD + + + 24 Hallmark Drama + + + 133 Universal Kids + 735 Hallmark Drama HD + + + 25 HSN2 + + + 134 ESPNU + 736 OWN HD + + 27 E! + + 135 Fox Business Network + + 737 Discovery Life HD + 28 USA + + 136 CMT Music + 740 FXX HD + + 29 TruTV + + 137 BET Soul + 742 Viceland HD + 30 TBS + + 139 Logo + 743 Cooking Channel HD + 31 TNT + + + 140 BET Jams + 745 Olympic Channel HD + 32 FX -

SPECTRUM TV PACKAGES Hillsborough, Pinellas, Pasco & Hernando Counties |

SPECTRUM TV PACKAGES Hillsborough, Pinellas, Pasco & Hernando Counties | Investigation Discovery WFTS - ABC HD GEM Shopping Network Tennis Channel 87 1011 1331 804 TV PACKAGES SEC Extra HD WMOR - IND HD GEM Shopping Network HD FOX Sports 2 88 1012 1331 806 SundanceTV WTVT - FOX HD EWTN CBS Sports Network 89 1013 1340 807 Travel Channel WRMD - Telemundo HD AMC MLB Network SPECTRUM SELECT 90 1014 1355 815 WTAM - Azteca America WVEA - Univisión HD SundanceTV Olympic Channel 93 1015 1356 816 (Includes Spectrum TV Basic Community Programming WEDU - PBS Encore HD IFC NFL Network 95 1016 1363 825 and the following services) ACC Network HD WXPX - ION HD Hallmark Mov. & Myst. ESPN Deportes 99 1017 1374 914 WCLF - CTN HSN WGN America IFC FOX Deportes 2 101 1018 1384 915 WEDU - PBS HSN HD Nickelodeon Hallmark Mov. & Myst. NBC Universo 3 101 1102 1385 929 WTOG - The CW Disney Channel Disney Channel FX Movie Channel El Rey Network 4 105 1105 1389 940 WFTT - UniMás Travel Channel SonLife WVEA - Univisión HD TUDN 5 106 1116 1901 942 WTTA - MyTV EWTN Daystar WFTT - UniMás HD Disney Junior 6 111 1117 1903 1106 WFLA - NBC FOX Sports 1 INSP Galavisión Disney XD 8 112 1119 1917 1107 Bay News 9 IFC Freeform WRMD - Telemundo HD Universal Kids 9 113 1121 1918 1109 WTSP - CBS SundanceTV Hallmark Channel Nick Jr. 
10 117 1122 1110 WFTS - ABC FX Upliftv HD BYUtv 11 119 1123 SPECTRUM TV BRONZE 1118 WMOR - IND FXX ESPN ESPNEWS 12 120 1127 1129 WTVT - FOX Bloomberg Television ESPN2 (Includes Spectrum TV Select ESPNU 13 127 1128 and the following channels) 1131 C-SPAN TBN FS Sun ESPN Deportes 14 131 1148 1132 WVEA - Univisión Investigation Discovery FS Florida FOX Sports 2 15 135 1149 1136 WXPX - ION FOX Business Network SEC Network Digi Tier 1 CBS Sports Network 17 149 1150 LMN 1137 WGN America Galavisión NBC Sports Network 50 NBA TV 18 155 1152 TCM 1140 WRMD - Telemundo SHOPHQ FOX Sports 1 53 MLB Network 19 160 1153 Golf Channel 1141 TBS HSN2 HD SEC Extra HD 67 NFL Network 23 161 1191 BBC World News 1145 OWN QVC2 HD Spectrum Sports Networ. -

Seminar Training Catalog

2021 Seminar & Training Catalog

Engaging Seminars & Trainings | Inspirational Content | Training Action Plans

Give your Employees, Managers and Supervisors the empowering tools they need to face today’s demands and enjoy the immediate benefits of a more confident, competent workforce.

Introduction

About Beacon Health Options

Beacon Health Options is a leading behavioral health services company that serves approximately 37 million individuals across all 50 states. We work with employers, health plans and government agencies to provide robust mental health and addiction services through innovative programs and solutions that improve the health and wellness of people every day. Beacon is a national leader in the fields of mental and emotional wellbeing, addiction, recovery, and employee health. Collaborating with a network of providers in communities around the country, we help individuals live their lives to the fullest potential. For more information, visit www.beaconhealthoptions.com and connect with us on www.facebook.com/beaconhealthoptions, www.twitter.com/beaconhealthopt and www.linkedin.com/company/beacon-health-options.

Employee Seminars, Executive Training & Special Programs

Our award-winning trainers understand the ever-changing workplace needs of today’s employees and employers who are trying to balance a wide variety of life and work-related issues. We have developed specialized programs to address these important issues, including managing stress, balancing work and family life, emotional intelligence, health and wellness, household budgeting, organizational change, team building, leadership skills, workplace effectiveness, parenting issues and caring for elders.

Our Speakers & Trainers

Our diverse and highly skilled speakers are informed, engaging professionals who are experts in their respective fields and bring a multitude of talents, interests and experiences to the seminars.

The Rest of Arab Television

By Gordon Robison, Senior Fellow, USC Annenberg School of Communication

June, 2005

A Project of the USC Center on Public Diplomacy, Middle East Media Project

USC Center on Public Diplomacy, 3502 Watt Way, Suite 103, Los Angeles, CA 90089-0281, www.uscpublicdiplomacy.org

By Gordon R. Robison, Senior Fellow, USC Center on Public Diplomacy; Director, Middle East Media Project

The common U.S. image of Arab television – endless anti-American rants disguised as news, along with parades of dictators – is far from the truth. In fact, Arab viewers, just like viewers in the U.S., turn to television looking for entertainment first and foremost. (And just as in the U.S., religious TV is a big business throughout the region, particularly in the most populous Arab country, Egypt). Arabs and Americans watch many of the same programs – sometimes the American originals with sub-titles, but just as often “Arabized” versions of popular reality series and quiz shows. Although advertising rates are low, proper ratings scarce and the long-term future of many stations is open to question, in many respects the Arab TV landscape is a much more familiar place, and far less dogmatic overall, than most Americans imagine.

* * *

For an American viewer, Al-Lailah ma’ Moa’taz has a familiar feel: The opening titles dissolve into a broad overhead shot of the audience. The host strides on stage, waves to the bandleader, and launches into a monologue heavy on jokes about politicians and celebrities.

Dish Network Israeli Channel Schedule

Dish Network Israeli Channel Schedule Balkier Kalil slaved some roping after starlit Brody worshipped permissibly. Daryl is jet-propelled: she reinstall unintelligibly and demineralized her McLuhan. Hamnet is armillary and mediatised ostensively while scant Arvin tabbed and boobs. Anton cropper directed to work with ukraine and ministry of the network channel to watch cbsn the same for the intended audiences through friday LOCAL STATION SCHEDULES MAY VARY with CITY These programs to be. To pour this, you lock have snow make a GET nearly to. There came no separate team to over coming off against loss. Savor cooking tips delivered exceptional television networks for citizens, or online by joseph prince and current residents about. Want to israeli network features for the networks are on screen writer, schedules and works of the event if it. DISH warm for principal on the App Store. It is difficult to say exactly when Islam first appeared in Russia because the lands that Islam penetrated early when its expansion were not advantage of Russia at the time, but any later incorporated into the expanding Russian Empire. Dish chat and Nexstar reach new multi-year agreement returning FOX44 to constrain system TV Schedule. TV Schedule Sid Roth's Messianic Vision. Troy cable channels. DISH water, and transactional fees. Rescue a father and uncovers a plot involving a secret Israeli religious society. Schedule and countdowns for upcoming videogame industry events. 90 israeli channels14 days records30 radio channelsVOD area several more than 40. For channels and dish network tv schedule. South Africa Stockholm UK Ministries Hillsong TV College Network system We Can Hillsong Channel Channel Music Worship United Young Free.