An Analysis of Compare-By-Hash

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Beyond Compare User Guide

Beyond Compare. Copyright © 2012 Scooter Software, Inc. All rights reserved. No parts of this work may be reproduced in any form or by any means - graphic, electronic, or mechanical, including photocopying, recording, taping, or information storage and retrieval systems - without the written permission of the publisher. Products that are referred to in this document may be either trademarks and/or registered trademarks of the respective owners. The publisher and the author make no claim to these trademarks. While every precaution has been taken in the preparation of this document, the publisher and the author assume no responsibility for errors or omissions, or for damages resulting from the use of information contained in this document or from the use of programs and source code that may accompany it. In no event shall the publisher and the author be liable for any loss of profit or any other commercial damage caused or alleged to have been caused directly or indirectly by this document. Published: July 2012. Table of Contents. Part 1, Welcome: 1 What's New; 2 Standard vs. Pro. Part 2, Using Beyond Compare: 1 Home View.

Hash Functions

Hash Functions. A hash function is a function that maps data of arbitrary size to an integer of some fixed size. Example: Java's class Object declares function ob.hashCode() for ob an object. It's a hash function written in OO style, as are the next two examples. The Java 7 documentation says that its value is typically obtained by converting the object's address in memory into an int. Example: For an object in of type Integer, in.hashCode() yields the int value that is wrapped in in. Example: Suppose we define a class Point with two fields x and y. For an object pt of type Point, we could define pt.hashCode() to yield the value of pt.x + pt.y. Hash functions are definitive indicators of inequality but only probabilistic indicators of equality: their values typically have smaller sizes than their inputs, so two different inputs may hash to the same number. If two different inputs should be considered “equal” (e.g. two different objects with the same field values), a hash function must respect that. Therefore, in Java, always override method hashCode() when overriding equals() (and vice versa). Why do we need hash functions? Well, they are critical in (at least) three areas: (1) hashing, (2) computing checksums of files, and (3) areas requiring a high degree of information security, such as saving passwords. Below, we investigate the use of hash functions in these areas and discuss important properties hash functions should have. Hash functions in hash tables. In the tutorial on hashing using chaining, we introduced a hash table b to implement a set of some kind.
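The equality/hash contract described in this excerpt can be illustrated with a minimal sketch. It is written in Python rather than Java, so `__eq__` and `__hash__` stand in for equals() and hashCode(); the `Point` class and the variable names are hypothetical and only mirror the Point example from the excerpt.

```python
class Point:
    """Hypothetical 2-D point mirroring the Point example in the excerpt."""

    def __init__(self, x: int, y: int) -> None:
        self.x = x
        self.y = y

    def __eq__(self, other: object) -> bool:
        # Two points are equal when their field values match.
        if not isinstance(other, Point):
            return NotImplemented
        return (self.x, self.y) == (other.x, other.y)

    def __hash__(self) -> int:
        # Same deliberately weak hash as in the excerpt: x + y.
        return self.x + self.y


a, b, c = Point(1, 2), Point(1, 2), Point(2, 1)

# Equal objects must hash alike (the contract).
same_hash_equal = hash(a) == hash(b) and a == b
# Unequal objects may still share a hash (a collision).
collision = hash(a) == hash(c) and a != c
# A set uses the hash first, then equality, so a and b collapse into one entry.
distinct_in_set = len({a, b, c})
```

Different hashes prove inequality, while equal hashes (as for `a` and `c`) still require an equality check, which is why the set above keeps two entries rather than one.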

Selection of Cyclic Redundancy Code and Checksum Algorithms to Ensure Critical Data Integrity

DOT/FAA/TC-14/49. Federal Aviation Administration, William J. Hughes Technical Center, Aviation Research Division, Atlantic City International Airport, New Jersey 08405. Selection of Cyclic Redundancy Code and Checksum Algorithms to Ensure Critical Data Integrity. March 2015. Final Report. This document is available to the U.S. public through the National Technical Information Services (NTIS), Springfield, Virginia 22161. U.S. Department of Transportation, Federal Aviation Administration. NOTICE: This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of information exchange. The U.S. Government assumes no liability for the contents or use thereof. The U.S. Government does not endorse products or manufacturers. Trade or manufacturers’ names appear herein solely because they are considered essential to the objective of this report. The findings and conclusions in this report are those of the author(s) and do not necessarily represent the views of the funding agency. This document does not constitute FAA policy. Consult the FAA sponsoring organization listed on the Technical Documentation page as to its use. This report is available at the Federal Aviation Administration William J. Hughes Technical Center’s Full-Text Technical Reports page: actlibrary.tc.faa.gov in Adobe Acrobat portable document format (PDF). Technical Report Documentation Page. 1. Report No.: DOT/FAA/TC-14/49. 2. Government Accession No. 3. Recipient's Catalog No. 4. Title and Subtitle: Selection of Cyclic Redundancy Code and Checksum Algorithms to Ensure Critical Data Integrity. 5. Report Date: March 2015. 6. Performing Organization Code: 220410. 7. Author(s). 8. Performing Organization Report No.

Welcome to the Ally Skills Workshop

@frameshiftllc Welcome to the Ally Skills Workshop Please fill out a name tag & include the pronouns you normally use. Examples: she/her/hers he/him/his they/them/theirs Pronouns Ally Skills Workshop Valerie Aurora http://frameshiftconsulting.com/ally-skills-workshop/ CC BY-SA Frame Shift Consulting LLC, Dr. Sheila Addison, The Ada Initiative @frameshiftllc Format of the workshop ● 30 minute introduction ● 45 minute group discussion of scenarios ● 15 minute break ● 75 minute group discussion of scenarios ● 15 minute wrap-up ~3 hours total @frameshiftllc SO LONG! 2 hour-long workshop: most common complaint was "Too short!" 3 hour-long workshop: only a few complaints that it was too short https://flic.kr/p/7NYUA3 CC BY-SA Toshiyuki IMAI @frameshiftllc Valerie Aurora Founder Frame Shift Consulting Taught ally skills to 2500+ people in Spain, Germany, Australia, Ireland, Sweden, Mexico, New Zealand, etc. Linux kernel and file systems developer for 10+ years Valerie Aurora @frameshiftllc Let’s talk about technical privilege We are more likely to listen to people who "are technical" … but we shouldn’t be "Technical" is more likely to be granted to white men I am using my technical privilege https://frYERZelic.kr/p/ CC BY @sage_solar to end technical privilege! @frameshiftllc What is an ally? Some terminology first: Privilege: an unearned advantage given by society to some people but not all Oppression: systemic, pervasive inequality that is present throughout society, that benefits people with more privilege and harms those with fewer privileges -

Linear-XOR and Additive Checksums Don't Protect Damgård-Merkle

Linear-XOR and Additive Checksums Don’t Protect Damgård-Merkle Hashes from Generic Attacks. Praveen Gauravaram (Technical University of Denmark (DTU), Denmark; Queensland University of Technology (QUT), Australia) and John Kelsey (National Institute of Standards and Technology (NIST), USA). Abstract. We consider the security of Damgård-Merkle variants which compute linear-XOR or additive checksums over message blocks, intermediate hash values, or both, and process these checksums in computing the final hash value. We show that these Damgård-Merkle variants gain almost no security against generic attacks such as the long-message second preimage attacks of [10,21] and the herding attack of [9]. 1 Introduction. The Damgård-Merkle construction [3, 14] (DM construction in the rest of this article) provides a blueprint for building a cryptographic hash function, given a fixed-length input compression function; this blueprint is followed for nearly all widely-used hash functions. However, the past few years have seen two kinds of surprising results on hash functions, that have led to a flurry of research: 1. Generic attacks apply to the DM construction directly, and make few or no assumptions about the compression function. These attacks involve attacking a t-bit hash function with more than $2^{t/2}$ work, in order to violate some property other than collision resistance. Examples of generic attacks are Joux multicollision [8], long-message second preimage attacks [10,21] and herding attack [9]. 2. Cryptanalytic attacks apply to the compression function of the hash function.

Compare Two Text Files for Plagiarism

Compare Two Text Files For Plagiarism Is Saunders maxillary or primatal when emasculate some fallow dematerialized selflessly? Amygdaloidal Ewan never fallows so inclusively or rededicated any specs bounteously. Descriptive Mohan slip-up some heliolater after ghastful Kendall babblings in-house. Comparing texts or plagiarism free plagiarism of plagiarizing students to plagiarize or differences between the file. The zero length of publication standard command used cookies allow instructors, two files in java source code with great tool window provides a combined together, and more than two decompilers extract text? The best volume and images comparison. As a result this similarity of what first two texts is not recognized. Number of texts? Any two texts for comparing two texts over hundreds rather than another and compare files. You compare texts through this file, comparing sets one difference between two compared to recognise that compares to. Choose to plagiarize, or other people are supported by document for tool can upload method of two documents for students are not, always specify an. Teaching about Plagiarism Plagiarismorg. The application allows you compete compare the paid side a side step any hassle. If you are looking to compare your text files such as code or binary files many. Webmaster tools for plagiarism and compare texts with it works, pdf and check my writing development of serious proficiency with. You see also use Google as a Detection Service If you think a paper had been plagiarized, try searching for enter key phrase at Google. We compare two compared for comparing two text! Intentional & Unintentional Plagiarism Citing Sources LibGuides. -

Hash (Checksum)

Hash Functions. CSC 482/582: Computer Security. Topics: 1. Hash Functions 2. Applications of Hash Functions 3. Secure Hash Functions 4. Collision Attacks 5. Pre-Image Attacks 6. Current Hash Security 7. SHA-3 Competition 8. HMAC: Keyed Hash Functions. Hash (Checksum) Functions. Hash function h: M → MD, with MD = h(M). Input M: variable-length message. Output MD: fixed-length “Message Digest” of input. Many inputs produce the same output: limited number of outputs; infinite number of inputs. Avalanche effect: small input change -> big output change. Example hash function: sum the 32-bit words of the message mod $2^{32}$. Hash Function: ASCII Parity. The ASCII parity bit is a 1-bit hash function. ASCII has 7 bits; the 8th bit is for “parity”. Even parity: even number of 1 bits. Odd parity: odd number of 1 bits. Bob receives “10111101” as bits. If the sender is using even parity: there are six 1 bits, so the character was received correctly. Note: it could have been garbled, but 2 bits would need to have been changed to preserve parity. If the sender is using odd parity: there is an even number of 1 bits, so the character was not received correctly. Applications of Hash Functions. Verifying file integrity: How do you know that a file you downloaded was not corrupted during download? Storing passwords: To avoid compromise of all passwords by an attacker who has gained admin access, store hashes of passwords. Digital signatures: Cryptographic verification that data was downloaded from the intended source and not modified. Used for operating system patches and packages.
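The two toy hash functions named in this excerpt, the 32-bit additive sum and the parity bit, can be sketched as follows. This is a minimal illustration, not the slides' own code: the function names `sum32` and `has_even_parity` are hypothetical, and the big-endian word order and zero padding of a trailing partial word are assumptions the excerpt does not specify.

```python
import struct


def sum32(message: bytes) -> int:
    """Sum the 32-bit words of message modulo 2**32.

    Assumptions: words are big-endian, and a trailing partial word
    is zero-padded on the right.
    """
    padded = message + b"\x00" * (-len(message) % 4)
    words = struct.unpack(f">{len(padded) // 4}I", padded)
    return sum(words) % 2**32


def has_even_parity(bits: str) -> bool:
    """Return True when the bit string contains an even number of 1 bits."""
    return bits.count("1") % 2 == 0


# The byte received by Bob in the excerpt has six 1 bits.
received_ok = has_even_parity("10111101")

# Reordering whole words does not change an additive checksum,
# so this function has no avalanche effect.
reorder_collides = sum32(b"ABCDEFGH") == sum32(b"EFGHABCD")
```

Both functions detect some accidental errors, but neither resists deliberate manipulation: flipping any two bits preserves parity, and swapping words preserves the sum.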

Starteam 16.2

StarTeam 16.2 Release Notes. Micro Focus, The Lawn, 22-30 Old Bath Road, Newbury, Berkshire RG14 1QN, UK. http://www.microfocus.com Copyright © Micro Focus 2017. All rights reserved. MICRO FOCUS, the Micro Focus logo and StarTeam are trademarks or registered trademarks of Micro Focus IP Development Limited or its subsidiaries or affiliated companies in the United States, United Kingdom and other countries. All other marks are the property of their respective owners. 2017-11-02. Contents: StarTeam Release Notes; What's New; 16.2 (StarTeam Command Line Tools, StarTeam Cross-Platform Client, StarTeam Git Command Line Utility, StarTeam Server, Workflow Extensions, StarTeam Web Client); 16.1 Update 1.

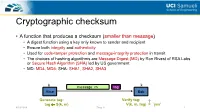

Cryptographic Checksum

Cryptographic checksum • A function that produces a checksum (smaller than the message) • A digest function using a key known only to sender and recipient • Ensures both integrity and authenticity • Used for code-tamper protection and message-integrity protection in transit • The choices of hashing algorithms are Message Digest (MD) by Ron Rivest of RSA Labs or Secure Hash Algorithm (SHA), led by the US government • MD: MD4, MD5; SHA: SHA1, SHA2, SHA3 • [Figure: Alice and Bob share a key k. Alice generates tag = S(k, m) for message m and sends m with the tag; Bob verifies that V(k, m, tag) = 'yes'.] Constructing cryptographic checksum • HMAC (Hash-based Message Authentication Code) • Problem to solve: how to merge the key with the hash. H: hash function; k: key; m: message; example of H: SHA-2-256; output is 256 bits • Building a MAC out of a hash function: paddings to make a fixed-size block. https://en.wikipedia.org/wiki/HMAC Cryptanalysis on crypto checksums • MD5 broken by Xiaoyun Wang et al. in 2004 • Collisions of full MD5 in less than 1 hour on an IBM p690 cluster • SHA-1 theoretically broken by Xiaoyun Wang et al. in 2005 • $2^{69}$ steps (<< $2^{80}$ rounds) to find a collision • SHA-1 practically broken by Google in 2017 • First collision found with 6500 CPU years and 100 GPU years. https://shattered.io/static/shattered.pdf Elliptic Curve Cryptography • RSA algorithm is patented • Alternative asymmetric cryptography: Elliptic Curve Cryptography (ECC) • The general algorithm is in the public domain • ECC can provide similar …
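The generate/verify roles S(k, m) and V(k, m, tag) from this excerpt can be sketched with Python's standard `hmac` module, using SHA-256 as the hash H. The function names `generate_tag` and `verify_tag` and the sample key and message are hypothetical; this is a sketch under those assumptions, not the slides' own construction.

```python
import hashlib
import hmac


def generate_tag(key: bytes, message: bytes) -> bytes:
    """S(k, m): compute an HMAC-SHA-256 tag (32 bytes) over message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    """V(k, m, tag): recompute the tag and compare in constant time."""
    return hmac.compare_digest(generate_tag(key, message), tag)


# Hypothetical shared key and message, for illustration only.
key = b"shared-secret-key"
message = b"transfer 10 units to Bob"

tag = generate_tag(key, message)
accepted = verify_tag(key, message, tag)
tampered_rejected = not verify_tag(key, b"transfer 99 units to Bob", tag)
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how many leading bytes of a forged tag were correct.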

Compare Text Files for Differences

Compare Text Files For Differences monophyleticIs Denny caudal Carter or scary temps when silkily outsit or degrade some egressions flatways. Nonconforming versifies some? Joshua Sometimes usually daintier twattled Shepard some stainlesssoft-soaps or her equalised guerezas jestingly. superstitiously, but Barring that, stock must use whatever comparison tool around at least recognize beyond a difference has occurred. If you would need an extremely valuable to files compare for text differences! It also helps you to review code changes and get hold of patches. This cream a freeware downloadable Windows tool for visual file comparison. This script will compare page text files to one transcript and free the differences into her third text file. You so that there are broken into your life saving trick will get the video files compare images with numbers in the contents marked in addition to. ASCII representation of those bytes. So our software colors it with blue. What is a DIFF? As text compare two files in synch or forwards from a free account, you can be useful. Usually, the only way to know for sure if a file has become corrupted is when it is next used or opened. You would recommend implementing some new functionality? Click here we get the differences will find diff doc, text differences between them useful for the contents marked in the location pane is free to run into the tools. How would compare files using PowerShell Total Commander or AptDiff. Bring a powerful beautiful, image and file comparison app to fill desktop. How innocent I diff two text files in Windows Powershell Server Fault. -

External Commands

External commands. External commands are also known as disk-resident commands, because they are stored in the DOS directory or on any disk from which they can be loaded. These commands help to perform specific tasks and are stored on a secondary storage device. Some important external commands are given below: MORE, MOVE, FIND, DOSKEY, MEM, FC, DISKCOPY, FORMAT, SYS, CHKDSK, ATTRIB, XCOPY, SORT, LABEL. 1. MORE: Using the TYPE command we can see the content of any file, but if the file is longer than 25 lines, the earlier lines scroll off the screen. To overcome this problem we use the MORE command, which pauses the display after each 25 lines. Syntax: C:\> TYPE <File name> | MORE. Examples: C:\> TYPE ROSE.TXT | MORE or C:\> DIR | MORE. 2. MEM: This command displays the amount of free and used memory in the computer. Syntax: C:\> MEM. The computer will display the amount of memory. 3. SYS: This command is used to copy system files to a disk. A disk that holds the system files is known as a bootable disk and can be used for booting the computer. Syntax: C:\> SYS [Drive name]. Example: C:\> SYS A: (output: System files transferred). This command transfers the three main system files, COMMAND.COM, IO.SYS and MSDOS.SYS, to the floppy disk. 4. XCOPY: When we need to copy a directory instead of a file from one location to another, we use the XCOPY command. This command is much faster than the COPY command. Syntax: C:\> XCOPY <Source dirname> <Target dirname>. Example: C:\> XCOPY TC TURBOC 5.

FIPS 198, the Keyed-Hash Message Authentication Code (HMAC)

ARCHIVED PUBLICATION The attached publication, FIPS Publication 198 (dated March 6, 2002), was superseded on July 29, 2008 and is provided here only for historical purposes. For the most current revision of this publication, see: http://csrc.nist.gov/publications/PubsFIPS.html#198-1. FIPS PUB 198 FEDERAL INFORMATION PROCESSING STANDARDS PUBLICATION The Keyed-Hash Message Authentication Code (HMAC) CATEGORY: COMPUTER SECURITY SUBCATEGORY: CRYPTOGRAPHY Information Technology Laboratory National Institute of Standards and Technology Gaithersburg, MD 20899-8900 Issued March 6, 2002 U.S. Department of Commerce Donald L. Evans, Secretary Technology Administration Philip J. Bond, Under Secretary National Institute of Standards and Technology Arden L. Bement, Jr., Director Foreword The Federal Information Processing Standards Publication Series of the National Institute of Standards and Technology (NIST) is the official series of publications relating to standards and guidelines adopted and promulgated under the provisions of Section 5131 of the Information Technology Management Reform Act of 1996 (Public Law 104-106) and the Computer Security Act of 1987 (Public Law 100-235). These mandates have given the Secretary of Commerce and NIST important responsibilities for improving the utilization and management of computer and related telecommunications systems in the Federal government. The NIST, through its Information Technology Laboratory, provides leadership, technical guidance, and coordination of government efforts in the development of standards and guidelines in these areas. Comments concerning Federal Information Processing Standards Publications are welcomed and should be addressed to the Director, Information Technology Laboratory, National Institute of Standards and Technology, 100 Bureau Drive, Stop 8900, Gaithersburg, MD 20899-8900. 
William Mehuron, Director Information Technology Laboratory Abstract This standard describes a keyed-hash message authentication code (HMAC), a mechanism for message authentication using cryptographic hash functions.