Illusions of Liveness: Producer as Composer

Recommended publications

-

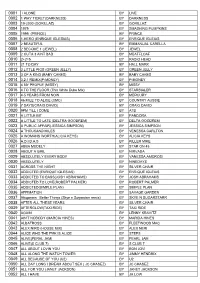

DJ Playlist.Djp

0001 I ALONE BY LIVE 0002 1 WAY TICKET(DARKNESS) BY DARKNESS 0003 19-2000 (GORILLAZ) BY GORILLAZ 0004 1979 BY SMASHING PUMPKINS 0005 1999 (PRINCE) BY PRINCE 0006 1-HERO (ENRIQUE IGLESIAS) BY ENRIQUE IGLEIAS 0007 2 BEAUTIFUL BY EMMANUAL CARELLA 0008 2 BECOME 1 (JEWEL) BY JEWEL 0009 2 OUTA 3 AINT BAD BY MEATFLOAF 0010 2+2=5 BY RADIO HEAD 0011 21 TO DAY BY HALL MARK 0012 3 LITTLE PIGS (GREEN JELLY) BY GREEN JELLY 0013 3 OF A KIND (BABY CAKES) BY BABY CAKES 0014 3,2,1 REMIX(P-MONEY) BY P-MONEY 0015 4 MY PEOPLE (MISSY) BY MISSY 0016 4 TO THE FLOOR (Thin White Duke Mix) BY STARSIALER 0017 4-5 YEARS FROM NOW BY MERCURY 0018 46 MILE TO ALICE (CMC) BY COUNTRY AUSSIE 0019 7 DAYS(CRAIG DAVID) BY CRAIG DAVID 0020 9PM TILL I COME BY ATB 0021 A LITTLE BIT BY PANDORA 0022 A LITTLE TO LATE (DELTRA GOODREM) BY DELTA GOODREM 0023 A PUBLIC AFFAIR(JESSICA SIMPSON) BY JESSICA SIMPSON 0024 A THOUSAND MILES BY VENESSA CARLTON 0025 A WOMANS WORTH(ALICIA KEYS) BY ALICIA KEYS 0026 A.D.I.D.A.S BY KILLER MIKE 0027 ABBA MEDELY BY STAR ON 45 0028 ABOUT A GIRL BY NIRVADA 0029 ABSOLUTELY EVERY BODY BY VANESSA AMOROSI 0030 ABSOLUTELY BY NINEDAYS 0031 ACROSS THE NIGHT BY SILVER CHAIR 0032 ADDICTED (ENRIQUE IGLESIAS) BY ENRIQUE IGLEIAS 0033 ADDICTED TO BASS(JOSH ABRAHAMS) BY JOSH ABRAHAMS 0034 ADDICTED TO LOVE(ROBERT PALMER) BY ROBERT PALMER 0035 ADDICTED(SIMPLE PLAN) BY SIMPLE PLAN 0036 AFFIMATION BY SAVAGE GARDEN 0037 Afropeans Better Things (Skye n Sugarstarr remix) BY SKYE N SUGARSTARR 0038 AFTER ALL THESE YEARS BY SILVER CHAIR 0039 AFTERGLOW(TAXI RIDE) BY TAXI RIDE -

Song List by Artist

Song List by Artist Artist Song Name 10,000 MANIACS BECAUSE THE NIGHT EAT FOR TWO WHAT'S THE MATTER HERE 10CC RUBBER BULLETS THINGS WE DO FOR LOVE 112 ANYWHERE [FEAT LIL'Z] CUPID PEACHES AND CREAM 112 FEAT SUPER CAT NA NA NA NA 112 FEAT. BEANIE SIGEL,LUDACRIS DANCE WITH ME/PEACHES AND CREAM 12TH MAN MARVELLOUS [FEAT MCG HAMMER] 1927 COMPULSORY HERO 2 BROTHERS ON THE 4TH FLOOR COME TAKE MY HAND NEVER ALONE 2 COW BOYS EVERYBODY GONFI GONE 2 HEADS OUT OF THE CITY 2 LIVE CREW LING ME SO HORNY WIGGLE IT 2 PAC ALL ABOUT U BRENDA’S GOT A BABY Page 1 of 366 Song List by Artist Artist Song Name HEARTZ OF MEN HOW LONG WILL THEY MOURN TO ME? I AIN’T MAD AT CHA PICTURE ME ROLLIN’ TO LIVE & DIE IN L.A. TOSS IT UP TROUBLESOME 96’ 2 UNLIMITED LET THE BEAT CONTROL YOUR BODY LETS GET READY TO RUMBLE REMIX NO LIMIT TRIBAL DANCE 2PAC DO FOR LOVE HOW DO YOU WANT IT KEEP YA HEAD UP OLD SCHOOL SMILE [AND SCARFACE] THUGZ MANSION 3 AMIGOS 25 MILES 2001 3 DOORS DOWN BE LIKE THAT WHEN IM GONE 3 JAYS FEELING IT TOO LOVE CRAZY EXTENDED VOCAL MIX 30 SECONDS TO MARS FROM YESTERDAY 33HZ (HONEY PLEASER/BASS TONE) 38 SPECIAL BACK TO PARADISE BACK WHERE YOU BELONG Page 2 of 366 Song List by Artist Artist Song Name BOYS ARE BACK IN TOWN, THE CAUGHT UP IN YOU HOLD ON LOOSELY IF I'D BEEN THE ONE LIKE NO OTHER NIGHT LOVE DON'T COME EASY SECOND CHANCE TEACHER TEACHER YOU KEEP RUNNIN' AWAY 4 STRINGS TAKE ME AWAY 88 4:00 PM SUKIYAKI 411 DUMB ON MY KNEES [FEAT GHOSTFACE KILLAH] 50 CENT 21 QUESTIONS [FEAT NATE DOGG] A BALTIMORE LOVE THING BUILD YOU UP CANDY SHOP (INSTRUMENTAL) CANDY SHOP (VIDEO) CANDY SHOP [FEAT OLIVIA] GET IN MY CAR GOD GAVE ME STYLE GUNZ COME OUT I DON’T NEED ‘EM I’M SUPPOSED TO DIE TONIGHT IF I CAN’T IN DA CLUB IN MY HOOD JUST A LIL BIT MY TOY SOLDIER ON FIRE Page 3 of 366 Song List by Artist Artist Song Name OUTTA CONTROL PIGGY BANK PLACES TO GO POSITION OF POWER RYDER MUSIC SKI MASK WAY SO AMAZING THIS IS 50 WANKSTA 50 CENT FEAT. -

WPU Remembers 9/11

* WEEKLY MONDAY, SEPTEMBER 16, 2002 William Paterson University • Volume 69 No. 2 FREE Plans for Student Center expansion underway ByjimSchofield the problems. Finally, some News Editor aspects of the plan may change due to building codes. ""This is another step along Urinyi said that distribu- the planned and timely growth, tion of offices in the renovat- [of the University]" said ed Student Center is not com- President Speert at the opening plete. This will likely be a of the new Valley Road matter among the various Building, and he meant-it. Plans departments that will be for a $40 million expansion of using the space, including the Student Center are well Hospitality Services, the underway with the intention of Office of Campus Activities breaking ground next summer and Student Leadership, the . according to John Urinyi, Women's Center, the Student Director of Capital- Planning, Government Association and 9/11 memorial outside Student Center photo by Elizabeth Fowler Design and Construction. all affiliated Student According to Urinyi, the Organization and, possibly, expansion will include a'near the office of the Dean of complete renovation of the Student Development Dr. WPU remembers 9/11 existing Student Center facili- John Mar tone- - ties; an addition to the Zanfino The existing Machuga Plaza side of the Student Center Student Center is over 30 By Lori Michael an original poem called September 11 affected their and a new building containing years old. Urinyi, along with The Beacon "Prayer." Miryam Wahrman, lives. The WPU Gospel Choir rooms, and will offer 60,000 'Afimihistration aha stnance • square feet of new space for Steve Bolyai and Tim montage of pictures greeted son, Jeremy, was one of the that was both somber and student organization offices, the Fanning, visited many other students, staff and faculty as passengers who tried to take uplifting. -

Turn up the Radio (Explicit)

(Don't) Give Hate A Chance - Jamiroquai 5 Seconds Of Summer - Girls Talk Boys (Karaoke) (Explicit) Icona Pop - I Love It 5 Seconds Of Summer - Hey Everybody! (Explicit) Madonna - Turn Up The Radio 5 Seconds Of Summer - She Looks So Perfect (Explicit) Nicki Minaj - Pound The Alarm 5 Seconds Of Summer - She's Kinda Hot (Explicit) Rita Ora ft Tinie Tempah - RIP 5 Years Time - Noah and The Whale (Explicit) Wiley ft Ms D - Heatwave 6 Of 1 Thing - Craig David (Let me be your) teddy bear - Elvis Presley 7 Things - Miley Cyrus 1 2 Step - Ciara 99 Luft Balloons - Nena 1 plus 1 - beyonce 99 Souls ft Destiny’s Child & Brandy - The Girl Is Mine 1000 Stars - Natalie Bassingthwaighte 99 Times - Kate Voegele 11. HAIM - Want You Back a better woman - beccy cole 12. Demi Lovato - Sorry Not Sorry a boy named sue - Johnny Cash 13 - Suspicious Minds - Dwight Yoakam A Great Big World ft Christina Aguilera - Say Something 13. Macklemore ft Skylar Grey - Glorious A Hard Day's Night - The Beatles 1973 - James Blunt A Little Bit More - 911 (Karaoke) 1979 - good charlotte A Little Further North - graeme conners 1983 - neon trees A Moment Like This - Kelly Clarkson (Karaoke) 1999 - Prince a pub with no beer - slim dusty.mpg 2 Hearts - Kylie a public affair - jessica simpson 20 Good Reasons - Thirsty Merc.mpg a teenager in love - dion and the belmonts 2012 - Jay Sean ft Miniaj A Thousand Miles - Vanessa Carlton (Karaoke) 21 Guns - Greenday a thousand years - christina perri 21st Century Breakdown - Green Day A Trak ft Jamie Lidell - We All Fall Down 21st century -

Song List by Song

Song List by Song Song Name Artist (ABSOLUTELY) STORY OF A GIRL NINE DAYS (DON’T) GIVE HATE A CHANCE JAMIROQUAI (HONEY PLEASER/BASS TONE) 33HZ (SHE’S GOT) SKILLZ ALL‐4‐ONE 1 2 3 MARIA RED HARDIN 1 4 U SUPERHEIST 1, 2 STEP CIARA FEAT MISSY ELLIOTT 1, 2, 3, 4 GLORIA ESTEFAN AND MIAMI SOUND MACHINE 10,000 PROMISES BACKSTREET BOYS 100 MILES AND RUNNING N.W.A. 100 YEARS FIVE FOR FIGHTING 100% PURE LOVE CRYSTAL WATERS 1234 REMIX COOLIO 13 STEPS TO NOWHERE PANTERA 138 TREK DJ ZINC 18 TIL I DIE BRYAN ADAMS 19 SOMETHIN' Page 1 of 525 Song List by Song Song Name Artist MARK WILLS 19'2000 GORILLAZ 1979 SMASHING PUMPKINS 1985 BOWLING FOR SOUP 1999 PRINCE 1ST OF THA MONTH BONE THUGS N HARMONY 2 LEGIT 2 QUIT MC HAMMER 2 OUT OF 3 AINT BAD MEATLOAF 2 TIMES ANN LEE 20 GOOD REASONS THIRSTY MERC 21 QUESTIONS [FEAT NATE DOGG] 50 CENT 21 SECONDS SO SOLID CREW 21ST CENTURY SCHIZOID MAN KING CRIMSON 22 STEPS DAMIEN LEITH 24 INSANE CLOWN POSSE 24 7 KEVON EDMONDS 24 HOURS ORGINAL MIX AGENT SUMO Page 2 of 525 Song List by Song Song Name Artist 2468 MOTORWAY TOM ROBINSON BAND 25 MILES 2001 3 AMIGOS 25 OR 6 TO 4 CHICAGO 2ND HAND PITCHSHIFTER 2S COMPANY CHARLTON HILL 3 IS FAMILY DANA DAWSON 3 LIBRAS A PERFECT CIRCLE 3 LITTLE PIGS GREEN JELLO 3,2,1 REMIX P MONEY 3:00 AM MATCHBOX 20 37MM AFI 3AM MATCHBOX 20 3AM ETERNAL KLF 4 EVER THE VERONICA’S 4 IN THE MORNING GWEN STEFANI 4 MY PEOPLE MISSY ELLIOTTT 4 SEASONS OF LONELINESS Page 3 of 525 Song List by Song Song Name Artist BOYZ II MEN 40 MILES OF BAD ROAD TWANGS 48 CRASH SUZY QUATRO 4EVER VERONICAS 5 YEARS FROM NOW MERCURY 4 51ST STATE, THE PRE SHRUNK 5678 STEPS 5IVE MEGAMIX 5IVE 5TH SYMPHONY 1ST MOVEMENT BEETHOVEN 60 MILES AN HOUR NEW ORDER 604 ANTHRAX 68 GUNS ALARM 7 PRINCE 7 DAYS CRAIG DAVID 7 YEARS AND 50 DAYS CASCADA 70'S LOVE GROOVE JANET JACKSON 72 HOUR DAZE TAXIRIDE Page 4 of 525 Song List by Song Song Name Artist 7654321 SURPRISE 77% HERD 7TH SYMPHONY BEETHOVEN 8 MILES AND RUNNIN’ FREEWAY FEAT. -

Strength and Empowerment in the Young Adult Fiction Genre

Central Washington University ScholarWorks@CWU Undergraduate Honors Theses Student Scholarship and Creative Works Spring 2021 Night of Awakening: Strength and Empowerment in the Young Adult Fiction Genre Shelby Ann Davis Central Washington University, [email protected] Follow this and additional works at: https://digitalcommons.cwu.edu/undergrad_hontheses Part of the Children's and Young Adult Literature Commons, Fiction Commons, Fine Arts Commons, Modern Literature Commons, Reading and Language Commons, and the Women's Studies Commons Recommended Citation Davis, Shelby Ann, "Night of Awakening: Strength and Empowerment in the Young Adult Fiction Genre" (2021). Undergraduate Honors Theses. 26. https://digitalcommons.cwu.edu/undergrad_hontheses/26 This Thesis is brought to you for free and open access by the Student Scholarship and Creative Works at ScholarWorks@CWU. It has been accepted for inclusion in Undergraduate Honors Theses by an authorized administrator of ScholarWorks@CWU. For more information, please contact [email protected]. Night of Awakening: Strength and Empowerment in the Young Adult Fiction Genre Shelby Ann Davis Senior Thesis Submitted in Partial Fulfillment of the Requirements for Graduation from The William O. Douglas Honors College Central Washington University April, 2021 Accepted by: Sarah Sillin 5/3/2021 Thesis Committee Chair (Sarah Sillin, Assistant Professor, English) Date M. O’Brien 5/4/2021 Thesis Committee Member (M. O'Brien, Assistant Professor, English) Date 5/7/2021 Director, William O. Douglas Honors College Date Please note: A signature was redacted from this page due to security concerns. ABSTRACT The Young Adult Fantasy genre is often written off as a useless, or immature form of writing. -

Jukebox Song List

Jukebox Song List 100% HITS 33 Track Title Artist BE YOURSELF MORCHEEBA BLACK COFFEE ALL SAINTS CHAINED TO YOU SAVAGE GARDEN DANCE WITH ME DEBELAH MORGAN EVERY TIME YOU NEED ME FRAGMA FEAT MARIA RUBIA EVERYTHING YOU NEED MADISON AVENUE FEEL GOOD MADASUN FILL ME IN CRAIG DAVID HOLLER SPICE GIRLS IF THAT WERE ME MELANIE C IRRESISTIBLE THE CORRS KIDS ROBBIE WILLIAMS & KYLIE MINOGUE ON A NIGHT LIKE THIS KYLIE MINOGUE PLEASE DON’T TURN ME ON ARTFUL DODGER SHAPE OF MY HEART BACK STREET BOYS STRONGER BRITNEY SPEARS THE ITCH VITAMIN C THIS I PROMISE YOU NSYNC WALK OF LIFE BILLIE PIPER YELLOW COLDPLAY 100% HITS THE BEST OF & SUMMER 2001 (DISC1) Track Title Artist ALL FOR YOU JANET JACKSON FOLLOW ME UNCLE KRACKER GRAVITY THE SUPER JESUS I MET HER IN MIAMI LIBERTY CITY IMITATION OF LIFE REM ITS RAINING MEN GERI HALLIWELL KIDS ROBBIE WILLIAMS & KYLIE ME MYSELF & I SCANDALUS ONE MORE TIME DAFT PUNK OVERLOAD SUGARBABES POP NSYNC REMINISCING MADISON AVENUE SHUTTER JOE TAKE ME AWAY LASH WASSUP DA MUTTZ WHAT TOOK YOU SO LONG EMMA BUNTON WHEN ITS OVER SUGAR RAY WHOLE AGAIN ATOMIC KITTEN YOUR DISCO NEEDS YOU KYLIE MINOUGE 100% HITS THE BEST OF & SUMMER 2001 (DISC2) Track Title Artist 19-2000 GORILLAZ AMAZING ALEX LLOYD BETTE DAVIS EYES GWYNETH PALTROW BETTER MAN ROBBIE WILLIAMS CHASE THE SUN PLANET FUNK Page 1 of 97 DO YOU REALLY LIKE IT DJ PIED PIPER & THE MASTER OF CEREMONIES DON’T MESS WITH THE RADIO NIVEA FLAWLESS THE ONES GIVE ME A REASON THE CORRS HOLLER SPICE GIRLS I NEED SOMEBODY BARDOT JUST THE THING PAUL MAC FEAT PETA MORRIS ME MYSELF & I JIVE