Image Features

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Snakes, Shapes, and Gradient Vector Flow

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 7, NO. 3, MARCH 1998 359 Snakes, Shapes, and Gradient Vector Flow Chenyang Xu, Student Member, IEEE, and Jerry L. Prince, Senior Member, IEEE Abstract—Snakes, or active contours, are used extensively in There are two key difficulties with parametric active contour computer vision and image processing applications, particularly algorithms. First, the initial contour must, in general, be to locate object boundaries. Problems associated with initializa- close to the true boundary or else it will likely converge tion and poor convergence to boundary concavities, however, have limited their utility. This paper presents a new external force to the wrong result. Several methods have been proposed to for active contours, largely solving both problems. This external address this problem including multiresolution methods [11], force, which we call gradient vector flow (GVF), is computed pressure forces [10], and distance potentials [12]. The basic as a diffusion of the gradient vectors of a gray-level or binary idea is to increase the capture range of the external force edge map derived from the image. It differs fundamentally from fields and to guide the contour toward the desired boundary. traditional snake external forces in that it cannot be written as the negative gradient of a potential function, and the corresponding The second problem is that active contours have difficulties snake is formulated directly from a force balance condition rather progressing into boundary concavities [13], [14]. There is no than a variational formulation. Using several two-dimensional satisfactory solution to this problem, although pressure forces (2-D) examples and one three-dimensional (3-D) example, we [10], control points [13], domain-adaptivity [15], directional show that GVF has a large capture range and is able to move snakes into boundary concavities. -

Edge Detection of an Image Based on Extended Difference of Gaussian

American Journal of Computer Science and Technology 2019; 2(3): 35-47 http://www.sciencepublishinggroup.com/j/ajcst doi: 10.11648/j.ajcst.20190203.11 ISSN: 2640-0111 (Print); ISSN: 2640-012X (Online) Edge Detection of an Image Based on Extended Difference of Gaussian Hameda Abd El-Fattah El-Sennary1, *, Mohamed Eid Hussien1, Abd El-Mgeid Amin Ali2 1Faculty of Science, Aswan University, Aswan, Egypt 2Faculty of Computers and Information, Minia University, Minia, Egypt Email address: *Corresponding author To cite this article: Hameda Abd El-Fattah El-Sennary, Abd El-Mgeid Amin Ali, Mohamed Eid Hussien. Edge Detection of an Image Based on Extended Difference of Gaussian. American Journal of Computer Science and Technology. Vol. 2, No. 3, 2019, pp. 35-47. doi: 10.11648/j.ajcst.20190203.11 Received: November 24, 2019; Accepted: December 9, 2019; Published: December 20, 2019 Abstract: Edge detection includes a variety of mathematical methods that aim at identifying points in a digital image at which the image brightness changes sharply or, more formally, has discontinuities. The points at which image brightness changes sharply are typically organized into a set of curved line segments termed edges. The same problem of finding discontinuities in one-dimensional signals is known as step detection and the problem of finding signal discontinuities over time is known as change detection. Edge detection is a fundamental tool in image processing, machine vision and computer vision, particularly in the areas of feature detection and feature extraction. It's also the most important parts of image processing, especially in determining the image quality. -

Fast Image Registration Using Pyramid Edge Images

Fast Image Registration Using Pyramid Edge Images Kee-Baek Kim, Jong-Su Kim, Sangkeun Lee, and Jong-Soo Choi* The Graduate School of Advanced Imaging Science, Multimedia and Film, Chung-Ang University, Seoul, Korea(R.O.K) *Corresponding author’s E-mail address: [email protected] Abstract: Image registration has been widely used in many image processing-related works. However, the exiting researches have some problems: first, it is difficult to find the accurate information such as translation, rotation, and scaling factors between images; and it requires high computational cost. To resolve these problems, this work proposes a Fourier-based image registration using pyramid edge images and a simple line fitting. Main advantages of the proposed approach are that it can compute the accurate information at sub-pixel precision and can be carried out fast for image registration. Experimental results show that the proposed scheme is more efficient than other baseline algorithms particularly for the large images such as aerial images. Therefore, the proposed algorithm can be used as a useful tool for image registration that requires high efficiency in many fields including GIS, MRI, CT, image mosaicing, and weather forecasting. Keywords: Image registration; FFT; Pyramid image; Aerial image; Canny operation; Image mosaicing. registration information as image size increases [3]. 1. Introduction To solve these problems, this work employs a pyramid-based image decomposition scheme. Image registration is a basic task in image Specifically, first, we use the Gaussian filter to processing to combine two or more images that are remove the noise because it causes many undesired partially overlapped. -

3D Object Modeling and Recognition Using Local Affine-Invariant Image Descriptors and Multi-View Spatial Constraints

3D Object Modeling and Recognition Using Local Affine-Invariant Image Descriptors and Multi-View Spatial Constraints Fred Rothganger ([email protected]) Svetlana Lazebnik ([email protected]) Department of Computer Science and Beckman Institute University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA Cordelia Schmid ([email protected]) INRIA Rhone-Alpesˆ 665, Avenue de l’Europe, 38330 Montbonnot, France Jean Ponce ([email protected]) Department of Computer Science and Beckman Institute University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA Abstract. This article introduces a novel representation for three-dimensional (3D) objects in terms of local affine-invariant descriptors of their images and the spatial relationships between the corresponding surface patches. Geometric constraints associated with different views of the same patches under affine projection are combined with a normalized representation of their appearance to guide matching and reconstruction, allowing the acquisition of true 3D affine and Euclidean models from multiple unregistered images, as well as their recognition in photographs taken from arbitrary viewpoints. The proposed approach does not require a separate segmentation stage, and it is applicable to highly cluttered scenes. Modeling and recognition results are presented. Keywords: Three-dimensional object recognition, image-based modeling, affine-invariant image descriptors, multi- view geometry. 1. Introduction This article addresses the problem of recognizing three-dimensional (3D) objects -

Good Colour Maps: How to Design Them

Good Colour Maps: How to Design Them Peter Kovesi Centre for Exploration Targeting School of Earth and Environment The University of Western Australia Crawley, Western Australia, 6009 [email protected] September 2015 Abstract Many colour maps provided by vendors have highly uneven percep- tual contrast over their range. It is not uncommon for colour maps to have perceptual flat spots that can hide a feature as large as one tenth of the total data range. Colour maps may also have perceptual discon- tinuities that induce the appearance of false features. Previous work in the design of perceptually uniform colour maps has mostly failed to recognise that CIELAB space is only designed to be perceptually uniform at very low spatial frequencies. The most important factor in designing a colour map is to ensure that the magnitude of the incre- mental change in perceptual lightness of the colours is uniform. The specific requirements for linear, diverging, rainbow and cyclic colour maps are developed in detail. To support this work two test images for evaluating colour maps are presented. The use of colour maps in combination with relief shading is considered and the conditions under which colour can enhance or disrupt relief shading are identified. Fi- nally, a set of new basis colours for the construction of ternary images arXiv:1509.03700v1 [cs.GR] 12 Sep 2015 are presented. Unlike the RGB primaries these basis colours produce images whereby the salience of structures are consistent irrespective of the assignment of basis colours to data channels. 1 Introduction A colour map can be thought of as a line or curve drawn through a three dimensional colour space. -

Scale Invariant Feature Transform - Scholarpedia 2015-04-21 15:04

Scale Invariant Feature Transform - Scholarpedia 2015-04-21 15:04 Scale Invariant Feature Transform +11 Recommend this on Google Tony Lindeberg (2012), Scholarpedia, 7(5):10491. doi:10.4249/scholarpedia.10491 revision #149777 [link to/cite this article] Prof. Tony Lindeberg, KTH Royal Institute of Technology, Stockholm, Sweden Scale Invariant Feature Transform (SIFT) is an image descriptor for image-based matching and recognition developed by David Lowe (1999, 2004). This descriptor as well as related image descriptors are used for a large number of purposes in computer vision related to point matching between different views of a 3-D scene and view-based object recognition. The SIFT descriptor is invariant to translations, rotations and scaling transformations in the image domain and robust to moderate perspective transformations and illumination variations. Experimentally, the SIFT descriptor has been proven to be very useful in practice for image matching and object recognition under real-world conditions. In its original formulation, the SIFT descriptor comprised a method for detecting interest points from a grey- level image at which statistics of local gradient directions of image intensities were accumulated to give a summarizing description of the local image structures in a local neighbourhood around each interest point, with the intention that this descriptor should be used for matching corresponding interest points between different images. Later, the SIFT descriptor has also been applied at dense grids (dense SIFT) which have been shown to lead to better performance for tasks such as object categorization, texture classification, image alignment and biometrics . The SIFT descriptor has also been extended from grey-level to colour images and from 2-D spatial images to 2+1-D spatio-temporal video. -

Sobel Operator and Canny Edge Detector ECE 480 Fall 2013 Team 4 Daniel Kim

P a g e | 1 Sobel Operator and Canny Edge Detector ECE 480 Fall 2013 Team 4 Daniel Kim Executive Summary In digital image processing (DIP), edge detection is an important subject matter. There are numerous edge detection methods such as Prewitt, Kirsch, and Robert cross. Two different types of edge detectors are explored and analyzed in this paper: Sobel operator and Canny edge detector. The performance of two edge detection methods are then compared with several input images. Keywords : Digital Image Processing, Edge Detection, Sobel Operator, Canny Edge Detector, Computer Vision, OpenCV P a g e | 2 Table of Contents 1| Introduction……………………………………………………………………………. 3 2|Sobel Operator 2.1 Background……………………………………………………………………..3 2.2 Example………………………………………………………………………...4 3|Canny Edge Detector 3.1 Background…………………………………………………………………….5 3.2 Example………………………………………………………………………...5 4|Comparison of Sobel and Canny 4.1 Discussion………………………………………………………………………6 4.2 Example………………………………………………………………………...7 5|Conclusion………………………………………………………………………............7 6|Appendix 6.1 OpenCV Sobel tutorial code…............................................................................8 6.2 OpenCV Canny tutorial code…………..…………………………....................9 7|Reference………………………………………………………………………............10 P a g e | 3 1) Introduction Edge detection is a crucial step in object recognition. It is a process of finding sharp discontinuities in an image. The discontinuities are abrupt changes in pixel intensity which characterize boundaries of objects in a scene. In short, the goal of edge detection is to produce a line drawing of the input image. The extracted features are then used by computer vision algorithms, e.g. recognition and tracking. A classical method of edge detection involves the use of operators, a two dimensional filter. An edge in an image occurs when the gradient is greatest. -

View Matching with Blob Features

View Matching with Blob Features Per-Erik Forssen´ and Anders Moe Computer Vision Laboratory Department of Electrical Engineering Linkoping¨ University, Sweden Abstract mographic transformation that is not part of the affine trans- formation. This means that our results are relevant for both This paper introduces a new region based feature for ob- wide baseline matching and 3D object recognition. ject recognition and image matching. In contrast to many A somewhat related approach to region based matching other region based features, this one makes use of colour is presented in [1], where tangents to regions are used to de- in the feature extraction stage. We perform experiments on fine linear constraints on the homography transformation, the repeatability rate of the features across scale and incli- which can then be found through linear programming. The nation angle changes, and show that avoiding to merge re- connection is that a line conic describes the set of all tan- gions connected by only a few pixels improves the repeata- gents to a region. By matching conics, we thus implicitly bility. Finally we introduce two voting schemes that allow us match the tangents of the ellipse-approximated regions. to find correspondences automatically, and compare them with respect to the number of valid correspondences they 2. Blob features give, and their inlier ratios. We will make use of blob features extracted using a clus- tering pyramid built using robust estimation in local image regions [2]. Each extracted blob is represented by its aver- 1. Introduction age colour pk,areaak, centroid mk, and inertia matrix Ik. I.e. -

Edge Detection Low Level

Image Processing - Lesson 10 Image Processing - Computer Vision Edge Detection Low Level • Edge detection masks Image Processing representation, compression,transmission • Gradient Detectors • Compass Detectors image enhancement • Second Derivative - Laplace detectors • Edge Linking edge/feature finding • Hough Transform image "understanding" Computer Vision High Level UFO - Unidentified Flying Object Point Detection -1 -1 -1 Convolution with: -1 8 -1 -1 -1 -1 Large Positive values = light point on dark surround Large Negative values = dark point on light surround Example: 5 5 5 5 5 -1 -1 -1 5 5 5 100 5 * -1 8 -1 5 5 5 5 5 -1 -1 -1 0 0 -95 -95 -95 = 0 0 -95 760 -95 0 0 -95 -95 -95 Edge Definition Edge Detection Line Edge Line Edge Detectors -1 -1 -1 -1 -1 2 -1 2 -1 2 -1 -1 2 2 2 -1 2 -1 -1 2 -1 -1 2 -1 -1 -1 -1 2 -1 -1 -1 2 -1 -1 -1 2 gray value gray x edge edge Step Edge Step Edge Detectors -1 1 -1 -1 1 1 -1 -1 -1 -1 -1 -1 -1 -1 -1 1 -1 -1 1 1 -1 -1 -1 -1 1 1 1 1 -1 1 -1 -1 1 1 1 1 1 1 gray value gray -1 -1 1 1 1 -1 1 1 x edge edge Example Edge Detection by Differentiation Step Edge detection by differentiation: 1D image f(x) gray value gray x 1st derivative f'(x) threshold |f'(x)| - threshold Pixels that passed the threshold are -1 -1 1 1 -1 -1 -1 -1 Edge Pixels -1 -1 1 1 -1 -1 -1 -1 -1 -1 1 1 1 1 1 1 -1 -1 1 1 1 -1 1 1 Gradient Edge Detection Gradient Edge - Examples ∂f ∂x ∇ f((x,y) = Gradient ∂f ∂y ∂f ∇ f((x,y) = ∂x , 0 f 2 f 2 Gradient Magnitude ∂ + ∂ √( ∂ x ) ( ∂ y ) ∂f ∂f Gradient Direction tg-1 ( / ) ∂y ∂x ∂f ∇ f((x,y) = 0 , ∂y Differentiation in Digital Images Example Edge horizontal - differentiation approximation: ∂f(x,y) Original FA = ∂x = f(x,y) - f(x-1,y) convolution with [ 1 -1 ] Gradient-X Gradient-Y vertical - differentiation approximation: ∂f(x,y) FB = ∂y = f(x,y) - f(x,y-1) convolution with 1 -1 Gradient-Magnitude Gradient-Direction Gradient (FA , FB) 2 2 1/2 Magnitude ((FA ) + (FB) ) Approx. -

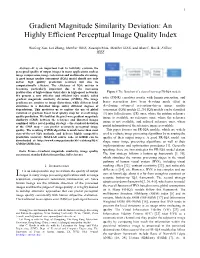

Gradient Magnitude Similarity Deviation: an Highly Efficient Perceptual Image Quality Index

1 Gradient Magnitude Similarity Deviation: An Highly Efficient Perceptual Image Quality Index Wufeng Xue, Lei Zhang, Member IEEE, Xuanqin Mou, Member IEEE, and Alan C. Bovik, Fellow, IEEE Abstract—It is an important task to faithfully evaluate the perceptual quality of output images in many applications such as image compression, image restoration and multimedia streaming. A good image quality assessment (IQA) model should not only deliver high quality prediction accuracy but also be computationally efficient. The efficiency of IQA metrics is becoming particularly important due to the increasing proliferation of high-volume visual data in high-speed networks. Figure 1 The flowchart of a class of two-step FR-IQA models. We present a new effective and efficient IQA model, called ratio (PSNR) correlates poorly with human perception, and gradient magnitude similarity deviation (GMSD). The image gradients are sensitive to image distortions, while different local hence researchers have been devoting much effort in structures in a distorted image suffer different degrees of developing advanced perception-driven image quality degradations. This motivates us to explore the use of global assessment (IQA) models [2, 25]. IQA models can be classified variation of gradient based local quality map for overall image [3] into full reference (FR) ones, where the pristine reference quality prediction. We find that the pixel-wise gradient magnitude image is available, no reference ones, where the reference similarity (GMS) between the reference and distorted images image is not available, and reduced reference ones, where combined with a novel pooling strategy – the standard deviation of the GMS map – can predict accurately perceptual image partial information of the reference image is available. -

Fast and Optimal Laplacian Solver for Gradient-Domain Image Editing Using Green Function Convolution

Fast and Optimal Laplacian Solver for Gradient-Domain Image Editing using Green Function Convolution Dominique Beaini, Sofiane Achiche, Fabrice Nonez, Olivier Brochu Dufour, Cédric Leblond-Ménard, Mahdis Asaadi, Maxime Raison Abstract In computer vision, the gradient and Laplacian of an image are used in different applications, such as edge detection, feature extraction, and seamless image cloning. Computing the gradient of an image is straightforward since numerical derivatives are available in most computer vision toolboxes. However, the reverse problem is more difficult, since computing an image from its gradient requires to solve the Laplacian equation (also called Poisson equation). Current discrete methods are either slow or require heavy parallel computing. The objective of this paper is to present a novel fast and robust method of solving the image gradient or Laplacian with minimal error, which can be used for gradient-domain editing. By using a single convolution based on a numerical Green’s function, the whole process is faster and straightforward to implement with different computer vision libraries. It can also be optimized on a GPU using fast Fourier transforms and can easily be generalized for an n-dimension image. The tests show that, for images of resolution 801x1200, the proposed GFC can solve 100 Laplacian in parallel in around 1.0 milliseconds (ms). This is orders of magnitude faster than our nearest competitor which requires 294ms for a single image. Furthermore, we prove mathematically and demonstrate empirically that the proposed method is the least-error solver for gradient domain editing. The developed method is also validated with examples of Poisson blending, gradient removal, and the proposed gradient domain merging (GDM). -

Fast Almost-Gaussian Filtering

Fast Almost-Gaussian Filtering Peter Kovesi Centre for Exploration Targeting School of Earth and Environment The University of Western Australia 35 Stirling Highway Crawley WA 6009 Email: [email protected] Abstract—Image averaging can be performed very efficiently paper describes how the fixed low cost of averaging achieved using either separable moving average filters or by using summed through separable moving average filters, or via summed area area tables, also known as integral images. Both these methods tables, can be exploited to achieve a good approximation allow averaging to be performed at a small fixed cost per pixel, independent of the averaging filter size. Repeated filtering with to Gaussian filtering also at a small fixed cost per pixel, averaging filters can be used to approximate Gaussian filtering. independent of filter size. Thus a good approximation to Gaussian filtering can be achieved Summed area tables were devised by Crow in 1984 [12] but at a fixed cost per pixel independent of filter size. This paper only recently introduced to the computer vision community by describes how to determine the averaging filters that one needs Viola and Jones [13]. A summed area table, or integral image, to approximate a Gaussian with a specified standard deviation. The design of bandpass filters from the difference of Gaussians can be generated by computing the cumulative sums along the is also analysed. It is shown that difference of Gaussian bandpass rows of an image and then computing the cumulative sums filters share some of the attributes of log-Gabor filters in that down the columns.