The Philosophy of Psychology

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Sociology One Course in Upper Level Writing

North Dakota State University Sociology Sociology is the scientific study of social structure, social inequality, social change, and social interaction that comprise societies. The sociological perspective examines the broad social context in which people live. This context shapes our beliefs and attitudes and sets guidelines for what we do. The curriculum is structured to introduce majors to the sociology discipline and provide them with conceptual and practical tools to understand social behavior and societies. Areas of study include small groups, populations, inequality, diversity, gender, social change, families, community development, organizations, medical sociology, aging, and the environment. The 38-credit requirement includes the following core: ANTH 111 Introduction to Anthropology 3 ENGL 120 College Composition II 3 One Course in Upper Level Writing. Select one of the following: 3 ENGL 320 Business and Professional Writing ENGL 324 Writing in the Sciences ENGL 358 Writing in the Humanities and Social Sciences ENGL 459 Researching and Writing Grants and Proposal COMM 110 Fundamentals of Public Speaking 3 Quantitative Reasoning (R): STAT 330 Introductory Statistics 3 Science & Technology (S): 10 A one-credit lab must be taken as a co-requisite with a general education science/technology course unless the course includes an embedded lab experience equivalent to a one-credit course. Select from current general education list. Humanities & Fine Arts (A): Select from current general education list 6 Social & Behavioral Sciences -

Psycholinguistics

11/09/2013 Psycholinguistics What do these activities have in common? What kind of process is involved in producing and understanding language? Questions • What is psycholinguistics? • What are the main topics of psycholinguistics? 9.1 Introduction • Psycholinguistics is the study of the language processing mechanisms. Psycholinguistics deals with the mental processes a person uses in producing and understanding language. It is concerned with the relationship between language and the human mind, for example, how word, sentence, and discourse meaning are represented and computed in the mind. • As the name suggests, it is a subject which links psychology and linguistics. • Psycholinguistics is interdisciplinary in nature and is studied by people in a variety of fields, such as psychology, cognitive science, and linguistics. It is an area of study which draws insights from linguistics and psychology and focuses upon the comprehension and production of language. The scope of psycholinguistics • The common aim of psycholinguists is to find out the structures and processes which underlie a human’s ability to speak and understand language. • Psycholinguists are not necessarily interested in language interaction between people. They are trying above all to probe into what is happening within the individual. • At its heart, psycholinguistic work consists of two questions. – What knowledge of language is needed for us to use language? – What processes are involved in the use of language? The “knowledge” question • Four broad areas of language knowledge: Semantics deals with the meanings of sentences and words. -

Psychology, Meaning Making and the Study of Worldviews: Beyond Religion and Non-Religion

Psychology, Meaning Making and the Study of Worldviews: Beyond Religion and Non-Religion Ann Taves, University of California, Santa Barbara Egil Asprem, Stockholm University Elliott Ihm, University of California, Santa Barbara Abstract: To get beyond the solely negative identities signaled by atheism and agnosticism, we have to conceptualize an object of study that includes religions and non-religions. We advocate a shift from “religions” to “worldviews” and define worldviews in terms of the human ability to ask and reflect on “big questions” ([BQs], e.g., what exists? how should we live?). From a worldviews perspective, atheism, agnosticism, and theism are competing claims about one feature of reality and can be combined with various answers to the BQs to generate a wide range of worldviews. To lay a foundation for the multidisciplinary study of worldviews that includes psychology and other sciences, we ground them in humans’ evolved world-making capacities. Conceptualizing worldviews in this way allows us to identify, refine, and connect concepts that are appropriate to different levels of analysis. We argue that the language of enacted and articulated worldviews (for humans) and worldmaking and ways of life (for humans and other animals) is appropriate at the level of persons or organisms and the language of sense making, schemas, and meaning frameworks is appropriate at the cognitive level (for humans and other animals). Viewing the meaning making processes that enable humans to generate worldviews from an evolutionary perspective allows us to raise new questions for psychology with particular relevance for the study of nonreligious worldviews. Keywords: worldviews, meaning making, religion, nonreligion Acknowledgments: The authors would like to thank Raymond F. -

The 100 Most Eminent Psychologists of the 20th Century

Review of General Psychology Copyright 2002 by the Educational Publishing Foundation 2002, Vol. 6, No. 2, 139–152 1089-2680/02/$5.00 DOI: 10.1037//1089-2680.6.2.139 The 100 Most Eminent Psychologists of the 20th Century Steven J. Haggbloom Renee Warnick, Jason E. Warnick, Western Kentucky University Vinessa K. Jones, Gary L. Yarbrough, Tenea M. Russell, Chris M. Borecky, Reagan McGahhey, John L. Powell III, Jamie Beavers, and Emmanuelle Monte Arkansas State University A rank-ordered list was constructed that reports the first 99 of the 100 most eminent psychologists of the 20th century. Eminence was measured by scores on 3 quantitative variables and 3 qualitative variables. The quantitative variables were journal citation frequency, introductory psychology textbook citation frequency, and survey response frequency. The qualitative variables were National Academy of Sciences membership, election as American Psychological Association (APA) president or receipt of the APA Distinguished Scientific Contributions Award, and surname used as an eponym. The qualitative variables were quantified and combined with the other 3 quantitative variables to produce a composite score that was then used to construct a rank-ordered list of the most eminent psychologists of the 20th century. The discipline of psychology underwent a remarkable transformation during the 20th century, a transformation that included a shift away from the European-influenced philosophical psychology of the late 19th century to the empirical, research-based, American-dominated psychology of today (Simonton, 1992). eve of the 21st century, the APA Monitor (“A Century of Psychology,” 1999) published brief biographical sketches of some of the more eminent contributors to that transformation. Milestones such as a new year, a new decade, or, in this case, a new century seem inevitably to -

Philosophy of Psychology, Fall 2010

Philosophy of Psychology, Fall 2010 http://mechanism.ucsd.edu/~bill/teaching/f10/phil149/index.html Phil 149 Philosophy of Psychology Fall 2010, Tues. Thurs., 11:00am-12:20 pm Professor: William Bechtel Office Hours: Wednesday, 2:30-4:00, and by appointment Office: HSS 8076 Email: [email protected] Webpage: mechanism.ucsd.edu/~bill/teaching/f10/phil149/ Telephone: 822-4461 1. Course Description How do scientists explain mental activities such as thinking, imagining, and remembering? Are the explanations offered in psychology similar to or different from those found in the natural sciences? How do psychological explanations relate to those of other disciplines, especially those included in cognitive science? The course will focus on major research traditions in psychology, with a special focus on contemporary cognitive psychology. Research on memory will provide a focus for the latter portion of the course. Given the nature of the class, substantial material will be presented in lectures that goes beyond what is included in the readings. Also, philosophy is an activity, and learning activities requires active engagement. Accordingly, class attendance and discussion are critical. Although we will have discussions on other occasions as well, several classes are designated as discussion classes. 2. Course Requirements Class attendance is mandatory. Missing classes more than very occasionally will result in a reduction in your grade. To get the most out of the class, it is absolutely essential that everyone comes to class prepared to discuss the readings. To ensure that this happens and to foster subsequent discussions in class, you will be required to turn in very short (one paragraph) comments or questions on the reading assigned during each week of the quarter, except when there is an exam. -

Cognitive Psychology

COGNITIVE PSYCHOLOGY PSYCH 126 Acknowledgements College of the Canyons would like to extend appreciation to the following people and organizations for allowing this textbook to be created: California Community Colleges Chancellor’s Office Chancellor Diane Van Hook Santa Clarita Community College District College of the Canyons Distance Learning Office In providing content for this textbook, the following professionals were invaluable: Mehgan Andrade, who was the major contributor and compiler of this work and Neil Walker, without whose help the book could not have been completed. Special Thank You to Trudi Radtke for editing, formatting, readability, and aesthetics. The contents of this textbook were developed under the Title V grant from the Department of Education (Award #P031S140092). However, those contents do not necessarily represent the policy of the Department of Education, and you should not assume endorsement by the Federal Government. Unless otherwise noted, the content in this textbook is licensed under CC BY 4.0 Table of Contents Psychology 126 Chapter 1 - History of Cognitive Psychology Definition of Cognitive Psychology -

Biohumanities: Rethinking the Relationship Between Biosciences, Philosophy and History of Science, and Society*

Biohumanities: Rethinking the relationship between biosciences, philosophy and history of science, and society* Karola Stotz† Paul E. Griffiths‡ †Karola Stotz, Cognitive Science Program, 810 Eigenmann, Indiana University, Bloomington, IN 47408, USA, [email protected] ‡Paul Griffiths, School of Philosophical and Historical Inquiry, University of Sydney, NSW 2006, Australia, [email protected] *This material is based upon work supported by the National Science Foundation under Grants #0217567 and #0323496. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation. Griffiths's work on the paper was supported by Australian Research Council Federation Fellowship FF0457917. Abstract We argue that philosophical and historical research can constitute a ‘Biohumanities’ which deepens our understanding of biology itself; engages in constructive 'science criticism'; helps formulate new 'visions of biology'; and facilitates 'critical science communication'. We illustrate these ideas with two recent 'experimental philosophy' studies of the concept of the gene and of the concept of innateness conducted by ourselves and collaborators. 1. Introduction: What is 'Biohumanities'? Biohumanities is a view of the relationship between the humanities (especially philosophy and history of science), biology, and society. In this vision, the humanities not only comment on the significance or implications of biological knowledge, but add to our understanding of biology itself. The history of genetics and philosophical work on the concept of the gene both enrich our understanding of genetics. 
This is perhaps most evident in classic works on the history of genetics, which not only describe how we reached our current theories but deepen our understanding of those theories through comparing and contrasting them to the alternatives which they displaced (Olby, 1974, 1985). -

Philosophy of Mind

From the Routledge Encyclopedia of Philosophy Philosophy of Mind Frank Jackson, Georges Rey Philosophical Concept ‘Philosophy of mind’, and ‘philosophy of psychology’ are two terms for the same general area of philosophical inquiry: the nature of mental phenomena and their connection with behaviour and, in more recent discussions, the brain. Much work in this area reflects a revolution in psychology that began mid-century. Before then, largely in reaction to traditional claims about the mind being non-physical (see Dualism; Descartes), many thought that a scientific psychology should avoid talk of ‘private’ mental states. Investigation of such states had seemed to be based on unreliable introspection (see Introspection, psychology of), not subject to independent checking (see Private language argument), and to invite dubious ideas of telepathy (see Parapsychology). Consequently, psychologists like B.F. Skinner and J.B. Watson, and philosophers like W.V. Quine and Gilbert Ryle argued that scientific psychology should confine itself to studying publicly observable relations between stimuli and responses (see Behaviourism, methodological and scientific; Behaviourism, analytic). However, in the late 1950s, several developments began to change all this: (i) The experiments behaviourists themselves ran on animals tended to refute behaviouristic hypotheses, suggesting that the behaviour of even rats had to be understood in terms of mental states (see Learning; Animal language and thought). (ii) The linguist Noam Chomsky drew attention to the surprising complexity of the natural languages that children effortlessly learn, and proposed ways of explaining this complexity in terms of largely unconscious mental phenomena. (iii) The revolutionary work of Alan Turing (see Turing machines) led to the development of the modern digital computer. -

Wittgenstein on Freedom of the Will: Not Determinism, Yet Not Indeterminism

Wittgenstein on Freedom of the Will: Not Determinism, Yet Not Indeterminism Thomas Nadelhoffer This is a prepublication draft. This version is being revised for resubmission to a journal. Abstract Since the publication of Wittgenstein’s Lectures on Freedom of the Will, his remarks about free will and determinism have received very little attention. Insofar as these lectures give us an opportunity to see him at work on a traditional—and seemingly intractable—philosophical problem and given the voluminous secondary literature written about nearly every other facet of Wittgenstein’s life and philosophy, this neglect is both surprising and unfortunate. Perhaps these lectures have not attracted much attention because they are available to us only in the form of a single student’s notes (Yorick Smythies). Or perhaps it is because, as one Wittgenstein scholar put it, the lectures represent only “cursory reflections” that “are themselves uncompelling." (Glock 1996: 390) Either way, my goal is to show that Wittgenstein’s views about freedom of the will merit closer attention. All of these arguments might look as if I wanted to argue for the freedom of the will or against it. But I don't want to. --Ludwig Wittgenstein, Lectures on Freedom of the Will Since the publication of Wittgenstein’s Lectures on Freedom of the Will,1 his remarks from these lectures about free will and determinism have received very little attention.2 Insofar as these lectures give us an opportunity to see him at work on a traditional—and seemingly intractable— philosophical problem and given the voluminous secondary literature written about nearly every 1 Wittgenstein’s “Lectures on Freedom of the Will” will be abbreviated as LFW 1993 in this paper (see bibliography) since I am using the version reprinted in Philosophical Occasions (1993). -

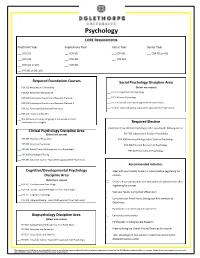

Psychology CORE Requirements

Psychology CORE Requirements Freshman Year Sophomore Year Junior Year Senior Year ___ COR 101 ___ COR 201 ___ COR 301 ___ COR 401 or 402 ___ COR 102 ___ COR 202 ___ COR 302 ___ COR 103 or 104 ___ COR 203 ___ FYS 101 or OGL 101 Required Foundation Courses Social Psychology Discipline Area ___ PSY 101 Introduction to Psychology (Select one course) ___ PSY 209 Behavioral Neuroscience ___ PSY 202 Organizational Psychology ___ PSY 204 Social Psychology ___ PSY 320 Psychological Statistics and Research Methods I ___ PSY 321 Psychological Statistics and Research Methods II ___ PSY 290 Special Topics (With approval from Psych dept.) ___ PSY 322 Advanced Experimental Psychology ___ PSY 490 Advanced Special Topics (With approval from Psych dept.) ___ PSY 405 History and Systems ___ One Semester of Foreign Language at the second semester elementary level or higher Required Elective Completion of any additional Psychology elective excluding the following courses: Clinical Psychology Discipline Area (Select one course) PSY 200 Independent Study in Psychology ___ PSY 205 Theories of Personality PSY 400 Advanced Independent Study in Psychology ___ PSY 206 Abnormal Psychology PSY 406 Directed Research in Psychology ___ PSY 290 Special Topics (With approval from Psych dept.) PSY 407 Internship in Psychology ___ PSY 303 Psychological Testing ___ PSY 490 Advanced Special Topics (With approval from Psych dept.) Recommended Activities Cognitive/Developmental Psychology Meet with your Faculty Academic Advisor before registering for Discipline Area courses -

The Three Meanings of Meaning in Life: Distinguishing Coherence, Purpose, and Significance

The Journal of Positive Psychology Dedicated to furthering research and promoting good practice ISSN: 1743-9760 (Print) 1743-9779 (Online) Journal homepage: http://www.tandfonline.com/loi/rpos20 The three meanings of meaning in life: Distinguishing coherence, purpose, and significance Frank Martela & Michael F. Steger To cite this article: Frank Martela & Michael F. Steger (2016) The three meanings of meaning in life: Distinguishing coherence, purpose, and significance, The Journal of Positive Psychology, 11:5, 531-545, DOI: 10.1080/17439760.2015.1137623 To link to this article: http://dx.doi.org/10.1080/17439760.2015.1137623 Published online: 27 Jan 2016. Author affiliations: Frank Martela, Faculty of Theology, University of Helsinki, P.O. Box 4, Helsinki 00014, Finland; Michael F. Steger, Department of Psychology, Colorado State University, 1876 Campus Delivery, Fort Collins, CO 80523-1876, USA, and School of Behavioural Sciences, North-West University, Vanderbijlpark, South Africa (Received 25 June 2015; accepted 3 December 2015) Despite growing interest in meaning in life, many have voiced their concern over the conceptual refinement of the construct itself. Researchers seem to have two main ways to understand what meaning in life means: coherence and purpose, with a third way, significance, gaining increasing attention. -

History and Philosophy of the Humanities

History and Philosophy of the Humanities An introduction Michiel Leezenberg and Gerard de Vries Translation by Michiel Leezenberg Amsterdam University Press Original publication: Michiel Leezenberg & Gerard de Vries, Wetenschapsfilosofie voor geesteswetenschappen, derde editie, Amsterdam University Press, 2017 © M. Leezenberg & G. de Vries / Amsterdam University Press B.V., Amsterdam 2017 Translated by Michiel Leezenberg Cover illustration: Johannes Vermeer, De astronoom (1668) Musee du Louvre, R.F. 1983-28 Cover design: Coördesign, Leiden Lay-out: Crius Group, Hulshout isbn 978 94 6372 493 7 e-isbn 978 90 4855 168 2 (pdf) doi 10.5117/9789463724937 nur 730 © Michiel Leezenberg & Gerard de Vries / Amsterdam University Press B.V., Amsterdam 2019 Translation © M. Leezenberg All rights reserved. Without limiting the rights under copyright reserved above, no part of this book may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without the written permission of both the copyright owner and the author of the book. Every effort has been made to obtain permission to use all copyrighted illustrations reproduced in this book. Nonetheless, whosoever believes to have rights to this material is advised to contact the publisher. Table of Contents Preface 1 Introduction 1.1 The Tasks of the Philosophy of the Humanities 1.2 Knowledge and Truth 1.3 Interpretation and Perspective