A Review of Overview+Detail, Zooming, and Focus+Context Interfaces

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Computer Essentials – Session 1 – Step-By-Step Guide

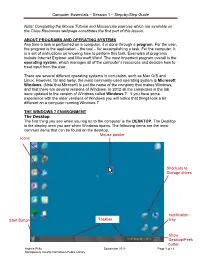

Note: Completing the Mouse Tutorial and the Mousercise exercise, both available on the Class Resources webpage, constitutes the first part of this lesson.

ABOUT PROGRAMS AND OPERATING SYSTEMS

Any time a task is performed on a computer, it is done through a program. For the user, the program is the application – the tool – for accomplishing a task. For the computer, it is a set of instructions for performing that task. Examples of programs include Internet Explorer and Microsoft Word. The most important program overall is the operating system, which manages all of the computer’s resources and decides how to treat input from the user. There are several different operating systems in circulation, such as Mac OS and Linux. However, the most commonly used operating system by far is Microsoft Windows. (Note that Microsoft is just the name of the company that makes Windows, and that there are several versions of Windows.) In 2012 all the computers in the lab were updated to the version of Windows called Windows 7. If you have some experience with older versions of Windows, you will notice that things look a bit different on a computer running Windows 7.

THE WINDOWS 7 ENVIRONMENT

The Desktop

The first thing you see when you log on to the computer is the DESKTOP. The Desktop is the display area you see when Windows opens. The following items are the most common items that can be found on the desktop: mouse pointer, icons, shortcuts to storage drives, Start button, taskbar, notification tray, and Show Desktop/Peek button.

(Andrea Philo, September 2012, Montgomery County-Norristown Public Library)

Parts of the Windows 7 Desktop

Icon: A picture representing a program or file or places to store files.

Still Photography

Soumik Mitra. Published by Jharkhand Rai University. Subject: STILL PHOTOGRAPHY. Credits: 4

SYLLABUS

- Introduction to Photography: Beginning of Photography; People Who Shaped Photography.
- Camera, Lenses & Accessories - I: What Is a Camera; Types of Camera; TLR; APS & Digital Cameras; Single-Lens Reflex Cameras.
- Camera, Lenses & Accessories - II: Photographic Lenses; Using Different Lenses; Filters.
- Exposure & Light: Understanding Exposure; Exposure in Practical Use.
- Photogram: Introduction; Making Photogram.
- Darkroom Practice: Introduction to Basic Printing; Photographic Papers; Chemicals for Printing.

Suggested Readings:

1. Still Photography: the Problematic Model, Lew Thomas, Peter D'Agostino, NFS Press.
2. Images of Information: Still Photography in the Social Sciences, Jon Wagner.
3. Photographic Tools for Teachers: Still Photography, Roy A. Frye.

Introduction to Photography

Course Descriptions

The department of Photography at the IFT offers a provocative and experimental curriculum in the setting of a large, diversified university. As one of the pioneering programs of graduate and undergraduate study in photography in India, we aim to provide the best to our students and to help them relate practical studies in art and craft to a professional context. The Photography program combines the teaching of craft, history, and contemporary ideas with the critical examination of conventional forms of art making. The curriculum at IFT is designed to give students the technical training and aesthetic awareness to develop a strong individual expression as an artist. The faculty represents a broad range of interests and aesthetics, with course offerings often reflecting their individual passions and concerns. In this fundamental course, students will identify basic photographic tools and their intended purposes, including the proper use of various camera systems, light meters and film selection.

Windows 7 Operating Guide

Welcome to Windows 7

You told us what you wanted. We listened. This Windows® 7 Product Guide highlights the new and improved features that will help deliver the one thing you said you wanted the most: Your PC, simplified.

Contents

- INTRODUCTION TO WINDOWS 7
- DESIGNING WINDOWS 7
  - Market Trends that Inspired Windows 7
- WINDOWS 7 EDITIONS
  - Windows 7 Starter
  - Windows 7 Home Basic
  - Windows 7 Home Premium
  - Windows 7 Professional
  - Windows 7 Enterprise / Windows 7 Ultimate
  - Windows Anytime Upgrade
  - Microsoft Desktop Optimization Pack
  - Windows 7 Editions Comparison
- GETTING STARTED WITH WINDOWS 7
  - Upgrading a PC to Windows 7
- WHAT’S NEW IN WINDOWS 7
  - Top Features for You
  - Top Features for IT Professionals
  - Application and Device Compatibility
- WINDOWS 7 FOR YOU
- WINDOWS 7 FOR YOU: SIMPLIFIES EVERYDAY TASKS
  - Simple to Navigate
  - Easier to Find Things
  - Easy to Browse the Web
  - Easy to Connect PCs and Manage Devices
  - Easy to Communicate and Share
- WINDOWS 7 FOR YOU: WORKS THE WAY YOU WANT
  - Speed, Reliability, and Responsiveness
  - More Secure
  - Compatible with You
  - Better Troubleshooting and Problem Solving
- WINDOWS 7 FOR YOU: MAKES NEW THINGS POSSIBLE
  - Media the Way You Want It
  - Work Anywhere
  - New Ways to Engage
- WINDOWS 7 FOR IT PROFESSIONALS
- WINDOWS 7 FOR IT PROFESSIONALS: MAKE PEOPLE PRODUCTIVE ANYWHERE
  - Remove Barriers to Information
  - Access

Software Manual IDEA – the Software

Version 1.1. Open Technologies Srl, WWW.SCANINABOX.COM. Copyright © 2016 Open Technologies Srl. First printing, June 2016.

Contents

Part One

- 1 IDEA - the application for Scan in a Box
  - 1.1 Application Interface
    - 1.1.1 Project management panel
    - 1.1.2 3D view panel
    - 1.1.3 Toolbar
      - 1.1.3.1 Project management (orange)
      - 1.1.3.2 Acquisition (bordeaux)
      - 1.1.3.3 Alignment (light blue)
      - 1.1.3.4 Selection (turquoise)
      - 1.1.3.5 Rendering (pink)
      - 1.1.3.6 General (purple)
      - 1.1.3.7 Features specific to range images (blue)
      - 1.1.3.8 Features specific to triangle meshes (light green)
- 2 Using IDEA
  - 2.1 Optical set-up
    - 2.1.1 Optical set-up mode
    - 2.1.2 Calibration of the optical head
  - 2.2 Capture and alignment
    - 2.2.1 Free mode acquisition
    - 2.2.2 Turn Table acquisition mode

The Desktop (Overview)

The desktop is the main screen area that you see after you turn on your computer and log on to Windows. Like the top of an actual desk, it serves as a surface for your work. When you open programs or folders, they appear on the desktop. You can also put things on the desktop, such as files and folders, and arrange them however you want.

The desktop is sometimes defined more broadly to include the taskbar and Windows Sidebar. The taskbar sits at the bottom of your screen. It shows you which programs are running and allows you to switch between them. It also contains the Start button, which you can use to access programs, folders, and computer settings. On the side of the screen, Sidebar contains small programs called gadgets.

[Figure: The desktop, taskbar, and Sidebar]

Where did my desktop go?

Because programs run on top of the desktop, the desktop itself is often partially or completely hidden. But it's still there, underneath everything. To see the whole desktop without closing any of your open programs or windows, click the Show Desktop button on the taskbar. The desktop is revealed. Click the icon again to restore all of your windows to the way they were.

(Desktop Training Session Handout, http://ict.maxwell.syr.edu/vista/)

Working with desktop icons

Icons are small pictures that represent files, folders, programs, and other items. When you first start Windows, you'll see at least one icon on your desktop: the Recycle Bin (more on that later).

Bforartists UI Redesign Design Document Part 2 - Theming

Content

- Preface
- The editor and window types
- Python console
  - Layout
  - The Console Window
  - Menu bar with a menu
  - Dropdown box with icon
  - RMB menu for menu bar
  - Toolbar
  - Button Textform

Navigate Windows and Folders

Windows® 7 Step by Step by Joan Preppernau and Joyce Cox. To learn more about this book, visit Microsoft Learning at http://www.microsoft.com/MSPress/books/ 9780735626676 ©2009 Joan Preppernau and Joyce Cox. Early Content – Subject to Change.

Draft Table of Contents Overview

- Front Matter
  - Contents
  - About the Authors
  - Features and Conventions of This Book
  - Using the Companion CD
  - Getting Help
  - Introducing Windows 7
- Part I: Getting Started with Windows 7
  - 1 Explore Windows 7

10 Tips on How to Master the Cinematic Tools And

10 TIPS ON HOW TO MASTER THE CINEMATIC TOOLS AND ENHANCE YOUR DANCE FILM - the cinematographer's point of view

Your skills at the service of the movement and the choreographer: understand the language of the Dance and be able to transmute it into filmic images.

1. The Subject - The Dance is the Star

When you film, frame and light the Dance, the primary subject is the Dance and the related movement: not the dancers, not the scenography, not the music, just the Dance and nothing else. The Dance is about movement, not about positions. When you film the dance you are filming the movement, not a sequence of positions, and to fully grasp this concept you must understand what movement is. As the French philosopher Gilles Deleuze said, “we always tend to confuse movement with traversed space…” Movement is the act of traversing; when you film the Dance you film an act, not an aestheticizing image of a subject. At the beginning it is difficult to understand how to film something as abstract as movement, but with practice you will start to focus on what really matters and you will start to forget about the dancers. Movement is life, and the more of it you capture, the more alive the characters are, and therefore more real, in a way that you can almost touch them, almost dance with them. The Dance is movement with a rhythm, and when you film it you have to become part of the whole rhythm. As when you add an instrument to a music composition, the vocabulary of cinema is just another layer on the whole artwork.

Spot-Tracking Lens: a Zoomable User Interface for Animated Bubble Charts

Yueqi Hu, Tom Polk, Jing Yang (University of North Carolina at Charlotte); Ye Zhao (Kent State University); Shixia Liu (Tsinghua University)

Figure 1: A screenshot of the spot-tracking lens. The lens is following Belarus in the year 1995. Egypt, Syria, and Tunisia are automatically labeled since they move faster than Belarus. Ukraine and Russia are tracked. They are visible even when they go out of the spotlight. The color coding of countries is the same as in Gapminder [1], in which countries from the same geographic region share the same color. The world map on the top right corner provides a legend of the colors.

ABSTRACT

Zoomable user interfaces are widely used in static visualizations and have many benefits. However, they are not well supported in animated visualizations due to problems such as change blindness and information overload. We propose the spot-tracking lens, a new zoomable user interface for animated bubble charts, to tackle these problems. It couples zooming with automatic panning and provides a rich set of auxiliary techniques to enhance its effectiveness.

[...] thus see more details. Zooming brings many benefits to visualization: it allows users to examine the context of an interesting object by zooming in the area where the object resides; labels overcrowded in the original view can be displayed without overlaps after zooming in; it allows users to focus on a local area and thus reduce their cognitive load. In spite of these benefits, zooming is not as well supported in an-

Adding the Maine Prescription Monitoring System As a Favorite

Beginning 1/1/17, all newly prescribed narcotic medications ordered when discharging a patient from the Emergency Department or an Inpatient department must be recorded in the Maine prescription monitoring system (Maine PMP). Follow these steps to access the Maine PMP site from the SeHR/Epic system and create shortcuts to the activity to simplify access.

Access the Maine PMP site from Hyperspace

1. Follow this path for the initial launch of the Maine PMP site: Epic > Help > Maine PMP Program. Please note: the Maine PMP database is managed and maintained by the State of Maine and does NOT support single sign-on.

2. Click Login to enter your Maine PMP user name and password.

   a. If you know your user name and password, click Login.

   b. To retrieve your user name, click Retrieve User Name.

   c. To retrieve your password, click Retrieve Password.

Save the Maine PMP site as a favorite under the Epic button

After accessing the site from the Epic menu path, you can make the link a favorite under the Epic menu.

1. Click the Epic button. The Maine PMP program hyperlink will appear under your Recent activities; click the Star icon to save it as a favorite. After starring the activity, it becomes a favorite stored under the Epic button.

Save the Maine PMP site as a Quick Button on your Epic Toolbar

After accessing the site from the Epic menu path, you may choose to make the Maine PMP hyperlink a quick button on your main Epic toolbar.

Organizing Windows Desktop/Workspace

Instructions

Below are the different places in Windows that you may want to customize. On your lab computer, go ahead and set up the environment in different ways to see how you’d like to customize your work computer.

Start Menu and Taskbar

● Size: Click on the Start Icon (bottom left). As you move your mouse to the edges of the Start Menu window, your mouse icon will change to the resize icon. Click and drag the mouse to the desired Start Menu size.

● Open the Start Menu and “Pin” apps to the Start Menu/Taskbar by finding them in the list, right-clicking the app, and selecting “Pin to Start” or “More” -> “Pin to Taskbar”, OR click and drag the icon to the Tiles section.

● Drop “Tiles” on top of each other to create folders of apps.

● Right-click on Tiles (for example the Weather Tile), and you can resize the Tile (maybe for apps you use more often), and also Turn On live tiles to get updates automatically in the Tile (not for all Tiles).

● Right-click applications in the Taskbar to view “jump lists” for certain applications, which can show recently used documents, visited websites, or other application options.

● If you prefer using the keyboard for opening apps, you probably won’t need to customize the Start Menu. Simply hit the Windows Key and start typing the name of the application to open, then hit Enter when it is highlighted. As the same searches happen, the most used apps will show up as the first selection.

Tilt-Based Automatic Zooming and Scaling in Mobile Devices – a State-Space Implementation

Parisa Eslambolchilar 1, Roderick Murray-Smith 1,2

1 Hamilton Institute, National University of Ireland, NUI, Maynooth, Co. Kildare, Ireland

2 Department of Computing Science, Glasgow University, Glasgow G12 8QQ, Scotland

Abstract. We provide a dynamic systems interpretation of the coupling of internal states involved in speed-dependent automatic zooming, and test our implementation on a text browser on a Pocket PC instrumented with an accelerometer. The dynamic systems approach to the design of such continuous interaction interfaces allows the incorporation of analytical tools and constructive techniques from manual and automatic control theory. We illustrate experimental results of the use of the proposed coupled navigation and zooming interface with classical scroll and zoom alternatives.

1 Introduction

Navigation techniques such as scrolling (or panning) and zooming are essential components of mobile device applications such as map browsing and reading text documents, allowing the user access to a larger information space than can be viewed on the small screen. Scrolling allows the user to move to different locations, while zooming allows the user to view a target at different scales. However, the restrictions in screen space on mobile devices make it difficult to browse a large document efficiently. Using the traditional scroll bar, the user must move back and forth between the document and the scroll bar, which can increase the effort required to use the interface. In addition, in a long document, a small movement of the handle can cause a sudden jump to a distant location, resulting in disorientation and frustration.
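The coupling between scrolling and zooming mentioned in this excerpt can be illustrated with a small sketch. It is not the authors' state-space implementation: it only assumes the general idea of speed-dependent automatic zooming, in which the view zooms out as scroll speed rises, and it drives scroll velocity from device tilt with a simple damped model. All function names, parameters, and constants (`zoom_for_speed`, `simulate`, `gain`, `damping`, and the default values) are hypothetical and chosen for illustration only.

```python
def zoom_for_speed(speed, max_zoom=1.0, min_zoom=0.25, speed_at_min_zoom=2000.0):
    """Map scroll speed (pixels/s) to a zoom factor.

    Zoom falls linearly from max_zoom at rest to min_zoom at
    speed_at_min_zoom, and is clamped at min_zoom beyond that.
    All constants are illustrative assumptions.
    """
    fraction = min(abs(speed), speed_at_min_zoom) / speed_at_min_zoom
    return max_zoom - fraction * (max_zoom - min_zoom)


def simulate(tilts, gain=4000.0, damping=3.0, dt=0.02):
    """Integrate a damped velocity model driven by tilt readings.

    Each tilt value acts as a force on the scroll velocity; damping pulls
    the velocity back toward zero. Returns one (position, velocity, zoom)
    tuple per input sample, using explicit Euler steps of length dt.
    """
    position, velocity = 0.0, 0.0
    trace = []
    for tilt in tilts:
        acceleration = gain * tilt - damping * velocity
        velocity += acceleration * dt
        position += velocity * dt
        trace.append((position, velocity, zoom_for_speed(velocity)))
    return trace


if __name__ == "__main__":
    # Hold a constant tilt for four seconds and print every 50th sample.
    for position, velocity, zoom in simulate([0.5] * 200)[::50]:
        print(f"pos={position:9.1f}  vel={velocity:7.1f}  zoom={zoom:.3f}")
```

With a constant tilt, the velocity in this model settles near `gain * tilt / damping`, so the zoom level settles too. A design of this kind lets a single input (tilt) control both how fast the document moves and how much of it is visible.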