A New Backend for Standard ML of New Jersey (Draft Paper)

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Type-Safe Multi-Tier Programming with Standard ML Modules

Experience Report: Type-Safe Multi-Tier Programming with Standard ML Modules. Martin Elsman (Department of Computer Science, University of Copenhagen, Denmark, [email protected]), Philip Munksgaard (iAlpha AG, Switzerland, [email protected]), Ken Friis Larsen (Department of Computer Science, University of Copenhagen, Denmark, kfl[email protected]).

Abstract: We describe our experience with using Standard ML modules and Standard ML’s core language typing features for ensuring type-safety across physically separated tiers in an extensive web application built around a database-backed server platform and advanced client code that accesses the server through asynchronous server requests.

1. Introduction: The iAlpha Platform is an advanced asset management system featuring a number of analytics modules for combining trading ...

... shared module, it is now possible, on the server, to implement a module that for each function responds to a request by first deserialising the argument into a value, calls the particular function and replies to the client with a serialised version of the result value. Dually, on the client side, for each function, the client code can serialise the argument and send the request asynchronously to the server. When the server responds, the client will deserialise the result value and apply the handler function to the value. Both for the server part and for the client part, the code can be implemented in a type-safe fashion. In particular, if an interface type changes, the programmer will be notified about all type-inconsistencies at compile -

Strict Protection for Virtual Function Calls in COTS C++ Binaries

vfGuard: Strict Protection for Virtual Function Calls in COTS C++ Binaries. Aravind Prakash, Xunchao Hu, Heng Yin. Department of EECS, Syracuse University. [email protected] [email protected] [email protected]

Abstract: Control-Flow Integrity (CFI) is an important security property that needs to be enforced to prevent control-flow hijacking attacks. Recent attacks have demonstrated that existing CFI protections for COTS binaries are too permissive, and vulnerable to sophisticated code reusing attacks. Accounting for control flow restrictions imposed at higher levels of semantics is key to increasing CFI precision. In this paper, we aim to provide more stringent protection for virtual function calls in COTS C++ binaries by recovering C++ level semantics. To achieve this goal, we recover C++ semantics, including VTables and virtual callsites. With the extracted C++ semantics, we construct a sound CFI policy and further improve the policy precision by devising two filters, namely “Nested Call Filter” and “Calling Convention Filter”.

... these binary-only solutions are unfortunately coarse-grained and permissive. While coarse-grained CFI solutions have significantly reduced the attack surface, recent efforts by Göktaş et al. [9] and Carlini [10] have demonstrated that coarse-grained CFI solutions are too permissive, and can be bypassed by reusing large gadgets whose starting addresses are allowed by these solutions. The primary reason for such permissiveness is the lack of higher level program semantics that introduce certain mandates on the control flow. For example, given a class inheritance, target of a virtual function dispatch in C++ must -

Four Lectures on Standard ML

Mads Tofte. March. Laboratory for Foundations of Computer Science, Department of Computer Science, Edinburgh University. Four Lectures on Standard ML. The following notes give an overview of Standard ML with emphasis placed on the Modules part of the language. The notes are, to the best of my knowledge, faithful to The Definition of Standard ML Version as regards syntax, semantics and terminology. They have been written so as to be independent of any particular implementation. The exercises in the first lectures can be tackled without the use of a machine, although having access to an implementation will no doubt be beneficial. The project in Lecture presupposes access to an implementation of the full language, including modules. At present the Edinburgh compiler does not fall into this category; the author used the New Jersey Standard ML compiler. Lecture gives an introduction to ML aimed at the reader who is familiar with some programming language but does not know ML. Both the Core Language and the Modules are covered by way of example. Lecture discusses the use of ML modules in the development of large programs. A useful methodology for programming with functors, signatures and structures is presented. Lecture gives a fairly detailed account of the static semantics of ML modules, for those who really want to understand the crucial notions of sharing and signature matching. Lecture presents a one-day project intended to give the student an opportunity of modifying a nontrivial piece of software using functors, signatures and structures -

Tricore Architecture Manual for a Detailed Discussion of Instruction Set Encoding and Semantics

User’s Manual, v2.3, Feb. 2007. TriCore 32-bit Unified Processor Core, Embedded Applications Binary Interface (EABI). Microcontrollers. Edition 2007-02. Published by Infineon Technologies AG, 81726 München, Germany. © Infineon Technologies AG 2007. All Rights Reserved. Legal Disclaimer: The information given in this document shall in no event be regarded as a guarantee of conditions or characteristics (“Beschaffenheitsgarantie”). With respect to any examples or hints given herein, any typical values stated herein and/or any information regarding the application of the device, Infineon Technologies hereby disclaims any and all warranties and liabilities of any kind, including without limitation warranties of non-infringement of intellectual property rights of any third party. Information: For further information on technology, delivery terms and conditions and prices please contact your nearest Infineon Technologies Office (www.infineon.com). Warnings: Due to technical requirements components may contain dangerous substances. For information on the types in question please contact your nearest Infineon Technologies Office. Infineon Technologies Components may only be used in life-support devices or systems with the express written approval of Infineon Technologies, if a failure of such components can reasonably be expected to cause the failure of that life-support device or system, or to affect the safety or effectiveness of that device or system. Life support devices or systems are intended to be implanted in the human body, or to support and/or maintain and sustain and/or protect human life. If they fail, it is reasonable to assume that the health of the user or other persons may be endangered. -

A Separate Compilation Extension to Standard ML

A Separate Compilation Extension to Standard ML. David Swasey, Tom Murphy VII, Karl Crary, Robert Harper. Carnegie Mellon. {swasey,tom7,crary,rwh}@cs.cmu.edu

Abstract: We present an extension to Standard ML, called SMLSC, to support separate compilation. The system gives meaning to individual program fragments, called units. Units may depend on one another in a way specified by the programmer. A dependency may be mediated by an interface (the type of a unit); if so, the units can be compiled separately. Otherwise, they must be compiled in sequence. We also propose a methodology for programming in SMLSC that reflects code development practice and avoids syntactic repetition of interfaces. The language is given a formal semantics, and we argue that this semantics is implementable in a variety of compilers.

Categories and Subject Descriptors: D.3.3 [Programming Languages]: Language Constructs and Features: Modules, packages; D.3.1 [Programming Languages]: Formal Definitions and ...

The goal of this work is to consolidate and synthesize previous work on compilation management for ML into a formally defined extension to the Standard ML language. The extension itself is syntactically and conceptually very simple. A unit is a series of Standard ML top-level declarations, given a name. To the current top-level declarations such as structure and functor we add an import declaration that is to units what the open declaration is to structures. An import declaration may optionally specify an interface for the unit, in which case we are able to compile separately against that dependency; with no interface we must compile incrementally. Compatibility with existing compilers, including whole-program compilers, is assured by making no commitment to the precise meaning of “compile” and “link”; a compiler is free to limit compilation to elaboration and type checking, and to perform code generation as part of linking. -

IAR C/C++ Compiler Reference Guide for V850

IAR Embedded Workbench® IAR C/C++ Compiler Reference Guide for the Renesas V850 Microcontroller Family CV850-9 COPYRIGHT NOTICE © 1998–2013 IAR Systems AB. No part of this document may be reproduced without the prior written consent of IAR Systems AB. The software described in this document is furnished under a license and may only be used or copied in accordance with the terms of such a license. DISCLAIMER The information in this document is subject to change without notice and does not represent a commitment on any part of IAR Systems. While the information contained herein is assumed to be accurate, IAR Systems assumes no responsibility for any errors or omissions. In no event shall IAR Systems, its employees, its contractors, or the authors of this document be liable for special, direct, indirect, or consequential damage, losses, costs, charges, claims, demands, claim for lost profits, fees, or expenses of any nature or kind. TRADEMARKS IAR Systems, IAR Embedded Workbench, C-SPY, visualSTATE, The Code to Success, IAR KickStart Kit, I-jet, I-scope, IAR and the logotype of IAR Systems are trademarks or registered trademarks owned by IAR Systems AB. Microsoft and Windows are registered trademarks of Microsoft Corporation. Renesas is a registered trademark of Renesas Electronics Corporation. V850 is a trademark of Renesas Electronics Corporation. Adobe and Acrobat Reader are registered trademarks of Adobe Systems Incorporated. All other product names are trademarks or registered trademarks of their respective owners. EDITION NOTICE Ninth edition: May 2013 Part number: CV850-9 This guide applies to version 4.x of IAR Embedded Workbench® for the Renesas V850 microcontroller family. -

Functional Programming (ML)

Functional Programming. CSE 215, Foundations of Computer Science, Stony Brook University. http://www.cs.stonybrook.edu/~cse215 (c) Paul Fodor (CS Stony Brook).

Functional Programming: Function evaluation is the basic concept for a programming paradigm that has been implemented in functional programming languages. The language ML (“Meta Language”) was originally introduced in the 1970’s as part of a theorem proving system, and was intended for describing and implementing proof strategies. Standard ML of New Jersey (SML) is an implementation of ML. The basic mode of computation in SML is the use of the definition and application of functions.

Install Standard ML: Download from http://www.smlnj.org. Start Standard ML: type ''sml'' from the shell (run command line in Windows). Exit Standard ML: Ctrl-Z under Windows, Ctrl-D under Unix/Mac.

Standard ML: The basic cycle of SML activity has three parts: read input from the user, evaluate it, print the computed value (or an error message).

First SML example: SML prompt: - Simple example: - 3; val it = 3 : int The first line contains the SML prompt, followed by an expression typed in by the user and ended by a semicolon. The second line is SML’s response, indicating the value of the input expression and its type.

Interacting with SML: SML has a number of built-in operators and data types. It provides the standard arithmetic operators: - 3+2; val it = 5 : int The Boolean values true and false are available, as are logical operators such as not (negation), andalso (conjunction), and orelse (disjunction). -

Robert Harper Carnegie Mellon University

The Future Of Standard ML. Robert Harper, Carnegie Mellon University. Sunday, September 22, 13.

Whither SML? SML has been hugely influential in both theory and practice. The world is slowly converging on ML as the language of choice. There remain big opportunities to be exploited in research and education.

Convergence: The world moves inexorably toward ML. Eager, not lazy evaluation. Static, not dynamic, typing. Value-, not object-, oriented. Modules, not classes. Every new language is more “ML-like”.

Convergence: Lots of ML’s and ML-like languages being developed. O’Caml, F#, Scala, Rust; SML#, Manticore. O’Caml is hugely successful in both research and industry.

Convergence: Rich typing supports verification. Polytyping >> Unityping. Not all types are pointed. Useful cost model, especially for parallelism and space usage. Modules are far better than objects.

Standard ML: Standard ML remains important as a vehicle for teaching and research. Intro CS @ CMU is in SML. Lots of extensions proposed. We should consolidate advances and move forward.

Standard ML: SML is a language, not a compiler! It “exists” as a language. Stable, definitive criterion for compatibility. Having a semantics is a huge asset, provided that it can evolve.

Standard ML: At least five compatible compilers: SML/NJ, PolyML, MLKit, MosML, MLton, MLWorks (?). Several important extensions: CML, SML#, Manticore, SMLtoJS, ParallelSML (and probably more). Solid foundation on which to build and develop.

The Way Forward: Correct obvious shortcomings. -

Lecture 21: Calling Conventions

CS216: Program and Data Representation, University of Virginia Computer Science, Spring 2006, David Evans. Lecture 21: Calling Conventions. http://www.cs.virginia.edu/cs216 UVa CS216 Spring 2006 - Lecture 21: Calling Conventions.

Menu: • x86 C Calling Convention • Java Byte Code Wizards

Calling Convention: • Rules for how the caller and the callee make subroutine calls • Caller needs to know: how to pass parameters; what registers might be bashed by called function; what is state of stack after return; where to get result

Calling Convention. Caller: • Where to put params • What registers can I assume are same • Where to find result • State of stack. Callee: • Where to find params • What registers must I not bash • Where to put result • State of stack

Calling Convention: Easiest for Caller. Caller: • Where to put params • What registers can I assume are same: all of them • Where to find result • State of stack. Callee: • Where to find params • What registers must I not bash: none of them • Where to put result • State of stack

x86 C Calling Convention. Caller: • Params on stack in reverse order • Can assume EBX, EDI and ESI are same • Find result in EAX • Stack rules next. Callee: • Find params on stack in reverse order • Must not bash EBX, EDI or ESI • Put result in EAX • Stack rules next. Need to save and restore EBX, EDI and ESI. Need to save and restore (EAX), ECX, EDX. -


Standard ML Mini-Tutorial (In Particular SML/NJ)

Standard ML Mini-tutorial (in particular SML/NJ). Programming Languages CS442. David Toman, School of Computer Science, University of Waterloo. David Toman (University of Waterloo) Standard ML 1 / 21.

Introduction: • SML (Standard Meta Language) ⇒ originally part of the LCF project (Gordon et al.) • Industrial strength PL (SML’90, SML’97) ⇒ based on formal semantics (Milner et al.) • SML “Basis Library” (all you ever wanted) ⇒ based on advanced module system • Quality compilers: ⇒ SML/NJ (Bell Labs) ⇒ Moscow ML

Features: • Everything is built from expressions ⇒ functions are first class citizens ⇒ pretty much extension of our simple functional PL • Support for structured values: lists, trees, ... • Strong type system ⇒ let-polymorphic functions ⇒ type inference • Powerful module system ⇒ signatures, implementations, ADTs, ... • Imperative features (e.g., I/O)

Tutorial Goals: 1 Make link from our functional language to SML 2 Provide enough SML syntax and examples for A2 • How to use SML/NJ interactive environment • How to write simple functional programs • How to define new data types • How to understand compiler errors • Where to find more information 3 Show type inference in action (so we understand what’s coming)

Getting started: • Starting it up: sml in UNIX (click somewhere in W/XP) Example: Standard ML of New Jersey, Version 110.0.7 [CM&CMB] - ⇒ great support in Emacs • Notation and simple -

SML# JSON Support

A Calculus with Partially Dynamic Records for Typeful Manipulation of JSON Objects∗ Atsushi Ohori1,2, Katsuhiro Ueno1,2, Tomohiro Sasaki1,2, and Daisuke Kikuchi1,3 1 Research Institute of Electrical Communication, Tohoku University Sendai, Miyagi, 980-8577, Japan {ohori,katsu,tsasaki,kikuchi}@riec.tohoku.ac.jp 2 Graduate School of Information Sciences, Tohoku University Sendai, Miyagi, 980-8579, Japan 3 Hitachi Solutions East Japan, Ltd. Sendai, Miyagi, 980-0014, Japan [email protected] Abstract This paper investigates language constructs for high-level and type-safe manipulation of JSON objects in a typed functional language. A major obstacle in representing JSON in a static type system is their heterogeneous nature: in most practical JSON APIs, a JSON array is a heterogeneous list consisting of, for example, objects having common fields and possibly some optional fields. This paper presents a typed calculus that reconciles static typing constraints and heterogeneous JSON arrays based on the idea of partially dynamic records originally proposed and sketched by Buneman and Ohori for complex database object manipulation. Partially dynamic records are dynamically typed records, but some parts of their structures are statically known. This feature enables us to represent JSON objects as typed data structures. The proposed calculus smoothly extends with ML-style pattern matching and record polymorphism. These results yield a typed functional language where the programmer can directly import JSON data as terms having static types, and can manipulate them with the full benefits of static polymorphic type-checking. The proposed calculus has been embodied in SML#, an extension of Standard ML with record polymorphism and other practically useful features. -

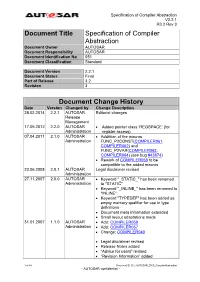

Specification of Compiler Abstraction

Specification of Compiler Abstraction V2.2.1 R3.2 Rev 3

Document Title: Specification of Compiler Abstraction. Document Owner: AUTOSAR. Document Responsibility: AUTOSAR. Document Identification No: 051. Document Classification: Standard. Document Version: 2.2.1. Document Status: Final. Part of Release: 3.2. Revision: 3.

Document Change History

| Date | Version | Changed by | Change Description |
|---|---|---|---|
| 28.02.2014 | 2.2.1 | AUTOSAR Release Management | Editorial changes |
| 17.05.2012 | 2.2.0 | AUTOSAR Administration | Added pointer class ‘REGSPACE’ (for register access) |
| 07.04.2011 | 2.1.0 | AUTOSAR Administration | Addition of the macros FUNC_P2CONST (COMPILER061, COMPILER062) and FUNC_P2VAR (COMPILER063, COMPILER064) (see bug #43874). Rework of COMPILER058 to be compatible to the added macros |
| 23.06.2008 | 2.0.1 | AUTOSAR Administration | Legal disclaimer revised |
| 27.11.2007 | 2.0.0 | AUTOSAR Administration | Keyword "_STATIC_" has been renamed to "STATIC". Keyword "_INLINE_" has been renamed to "INLINE". Keyword "TYPEDEF" has been added as empty memory qualifier for use in type definitions. Document meta information extended. Small layout adaptations made |
| 31.01.2007 | 1.1.0 | AUTOSAR Administration | Add: COMPILER058. Add: COMPILER057. Change: COMPILER040. Legal disclaimer revised. Release Notes added. “Advice for users” revised. “Revision Information” added |
| 27.04.2006 | 1.0.0 | AUTOSAR Administration | Initial Release |

Document ID 051: AUTOSAR_SWS_CompilerAbstraction - AUTOSAR confidential -

Disclaimer: This specification and the material contained in it, as released by AUTOSAR is for the purpose of information only.