Code Access Security

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Domain-Specific Programming Systems

Lecture 22: Domain-Specific Programming Systems. Parallel Computer Architecture and Programming, CMU 15-418/15-618, Spring 2020. Slide acknowledgments: Pat Hanrahan, Zach Devito (Stanford University), Jonathan Ragan-Kelley (MIT, Berkeley). Course themes: designing computer systems that scale (running faster given more resources); designing computer systems that are efficient (running faster under constraints on resources). Techniques discussed: exploiting parallelism in applications; exploiting locality in applications; leveraging hardware specialization (earlier lecture). Claim: most software uses modern hardware resources inefficiently. ▪ Consider a piece of sequential C code; call the performance of this code our “baseline performance” ▪ Well-written sequential C code: ~5-10x faster ▪ Assembly language program: maybe another small constant factor faster ▪ Java, Python, PHP, etc.: ?? (Credit: Pat Hanrahan) [Figure: Code performance relative to C (single core), GCC -O3, no manual vector optimizations. Y-axis: slowdown compared to C++. Languages: Java, Scala, C# (Mono), Haskell, Go, Javascript (V8), Lua, PHP, Python 3, Ruby (JRuby). Benchmarks: NBody, Mandelbrot, Tree Alloc/Dealloc, Power method (compute eigenvalue). Data from: The Computer Language Benchmarks Game, http://shootout.alioth.debian.org] Even good C code is inefficient. Recall Assignment 1’s Mandelbrot program. Consider execution on a high-end laptop: quad-core, Intel Core i7, AVX instructions... Single core, with AVX vector instructions: 5.8x speedup over C implementation. Multi-core + hyper-threading + AVX instructions: 21.7x speedup. Conclusion: basic C implementation compiled with -O3 leaves a lot of performance on the table. Making efficient use of modern machines is challenging (proof by assignments 2, 3, and 4). In our assignments, you only programmed homogeneous parallel computers.

Configure .NET Code-Access Security

© 2002 Visual Studio Magazine Fawcette Technical Publications Issue VSM November 2002 Section Black Belt column Main file name VS0211BBt2.rtf Listing file name -- Sidebar file name -- Table file name VS0211BBtb1.rtf Screen capture file names VS0211BBfX.bmp Infographic/illustration file names VS0211BBf1,2.bmp Photos or book scans ISBN 0596003471 Special instructions for Art dept. Editor LT Status TE’d3 Spellchecked (set Language to English U.S.) * PM review Character count 15,093 + 1,162 online table Package length 3.5 (I think, due to no inline code/listings) ToC blurb Learn how to safely grant assemblies permissions to perform operations with external entities such as the file system, registry, UIs, and more. ONLINE SLUGS Name of Magazine VSM November 2002 Name of feature/column/department Black Belt column 180-character blurb Learn how to safely grant assemblies permissions to perform operations with external entities such as the file system, registry, UIs, and more. 90-character blurb Learn how to safely grant assemblies permissions to perform operations with external entities. 90-character blurb describing download NA Locator+ code for article VS0211BB_T Photo (for columnists) location On file TITLE TAG & METATAGS <title> Visual Studio Magazine – Black Belt - Secure Access to Your .NET Code Configure .NET Code-Access Security </title> <!-- Start META Tags --> <meta name="Category" content=" .NET "> <meta name="Subcategory" content=" C#, Visual Basic .NET "> <meta name="Keywords" content=" .NET, C#, Visual Basic .NET, security permission, security evidence, security policy, permission sets, evidence, security, security permission stack walk, custom permission set, code group "> [[ Please check these and add/subtract as you see fit .]] <meta name="DESCRIPTION" content=" Learn how to grant assemblies permissions to perform operations with external entities such as the file system, registry, UIs, and more.

Eagle: Tcl Implementation in C#

Eagle: Tcl Implementation in C# Joe Mistachkin <[email protected]> 1. Abstract Eagle [1], Extensible Adaptable Generalized Logic Engine, is an implementation of the Tcl [2] scripting language for the Microsoft Common Language Runtime (CLR) [3]. It is designed to be a universal scripting solution for any CLR based language, and is written completely in C# [4]. Superficially, it is similar to Jacl [5], but it was written from scratch based on the design and implementation of Tcl 8.4 [6]. It provides most of the functionality of the Tcl 8.4 interpreter while borrowing selected features from Tcl 8.5 [7] and the upcoming Tcl 8.6 [8] in addition to adding entirely new features. This paper explains how Eagle adds value to both Tcl/Tk and CLR-based applications and how it differs from other “dynamic languages” hosted by the CLR and its cousin, the Microsoft Dynamic Language Runtime (DLR) [9]. It then describes how to use, integrate with, and extend Eagle effectively. It also covers some important implementation details and the overall design philosophy behind them. 2. Introduction This paper presents Eagle, which is an open-source [10] implementation of Tcl for the Microsoft CLR written entirely in C#. The goal of this project was to create a dynamic scripting language that could be used to automate any host application running on the CLR. 3. Rationale and Motivation Tcl makes it relatively easy to script applications written in C [11] and/or C++ [12] and so can also script applications written in many other languages (e.g.

.NET Framework

Advanced Windows Programming: .NET Framework. Based on: A. Troelsen, Pro C# 2005 and .NET 2.0 Platform, 3rd Ed., 2005, Apress; J. Richter, Applied .NET Frameworks Programming, 2002, MS Press; D. Watkins et al., Programming in the .NET Environment, 2002, Addison Wesley; T. Thai, H. Lam, .NET Framework Essentials, 2001, O’Reilly; D. Beyer, C# COM+ Programming, M&T Books, 2001, chapter 1. Krzysztof Mossakowski, Faculty of Mathematics and Information Science, http://www.mini.pw.edu.pl/~mossakow. Contents: the most important features of .NET; assemblies; metadata; Common Type System; Common Intermediate Language; Common Language Runtime; deploying .NET Runtime; garbage collection; serialization. .NET benefits in comparison with Microsoft’s previous technologies: consistent programming model (common OO programming model); simplified programming model (no error codes, GUIDs, IUnknown, etc.); run once, run always (no "DLL hell"); simplified deployment (easy-to-use installation projects); wide platform reach; programming language integration; simplified code reuse; automatic memory management (garbage collection); type-safe verification; rich debugging support (CLR debugging, language independent); consistent method failure paradigm (exceptions); security (code access security); interoperability (using existing COM components, calling Win32 functions).

Starting up an Application Domain

Initial Phases of a Web Request. Before the first line of code you write for an .aspx page executes, both Internet Information Services (IIS) and ASP.NET have performed a fair amount of logic to establish the execution context for a HyperText Transfer Protocol (HTTP) request. IIS may have negotiated security credentials with your browser. IIS will have determined that ASP.NET should process the request and will perform a hand-off of the request to ASP.NET. At that point, ASP.NET performs various one-time initializations as well as per-request initializations. This chapter will describe the initial phases of a Web request and will drill into the various security operations that occur during these phases. In this chapter, you will learn about the following steps that IIS carries out for a request: ❑ The initial request handling and processing performed both by the operating system layer and the ASP.NET Internet Server Application Programming Interface (ISAPI) filter ❑ How IIS handles static content requests versus dynamic ASP.NET content requests ❑ How the ASP.NET ISAPI filter transitions the request from the world of IIS into the ASP.NET world. Having an understanding of the more granular portions of request processing also sets the stage for future chapters that expand on some of the more important security processing that occurs during an ASP.NET request as well as the extensibility points available to you for modifying ASP.NET’s security behavior. This book describes security behavior primarily for Windows Server 2003 running IIS6 and ASP.NET.

Data Driven Software Engineering Track

Judith Bishop, Microsoft Research. [Timeline figure, 2001 to 2010: releases of C#, Spec#, Code Contracts, Java, F#, Ruby on Rails, LINQ, Python, Firefox, IE, Safari, Windows, the DLR, .NET, Rotor, Mono, Mac OS X, Ubuntu Linux, Visual Studio, and Eclipse.] Advantages: • Rapid feedback loop (REPL) • Simultaneous top-down and bottom-up development • Rapid refactoring and code changing • Easy glue code. Uses: • Scripting applications • Building web sites • Test harnesses • Server farm maintenance • One-off utilities or data crunching. C# 1.0 (2001): structs, properties, foreach loops, autoboxing, delegates and events, indexers, operator overloading, enumerated types. C# 2.0 (2005): generics, anonymous methods, iterators, partial types, nullable types, generic delegates. C# 3.0 (2007): implicit typing, anonymous types, object and array initializers, extension methods, lambda expressions, query expressions (LINQ). C# 4.0 (2009): dynamic lookup, named and optional arguments, COM interop, variance. Also listed: with IO in, out and ref parameters formatted output API Serializable std generic Reflection delegates. The dynamic language runtime (DLR) is a runtime environment that adds a set of services for dynamic languages, and dynamic features of statically typed languages, to the common language runtime (CLR) • Dynamic Lookup • Calls, accesses and invocations bypass static type checking and get resolved at runtime • Named, default and optional parameters • COM interop • Variance • Extends type checking in generic types • E.g.

Arquitectura .NET

Distribution and Integration Technologies: .NET Architecture. Traditional architectures, top to bottom: applications (Web, local, distributed, other); GUI services, network services, Web scripts, data access, other services; libraries; execution environment (Posix, Win32, ...); operating system. Java architecture, top to bottom: applications (Web, local, distributed, other); Swing, Enterprise Java Beans, Java Server Pages, JDBC, others; standard Java packages; Java Virtual Machine (JVM); operating system. .NET architecture, top to bottom: applications (Web, local, distributed, other); Windows Forms, Enterprise Services, ASP.NET, ADO.NET, others; .NET Framework Class Library (FCL); Common Language Runtime (CLR); operating system. Common Language Runtime (CLR): All .NET applications use the CLR. The CLR is OO. It is independent from high level languages. The CLR supports: a common set of data types for all languages (CTS); an intermediate language independent from the native code (CIL); a common format for compiled code files (assemblies). All the software developed using the CLR is known as managed code. Common Type System (CTS): All the languages able to generate code for the CLR make use of the CTS (the CLR implements the CTS). There are 2 type categories: value types (simple types, allocated on the stack) and reference types (complex types, allocated in the heap, destroyed automatically). [Figure: stack and heap diagram showing the value 41 on the stack top and, in the heap, the string "abcdef" and an instance of class A with a String field and a Double field holding 271.6.]

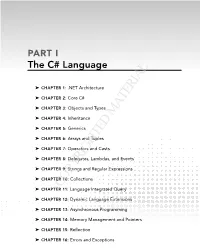

COPYRIGHTED MATERIAL ➤➤ Chapter 11: Language Integrated Query

PART I: The C# Language ➤➤ CHAPTER 1: .NET Architecture ➤➤ CHAPTER 2: Core C# ➤➤ CHAPTER 3: Objects and Types ➤➤ CHAPTER 4: Inheritance ➤➤ CHAPTER 5: Generics ➤➤ CHAPTER 6: Arrays and Tuples ➤➤ CHAPTER 7: Operators and Casts ➤➤ CHAPTER 8: Delegates, Lambdas, and Events ➤➤ CHAPTER 9: Strings and Regular Expressions ➤➤ CHAPTER 10: Collections ➤➤ CHAPTER 11: Language Integrated Query ➤➤ CHAPTER 12: Dynamic Language Extensions ➤➤ CHAPTER 13: Asynchronous Programming ➤➤ CHAPTER 14: Memory Management and Pointers ➤➤ CHAPTER 15: Reflection ➤➤ CHAPTER 16: Errors and Exceptions. 1 .NET Architecture. WHAT’S IN THIS CHAPTER? ➤➤ Compiling and running code that targets .NET ➤➤ Advantages of Microsoft Intermediate Language (MSIL) ➤➤ Value and reference types ➤➤ Data typing ➤➤ Understanding error handling and attributes ➤➤ Assemblies, .NET base classes, and namespaces. CODE DOWNLOADS FOR THIS CHAPTER: There are no code downloads for this chapter. THE RELATIONSHIP OF C# TO .NET: This book emphasizes that the C# language must be considered in parallel with the .NET Framework, rather than viewed in isolation. The C# compiler specifically targets .NET, which means that all code written in C# always runs using the .NET Framework. This has two important consequences for the C# language: 1. The architecture and methodologies of C# reflect the underlying methodologies of .NET. 2. In many cases, specific language features of C# actually depend on features of .NET or of the .NET base classes. Because of this dependence, you must gain some understanding of the architecture and methodology of .NET before you begin C# programming, which is the purpose of this chapter.

INTRODUCTION to .NET FRAMEWORK NET Framework .NET Framework Is a Complete Environment That Allows Developers to Develop, Run, An

INTRODUCTION TO .NET FRAMEWORK. .NET Framework is a complete environment that allows developers to develop, run, and deploy the following applications: console applications; Windows Forms applications; Windows Presentation Foundation (WPF) applications; Web applications (ASP.NET applications); Web services; Windows services; service-oriented applications using Windows Communication Foundation (WCF); workflow-enabled applications using Windows Workflow Foundation (WF). .NET Framework also enables a developer to create sharable components to be used in distributed computing architecture. .NET Framework supports the object-oriented programming model for multiple languages, such as Visual Basic, Visual C#, and Visual C++. .NET Framework supports multiple programming languages in a manner that allows language interoperability. This implies that each language can use the code written in some other language. The main components of .NET Framework: The following are the key components of .NET Framework: .NET Framework Class Library; Common Language Runtime; Dynamic Language Runtimes (DLR); Application Domains; Runtime Host; Common Type System; Metadata and Self-Describing Components; Cross-Language Interoperability; .NET Framework Security; Profiling; Side-by-Side Execution. Microsoft Intermediate Language (MSIL): The .NET Framework is shipped with compilers of all .NET programming languages to develop programs. Each .NET compiler produces an intermediate code after compiling the source code. The intermediate code is common for all languages and is understandable only to the .NET environment. This intermediate code is known as MSIL. IL: Intermediate Language (IL) is also known as MSIL (Microsoft Intermediate Language) or CIL (Common Intermediate Language). All .NET source code is compiled to IL. IL is then converted to machine code at the point where the software is installed, or at run-time by a Just-In-Time (JIT) compiler.

COM and .NET Interoperability

COM and .NET Interoperability, ANDREW TROELSEN. Copyright © 2002 by Andrew Troelsen. All rights reserved. No part of this work may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or by any information storage or retrieval system, without the prior written permission of the copyright owner and the publisher. ISBN (pbk): 1-59059-011-2. Printed and bound in the United States of America. Trademarked names may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, we use the names only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. Technical Reviewers: Habib Heydarian, Eric Gunnerson. Editorial Directors: Dan Appleman, Peter Blackburn, Gary Cornell, Jason Gilmore, Karen Watterson, John Zukowski. Managing Editor: Grace Wong. Copy Editors: Anne Friedman, Ami Knox. Proofreaders: Nicole LeClerc, Sofia Marchant. Compositor: Diana Van Winkle, Van Winkle Design. Artist: Kurt Krames. Indexer: Valerie Robbins. Cover Designer: Tom Debolski. Marketing Manager: Stephanie Rodriguez. Distributed to the book trade in the United States by Springer-Verlag New York, Inc., 175 Fifth Avenue, New York, NY, 10010 and outside the United States by Springer-Verlag GmbH & Co. KG, Tiergartenstr. 17, 69112 Heidelberg, Germany. In the United States, phone 1-800-SPRINGER, email [email protected], or visit http://www.springer-ny.com. Outside the United States, fax +49 6221 345229, email [email protected], or visit http://www.springer.de.

Programming with Windows Forms

APPENDIX A: Programming with Windows Forms. Since the release of the .NET platform (circa 2001), the base class libraries have included a particular API named Windows Forms, represented primarily by the System.Windows.Forms.dll assembly. The Windows Forms toolkit provides the types necessary to build desktop graphical user interfaces (GUIs), create custom controls, manage resources (e.g., string tables and icons), and perform other desktop-centric programming tasks. In addition, a separate API named GDI+ (represented by the System.Drawing.dll assembly) provides additional types that allow programmers to generate 2D graphics, interact with networked printers, and manipulate image data. The Windows Forms (and GDI+) APIs remain alive and well within the .NET 4.0 platform, and they will exist within the base class library for quite some time (arguably forever). However, Microsoft has shipped a brand new GUI toolkit called Windows Presentation Foundation (WPF) since the release of .NET 3.0. As you saw in Chapters 27-31, WPF provides a massive amount of horsepower that you can use to build bleeding-edge user interfaces, and it has become the preferred desktop API for today’s .NET graphical user interfaces. The point of this appendix, however, is to provide a tour of the traditional Windows Forms API. One reason it is helpful to understand the original programming model: you can find many existing Windows Forms applications out there that will need to be maintained for some time to come. Also, many desktop GUIs simply might not require the horsepower offered by WPF.

.NET Technology Guide for Business Applications

.NET Technology Guide for Business Applications Professional Cesar de la Torre David Carmona Visit us today at microsoftpressstore.com • Hundreds of titles available – Books, eBooks, and online resources from industry experts • Free U.S. shipping • eBooks in multiple formats – Read on your computer, tablet, mobile device, or e-reader • Print & eBook Best Value Packs • eBook Deal of the Week – Save up to 60% on featured titles • Newsletter and special offers – Be the first to hear about new releases, specials, and more • Register your book – Get additional benefits Hear about it first. Get the latest news from Microsoft Press sent to your inbox. • New and upcoming books • Special offers • Free eBooks • How-to articles Sign up today at MicrosoftPressStore.com/Newsletters Wait, there’s more... Find more great content and resources in the Microsoft Press Guided Tours app. The Microsoft Press Guided Tours app provides insightful tours by Microsoft Press authors of new and evolving Microsoft technologies. • Share text, code, illustrations, videos, and links with peers and friends • Create and manage highlights and notes • View resources and download code samples • Tag resources as favorites or to read later • Watch explanatory videos • Copy complete code listings and scripts Download from Windows Store Free ebooks From technical overviews to drilldowns on special topics, get free ebooks from Microsoft Press at: www.microsoftvirtualacademy.com/ebooks Download your free ebooks in PDF, EPUB, and/or Mobi for Kindle formats. Look for other great resources at Microsoft Virtual Academy, where you can learn new skills and help advance your career with free Microsoft training delivered by experts.