A Pairwise Comparison of DNA Sequence Alignment Using an OpenMP Implementation of the SWAMP Parallel Smith-Waterman Algorithm



Recommended publications


To Find Information About Arabidopsis Genes Leonore Reiser1, Shabari

UNIT 1.11 Using The Arabidopsis Information Resource (TAIR) to Find Information About Arabidopsis Genes Leonore Reiser1, Shabari Subramaniam1, Donghui Li1, and Eva Huala1 1Phoenix Bioinformatics, Redwood City, CA USA ABSTRACT The Arabidopsis Information Resource (TAIR; http://arabidopsis.org) is a comprehensive Web resource of Arabidopsis biology for plant scientists. TAIR curates and integrates information about genes, proteins, gene function, orthologs, gene expression, mutant phenotypes, biological materials such as clones and seed stocks, genetic markers, genetic and physical maps, genome organization, images of mutant plants, protein sub-cellular localizations, publications, and the research community. The various data types are extensively interconnected and can be accessed through a variety of Web-based search and display tools. This unit primarily focuses on some basic methods for searching, browsing, visualizing, and analyzing information about Arabidopsis genes and genome. Additionally, we describe how members of the community can share data using TAIR’s Online Annotation Submission Tool (TOAST), in order to make their published research more accessible and visible. Keywords: Arabidopsis ● databases ● bioinformatics ● data mining ● genomics INTRODUCTION The Arabidopsis Information Resource (TAIR; http://arabidopsis.org) is a comprehensive Web resource for the biology of Arabidopsis thaliana (Huala et al., 2001; Garcia-Hernandez et al., 2002; Rhee et al., 2003; Weems et al., 2004; Swarbreck et al., 2008; Lamesch et al., 2010; Berardini et al., 2016). The TAIR database contains information about genes, proteins, gene expression, mutant phenotypes, germplasms, clones, genetic markers, genetic and physical maps, genome organization, publications, and the research community. In addition, seed and DNA stocks from the Arabidopsis Biological Resource Center (ABRC; Scholl et al., 2003) are integrated with genomic data, and can be ordered through TAIR.

Orthofiller: Utilising Data from Multiple Species to Improve the Completeness of Genome Annotations Michael P

Dunne and Kelly BMC Genomics (2017) 18:390 DOI 10.1186/s12864-017-3771-x SOFTWARE Open Access OrthoFiller: utilising data from multiple species to improve the completeness of genome annotations Michael P. Dunne and Steven Kelly* Abstract Background: Complete and accurate annotation of sequenced genomes is of paramount importance to their utility and analysis. Differences in gene prediction pipelines mean that genome annotations for a species can differ considerably in the quality and quantity of their predicted genes. Furthermore, genes that are present in genome sequences sometimes fail to be detected by computational gene prediction methods. Erroneously unannotated genes can lead to oversights and inaccurate assertions in biological investigations, especially for smaller-scale genome projects, which rely heavily on computational prediction. Results: Here we present OrthoFiller, a tool designed to address the problem of finding and adding such missing genes to genome annotations. OrthoFiller leverages information from multiple related species to identify those genes whose existence can be verified through comparison with known gene families, but which have not been predicted. By simulating missing gene annotations in real sequence datasets from both plants and fungi we demonstrate the accuracy and utility of OrthoFiller for finding missing genes and improving genome annotations. Furthermore, we show that applying OrthoFiller to existing “complete” genome annotations can identify and correct substantial numbers of erroneously missing genes in these two sets of species. Conclusions: We show that significant improvements in the completeness of genome annotations can be made by leveraging information from multiple species. Keywords: Genome annotation, Gene prediction, Orthology, Orthogroup Background Genome sequences have become fundamental to many … of several effective algorithms for identifying genes in de novo sequenced genomes [3].

Infravec2 Open Research Data Management Plan

INFRAVEC2 OPEN RESEARCH DATA MANAGEMENT PLAN Authors: Andrea Crisanti, Gareth Maslen, Andy Yates, Paul Kersey, Alain Kohl, Clelia Supparo, Ken Vernick Date: 10th July 2020 Version: 3.0 Overview Infravec2 will align to Open Research Data, as follows: Data Types and Standards Infravec2 will generate a variety of data types, including molecular data types: genome sequence and assembly, structural annotation (gene models, repeats, other functional regions) and functional annotation (protein function assignment), variation data, and transcriptome data; arbovirus and malaria experimental infection data, linked to archived samples; and microbiome data (Operational Taxonomic Units), including natural virome composition. All data will be released according to the appropriate standards and formats for each data type. For example, DNA sequence will be released in FASTA format; variant calls in Variant Call Format; sequence alignments in BAM (Binary Alignment Map) and CRAM (Compressed Read Alignment Map) formats, etc. We will strongly encourage the organisation of linked data sets as Track Hubs, a mechanism for publishing a set of linked genomic data that aids data discovery, sharing, and selection for subsequent analysis. We will develop internal standards within the consortium to define minimal metadata that will accompany all data sets, following the template of the Minimal Information Standards for Biological and Biomedical Investigations (http://www.dcc.ac.uk/resources/metadata-standards/mibbi-minimum-information-biological-and-biomedical-investigations). Data Exploitation, Accessibility, Curation and Preservation All molecular data for which existing public data repositories exist will be submitted to such repositories on or before the publication of written manuscripts, with early release of data (i.e.

Lab 5: Bioinformatics I Sanger Sequence Analysis

Lab 5: Bioinformatics I Sanger Sequence Analysis Project Guide The Wolbachia Project 1 Arthropod Identification 2 DNA Extraction 3 PCR 4 Gel Electrophoresis 5 Bioinformatics Content is made available under the Creative Commons Attribution-NonCommercial-No Derivatives International License. Contact ([email protected]) if you would like to make adaptations for distribution beyond the classroom. The Wolbachia Project: Discover the Microbes Within! was developed by a collaboration of scientists, educators, and outreach specialists. It is directed by the Bordenstein Lab at Vanderbilt University. https://www.vanderbilt.edu/wolbachiaproject Activity at a Glance Goal To analyze and interpret the quality of Sanger sequences, and generate a consensus DNA sequence for bioinformatics analyses. Learning Objectives Upon completion of this activity, students will (i) understand the Sanger method of sequencing, also known as the chain-termination method; (ii) be able to interpret chromatograms; (iii) evaluate sequencing Quality Scores; and (iv) generate a consensus DNA sequence based on forward and reverse Sanger reactions. Prerequisite Skills While no computer programming skills are necessary to complete this work, prior exposure to personal computers and the Internet is assumed. Teaching Time: One class period Recommended Background Tutorials • DNA Learning Center Animation: Sanger Method of DNA Sequencing (https://www.dnalc.org/view/15479-sanger-method-of-dna-sequencing-3d-animation-with-narration.html) • YouTube video: The Sanger

Introduction to Bioinformatics (Elective) – SBB1609

SCHOOL OF BIO AND CHEMICAL ENGINEERING DEPARTMENT OF BIOTECHNOLOGY Unit 1 – Introduction to Bioinformatics (Elective) – SBB1609 I HISTORY OF BIOINFORMATICS Bioinformatics is an interdisciplinary field that develops methods and software tools for understanding biological data. As an interdisciplinary field of science, bioinformatics combines computer science, statistics, mathematics, and engineering to analyze and interpret biological data. Bioinformatics has been used for in silico analyses of biological queries using mathematical and statistical techniques. Bioinformatics derives knowledge from computer analysis of biological data. These can consist of the information stored in the genetic code, but also experimental results from various sources, patient statistics, and scientific literature. Research in bioinformatics includes method development for storage, retrieval, and analysis of the data. Bioinformatics is a rapidly developing branch of biology and is highly interdisciplinary, using techniques and concepts from informatics, statistics, mathematics, chemistry, biochemistry, physics, and linguistics. It has many practical applications in different areas of biology and medicine. Bioinformatics: Research, development, or application of computational tools and approaches for expanding the use of biological, medical, behavioral or health data, including those to acquire, store, organize, archive, analyze, or visualize such data. Computational Biology: The development and application of data-analytical and theoretical methods, mathematical modeling and computational simulation techniques to the study of biological, behavioral, and social systems.
"Classical" bioinformatics: "The mathematical, statistical and computing methods that aim to solve biological problems using DNA and amino acid sequences and related information." The National Center for Biotechnology Information (NCBI 2001) defines bioinformatics as: "Bioinformatics is the field of science in which biology, computer science, and information technology merge into a single discipline.

A Tool to Sanity Check and If Needed Reformat FASTA Files

bioRxiv preprint doi: https://doi.org/10.1101/024448; this version posted August 13, 2015. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY 4.0 International license. Fasta-O-Matic: a tool to sanity check and if needed reformat FASTA files Jennifer Shelton Kansas State University August 11, 2015 Abstract As the sheer volume of bioinformatic sequence data increases, the only way to take advantage of this content is to more completely automate robust analysis workflows. Analysis bottlenecks are often mundane and overlooked processing steps. Idiosyncrasies in reading and/or writing bioinformatics file formats can halt or impair analysis workflows by interfering with the transfer of data from one informatics tool to another. Fasta-O-Matic automates handling of common but minor format issues that otherwise may halt pipelines. The need for automation must be balanced by the need for manual confirmation that any formatting error is actually minor rather than indicative of a corrupt data file. To that end Fasta-O-Matic reports any issues detected to the user with optionally color coded and quiet or verbose logs. Fasta-O-Matic can be used as a general pre-processing tool in bioinformatics workflows (e.g. to automatically wrap FASTA files so that they can be read by BioPerl). It was also developed as a sanity check for bioinformatic core facilities that tend to repeat common analysis steps on FASTA files received from disparate sources.
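The wrapping step mentioned in the abstract above can be illustrated with a minimal sketch. This is not Fasta-O-Matic's code; the function name `wrap_fasta` and the default width of 60 characters are assumptions chosen for illustration only.

```python
from typing import Iterable, Iterator


def wrap_fasta(lines: Iterable[str], width: int = 60) -> Iterator[str]:
    """Yield FASTA lines with each record's sequence re-wrapped to `width`.

    Hypothetical helper: header lines (starting with '>') pass through
    unchanged; the sequence lines of a record are joined and re-split.
    """
    chunks = []  # sequence fragments collected for the current record

    def flush() -> Iterator[str]:
        seq = "".join(chunks)
        for start in range(0, len(seq), width):
            yield seq[start:start + width]

    for raw in lines:
        line = raw.strip()
        if line.startswith(">"):
            # A new header closes the previous record, if any.
            yield from flush()
            chunks.clear()
            yield line
        elif line:
            chunks.append(line)
    # Emit the sequence of the final record.
    yield from flush()


wrapped = list(wrap_fasta([">seq1", "ACGTACGTAC", "GT"], width=5))
print("\n".join(wrapped))
```

Slicing by index rather than relying on a text-wrapping utility keeps gap characters such as `-` from influencing where lines break.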

Genbank Dennis A

Published online 28 November 2016 Nucleic Acids Research, 2017, Vol. 45, Database issue D37–D42 doi: 10.1093/nar/gkw1070 GenBank Dennis A. Benson, Mark Cavanaugh, Karen Clark, Ilene Karsch-Mizrachi, David J. Lipman, James Ostell and Eric W. Sayers* National Center for Biotechnology Information, National Library of Medicine, National Institutes of Health, Building 38A, 8600 Rockville Pike, Bethesda, MD 20894, USA Received September 15, 2016; Revised October 19, 2016; Editorial Decision October 24, 2016; Accepted November 07, 2016 ABSTRACT GenBank® (www.ncbi.nlm.nih.gov/genbank/) is a comprehensive database that contains publicly available nucleotide sequences for 370 000 formally described species. These sequences are obtained primarily through submissions from individual laboratories and batch submissions from large-scale sequencing projects, including whole genome shotgun (WGS) and environmental sampling projects. Most submissions are made using the web-based BankIt or the NCBI Submission Portal. GenBank staff assign accession numbers upon data receipt. … data from sequencing centers. The U.S. Patent and Trademark Office also contributes sequences from issued patents. GenBank participates with the EMBL-EBI European Nucleotide Archive (ENA) (2) and the DNA Data Bank of Japan (DDBJ) (3) as a partner in the International Nucleotide Sequence Database Collaboration (INSDC) (4). The INSDC partners exchange data daily to ensure that a uniform and comprehensive collection of sequence information is available worldwide. NCBI makes GenBank data available at no cost over the Internet, through FTP and a wide range of Web-based retrieval and analysis services (5).

The FASTA Program Package Introduction

fasta-36.3.8 December 4, 2020 The FASTA program package Introduction This documentation describes version 36 of the FASTA program package (see W. R. Pearson and D. J. Lipman (1988), “Improved Tools for Biological Sequence Analysis”, PNAS 85:2444-2448 [17]; W. R. Pearson (1996) “Effective protein sequence comparison” Meth. Enzymol. 266:227-258 [15]; and Pearson et al. (1997) Genomics 46:24-36 [18]). Version 3 of the FASTA package contains many programs for searching DNA and protein databases and for evaluating statistical significance from randomly shuffled sequences. This document is divided into four sections: (1) A summary overview of the programs in the FASTA3 package; (2) A guide to using the FASTA programs; (3) A guide to installing the programs and databases. Section (4) provides answers to some Frequently Asked Questions (FAQs). In addition to this document, the changes v36.html, changes v35.html and changes v34.html files list functional changes to the programs. The readme.v30..v36 files provide a more complete revision history of the programs, including bug fixes. The programs are easy to use; if you are using them on a machine that is administered by someone else, you can focus on sections (1) and (2) to learn how to use the programs. If you are installing the programs on your own machine, you will need to read section (3) carefully. FASTA and BLAST – FASTA and BLAST have the same goal: to identify statistically significant sequence similarity that can be used to infer homology. The FASTA programs offer several advantages over BLAST: 1.

The Candida Genome Database: the New Homology Information Page Highlights Protein Similarity and Phylogeny Jonathan Binkley, Martha B

Published online 31 October 2013 Nucleic Acids Research, 2014, Vol. 42, Database issue D711–D716 doi:10.1093/nar/gkt1046 The Candida Genome Database: The new homology information page highlights protein similarity and phylogeny Jonathan Binkley, Martha B. Arnaud*, Diane O. Inglis, Marek S. Skrzypek, Prachi Shah, Farrell Wymore, Gail Binkley, Stuart R. Miyasato, Matt Simison and Gavin Sherlock Department of Genetics, Stanford University Medical School, Stanford, CA 94305-5120, USA Received September 12, 2013; Revised October 9, 2013; Accepted October 10, 2013 ABSTRACT The Candida Genome Database (CGD, http://www.candidagenome.org/) is a freely available online resource that provides gene, protein and sequence information for multiple Candida species, along with web-based tools for accessing, analyzing and exploring these data. The goal of CGD is to facilitate and accelerate research into Candida pathogenesis and biology. The CGD Web site is organized around Locus pages, which display information collected about individual genes. Locus pages have multiple … mammalian hosts as well as a pathogen that causes painful opportunistic mucosal infections in otherwise healthy individuals and causes severe and deadly bloodstream infections in the susceptible severely ill and/or immunocompromised patient population (2). This fungus exhibits a number of properties associated with the ability to invade host tissue, to resist the effects of antifungal therapeutic drugs and the human immune system and to alternately cause disease or coexist with the host as a commensal, including the ability to grow in multiple morphological forms and to switch between them, and the ability to grow as drug-resistant biofilms (3–7).

The Dynamic Landscape of Fission Yeast Meiosis Alternative-Splice Isoforms

Downloaded from genome.cshlp.org on October 8, 2021 - Published by Cold Spring Harbor Laboratory Press The dynamic landscape of fission yeast meiosis alternative-splice isoforms Zheng Kuang1,2, Jef D. Boeke1,2* and Stefan Canzar3,4* Affiliations 1Institute for Systems Genetics, NYU Langone Medical Center, New York City, NY 10016, USA 2Department of Biochemistry and Molecular Pharmacology, NYU Langone Medical Center, New York City, NY 10016, USA 3Toyota Technological Institute at Chicago, Chicago, IL 60637, USA. 4Gene Center, Ludwig-Maximilians-Universität München, Munich, Germany. *Corresponding authors Contact: Jef D. Boeke: [email protected] Stefan Canzar: [email protected] Running title: Isoform landscape in fission yeast meiosis Keywords: alternative splicing, isoforms, fission yeast, meiosis, dynamic transcriptome Abstract Alternative splicing increases the diversity of transcriptomes and proteomes in metazoans. The extent to which alternative splicing is active and functional in unicellular organisms is less understood. Here we exploit a single-molecule long-read sequencing technique and develop an open-source software program called SpliceHunter to characterize the transcriptome in the meiosis of fission yeast. We reveal 14353 alternative splicing events in 17669 novel isoforms at different stages of meiosis, including antisense and read-through transcripts. Intron retention is the major type of alternative splicing, followed by alternate “intron in exon”. 770 novel transcription units are detected; 53 of the predicted proteins show homology in other species and form theoretical stable structures. We report the complexity of alternative splicing along isoforms, including 683 intramolecularly co-associated intron pairs.

BLAST Practice

Using BLAST BLAST (Basic Local Alignment Search Tool) is an online search tool provided by NCBI (National Center for Biotechnology Information). It allows you to “find regions of similarity between biological sequences” (nucleotide or protein). The NCBI maintains a huge database of biological sequences, against which it compares the query sequences in order to find the most similar ones. Using BLAST, you can input a gene sequence of interest and search entire genomic libraries for identical or similar sequences in a matter of seconds. The amount of information on the BLAST website is a bit overwhelming, even for the scientists who use it on a frequent basis! You are not expected to know every detail of the BLAST program. BLAST results have the following fields: E value: The E value (expected value) is a number that describes how many times you would expect a match by chance in a database of that size. The lower the E value is, the more significant the match. Percent Identity: The percent identity is a number that describes how similar the query sequence is to the target sequence (how many characters in each sequence are identical). The higher the percent identity is, the more significant the match. Query Cover: The query cover is a number that describes how much of the query sequence is covered by the target sequence. If the target sequence in the database spans the whole query sequence, then the query cover is 100%. This tells us how long the sequences are, relative to each other. FASTA format FASTA format is used to represent either nucleotide or peptide sequences.
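The Percent Identity field described above can be made concrete with a small sketch. It assumes two already-aligned strings of equal length, with `-` marking gaps, and divides identical positions by the alignment length; the function name is hypothetical, and BLAST's own reporting may differ in detail.

```python
def percent_identity(aligned_a: str, aligned_b: str) -> float:
    """Percentage of alignment columns where both sequences share a residue.

    Assumption: inputs are pre-aligned, equal-length strings, '-' is a gap,
    and the denominator is the full alignment length.
    """
    if len(aligned_a) != len(aligned_b):
        raise ValueError("aligned sequences must have equal length")
    if not aligned_a:
        return 0.0
    # Count columns that match and are not gap-against-gap.
    matches = sum(
        1 for a, b in zip(aligned_a, aligned_b) if a == b and a != "-"
    )
    return 100.0 * matches / len(aligned_a)


# Four of the six columns are identical.
print(round(percent_identity("ACGT-A", "ACCTTA"), 2))
```

Other conventions divide by the shorter sequence length or by the number of ungapped columns, so the denominator should be stated whenever such a figure is reported.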

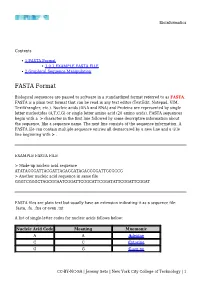

FASTA Format 1.0.1 EXAMPLE FASTA FILE 2 Graphical Sequence Manipulation

Bioinformatics (CC-BY-NC-SA | Jeremy Seto | New York City College of Technology)

Contents: 1 FASTA Format; 1.0.1 EXAMPLE FASTA FILE; 2 Graphical Sequence Manipulation

FASTA Format

Biological sequences are passed to software in a standardized format referred to as FASTA. FASTA is a plain text format that can be read in any text editor (TextEdit, Notepad, VIM, TextWrangler, etc.). Nucleic acids (DNA and RNA) are represented by single-letter nucleotide codes (A, T, C, G) and proteins by single-letter amino acid codes (20 amino acids). A FASTA sequence begins with a > character in the first line, followed by some descriptive information about the sequence, like a sequence name. The next line consists of the sequence information. A FASTA file can contain multiple sequence entries, each demarcated by a new line and a title line beginning with > .

EXAMPLE FASTA FILE

> Made-up nucleic acid sequence
ATATAGGGATTAGGATTAGAGGATAGAGGGGATTGCGCCG
> Another nucleic acid sequence in same file
GGGTCGGGCTAGCGGAATCGGATTCGGCATTCGGATATTCGGATTCGGAT

FASTA files are plain text but usually have an extension marking them as sequence files: .fasta, .fa, .fna or even .txt

A list of single-letter codes for nucleic acids follows below:

| Nucleic Acid Code | Meaning | Mnemonic |
|---|---|---|
| A | A | Adenine |
| C | C | Cytosine |
| G | G | Guanine |
| T | T | Thymine |
| U | U | Uracil |
| R | A or G | puRine |
| Y | C, T or U | pYrimidines |
| K | G, T or U | bases which are Ketones |
| M | A or C | bases with aMino groups |
| S | C or G | Strong interaction |
| W | A, T or U | Weak interaction |
| B | not A (i.e. | |
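The FASTA layout described in this excerpt (a title line beginning with `>` followed by sequence lines) can be read with a few lines of code. The sketch below is a minimal illustration under that description only; `parse_fasta` is a hypothetical name, and real files may need extra handling (duplicate titles, comments, validation of the letter codes).

```python
from typing import Dict, Iterable


def parse_fasta(lines: Iterable[str]) -> Dict[str, str]:
    """Map each title (text after '>') to its concatenated sequence.

    Hypothetical helper: assumes titles are unique within the input.
    """
    records: Dict[str, str] = {}
    title = None
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # skip blank lines between entries
        if line.startswith(">"):
            title = line[1:].strip()
            records[title] = ""
        elif title is not None:
            # Sequence data may span several lines; join them.
            records[title] += line
    return records


example = """\
> Made-up nucleic acid sequence
ATATAGGGATTAGGATTAGAGGATAGAGGGGATTGCGCCG
> Another nucleic acid sequence in same file
GGGTCGGGCTAGCGGAATCGGATTCGGCATTCGGATATTCGGATTCGGAT
"""

records = parse_fasta(example.splitlines())
for name, seq in records.items():
    print(f"{name}: {len(seq)} bases")
```

A dictionary keeps the sketch short; a list of (title, sequence) pairs would be the safer choice when titles can repeat.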