Master Thesis Long-Term Fault Tolerant Storage of Critical Sensor Data in the Intercloud

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Nasuni Cloud File Services™ the Modern, Cloud-Based Platform to Store, Protect, Synchronize, and Collaborate on Files Across All Locations at Scale

Data Sheet: Nasuni Cloud File Services™. The modern, cloud-based platform to store, protect, synchronize, and collaborate on files across all locations at scale.

Solution Overview: Nasuni® Cloud File Services™ enables organizations to store, protect, synchronize and collaborate on files across all locations at scale. Licensed as stand-alone or bundled services, the Nasuni platform modernizes primary and archive file storage, file backup, and disaster recovery, while offering advanced capabilities for multi-site file synchronization. It is also the first platform that automatically reduces costs as files age so data never has to be migrated again. With Nasuni, IT gains a more scalable, affordable, manageable, and highly available file infrastructure that meets cloud-first objectives and eliminates tedious NAS migrations and forklift upgrades. End users gain limitless capacity for group shares, project directories, and home drives; LAN-speed access to files in any edge location; and fast recovery of files to any point in time.

What is Nasuni? Nasuni Cloud File Services is a software-defined platform that leverages the low cost, scalability, and durability of Amazon Simple Storage Service (Amazon S3) and Nasuni’s cloud-based UniFS® global file system to transform file storage. Just like device-based file systems were needed to make disk storage usable for storing files, Nasuni UniFS overcomes obstacles inherent in Amazon S3 related to latency, file retrieval, and organization to make object storage usable for storing files, with key features like:
• Fast access to files in any edge location.
• Legacy application support, without app rewrites.
• Familiar hierarchical folder structure for fast search.
• Unified platform for primary and archive file storage

Data Migration for Large Scientific Datasets in Clouds

Azerbaijan Journal of High Performance Computing, Vol 1, Issue 1, 2018, pp. 66-86 https://doi.org/10.32010/26166127.2018.1.1.66.86 Data Migration for Large Scientific Datasets in Clouds. Akos Hajnal, Eniko Nagy, Peter Kacsuk and Istvan Marton, Institute for Computer Science and Control, Hungarian Academy of Sciences (MTA SZTAKI), Budapest, Hungary. Correspondence: Peter Kacsuk, Institute for Computer Science and Control, Hungarian Academy of Sciences (MTA SZTAKI), Budapest, Hungary, peter.kacsuk@sztaki.mta.hu

Abstract: Transferring large data files between various storages, including cloud storages, is an important task for both academic and commercial users. This should be done in an efficient and secure way. The paper describes Data Avenue, which fulfills all these conditions. Data Avenue can efficiently transfer large files, even in the range of terabytes, among storages with very different access protocols (Amazon S3, OpenStack Swift, SFTP, SRM, iRODS, etc.). It can be used in personal, organizational and public deployments with all the security mechanisms required for these usage configurations. Data Avenue can be used through a GUI as well as through a REST API. The paper describes in detail all these features and usage modes of Data Avenue and also provides performance measurement results proving the efficiency of the tool, which can be accessed and used via several public web pages.

Keywords: data management, data transfer, data migration, cloud storage

1. Introduction: In the last 20 years, collecting and processing large scientific data sets has been gaining ever-increasing importance.

Nasuni for Business Continuity

Solution Brief: Nasuni for Business Continuity. +1.857.444.8500, nasuni.com

Modern Cloud File Services Enables Remote Access to Critical File Assets and Remote Administration of File Infrastructure Anytime, Anywhere

A key part of business continuity is maintaining access to critical business content no matter what happens. Files are the fastest-growing business content in almost every industry. Providing remote file access, then, is a first step toward supporting a “Work From Anywhere” model that can sustain business operations through one-off hardware failures, local outages, regional office disasters, or global pandemics. A remotely accessible file infrastructure that enables remote work must also be remotely manageable. IT departments must be able to provision new file storage capacity, create file shares, map drives, recover data, and more without needing skilled administrators “on the ground” in a datacenter or back office.

Consolidation of Primary File Data in Cloud Storage with Fast, Edge Access: Highly resilient file storage is a fundamental building block for continuous file access. Nasuni stores the “gold copies” of all file data in cloud object storage such as Azure Blob, Amazon S3, or Google Cloud Storage instead of legacy block storage. This approach leverages the superior durability, scalability, and availability of object storage to provision limitless file sharing capacity, at substantially lower cost. Nasuni Edge Appliances, lightweight virtual machines that cache copies of just the frequently accessed files from cloud storage, can be deployed for as many offices as needed to give workers access

Summary of Nasuni Capabilities for Business Continuity and Remote Work:
• Remote File Access Anywhere, Anytime through standard drive mappings or Web browser
• Object Storage Durability, Scalability, and Economics using Azure, AWS, and Google cloud storage
• Built-in Backup with Better RPO/RTO with continuous file versioning to cloud storage
• Rapid, Low-Cost DR that restores file shares in <15 minutes without

Cloud Computing for Logistics 12

The Supply Chain Cloud: Your Guide to Contracts, Standards, Solutions. www.thesupplychaincloud.com

Contents:
• EUROPEAN COMMISSION: Unleashing the Potential of Cloud Computing
• TRADE TECH: Cloud Solutions for Trade Security Implications for the Overall Supply Chain
• CLOUD SECURITY ALLIANCE: Cloud Computing: It’s a Matter of Transparency and Education
• FRAUNHOFER INSTITUTE: The Logistics Mall – Cloud Computing for Logistics
• AXIT AG connecting logistics: Supply Chain Management at the Push of a Button
• INTEGRATION POINT: Global Trade Management and the Cloud – Does It Work?
• THE OPEN GROUP: Cloud Computing Open Standards
• WORKBOOKS: CRM As A Service for Supply Chain Management
• GREENQLOUD: Sustainability in the Data Supply Chain
• CLOUD INDUSTRY FORUM: Why the need for recognised Cloud certification?
• COVISINT: Moving to the Cloud
• CLOUD STANDARDS CUSTOMER COUNCIL: Applications in the Cloud – Adopt, Migrate, or Build?
• FABASOFT: “United Clouds of Europe” for the Supply Chain Industry
• EUROCLOUD: How Cloud Computing will Revolutionise the European Economy
• BP DELIVERY: The cloud value chain needs services brokers

EUROPEAN COMMISSION: Unleashing the Potential of Cloud Computing. Cloud computing can bring significant advantages to citizens, businesses, public administrations and other organisations in Europe. It allows cost savings, efficiency boosts, user-friendliness and accelerated innovation. However, today cloud computing benefits cannot be fully exploited, given a number of uncertainties and challenges that the use of cloud computing services brings. In September 2012, the European Commission announced its Cloud Computing Strategy, a new strategy to boost European business and government productivity via cloud computing.

Cutting Through The Jungle of Standards: The cloud market sees a number of standards, covering different

Nasuni for Pharmaceuticals

Solution Brief: Nasuni for Pharmaceuticals. +1.857.444.8500, nasuni.com

Accelerate Research Collaboration with the Power of the Cloud

Now more than ever, pharmaceutical companies need to collaborate efficiently across research and development centers, manufacturing facilities, and regulatory management centers. Critical research and manufacturing processes generate terabytes of unstructured data that needs to be shared quickly and protected securely. For IT, this requirement puts a tangible stress on traditionally siloed storage hardware and network bandwidth resources. Supporting unstructured data sharing forces IT to add storage capacity at an unpredictable rate, then bolt on expensive networking capabilities. Yet failing to keep up can impact both business and research progress. Long delays waiting for research files and manufacturing data to

Nasuni Cloud File Storage for Pharmaceuticals: Major pharmaceutical companies have solved their unstructured data storage, sharing, security, and protection challenges with Nasuni.

Immutable Storage in the Cloud: Nasuni enables pharmaceutical companies to consolidate all their file data in cloud object storage (e.g. Microsoft Azure Blob Storage, Amazon S3, Google Cloud Storage) instead of trapping it in silos of traditional, on-premises file servers or Network-Attached Storage (NAS). Free from physical device constraints, the combination of Nasuni and cloud object storage offers limitless, scalable capacity at lower costs than on-premises storage.

Key benefits:
• Faster Collaboration between global research and manufacturing locations
• Unlimited Cloud Storage On-Demand in Microsoft Azure, AWS, IBM Cloud, Google Cloud or others eliminates on-premises NAS & file servers
• 50% Cost Savings when compared to traditional NAS & backup solutions
• End-to-End Encryption secures research and manufacturing data

Utrecht University Dynamic Automated Selection and Deployment Of

Utrecht University, Master Thesis Business Informatics: Dynamic Automated Selection and Deployment of Software Components within a Heterogeneous Multi-Platform Environment. June 10, 2015, Version 1.0. Author: Sander Knape, Master of Business Informatics, Utrecht University. Supervisors: dr. S. Jansen (Utrecht University), dr.ir. J.M.E.M. van der Werf (Utrecht University), dr. S. van Dijk (ORTEC).

Additional Information. Thesis title: Dynamic Automated Selection and Deployment of Software Components within a Heterogeneous Multi-Platform Environment. Author: S. Knape. Student ID: 3220958. First supervisor: dr. S. Jansen, Universiteit Utrecht, Department of Information and Computing Sciences, Buys Ballot Laboratory, office 584, Princetonplein 5, De Uithof, 3584 CC Utrecht. Second supervisor: dr.ir. J.M.E.M. van der Werf, Universiteit Utrecht, Department of Information and Computing Sciences, Buys Ballot Laboratory, office 584, Princetonplein 5, De Uithof, 3584 CC Utrecht. External supervisor: dr. S. van Dijk, Manager Technology & Innovation, ORTEC, Houtsingel 5, 2719 EA Zoetermeer.

Declaration of Authorship. I, Sander Knape, declare that this thesis titled 'Dynamic Automated Selection and Deployment of Software Components within a Heterogeneous Multi-Platform Environment' and the work presented in it are my own. I confirm that:
• This work was done wholly or mainly while in candidature for a research degree at this University.
• Where any part of this thesis has previously been submitted for a degree or any other qualification at this University or any other institution, this has been clearly stated.
• Where I have consulted the published work of others, this is always clearly attributed.
• Where I have quoted from the work of others, the source is always given.

Egnyte + Alberici Case Study

Customer Success: From the Office to the Project Site, How Alberici Uses Egnyte to Keep Teams in Sync

"Egnyte has opened new doors of possibilities where we didn’t have access out in the field previously. The VDC and BIM teams utilize Egnyte to sync huge files offline. They have been our largest proponents of Egnyte." (Ron Borror, Director, IT Infrastructure)

At a glance: $2B+ annual revenue; 17 regional offices; 100 average number of projects.

Introduction: Solving complex challenges on aggressive timelines is in the DNA of Alberici Corporation, a diversified construction company that works in industrial and commercial markets globally. When longtime client General Motors Company called on Alberici for an urgent design-build request to transform an 86,000 square-foot warehouse into an emergency ventilator manufacturing facility during the COVID-19 pandemic, they answered the call. Within just two weeks, the transformed facility produced its first ventilator and, within a month, it was making 500 life-saving devices a day.

Drawing on their more than 100 years of experience successfully completing complex projects like the largest hydroelectric plant on the Ohio River, automotive assembly plants for leading car manufacturers, and SSM Health Saint Louis University Hospital, Alberici was ready to act fast. Engineering News-Record recently ranked Alberici as the 31st-largest construction company in the United States with annual revenues of $2B. Nearly 80 percent of Alberici’s business is generated by repeat clients, a testament to their service and quality and the foundation for consistent growth.

www.egnyte.com | © 2020 by Egnyte Inc. All rights reserved.

Sustainability As a Growth Strategy Eiríkur S

Green is the new Green: Sustainability as a growth strategy. Eiríkur S. Hrafnsson, Co-Founder, Chief Global Strategist, @greenqloud, GreenQloud.com

Cloud Computing: Rocket fuel for innovation. New and previously impossible services based on public clouds. Mobile computing needs clouds! Internet of things has hardly started. “Within seven years there will be 50 billion intelligent devices connected to the internet." - Al Gore

Hyper growth challenge: Global data is doubling every 2 years. We have only processed 1%. [Chart: global storage (zettabytes), 2010 to 2020; 2012: 2+ ZB, 2020: 40 ZB, 2022: 80 ZB]

Most data centers are running on fossil fuels. Cloud providers use less than 25% renewable energy. 2% of global CO2 emissions in 2007 - Gartner. We are at ~3% now! 4% - 2020 estimation of CO2 emissions by IT - McKinsey & Company

“Your sustainability strategy IS your growth strategy” - Hannah Jones, CSO

Circular Economy - Growth from sustainability. IT Growth, Foresting, Food Production... XYZ industry growth cannot be sustained without sustainability...duh! Sustainability focused brands.

Sustainable Public Cloud Computing: Easy to use. Renewable energy on Iceland & Seattle (Q1). StorageQloud (S3 & SQAPI), ComputeQloud (EC2). Truly Green and Cheaper. Turnkey

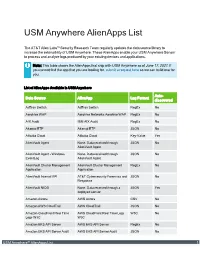

USM Anywhere Alienapps List

The AT&T Alien Labs™ Security Research Team regularly updates the data source library to increase the extensibility of USM Anywhere. These AlienApps enable your USM Anywhere Sensor to process and analyze logs produced by your existing devices and applications. Note: This table shows the AlienApps that ship with USM Anywhere as of June 17, 2021. If you cannot find the app that you are looking for, submit a request here so we can build one for you.

List of AlienApps Available in USM Anywhere

| Data Source | AlienApp | Log Format | Auto-discovered |
| --- | --- | --- | --- |
| AdTran Switch | AdTran Switch | RegEx | No |
| Aerohive WAP | Aerohive Networks Aerohive WAP | RegEx | No |
| AIX Audit | IBM AIX Audit | RegEx | No |
| Akamai ETP | Akamai ETP | JSON | No |
| Alibaba Cloud | Alibaba Cloud | Key-Value | Yes |
| AlienVault Agent | None. Data received through AlienVault Agent | JSON | No |
| AlienVault Agent - Windows EventLog | None. Data received through AlienVault Agent | JSON | No |
| AlienVault Cluster Management Application | AlienVault Cluster Management Application | RegEx | No |
| AlienVault Internal API | AT&T Cybersecurity Forensics and Response | JSON | No |
| AlienVault NIDS | None. Data received through a deployed sensor | JSON | Yes |
| Amazon Aurora | AWS Aurora | CSV | No |
| Amazon AWS CloudTrail | AWS CloudTrail | JSON | No |
| Amazon CloudFront Real Time Logs W3C | AWS CloudFront Real Time Logs W3C | W3C | No |
| Amazon EKS API Server | AWS EKS API Server | RegEx | No |
| Amazon EKS API Server Audit | AWS EKS API Server Audit | JSON | No |

Reducing Email Service's Carbon Emission with Minimum Cost THESIS Presented in Partial Fulfillment of the Requireme

GreenMail: Reducing Email Service’s Carbon Emission with Minimum Cost. Thesis presented in partial fulfillment of the requirements for the degree Master of Science in the Graduate School of The Ohio State University. By Chen Li, B.S., Graduate Program in Computer Science and Engineering, The Ohio State University, 2013. Master's Examination Committee: Christopher Charles Stewart (Advisor), Xiaorui Wang. Copyright by Chen Li 2013.

Abstract: Internet services contribute a large fraction of worldwide carbon emissions today, as a growing number of companies provide Internet services and more and more developers use them. Notably, service providers are trying to reduce their carbon emissions by using on-site or off-site renewable energy in their datacenters in order to attract more customers. Despite these efforts, some users are still calling aggressively for even cleaner Internet services. For example, over 500,000 Facebook users petitioned the social networking site to use renewable energy to power its datacenter [1]. However, it seems impossible to satisfy such demand from inside the production datacenters alone, considering the transition cost and stability. A party outside the existing Internet services, on the other hand, can easily set up a proxy service to attract those renewable-energy-sensitive users by 1) using carbon-neutral or even over-offsetting cloud instances to bridge the end user and traditional Internet services; and 2) estimating and offsetting the carbon emissions from the traditional Internet services. In our paper, we propose GreenMail, a general IMAP proxy caching system that connects email users and traditional email services.

Tech Trailblazers Awards Finalists Voting Opens

FOR IMMEDIATE RELEASE www.techtrailblazers.com Tech Trailblazers Awards Finalists Voting Opens. Which startups have the most promising technologies? Vote for your favorites now.

London, 18th December 2013 – The Tech Trailblazers Awards, the first annual awards program for enterprise information technology startups, today announced the opening of voting for shortlisted finalists across the nine categories of Big Data, Cloud, Emerging Markets, Infosecurity, Mobile, Networking, Storage, Sustainable IT and Virtualization. The voting process is managed using technology by web-based ideation and innovation management pioneer Wazoku. The winners of the Tech Trailblazers Awards will be determined by the voting public and the judging panel. Which startups have the most promising technologies? Discover the finalists and vote for your favorites now on the Tech Trailblazers website by clicking on the Vote Now tab. Voting closes at 23:59 on Thursday 23rd January 2014.

This second year of the awards has received an even higher level of high-quality entrants, with an 18% increase in entrants, making the work of the judges even more challenging. The finalists showcase the finest of enterprise tech innovation that the world has to offer, with representative startups from Argentina, Belgium, France, Iceland, India, Ireland, Kenya, Nigeria, Norway, Spain, the UK and the US. Here is an overview of all the amazing enterprise tech startups you can vote for over the coming months.

Finalists in the Tech Trailblazers Awards. Big Data: Cedexis (@Cedexis), www.cedexis.com

File Servers: Is It Time to Say Goodbye? Unstructured Data Is the Fastest-Growing Segment of Data in the Data Center

File Servers: Is It Time to Say Goodbye? Unstructured data is the fastest-growing segment of data in the data center. A significant portion of unstructured data is the data that users create through office productivity and other specialized applications. User data also often represents the bulk of the organization’s intellectual property. Traditionally, user data is stored on either file servers or network-attached storage (NAS) systems, which IT tries to locate solely at a primary data center. Initially, the problem that IT faced with storing unstructured data was keeping up with its growth, which leads to file server or NAS sprawl. Now the problem IT faces in storing file data is that users are no longer located in a single primary headquarters. The distribution of employees exacerbates file server sprawl. It also makes collaboration on the data between locations almost impossible. IT has tried various workarounds like routing everyone to a single server via a virtual private network (VPN), which leads to inconsistent connections and unacceptable performance. Users rebel and implement workarounds like consumer file sync and share, which puts corporate data at risk. Organizations are looking to the cloud for alternatives. Still, most cloud solutions are either attempts to harden file sync and share or are cloud-only NAS implementations that don’t allow for cloud latency.

Nasuni: A File Services Platform Built for the Cloud. Nasuni is a global file system that enables distributed organizations to work together as if they were all in a single office. It leverages the cloud as a primary storage point but integrates on-premises edge appliances, often running as virtual machines, to overcome cloud latency issues.