Assessing Resilience of Software Systems by Application of Chaos Engineering – a Case Study

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Maîtriser Apache Jmeter Du Test De Charge À Devops

Maîtriser Apache JMeter: Du test de charge à Devops, by Antonio Gomes Rodrigues, Bruno Demion (Milamber) and Philippe Mouawad. This book is for sale at http://leanpub.com/maitriser-jmeter-du-test-de-charge-a-devops Version published on 2018-09-30. ISBN 978-2-9555036-1-4. This book is published by Leanpub. Leanpub lets authors and publishers benefit from Lean Publishing. Lean Publishing consists of publishing, with very simple tools, many iterations of an e-book that is still being written, and of gathering feedback and comments from readers in order to improve the book. © 2014 - 2018 Antonio Gomes Rodrigues, Bruno Demion (Milamber) and Philippe Mouawad. Tweet this book! Please help Antonio Gomes Rodrigues, Bruno Demion (Milamber) and Philippe Mouawad by talking about this book on Twitter! The suggested tweet for this book is: I just bought Maîtriser Apache JMeter : Du test de charge à #Devops by @ra0077, @milamberspace, @philmdot at https://leanpub.com/maitriser-jmeter-du-test-de-charge-a-devops The suggested hashtag for this book is #jmeter. Find out what people are saying about the book by clicking this link to search for this hashtag on Twitter: #jmeter Front and back cover designed by Cécile Platteeuw (C’grafic). Table of contents: Rights; About the authors; Antonio Gomes Rodrigues; Bruno Demion (Milamber); Philippe Mouawad (Philippe M.); The Apache JMeter ecosystem; Introduction; Versatile plugin; JMeter Plugins; JMeter in the cloud; BlazeMeter; Tricentis Flood; Redline 13 -

Studying Dependency Updates and a Framework for Multi-Versioning in Docker Containers by Sara Gholami Ghasem Abad

Studying Dependency Updates and a Framework for Multi-Versioning in Docker Containers by Sara Gholami Ghasem Abad A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering and Intelligent Systems Department of Electrical and Computer Engineering University of Alberta © Sara Gholami Ghasem Abad, 2020 Abstract Containerized software systems are becoming more popular and complex as they are one of the essential techniques that enable cloud computing. One of the enabling technologies for containerized software systems is the Docker framework. Docker is an open-source framework for deploying containers, lightweight, standalone, and executable units of software with all their dependencies (packages and libraries) that can run on any computing environment. Docker images facilitate deploying and upgrading systems as all of the dependencies required for a software package are included in an image. However, there exist several risks with running Docker images in production environments. One risky situation can occur when upgrading images, as an upgrade may result in many changing packages or libraries at once. Therefore, in this thesis, we study the Docker images and analyze them to identify the risks of package changes. Also, we propose our solution, DockerMV, to mitigate this risk by running multiple versions of an image at the same time. In the first part of this thesis, we analyze the official Docker image repositories that are available on Docker Hub, Docker’s public registry that holds Docker images. For each image in these repositories, we extract details about its native, Node, and Python packages. Afterward, we investigate which types of applications have more package changes in their image upgrades. -

Kuali Student Service System: Technical Architecture Phase 1 Recommendations

Kuali Student Service System Technical Architecture Phase 1 Recommendations. December 31 2007. Kuali Student Technical Team. Technical Architecture Phase 1 deliverables, 2/14/2008. Table of Contents: 1 OVERVIEW; 1.1 REASON FOR THE INVESTIGATION; 1.2 SCOPE OF THE INVESTIGATION; 1.3 METHODOLOGY OF THE INVESTIGATION; 1.4 CONCLUSIONS; 1.5 DECISIONS THAT HAVE BEEN DELAYED; 2 STANDARDS; 2.1 INTRODUCTION; 2.2 W3C STANDARDS; 2.3 OASIS STANDARDS -

Apache Jmeter

Apache JMeter #jmeter Table of Contents: About. Chapter 1: Getting started with Apache JMeter: Remarks; Versions; Examples; Installation or Setup; Overview of Apache JMeter components at high level. Chapter 2: Apache JMeter Correlations: Introduction; Examples; Correlation Using the Regular Expression Extractor in Apache JMeter; Correlation Using the XPath Extractor in JMeter; Correlation Using the CSS/JQuery Extractor in JMeter; Correlation Using the JSON Extractor; Automated Correlation by Using BlazeMeter’s ‘SmartJMX’. Chapter 3: Apache JMeter parameterization: Introduction; Examples; Parameterization using external files; Parameterization using databases; Parameterization using the ‘Parameterized Controller’ plugin. Chapter 4: Apache JMeter: Test scenario recording: Introduction; Examples; Script Recording with the JMeter Template Feature; Script Recording with the JMeter Proxy Recorder; Recording Performance Scripts for Mobile Devices; Recording HTTPS Traffic; Script Recording with the BlazeMeter Chrome Extension; Script Recording with BadBoy. Credits. About: You can share this PDF with anyone you feel could benefit from it, downloaded the latest version from: apache-jmeter It is an unofficial and free Apache JMeter ebook created for educational purposes. All the content is extracted from Stack Overflow Documentation, which is written by many hardworking individuals at Stack Overflow. It is neither affiliated with Stack Overflow nor official Apache JMeter. The content is released under Creative Commons BY-SA, and the list of contributors to each chapter are provided in the credits section at the end of this book. Images may be copyright of their respective owners unless otherwise specified. -

Beginning Netbeans IDE

Beginning NetBeans IDE for Java Developers Geertjan Wielenga Beginning NetBeans IDE Copyright © 2015 by Geertjan Wielenga This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Exempted from this legal reservation are brief excerpts in connection with reviews or scholarly analysis or material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. Duplication of this publication or parts thereof is permitted only under the provisions of the Copyright Law of the Publisher’s location, in its current version, and permission for use must always be obtained from Springer. Permissions for use may be obtained through RightsLink at the Copyright Clearance Center. Violations are liable to prosecution under the respective Copyright Law. ISBN-13 (pbk): 978-1-4842-1258-5 ISBN-13 (electronic): 978-1-4842-1257-8 Trademarked names, logos, and images may appear in this book. Rather than use a trademark symbol with every occurrence of a trademarked name, logo, or image we use the names, logos, and images only in an editorial fashion and to the benefit of the trademark owner, with no intention of infringement of the trademark. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. -

Supercontra: Cross-Language, Cross-Runtime Contracts As a Service

SuperContra: Cross-Language, Cross-Runtime Contracts As a Service Stratos Dimopoulos, Chandra Krintz, Rich Wolski, Anand Gupta Department of Computer Science University of California, Santa Barbara [email protected] Abstract: This paper presents SuperContra - a Design-by-Contract (DbC) framework that can ship with future PaaS offerings to enforce lightweight contracts across different programming systems, as-a-service. SuperContra is unique in that developers employ a familiar, high-level language to write contracts regardless of the programming language used to implement the component under test. We evaluate SuperContra using widely used, open-source software and compare its performance against existing DbC frameworks. Our results show that SuperContra performs on par with non-service-based DbC approaches and in some cases similarly to code running without contracts. I. INTRODUCTION The wide number of libraries and application programming interfaces (APIs) offered by current Platform-as-a-Service providers, has enabled the rapid deployment of software devel- [...] the annotations and delegates their validation to the evaluator. The contract evaluator, evaluates the contracts and returns the result to the interceptor. Finally, the communicator allows for the seamless communication between the interceptor and the evaluator across programming languages, by transforming, serializing and transferring the contracts and the corresponding outcomes. The current implementation of SuperContra exemplifies its cross-language and cross-runtime capabilities by evaluating contracts between Java clients and a Python contract evaluator. We evaluate the performance of SuperContra using Apache JMeter [2], a popular open-source load testing application, with which we generate traffic on an instrumented with contracts version of Synapse [3], a widely-used open-source Enterprise Service Bus (ESB). -

DI Siegfried GOESCHL Rittergasse 6/23 A-1040 Wien First Level Skills

DI Siegfried GOESCHL Rittergasse 6/23 A-1040 Wien [email protected] http://people.apache.org/~sgoeschl First Level Skills Java Backend Development, REST & SOAP APIs, Full-text Search, Performance Testing, Build & Configuration Management Second Level Skills RDBMS, XML/XSLT, Scripting, DevOps, Web Development Contracting Experience 05/2015 – 04/2017 Erste Bank, Am Belvedere 1, A-1100 Vienna Senior Software Engineer – rolling out Erste Groups's Online Banking (aka George) in Czech & Slovak Republic based on JDK 8, Elastic & Oracle. Adding multi-tenant support to the existing code base, providing developer support, bridging the gap between operations & development team, implementing functional & performance tests using Gatling and help other people to get their work done. 12/2014 – 05/2015 TechTalk, Leonhard-Bernstein-Straße 10, A-1220 Vienna Senior Software Engineer – working on Berlin's new Civic Issue Tracking system based on JBoss, Vaadin & Oracle 11g. Focused on filling gaps and removing obstacles to keep the core development team productive. Setting up a performance test infrastructure using Selenium, JAMon & JavaMelody to pass the client-witnessed performance acceptance test. 09/2011 – 11/2014 willhaben.at, Landstraßer Hauptstraße 97-101, A-1030 Vienna Senior Software Engineer – ongoing development and maintenance of willhaben.at's iAd platform (Java, Tomcat, JSP, Sybase, Solr), including performance tuning, technical trouble- shooting, operations support and mentoring of new colleagues. Technical Lead - migrating Microsoft FAST server installation to an Apache Solr cluster handling 350 search requests per seconds querying 2.5 million indexed documents - 24x7 operation without downtime in two years. Technical Lead - implementing Willhaben's REST API based on the Jersey REST framework used by iOS & Android apps and the mobile web site, rolling out in production, scaling to 1.2 million downloaded apps and 850 million page impressions / month. -

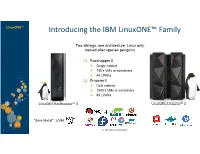

Introducing the IBM Linuxone™ Family

LinuxONE™ Introducing the IBM LinuxONE™ Family. Two siblings, one architecture, Linux only, named after species penguins. Rockhopper II: single cabinet, 100’s VMs or containers, 40 LPARs. Emperor II: dual cabinet, 1000’s VMs or containers, 85 LPARs. LinuxONE Rockhopper™ II, LinuxONE Emperor™ II, “Bare Metal”, z/VM. © 2017 IBM Corporation © 2018 IBM Corporation. An IBM LinuxONE for everyone: “Right-size” to fit your needs. Rockhopper II (Performance in a Smaller Footprint): • Equivalent to ~200 x86 cores • Up to 8 TB memory • I/O support for up to 2 million IOPS • Raw I/O bandwidth of 128 GB/s • 19” industry standard form factor • PDU-based with 200v-240v power • Optional 16U of available frame space for additional components, e.g., storage, server, network switch • Air-cooled only. Emperor II (Extreme Scale): • Equivalent to ~1300 x86 cores • Up to 32 TB memory • I/O requirements up to 9 million IOPS • Raw I/O bandwidth of 832 GB/s • Bulk power based on 480v • Massive Capacity Back Up (CBU) on demand • Can fill need for on-site disaster recovery • Air-cooled or water-cooled. Our 18-year journey with Linux on the Platform 2018: 2017: § IBM z14 ZR1 § IBM z14 M01-M05 § Preview: § z/VM Subcapacity z/VM 7.1 2016: § IBM Wave 1.2 SP6 § KVM 1.1.2 § z/VM 6.4 2015: § KVM for IBM z § IBM Wave upd. • IBM Cloud Private § IBM zAware for Linux • Db2 Warehouse § IBM LinuxONE™ • Blockchain • DBaaS ref.archit. -

Technology Profile

2021 Technology Profile https://azati.ai +375 (29) 6845855 Belarus, 31 K. Marks Street, Sections 5-6 Grodno, 230025 1 Table Of Contents TABLE OF CONTENTS page 01 DEPLOYMENT, BI & DATA page 09 WAREHOUSING GENERAL INFORMATION page 02 DATA SCIENCE & MACHINE LEARNING page 10 JAVA TECHNOLOGIES page 03 MONITORING TOOLS, PORTALS & SOLUTIONS, page 11 VERSION CONTROL RUBY & JAVASCRIPT TECHNOLOGIES page 04 VERSION CONTROL, SDK & OTHER TOOLS page 12 WEB & PHP TECHNOLOGIES page 05 OTHER TOOLS page 13 MOBILE DEVELOPMENT & DATABASES page 06 SOFTWARE TESTING & QA page 07 APPLICATION DEPLOYMENT page 08 2 General Information 01 PROGRAMMING LANGUAGES: 02 MARK-UP AND MODELING 05 SOFTWARE ARCHITECTURE PATTERNS: LANGUAGES: Java Representational State Transfer (REST/RESTful) JavaScript (ES5/ES6) HTML (4/5) Model-View-Controller (MVC) PHP XSLT Microservices TypeScript UML GraphQL PL/SQL Kotlin Smalltalk C 03 PROJECT MANAGEMENT C++ METHODOLOGIES: C# Agile (Kanban/SCRUM) Groovy Waterfall Delphi Behavior-driven development (BDD) Pascal Test-driven development (TDD) Python Feature-driven development (FDD) SQL Ruby R CoffeeScript 04 DEVELOPMENT APPROACHES: Perl Continuous Delivery (CD) Bash Continuous Integration (CI) Shell 3 Java Technologies 06 JAVA TECHNOLOGIES: 07 JAVA FRAMEWORKS: Apache POI Java (7/8/9) Spring Apache Wicket Java Servlet Spring Boot Apache CXF Java Database Connectivity (JDBC) Spring REST Apache Shiro Java REST Spring MVC Apache Camel Java Persistence API (JPA) Spring Data Java Message Service (JMS) Spring Security 08 JAVA LIBRARIES: JBoss Drools -

Performance Testing with Jmeter Second Edition

[ 1 ] Performance Testing with JMeter Second Edition Test web applications using Apache JMeter with practical, hands-on examples Bayo Erinle BIRMINGHAM - MUMBAI Performance Testing with JMeter Second Edition Copyright © 2015 Packt Publishing All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: July 2013 Second edition: April 2015 Production reference: 1200415 Published by Packt Publishing Ltd. Livery Place 35 Livery Street Birmingham B3 2PB, UK. 
ISBN 978-1-78439-481-3 www.packtpub.com Credits: Author: Bayo Erinle. Reviewers: Vinay Madan, Satyajit Rai, Ripon Al Wasim. Commissioning Editor: Pramila Balan. Acquisition Editor: Llewellyn Rozario. Content Development Editor: Adrian Raposo. Technical Editors: Tanvi Bhatt, Narsimha Pai, Mahesh Rao. Copy Editors: Charlotte Carneiro, Pranjali Chury, Rashmi Sawant. Project Coordinator: Kinjal Bari. Proofreaders: Simran Bhogal, Safis Editing, Joanna McMahon. Indexer: Monica Ajmera Mehta. Production Coordinator: Arvindkumar Gupta. Cover Work: Arvindkumar Gupta. About the Author: Bayo Erinle is an author and senior software engineer with over 11 years of experience in designing, developing, testing, and architecting software. -

CERN SSO. Identity Provider

A Thousand Things You Always Wanted To Know About SSO But Never Dared Ask. Oracle Code One, San Francisco, 24/10/2018. Luis Rodríguez Fernández. Agenda: What’s this presentation about? About your speaker. About CERN. About CERN openlab. Why SSO? CERN SSO: Identity Provider. CERN SSO: WS-Fed & SAML2. CERN SSO: OAUTH2. CERN SSO: What’s next? Take-aways. What is this presentation about? ● SSO components – Identity Provider – Service Provider ● IdP high level implementation details ● Focus on securing applications ● SAML2, WS-Fed, Oauth2 (client credentials) ● Real Use Cases ● Open-source & commercial solutions ● Tips & Tricks. About your speaker ● Software Engineer ● Service Manager – Databases Applications Service ● Oracle WebLogic (~350 servers) ● Apache Tomcat (~40 servers) ● ~200 URLs ● From Spain (Asturias). About CERN ● Fundamental Research – What’s the Universe made of? – How did it start? – What matter is made of? ● Tools – Accelerators – Detectors ● Three pillars – Research – Innovation – Education ● Science for peace -

The Design and Implementation of Unified Invoking Component Based

JOURNAL OF SOFTWARE, VOL. 9, NO. 8, AUGUST 2014, p. 2073. The Design and Implementation of Unified Invoking Component Based on Web Services Framework. Wenpeng Su (a), Zhonghua Yan (a, b)*, Chenghui Liang (a). (a) School of Mechanical, Electrical and Information Engineering, Shandong University, Weihai 264209, China. Email: [email protected], [email protected] (b) Integrated Electronic Systems Lab Co. Ltd., Jinan 250100, China. Email: [email protected] Abstract: Web Services is a platform which enables applications to interoperate on the Internet. It is widely used in designing and building systems in open and dynamic distributed environments such as EAI (Enterprise Application Integration) and B2B (Business to Business). With the development of framework technology, it is convenient and standardized to use a framework to develop web applications. For Web Services, the frameworks Axis, Axis2, XFire and CXF are widely used. Through performance testing of the four frameworks, this paper not only introduces the four frameworks but also analyzes the differences between them, and then makes some suggestions for developers to choose the appropriate one. [...] and Spring for web application developers. In the field of Web Services, there are four famous frameworks called Axis, Axis2, XFire and CXF. All of the frameworks encapsulate the low-level information and provide powerful development APIs for developers, which can really reduce the development difficulty and save development time. The QoS (Quality of Service) refers to resource reservation control mechanisms, and it is an ability to provide different priority to different applications, or to guarantee a certain level of performance to a data flow [2]. QoS