UVI Image Segmentation of Auroral Oval: Dual Level Set and Convolutional Neural Network Based Approach

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

The Van Allen Probes' Contribution to the Space Weather System

L. J. Zanetti et al. The Van Allen Probes’ Contribution to the Space Weather System Lawrence J. Zanetti, Ramona L. Kessel, Barry H. Mauk, Aleksandr Y. Ukhorskiy, Nicola J. Fox, Robin J. Barnes, Michele Weiss, Thomas S. Sotirelis, and NourEddine Raouafi ABSTRACT The Van Allen Probes mission, formerly the Radiation Belt Storm Probes mission, was renamed soon after launch to honor the late James Van Allen, who discovered Earth’s radiation belts at the beginning of the space age. While most of the science data are telemetered to the ground using a store-and-then-dump schedule, some of the space weather data are broadcast continuously when the Probes are not sending down the science data (approximately 90% of the time). This space weather data set is captured by contributed ground stations around the world (presently Korea Astronomy and Space Science Institute and the Institute of Atmospheric Physics, Czech Republic), automatically sent to the ground facility at the Johns Hopkins University Applied Physics Laboratory, converted to scientific units, and published online in the form of digital data and plots, all within less than 15 minutes from the time that the data are accumulated onboard the Probes. The real-time Van Allen Probes space weather information is publicly accessible via the Van Allen Probes Gateway web interface. INTRODUCTION The overarching goal of the study of space weather is to understand and address the issues caused by solar disturbances and the effects of those issues on humans and technological systems. […]ing radiation, were the impetus for implementing a space weather broadcast capability on NASA’s Van Allen Probes’ twin pair of satellites, which were launched in -

Transmittal of Geotail Prelaunch Mission Operation Report

National Aeronautics and Space Administration Washington, D.C. 20546 ss Reply to Attn of: TO: DISTRIBUTION FROM: S/Associate Administrator for Space Science and Applications SUBJECT: Transmittal of Geotail Prelaunch Mission Operation Report I am pleased to forward with this memorandum the Prelaunch Mission Operation Report for Geotail, a joint project of the Institute of Space and Astronautical Science (ISAS) of Japan and NASA to investigate the geomagnetic tail region of the magnetosphere. The satellite was designed and developed by ISAS and will carry two ISAS, two NASA, and three joint ISAS/NASA instruments. The launch, on a Delta II expendable launch vehicle (ELV), will take place no earlier than July 14, 1992, from Cape Canaveral Air Force Station. This launch is the first under NASA’s Medium ELV launch service contract with the McDonnell Douglas Corporation. Geotail is an element in the International Solar Terrestrial Physics (ISTP) Program. The overall goal of the ISTP Program is to employ simultaneous and closely coordinated remote observations of the sun and in situ observations both in the undisturbed heliosphere near Earth and in Earth’s magnetosphere to measure, model, and quantitatively assess the processes in the sun/Earth interaction chain. In the early phase of the Program, simultaneous measurements in the key regions of geospace from Geotail and the two U.S. satellites of the Global Geospace Science (GGS) Program, Wind and Polar, along with equatorial measurements, will be used to characterize global energy transfer. The current schedule includes, in addition to the July launch of Geotail, launches of Wind in August 1993 and Polar in May 1994. -

Radiation Belt Storm Probes Launch

National Aeronautics and Space Administration PRESS KIT | AUGUST 2012 Radiation Belt Storm Probes Launch www.nasa.gov Table of Contents: Radiation Belt Storm Probes Launch; Media Contacts; Media Services Information; NASA’s Radiation Belt Storm Probes; Mission Quick Facts; Spacecraft Quick Facts; Spacecraft Details; Mission Overview; RBSP General Science Objectives -

Polar Observations of Electron Density Distribution in the Earth's

Annales Geophysicae (2002) 20: 1711–1724 © European Geosciences Union 2002 Polar observations of electron density distribution in the Earth’s magnetosphere. 1. Statistical results H. Laakso1, R. Pfaff2, and P. Janhunen3 1ESA Space Science Department, Noordwijk, Netherlands 2NASA Goddard Space Flight Center, Code 696, Greenbelt, MD, USA 3Finnish Meteorological Institute, Geophysics Research, Helsinki, Finland Received: 3 September 2001 – Revised: 3 July 2002 – Accepted: 10 July 2002 Abstract. Forty-five months of continuous spacecraft potential measurements from the Polar satellite are used to study the average electron density in the magnetosphere and its dependence on geomagnetic activity and season. These measurements offer a straightforward, passive method for monitoring the total electron density in the magnetosphere, with high time resolution and a density range that covers many orders of magnitude. Within its polar orbit with geocentric perigee and apogee of 1.8 and 9.0 RE, respectively, Polar encounters a number of key plasma regions of the magnetosphere, such as the polar cap, cusp, plasmapause, and auroral zone that are clearly identified in the statistical averages presented here. The polar cap density behaves quite systematically with season. At low distance (∼2 RE), the density is an order of magnitude higher in summer than in winter; at high distance (>4 RE), the variation is somewhat smaller. Key words. Magnetospheric physics (magnetospheric configuration and dynamics; plasmasphere; polar cap phenomena) 1 Introduction The total electron number density is a key parameter needed for both characterizing and understanding the structure and dynamics of the Earth’s magnetosphere. The spatial variation of the electron density and its temporal evolution during different geomagnetic conditions and seasons reveal numerous fundamental processes concerning the Earth’s space environment and how it responds to energy and momentum -

Equivalence of Current–Carrying Coils and Magnets; Magnetic Dipoles; - Law of Attraction and Repulsion, Definition of the Ampere

GEOPHYSICS (08/430/0012) THE EARTH'S MAGNETIC FIELD OUTLINE Magnetism Magnetic forces: - equivalence of current–carrying coils and magnets; magnetic dipoles; - law of attraction and repulsion, definition of the ampere. Magnetic fields: - magnetic fields from electrical currents and magnets; magnetic induction B and lines of magnetic induction. The geomagnetic field The magnetic elements: (N, E, V) vector components; declination (azimuth) and inclination (dip). The external field: diurnal variations, ionospheric currents, magnetic storms, sunspot activity. The internal field: the dipole and non–dipole fields, secular variations, the geocentric axial dipole hypothesis, geomagnetic reversals, seabed magnetic anomalies. The dynamo model Reasons against an origin in the crust or mantle and reasons suggesting an origin in the fluid outer core. Magnetohydrodynamic dynamo models: motion and eddy currents in the fluid core, mechanical analogues. Background reading: Fowler §3.1 & 7.9.2, Lowrie §5.2 & 5.4 MAGNETIC FORCES Magnetic forces are forces associated with the motion of electric charges, either as electric currents in conductors or, in the case of magnetic materials, as the orbital and spin motions of electrons in atoms. Although the concept of a magnetic pole is sometimes useful, it is difficult to relate precisely to observation; for example, all attempts to find a magnetic monopole have failed, and the model of permanent magnets as magnetic dipoles with north and south poles is not particularly accurate. Consequently moving charges are normally regarded as fundamental in magnetism. Basic observations 1. Permanent magnets A magnet attracts iron and steel, the attraction being most marked close to its ends. -

The Polar Bear Magnetic Field Experiment

PETER F. BYTHROW, THOMAS A. POTEMRA, LAWRENCE J. ZANETTI, FREDERICK F. MOBLEY, LEONARD SCHEER, and WADE E. RADFORD THE POLAR BEAR MAGNETIC FIELD EXPERIMENT A primary route for the transfer of solar wind energy to the earth's ionosphere is via large-scale currents that flow along geomagnetic field lines. The currents, called "Birkeland" or "field aligned," are associated with complex plasma processes that produce ionospheric scintillations, joule heating, and auroral emissions. The Polar BEAR magnetic field experiment, in conjunction with auroral imaging and radio beacon experiments, provides a way to evaluate the role of Birkeland currents in the generation of auroral phenomena. INTRODUCTION Less than 10 years after the launch of the first artificial earth satellites, magnetic field experiments on polar orbiting spacecraft detected large-scale magnetic disturbances transverse to the geomagnetic field.¹ Transverse magnetic disturbances ranging from ~10 to 10³ nT (1 nanotesla = 10⁻⁵ gauss) have been associated with electric currents that flow along the geomagnetic field into and away from the earth's polar regions.² These currents were named after the Norwegian scientist Kristian Birkeland who, in the first decade of the twentieth century, suggested their existence and their association with the aurora. Birkeland currents are now known to be a primary vehicle for coupling energy from the solar wind to the auroral ionosphere. Auroral ovals are annular regions of auroral emission that encircle the earth's polar regions. Roughly 3 to 7° latitude in width, they are offset ~5° toward midnight from the geomagnetic poles. -

European Space Agency Announces Contest to Name the Cluster Quartet

European Space Agency Announces Contest to Name the Cluster Quartet. 1. Contest rules The European Space Agency (ESA) is launching a public competition to find the most suitable names for its four Cluster II space weather satellites. The quartet, which are currently known as flight models 5, 6, 7 and 8, are scheduled for launch from Baikonur Space Centre in Kazakhstan in June and July 2000. Professor Roger Bonnet, ESA Director of Science Programme, announced the competition for the first time to the European Delegations on the occasion of the Science Programme Committee (SPC) meeting held in Paris on 21-22 February 2000. The competition is open to people of all the ESA member states. Each entry should include a set of FOUR names (places, people, or things from history, mythology, or fiction, but NOT living persons). Contestants should also describe in a few sentences why their chosen names would be appropriate for the four Cluster II satellites. The winners will be those which are considered most suitable and relevant for the Cluster II mission. The names must not have been used before on space missions by ESA, other space organizations or individual countries. One winning entry per country will be selected to go to the Finals of the competition. The prize for each national winner will be an invitation to attend the first Cluster II launch event in mid-June 2000 with their family (4 persons) in a 3-day trip (including excursions to tourist sites) to one of these ESA establishments: ESRIN (near Rome, Italy): winners from France, Ireland, United Kingdom, Belgium. -

Exploring the Solar Poles and the Heliosphere from High Helio-Latitude

A white paper submitted to ESA for the Voyage 2050 long-term plan. A journey to the polar regions of a star: Exploring the solar poles and the heliosphere from high helio-latitude Louise Harra and the solar polar team PMOC/WRC, Dorfstrasse 33, CH-7260 Davos Dorf & ETH-Zürich, Switzerland [Image: Polar regions of major Solar System bodies. Top left, clockwise - Near-surface zonal flows around the solar north pole Bogart et al. (2015); Earth’s changing magnetic field from ESA’s Swarm constellation; Southern polar cap of Mars from ESA’s ExoMars; Jupiter’s poles from the NASA Juno mission; Saturn’s poles from the NASA Cassini mission.] Overview We aim to embark on one of humankind’s great journeys – to travel over the poles of our star, with a spacecraft unprecedented in its technology and instrumentation – to explore the polar regions of the Sun and their effect on the inner heliosphere in which we live. The polar vantage point provides a unique opportunity for major scientific advances in the field of heliophysics, and thus also provides the scientific underpinning for space weather applications. It has long been a scientific goal to study the poles of the Sun, illustrated by the NASA/ESA International Solar Polar Mission that was proposed over four decades ago, which led to the flight of ESA’s Ulysses spacecraft (1990 to 2009). Indeed, with regard to the Earth, we took the first tentative steps to explore the Earth’s polar regions only in the 1800s. Today, with the aid of space missions, key measurements relating to the nature and evolution of Earth’s polar regions are being made, providing vital input to climate-change models. -

The Cluster-II Mission – Rising from the Ashes

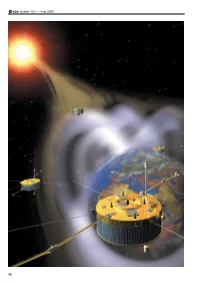

Bulletin 102, May 2000 The Cluster-II Mission – Rising from the Ashes The Cluster II Project Team Scientific Projects Department, ESA Directorate of Scientific Programmes, ESTEC, Noordwijk, The Netherlands Four years ago, the first Cluster mission was lost when the maiden flight of Ariane-5 came to a tragic end. Today, through the combined efforts of the ESA Project Team, its industrial partners and collaborating scientific institutions, the Cluster quartet has been born again. In June and July of this year, four Cluster-II spacecraft will be launched in pairs from Baikonur Cosmodrome in Kazakhstan (Fig. 1). If all goes well, these launches will mark the culmination of a remarkable recovery from the tragic loss of the original Cluster mission. In June 1996, an explosion of the first Ariane-5 launch vehicle shortly after lift-off destroyed the flotilla of Cluster spacecraft. Now, just four years later, this unique Cornerstone of ESA’s Horizons 2000 Science Programme has been rebuilt and is ready to complete the mission to the magnetosphere planned for its predecessors. From concept to reality The Cluster mission was first proposed in November 1982 in response to an ESA Call for Proposals for the next series of science missions. It grew out of an original idea from a group of European scientists to carry out a detailed study of the Earth’s magnetotail in the equatorial plane. This idea was then developed into a proposal to study the ‘cusp’ regions of the magnetosphere with a polar orbiting mission. The Assessment Study ran from February to August 1983 and was followed by a Phase-A -

Geotail HEP-LD and Polar PIXIE Observations

Magnetospheric and ionospheric response to a substorm: PAPER 3 Geotail HEP-LD and Polar PIXIE observations S. Håland,1 N. Østgaard,1 J. Bjordal,1 J. Stadsnes,1 S. Ullaland,1,2 B. Wilken,3 T. Yamamoto,4,5 T. Doke,6 D. L. Chenette,7 G. K. Parks,8 M. J. Brittnacher,8 G. D. Reeves9 Accepted for publ. in J. Geophys. Res., May 1999 Abstract. The High Energy Particle - Low energy particle Detector experiment (HEP-LD) on board the Geotail spacecraft and the Polar Ionospheric X-ray Imaging Experiment (PIXIE) on board the Polar satellite have been used to examine a substorm event. On December 10, 1996, around 1700 UT, a substorm event with two onsets took place. The event occurred during a weak magnetic storm that started on December 9. Several of the classical substorm features were observed during the event: reconnection and neutral-line formation in the near-Earth geomagnetic tail, injection of energetic particles at geosynchronous orbit, and particle precipitation into the ionosphere. Magnetic field line mapping of the energetic precipitation area into the geomagnetic tail shows that the substorm development on ground is closely correlated with topological changes in the near-Earth tail. In the first onset, mainly soft electrons are involved, with only a transient energetic precipitation delayed relative to the onset. The second onset about 30 min later includes both soft and energetic electrons. The source regions of both onsets are found to be located near the earthward edge of the plasma sheet, while the source region of the transient energetic precipitation during the first onset is in the distant tail. -

Exploring the Earth's Magnetic Field

Exploring the Earth’s Magnetic Field: An IMAGE Satellite Guide to the Magnetosphere Acknowledgments: Dr. James Burch, IMAGE Principal Investigator; Dr. William Taylor, IMAGE Education and Public Outreach, Raytheon ITS and NASA Goddard SFC; Dr. Sten Odenwald, IMAGE Education and Public Outreach, Raytheon ITS and NASA Goddard SFC; Ms. Annie DiMarco, Greenwood Elementary School, Brookville, Maryland; Ms. Susan Higley, Cherry Hill Middle School, Elkton, Maryland; Mr. Bill Pine, Chaffey High School, Ontario, California; Mr. Tom Smith, Briggs-Chaney Middle School, Silver Spring, Maryland. This resource was developed by the NASA Imager for Magnetopause-to-Auroral Global Exploration (IMAGE). Information about the IMAGE Mission is available at: http://image.gsfc.nasa.gov and http://pluto.space.swri.edu/IMAGE. Resources for teachers and students are available at: http://image.gsfc.nasa.gov/poetry. Cover Artwork: Image of the Earth’s ring current observed by the IMAGE HENA instrument. Some representative magnetic field lines are shown in white. Contents: Chapter 1: What is a Magnet? I. Playing With Magnetism; II. Exploring Magnetic Fields; III. Exploring the Earth as a Magnet; IV. Electricity and Magnetism. Chapter 2: Investigating Earth’s Magnetism V. The Wandering Magnetic Pole; VI. Plotting Points in Polar Coordinates; VII. Measuring Distances on the Polar Map; VIII. Wandering Poles in the Last Years; IX. The Magnetosphere and Us -

Active Spacecraft Potential Control for Cluster – Implementation and first Results

Annales Geophysicae (2001) 19: 1289–1302 © European Geophysical Society 2001 Active spacecraft potential control for Cluster – implementation and first results K. Torkar1, W. Riedler1, C. P. Escoubet2, M. Fehringer2, R. Schmidt2, R. J. L. Grard2, H. Arends2, F. Rüdenauer3, W. Steiger4, B. T. Narheim5, K. Svenes5, R. Torbert6, M. André7, A. Fazakerley8, R. Goldstein9, R. C. Olsen10, A. Pedersen11, E. Whipple12, and H. Zhao13 1Space Research Institute, Austrian Academy of Sciences, Schmiedlstrasse 6, 8042 Graz, Austria 2Space Science Department of ESA/ESTEC, 2200 AG Noordwijk, The Netherlands 3Now at: International Atomic Energy Agency, Safeguards Analytical Laboratory, 2444 Seibersdorf, Austria 4Institute for Physics, Austrian Research Centers Seibersdorf, 2444 Seibersdorf, Austria 5Forsvarets Forskningsinstitutt, Avdeling for Elektronikk, 2007 Kjeller, Norway 6Space Science Center, Science and Engineering Research Center, University of New Hampshire, Durham, NH 03824, USA 7Swedish Institute of Space Physics, Uppsala Division, 75121 Uppsala, Sweden 8Dept. of Physics, Mullard Space Science Laboratory, University College London, Dorking, Surrey, UK 9Southwest Research Institute, San Antonio, Texas 78238, USA 10Physics Department, Naval Postgraduate School, Monterey, California 93943, USA 11Dept. of Physics, University of Oslo, Blindern, Norway 12University of Washington, Geophysics Department, Seattle, Washington 98195, USA 13Center for Space Science and Applied Research, Chinese Academy of Sciences, Beijing 100080, P. R. China Received: 17 April 2001 – Revised: 20 August 2001 – Accepted: 23 August 2001 Abstract. Electrostatic charging of a spacecraft modifies the distribution of electrons and ions before the particles enter the sensors mounted on the spacecraft body. The floating […]larged sheath around the spacecraft which causes problems for boom-mounted probes. Key words.