A Parameter-Free Affinity Based Clustering

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

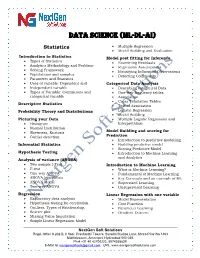

Data Science (ML-DL-Ai)

Data Science (ML-DL-AI)

Statistics; Introduction to Statistics; Types of Statistics; Analytics Methodology and Problem-Solving Framework; Populations and Samples; Parameter and Statistics; Uses of Variable: Dependent and Independent Variable; Types of Variable: Continuous and Categorical Variable; Descriptive Statistics; Probability Theory and Distributions; Picturing Your Data; Histogram; Normal Distribution; Skewness, Kurtosis; Outlier Detection; Inferential Statistics; Hypothesis Testing; Analysis of Variance (ANOVA); Two-Sample t-Test; F-Test; One-Way ANOVA; ANOVA Hypothesis; ANOVA Model; Two-Way ANOVA; Regression; Exploratory Data Analysis; Hypothesis Testing for Correlation; Outliers, Types of Relationship

Multiple Regression Model Building and Evaluation; Model Post-Fitting for Inference; Examining Residuals; Regression Assumptions; Identifying Influential Observations; Detecting Collinearity; Categorical Data Analysis; Describing Categorical Data; One-Way Frequency Tables; Association; Cross-Tabulation Tables; Test of Association; Logistic Regression Model Building; Multiple Logistic Regression and Interpretation; Model Building and Scoring for Prediction; Introduction to Predictive Modelling; Building Predictive Model; Scoring Predictive Model; Introduction to Machine Learning and Analytics; Introduction to Machine Learning; What Is Machine Learning?; Fundamentals of Machine Learning; Key Concepts and an Example of ML; Supervised Learning; Unsupervised Learning; Linear Regression with One Variable; Model Representation; Cost Function

Machine Learning V1.1

An Introduction to Machine Learning v1.1, E. J. Sagra

Agenda
● Why is Machine Learning in the News again?
● Artificial Intelligence vs Machine Learning vs Deep Learning
● Artificial Intelligence
● Machine Learning & Data Science
● Machine Learning
● Data
● Machine Learning - By The Steps
● Tasks that Machine Learning solves
○ Classification
○ Cluster Analysis
○ Regression
○ Ranking
○ Generation

Agenda (cont...)
● Model Training
○ Supervised Learning
○ Unsupervised Learning
○ Reinforcement Learning
● Reinforcement Learning - Going Deeper
○ Simple Example
○ The Bellman Equation
○ Deterministic vs. Non-Deterministic Search
○ Markov Decision Process (MDP)
○ Living Penalty
● Machine Learning - Decision Trees
● Machine Learning - Augmented Random Search (ARS)

Why is Machine Learning In The News Again?

Processing capabilities
● GPUs etc. have reached a level where Machine Learning / Deep Learning is practical
● Cloud computing gives even individuals the capability to create / train complex models on vast data sets

Memory (Hard Drive (now SSD) as well as RAM)
● Speed / capacity increasing
● Cost decreasing

Data
● Volume of Data
● Access to vast public data sets

General
● Tools / Languages / Automation
● Need for Data Science no longer limited to the tech giants
● Education is behind in creating Data Scientists
● Organizing data is hard. Organizations challenged
● High demand due to lack of qualified talent

Artificial Intelligence vs Machine Learning vs Deep Learning

Artificial Intelligence is the all-encompassing concept that emerged first, followed by Machine Learning, which thrived later. Finally, Deep Learning is taking the advances of Artificial Intelligence to another level.

Artificial Intelligence

Artificial intelligence (AI) is perhaps the most vaguely understood field of data science. The main idea behind building AI is to use pattern recognition and machine learning to build an agent able to think and reason as humans do (or approach this ability).

Dynamic Feature Scaling for Online Learning of Binary Classifiers

Dynamic Feature Scaling for Online Learning of Binary Classifiers. Danushka Bollegala, University of Liverpool, Liverpool, United Kingdom. May 30, 2017.

Abstract: Scaling feature values is an important step in numerous machine learning tasks. Different features can have different value ranges, and some form of feature scaling is often required in order to learn an accurate classifier. However, feature scaling is conducted as a preprocessing task prior to learning. This is problematic in an online setting for two reasons. First, it might not be possible to accurately determine the value range of a feature at the initial stages of learning, when we have observed only a handful of training instances. Second, the distribution of data can change over time, which renders obsolete any feature scaling that we perform in a pre-processing step. We propose a simple but effective method to dynamically scale features at train time, thereby quickly adapting to any changes in the data stream. We compare the proposed dynamic feature scaling method against more complex methods for estimating scaling parameters using several benchmark datasets for classification. Our proposed feature scaling method consistently outperforms more complex methods on all of the benchmark datasets and improves the classification accuracy of a state-of-the-art online classification algorithm.

1 Introduction: Machine learning algorithms require train and test instances to be represented using a set of features. For example, in supervised document classification [9], a document is often represented as a vector of its words, and the value of a feature is set to the number of times the word corresponding to the feature occurs in that document.

Capacity and Trainability in Recurrent Neural Networks

Published as a conference paper at ICLR 2017. Capacity and Trainability in Recurrent Neural Networks. Jasmine Collins, Jascha Sohl-Dickstein & David Sussillo, Google Brain, Google Inc., Mountain View, CA 94043, USA. {jlcollins, jaschasd, sussillo}@google.com

Abstract: Two potential bottlenecks on the expressiveness of recurrent neural networks (RNNs) are their ability to store information about the task in their parameters, and to store information about the input history in their units. We show experimentally that all common RNN architectures achieve nearly the same per-task and per-unit capacity bounds with careful training, for a variety of tasks and stacking depths. They can store an amount of task information which is linear in the number of parameters, and is approximately 5 bits per parameter. They can additionally store approximately one real number from their input history per hidden unit. We further find that for several tasks it is the per-task parameter capacity bound that determines performance. These results suggest that many previous results comparing RNN architectures are driven primarily by differences in training effectiveness, rather than differences in capacity. Supporting this observation, we compare training difficulty for several architectures, and show that vanilla RNNs are far more difficult to train, yet have slightly higher capacity. Finally, we propose two novel RNN architectures, one of which is easier to train than the LSTM or GRU for deeply stacked architectures.

1 Introduction: Research and application of recurrent neural networks (RNNs) have seen explosive growth over the last few years (Martens & Sutskever, 2011; Graves et al., 2009), and RNNs have become the central component for some very successful model classes and application domains in deep learning (speech recognition (Amodei et al., 2015), seq2seq (Sutskever et al., 2014), neural machine translation (Bahdanau et al., 2014), the DRAW model (Gregor et al., 2015), educational applications (Piech et al., 2015), and scientific discovery (Mante et al., 2013)).

Training and Testing of a Single-Layer LSTM Network for Near-Future Solar Forecasting

Applied Sciences, Conference Report. Training and Testing of a Single-Layer LSTM Network for Near-Future Solar Forecasting. Naylani Halpern-Wight 1,2,*,†, Maria Konstantinou 1, Alexandros G. Charalambides 1 and Angèle Reinders 2,3

1 Chemical Engineering Department, Cyprus University of Technology, Lemesos 3036, Cyprus; [email protected] (M.K.); [email protected] (A.G.C.)
2 Energy Technology Group, Department of Mechanical Engineering, Eindhoven University of Technology, 5612 AE Eindhoven, The Netherlands; [email protected]
3 Department of Design, Production and Management, Faculty of Engineering Technology, University of Twente, 7522 NB Enschede, The Netherlands
* Correspondence: [email protected] or [email protected]
† Current address: Archiepiskopou Kyprianou 30, Limassol 3036, Cyprus.

Received: 30 June 2020; Accepted: 31 July 2020; Published: 25 August 2020

Abstract: Increasing integration of renewable energy sources, like solar photovoltaic (PV), necessitates the development of power forecasting tools to predict power fluctuations caused by weather. With trustworthy and accurate solar power forecasting models, grid operators could easily determine when other dispatchable sources of backup power may be needed to account for fluctuations in PV power plants. Additionally, PV customers and designers would feel secure knowing how much energy to expect from their PV systems on an hourly, daily, monthly, or yearly basis. The PROGNOSIS project, based at the Cyprus University of Technology, is developing a tool for intra-hour solar irradiance forecasting. This article presents the design, training, and testing of a single-layer long-short-term-memory (LSTM) artificial neural network for intra-hour power forecasting of a single PV system in Cyprus.

Training DNNs: Tricks

Training DNNs: Tricks. Ju Sun, Computer Science & Engineering, University of Minnesota, Twin Cities. March 5, 2020.

Recap: last lecture

Training DNNs: $\min_{W} \frac{1}{m} \sum_{i=1}^{m} \ell\left(y_i, \mathrm{DNN}_W(x_i)\right) + \Omega(W)$

● What methods? Mini-batch stochastic optimization due to large $m$
○ SGD (with momentum), Adagrad, RMSprop, Adam
○ diminishing LR (1/t, exp decay, staircase decay)
● Where to start?
○ Xavier init., Kaiming init., orthogonal init.
● When to stop?
○ early stopping: stop when validation error doesn't improve

This lecture: additional tricks/heuristics that improve
● convergence speed
● task-specific (e.g., classification, regression, generation) performance

Outline: Data Normalization; Regularization; Hyperparameter search, data augmentation; Suggested reading

Why scaling matters?

Consider an ML objective: $\min_w f(w) = \frac{1}{m} \sum_{i=1}^{m} \ell\left(w^\top x_i; y_i\right)$, e.g.,
● Least-squares (LS): $\min_w \frac{1}{m} \sum_{i=1}^{m} \left\| y_i - w^\top x_i \right\|_2^2$
● Logistic regression: $\min_w -\frac{1}{m} \sum_{i=1}^{m} \left[ y_i w^\top x_i - \log\left(1 + e^{w^\top x_i}\right) \right]$
● Shallow NN prediction: $\min_w \frac{1}{m} \sum_{i=1}^{m} \left\| y_i - \sigma\left(w^\top x_i\right) \right\|_2^2$

Gradient: $\nabla_w f = \frac{1}{m} \sum_{i=1}^{m} \ell'\left(w^\top x_i; y_i\right) x_i$.
● What happens when coordinates (i.e., features) of $x_i$ have different orders of magnitude? Partial derivatives have different orders of magnitude $\Longrightarrow$ slow convergence of vanilla GD (recall why adaptive grad methods)

Hessian: $\nabla_w^2 f = \frac{1}{m} \sum_{i=1}^{m} \ell''\left(w^\top x_i; y_i\right) x_i x_i^\top$.
● Suppose the off-diagonal elements of $x_i x_i^\top$ are relatively small (e.g., when features are "independent").
● What happens when coordinates (i.e., features) of $x_i$ have different orders of magnitude? Conditioning of $\nabla_w^2 f$ is bad, i.e., $f$ is elongated

Fix the scaling: first idea

Normalization: make each feature zero-mean and unit variance, i.e., make each row of $X = [x_1, \dots, x_m]$ zero-mean and unit variance, i.e.

Temporal Convolutional Neural Network for the Classification Of

Article. Temporal Convolutional Neural Network for the Classification of Satellite Image Time Series. Charlotte Pelletier 1*, Geoffrey I. Webb 1 and François Petitjean 1

1 Faculty of Information Technology, Monash University, Melbourne, VIC, 3800
* Correspondence: [email protected]

Version February 1, 2019, submitted to Remote Sens.

Abstract: New remote sensing sensors now acquire high spatial and spectral Satellite Image Time Series (SITS) of the world. These series of images are a key component of classification systems that aim at obtaining up-to-date and accurate land cover maps of the Earth's surfaces. More specifically, the combination of the temporal, spectral and spatial resolutions of new SITS makes it possible to monitor vegetation dynamics. Although traditional classification algorithms, such as Random Forest (RF), have been successfully applied to SITS classification, these algorithms do not make the most of the temporal domain. Conversely, some approaches that take the temporal dimension into account have recently been tested, especially Recurrent Neural Networks (RNNs). This paper proposes an exhaustive study of another deep learning approach, namely Temporal Convolutional Neural Networks (TempCNNs), where convolutions are applied in the temporal dimension. The goal is to quantitatively and qualitatively evaluate the contribution of TempCNNs to SITS classification. This paper proposes a set of experiments performed on one million time series extracted from 46 Formosat-2 images. The experimental results show that TempCNNs are more accurate than RF and RNNs, which are the current state of the art for SITS classification. We also highlight some differences with results obtained in computer vision, e.g.

Introduction to Machine Learning: Examples of Unsupervised And

    from __future__ import division, print_function, absolute_import, unicode_literals

Introduction to Machine Learning: Examples of Unsupervised and Supervised Machine-Learning Algorithms

Version 0.1

Broadly speaking, machine-learning methods constitute a diverse collection of data-driven algorithms designed to classify/characterize/analyze sources in multi-dimensional spaces. The topics and studies that fall under the umbrella of machine learning are growing, and there is no good catch-all definition. The number (and variation) of algorithms is vast, and beyond the scope of these exercises. While we will discuss a few specific algorithms today, more importantly, we will explore the scope of the two general methods, unsupervised learning and supervised learning, and introduce the powerful (and dangerous?) Python package scikit-learn (http://scikit-learn.org/stable/).

By AA Miller (Jet Propulsion Laboratory, California Institute of Technology). (c) 2016 California Institute of Technology. Government sponsorship acknowledged.

    import numpy as np
    from astropy.table import Table
    import matplotlib.pyplot as plt
    %matplotlib inline

Problem 1) Introduction to scikit-learn

At the most basic level, scikit-learn makes machine learning extremely easy within Python. By way of example, here is a short piece of code that builds a complex, non-linear model to classify sources in the Iris data set that we learned about yesterday:

    from sklearn import datasets
    from sklearn.ensemble import RandomForestClassifier
    iris = datasets.load_iris()
    RFclf = RandomForestClassifier().fit(iris.data, iris.target)

Those 4 lines of code have constructed a model that is superior to any system of hard cuts that we could have encoded while looking at the multidimensional space.

Continuous State-Space Models for Optimal Sepsis Treatment-A Deep Reinforcement Learning Approach

Continuous State-Space Models for Optimal Sepsis Treatment - a Deep Reinforcement Learning Approach. Aniruddh Raghu [email protected], Matthieu Komorowski [email protected], Leo Anthony Celi [email protected], Peter Szolovits [email protected], Marzyeh Ghassemi [email protected]. Computer Science and Artificial Intelligence Lab, MIT, Cambridge, MA. arXiv:1705.08422v1 [cs.LG] 23 May 2017

Abstract: Sepsis is a leading cause of mortality in intensive care units (ICUs) and costs hospitals billions annually. Treating a septic patient is highly challenging, because individual patients respond very differently to medical interventions and there is no universally agreed-upon treatment for sepsis. Understanding more about a patient's physiological state at a given time could hold the key to effective treatment policies. In this work, we propose a new approach to deduce optimal treatment policies for septic patients by using continuous state-space models and deep reinforcement learning. Learning treatment policies over continuous spaces is important, because we retain more of the patient's physiological information. Our model is able to learn clinically interpretable treatment policies, similar in important aspects to the treatment policies of physicians. Evaluating our algorithm on past ICU patient data, we find that our model could reduce patient mortality in the hospital by up to 3.6% over observed clinical policies, from a baseline mortality of 13.7%. The learned treatment policies could be used to aid intensive care clinicians in medical decision making and improve the likelihood of patient survival.

1. Introduction: Sepsis (severe infections with organ failure) is a dangerous condition that costs hospitals billions of pounds in the UK alone (Vincent et al., 2006), and is a leading cause of patient mortality (Cohen et al., 2006).

Data Science Documentation Release 0.1

Data Science Documentation, Release 0.1. Jake Teo. Aug 06, 2021.

Contents

1 General Notes
  1.1 Virtual Environment
  1.2 Modeling

2 Learning
  2.1 Datasets
  2.2 Kaggle

3 Exploratory Analysis
  3.1 Univariate
  3.2 Multi-Variate

4 Feature Preprocessing
  4.1 Missing Values
  4.2 Outliers
  4.3 Encoding
  4.4 Coordinates

5 Feature Normalization
  5.1 Scaling
  5.2 Pipeline
  5.3 Persistence

6 Feature Engineering
  6.1 Manual
  6.2 Auto

7 Class Imbalance
  7.1 Over-Sampling
  7.2 Under-Sampling
  7.3 Under/Over-Sampling
  7.4 Cost Sensitive Classification

8 Data Leakage
  8.1 Examples

Deep Learning Vs. Standard Machine Learning in Classifying Beehive Audio Samples

Applied Sciences, Article. Toward Audio Beehive Monitoring: Deep Learning vs. Standard Machine Learning in Classifying Beehive Audio Samples. Vladimir Kulyukin *, Sarbajit Mukherjee and Prakhar Amlathe. Department of Computer Science, Utah State University, 4205 Old Main Hill, Logan, UT 84322-4205, USA; [email protected] (V.K.); [email protected] (S.M.); [email protected] (P.A.)
* Correspondence: [email protected]

Received: 31 May 2018; Accepted: 30 August 2018; Published: 6 September 2018

Abstract: Electronic beehive monitoring extracts critical information on colony behavior and phenology without invasive beehive inspections and transportation costs. As an integral component of electronic beehive monitoring, audio beehive monitoring has the potential to automate the identification of various stressors for honeybee colonies from beehive audio samples. In this investigation, we designed several convolutional neural networks and compared their performance with four standard machine learning methods (logistic regression, k-nearest neighbors, support vector machines, and random forests) in classifying audio samples from microphones deployed above landing pads of Langstroth beehives. On a dataset of 10,260 audio samples where the training and testing samples were separated from the validation samples by beehive and location, a shallower raw audio convolutional neural network with a custom layer outperformed three deeper raw audio convolutional neural networks without custom layers and performed on par with the four machine learning methods trained to classify feature vectors extracted from raw audio samples. On a more challenging dataset of 12,914 audio samples where the training and testing samples were separated from the validation samples by beehive, location, time, and bee race, all raw audio convolutional neural networks performed better than the four machine learning methods and a convolutional neural network trained to classify spectrogram images of audio samples.

Recurrent Neural Networks for Financial Asset Forecasting

Degree Project in Mathematics, Second Cycle, 30 Credits. Stockholm, Sweden 2018. Recurrent neural networks for financial asset forecasting. Gustaf Tegnér. KTH Royal Institute of Technology, School of Engineering Sciences.

Degree Projects in Mathematical Statistics (30 ECTS credits). Degree Programme in Applied and Computational Mathematics (120 credits). KTH Royal Institute of Technology, year 2018. Supervisor at Lynx Asset Management: Martin Rehn. Supervisor at KTH: Timo Koski. Examiner at KTH: Timo Koski. TRITA-SCI-GRU 2018:262, MAT-E 2018:60. Royal Institute of Technology, School of Engineering Sciences, KTH SCI, SE-100 44 Stockholm, Sweden. URL: www.kth.se/sci

Abstract (translated from the Swedish "Sammanfattning"): The application of neural networks in finance has received renewed interest in recent years. Neural networks have a recognized ability to model non-linear relationships and have proven useful in areas such as image and speech recognition. These properties make neural networks an attractive choice of model for studying the financial market.

This thesis studies the use of recurrent neural networks for predicting future price movements of a number of futures contracts. To facilitate our analysis, we compare these networks with a set of simple feed-forward networks. We then go deeper into the analysis by comparing different objective functions for the networks and how they affect the networks' performance. We then extend this discussion by also examining multi-loss networks. The use of multiple loss functions shows the importance of our selection of features from the input data. We study a few simple and complex features and how they affect our model.