Finding and Understanding Bugs in C Compilers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Glibc and System Calls Documentation Release 1.0

Glibc and System Calls Documentation Release 1.0 Rishi Agrawal <[email protected]> Dec 28, 2017 Contents 1 Introduction 1 1.1 Acknowledgements...........................................1 2 Basics of a Linux System 3 2.1 Introduction...............................................3 2.2 Programs and Compilation........................................3 2.3 Libraries.................................................7 2.4 System Calls...............................................7 2.5 Kernel.................................................. 10 2.6 Conclusion................................................ 10 2.7 References................................................ 11 3 Working with glibc 13 3.1 Introduction............................................... 13 3.2 Why this chapter............................................. 13 3.3 What is glibc .............................................. 13 3.4 Download and extract glibc ...................................... 14 3.5 Walkthrough glibc ........................................... 14 3.6 Reading some functions of glibc ................................... 17 3.7 Compiling and installing glibc .................................... 18 3.8 Using new glibc ............................................ 21 3.9 Conclusion................................................ 23 4 System Calls On x86_64 from User Space 25 4.1 Setting Up Arguements......................................... 25 4.2 Calling the System Call......................................... 27 4.3 Retrieving the Return Value...................................... -

Preview Objective-C Tutorial (PDF Version)

Objective-C Objective-C About the Tutorial Objective-C is a general-purpose, object-oriented programming language that adds Smalltalk-style messaging to the C programming language. This is the main programming language used by Apple for the OS X and iOS operating systems and their respective APIs, Cocoa and Cocoa Touch. This reference will take you through simple and practical approach while learning Objective-C Programming language. Audience This reference has been prepared for the beginners to help them understand basic to advanced concepts related to Objective-C Programming languages. Prerequisites Before you start doing practice with various types of examples given in this reference, I'm making an assumption that you are already aware about what is a computer program, and what is a computer programming language? Copyright & Disclaimer © Copyright 2015 by Tutorials Point (I) Pvt. Ltd. All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book can retain a copy for future reference but commercial use of this data is not allowed. Distribution or republishing any content or a part of the content of this e-book in any manner is also not allowed without written consent of the publisher. We strive to update the contents of our website and tutorials as timely and as precisely as possible, however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness or completeness of our website or its contents including this tutorial. If you discover any errors on our website or in this tutorial, please notify us at [email protected] ii Objective-C Table of Contents About the Tutorial .................................................................................................................................. -

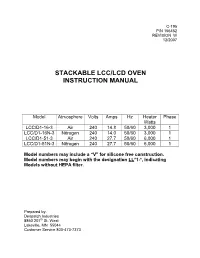

Stackable Lcc/Lcd Oven Instruction Manual

C-195 P/N 156452 REVISION W 12/2007 STACKABLE LCC/LCD OVEN INSTRUCTION MANUAL Model Atmosphere Volts Amps Hz Heater Phase Watts LCC/D1-16-3 Air 240 14.8 50/60 3,000 1 LCC/D1-16N-3 Nitrogen 240 14.0 50/60 3,000 1 LCC/D1-51-3 Air 240 27.7 50/60 6,000 1 LCC/D1-51N-3 Nitrogen 240 27.7 50/60 6,000 1 Model numbers may include a “V” for silicone free construction. Model numbers may begin with the designation LL *1-*, indicating Models without HEPA filter. Prepared by: Despatch Industries 8860 207 th St. West Lakeville, MN 55044 Customer Service 800-473-7373 NOTICE Users of this equipment must comply with operating procedures and training of operation personnel as required by the Occupational Safety and Health Act (OSHA) of 1970, Section 6 and relevant safety standards, as well as other safety rules and regulations of state and local governments. Refer to the relevant safety standards in OSHA and National Fire Protection Association (NFPA), section 86 of 1990. CAUTION Setup and maintenance of the equipment should be performed by qualified personnel who are experienced in handling all facets of this type of system. Improper setup and operation of this equipment could cause an explosion that may result in equipment damage, personal injury or possible death. Dear Customer, Thank you for choosing Despatch Industries. We appreciate the opportunity to work with you and to meet your heat processing needs. We believe that you have selected the finest equipment available in the heat processing industry. -

The Lcc 4.X Code-Generation Interface

The lcc 4.x Code-Generation Interface Christopher W. Fraser and David R. Hanson Microsoft Research [email protected] [email protected] July 2001 Technical Report MSR-TR-2001-64 Abstract Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler: Design and Implementation. This report details the lcc 4.x code- generation interface, which defines the interaction between the target- independent front end and the target-dependent back ends. This interface differs from the interface described in Chap. 5 of A Retargetable C Com- piler. Additional infomation about lcc is available at http://www.cs.princ- eton.edu/software/lcc/. Microsoft Research Microsoft Corporation One Microsoft Way Redmond, WA 98052 http://www.research.microsoft.com/ The lcc 4.x Code-Generation Interface 1. Introduction Lcc is a widely used compiler for Standard C described in A Retargetable C Compiler [1]. Version 4.x is the current release of lcc, and it uses a different code-generation interface than the inter- face described in Chap. 5 of Reference 1. This report details the 4.x interface. Lcc distributions are available at http://www.cs.princeton.edu/software/lcc/ along with installation instruc- tions [2]. The code generation interface specifies the interaction between lcc’s target-independent front end and target-dependent back ends. The interface consists of a few shared data structures, a 33-operator language, which encodes the executable code from a source program in directed acyclic graphs, or dags, and 18 functions, that manipulate or modify dags and other shared data structures. On most targets, implementations of many of these functions are very simple. -

Undefined Behaviour in the C Language

FAKULTA INFORMATIKY, MASARYKOVA UNIVERZITA Undefined Behaviour in the C Language BAKALÁŘSKÁ PRÁCE Tobiáš Kamenický Brno, květen 2015 Declaration Hereby I declare, that this paper is my original authorial work, which I have worked out by my own. All sources, references, and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Vedoucí práce: RNDr. Adam Rambousek ii Acknowledgements I am very grateful to my supervisor Miroslav Franc for his guidance, invaluable help and feedback throughout the work on this thesis. iii Summary This bachelor’s thesis deals with the concept of undefined behavior and its aspects. It explains some specific undefined behaviors extracted from the C standard and provides each with a detailed description from the view of a programmer and a tester. It summarizes the possibilities to prevent and to test these undefined behaviors. To achieve that, some compilers and tools are introduced and further described. The thesis contains a set of example programs to ease the understanding of the discussed undefined behaviors. Keywords undefined behavior, C, testing, detection, secure coding, analysis tools, standard, programming language iv Table of Contents Declaration ................................................................................................................................ ii Acknowledgements .................................................................................................................. iii Summary ................................................................................................................................. -

Defining the Undefinedness of C

Technical Report: Defining the Undefinedness of C Chucky Ellison Grigore Ros, u University of Illinois {celliso2,grosu}@illinois.edu Abstract naturally capture undefined behavior simply by exclusion, be- This paper investigates undefined behavior in C and offers cause of the complexity of undefined behavior, it takes active a few simple techniques for operationally specifying such work to avoid giving many undefined programs semantics. behavior formally. A semantics-based undefinedness checker In addition, capturing the undefined behavior is at least as for C is developed using these techniques, as well as a test important as capturing the defined behavior, as it represents suite of undefined programs. The tool is evaluated against a source of many subtle program bugs. While a semantics other popular analysis tools, using the new test suite in of defined programs can be used to prove their behavioral addition to a third-party test suite. The semantics-based tool correctness, any results are contingent upon programs actu- performs at least as well or better than the other tools tested. ally being defined—it takes a semantics capturing undefined behavior to decide whether this is the case. 1. Introduction C, together with C++, is the king of undefined behavior—C has over 200 explicitly undefined categories of behavior, and A programming language specification or semantics has dual more that are left implicitly undefined [11]. Many of these duty: to describe the behavior of correct programs and to behaviors can not be detected statically, and as we show later identify incorrect programs. The process of identifying incor- (Section 2.6), detecting them is actually undecidable even rect programs can also be seen as describing which programs dynamically. -

The Glib/GTK+ Development Platform

The GLib/GTK+ Development Platform A Getting Started Guide Version 0.8 Sébastien Wilmet March 29, 2019 Contents 1 Introduction 3 1.1 License . 3 1.2 Financial Support . 3 1.3 Todo List for this Book and a Quick 2019 Update . 4 1.4 What is GLib and GTK+? . 4 1.5 The GNOME Desktop . 5 1.6 Prerequisites . 6 1.7 Why and When Using the C Language? . 7 1.7.1 Separate the Backend from the Frontend . 7 1.7.2 Other Aspects to Keep in Mind . 8 1.8 Learning Path . 9 1.9 The Development Environment . 10 1.10 Acknowledgments . 10 I GLib, the Core Library 11 2 GLib, the Core Library 12 2.1 Basics . 13 2.1.1 Type Definitions . 13 2.1.2 Frequently Used Macros . 13 2.1.3 Debugging Macros . 14 2.1.4 Memory . 16 2.1.5 String Handling . 18 2.2 Data Structures . 20 2.2.1 Lists . 20 2.2.2 Trees . 24 2.2.3 Hash Tables . 29 2.3 The Main Event Loop . 31 2.4 Other Features . 33 II Object-Oriented Programming in C 35 3 Semi-Object-Oriented Programming in C 37 3.1 Header Example . 37 3.1.1 Project Namespace . 37 3.1.2 Class Namespace . 39 3.1.3 Lowercase, Uppercase or CamelCase? . 39 3.1.4 Include Guard . 39 3.1.5 C++ Support . 39 1 3.1.6 #include . 39 3.1.7 Type Definition . 40 3.1.8 Object Constructor . 40 3.1.9 Object Destructor . -

Improving System Security Through TCB Reduction

Improving System Security Through TCB Reduction Bernhard Kauer March 31, 2015 Dissertation vorgelegt an der Technischen Universität Dresden Fakultät Informatik zur Erlangung des akademischen Grades Doktoringenieur (Dr.-Ing.) Erstgutachter Prof. Dr. rer. nat. Hermann Härtig Technische Universität Dresden Zweitgutachter Prof. Dr. Paulo Esteves Veríssimo University of Luxembourg Verteidigung 15.12.2014 Abstract The OS (operating system) is the primary target of todays attacks. A single exploitable defect can be sufficient to break the security of the system and give fully control over all the software on the machine. Because current operating systems are too large to be defect free, the best approach to improve the system security is to reduce their code to more manageable levels. This work shows how the security-critical part of theOS, the so called TCB (Trusted Computing Base), can be reduced from millions to less than hundred thousand lines of code to achieve these security goals. Shrinking the software stack by more than an order of magnitude is an open challenge since no single technique can currently achieve this. We therefore followed a holistic approach and improved the design as well as implementation of several system layers starting with a newOS called NOVA. NOVA provides a small TCB for both newly written applications but also for legacy code running inside virtual machines. Virtualization is thereby the key technique to ensure that compatibility requirements will not increase the minimal TCB of our system. The main contribution of this work is to show how the virtual machine monitor for NOVA was implemented with significantly less lines of code without affecting the per- formance of its guest OS. -

The Art, Science, and Engineering of Fuzzing: a Survey

1 The Art, Science, and Engineering of Fuzzing: A Survey Valentin J.M. Manes,` HyungSeok Han, Choongwoo Han, Sang Kil Cha, Manuel Egele, Edward J. Schwartz, and Maverick Woo Abstract—Among the many software vulnerability discovery techniques available today, fuzzing has remained highly popular due to its conceptual simplicity, its low barrier to deployment, and its vast amount of empirical evidence in discovering real-world software vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or semantically malformed. While researchers and practitioners alike have invested a large and diverse effort towards improving fuzzing in recent years, this surge of work has also made it difficult to gain a comprehensive and coherent view of fuzzing. To help preserve and bring coherence to the vast literature of fuzzing, this paper presents a unified, general-purpose model of fuzzing together with a taxonomy of the current fuzzing literature. We methodically explore the design decisions at every stage of our model fuzzer by surveying the related literature and innovations in the art, science, and engineering that make modern-day fuzzers effective. Index Terms—software security, automated software testing, fuzzing. ✦ 1 INTRODUCTION Figure 1 on p. 5) and an increasing number of fuzzing Ever since its introduction in the early 1990s [152], fuzzing studies appear at major security conferences (e.g. [225], has remained one of the most widely-deployed techniques [52], [37], [176], [83], [239]). In addition, the blogosphere is to discover software security vulnerabilities. At a high level, filled with many success stories of fuzzing, some of which fuzzing refers to a process of repeatedly running a program also contain what we consider to be gems that warrant a with generated inputs that may be syntactically or seman- permanent place in the literature. -

User's Manual

rBOX610 Linux Software User’s Manual Disclaimers This manual has been carefully checked and believed to contain accurate information. Axiomtek Co., Ltd. assumes no responsibility for any infringements of patents or any third party’s rights, and any liability arising from such use. Axiomtek does not warrant or assume any legal liability or responsibility for the accuracy, completeness or usefulness of any information in this document. Axiomtek does not make any commitment to update the information in this manual. Axiomtek reserves the right to change or revise this document and/or product at any time without notice. No part of this document may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of Axiomtek Co., Ltd. Trademarks Acknowledgments Axiomtek is a trademark of Axiomtek Co., Ltd. ® Windows is a trademark of Microsoft Corporation. Other brand names and trademarks are the properties and registered brands of their respective owners. Copyright 2014 Axiomtek Co., Ltd. All Rights Reserved February 2014, Version A2 Printed in Taiwan ii Table of Contents Disclaimers ..................................................................................................... ii Chapter 1 Introduction ............................................. 1 1.1 Specifications ...................................................................................... 2 Chapter 2 Getting Started ...................................... -

Compiler for Tiny Register Machine

COMPILER FOR TINY REGISTER MACHINE An Undergraduate Research Scholars Thesis by SIDIAN WU Submitted to Honors and Undergraduate Research Texas A&M University in partial fulfillment of the requirements for the designation as an UNDERGRADUATE RESEARCH SCHOLAR Approved by Research Advisor: Dr. Riccardo Bettati May 2015 Major: Computer Science TABLE OF CONTENTS Page ABSTRACT .................................................................................................................................. 1 CHAPTER I INTRODUCTION ................................................................................................ 2 II BACKGROUND .................................................................................................. 5 Tiny Register Machine .......................................................................................... 5 Computer Language syntax .................................................................................. 6 Compiler ............................................................................................................... 7 III COMPILER DESIGN ......................................................................................... 12 Symbol Table ...................................................................................................... 12 Memory Layout .................................................................................................. 12 Recursive Descent Parsing .................................................................................. 13 IV CONCLUSIONS -

Linux Assembly HOWTO Linux Assembly HOWTO

Linux Assembly HOWTO Linux Assembly HOWTO Table of Contents Linux Assembly HOWTO..................................................................................................................................1 Konstantin Boldyshev and François−René Rideau................................................................................1 1.INTRODUCTION................................................................................................................................1 2.DO YOU NEED ASSEMBLY?...........................................................................................................1 3.ASSEMBLERS.....................................................................................................................................1 4.METAPROGRAMMING/MACROPROCESSING............................................................................2 5.CALLING CONVENTIONS................................................................................................................2 6.QUICK START....................................................................................................................................2 7.RESOURCES.......................................................................................................................................2 1. INTRODUCTION...............................................................................................................................2 1.1 Legal Blurb........................................................................................................................................2