Resource Management: Linux Kernel Namespaces and Cgroups

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Improving Route Scalability: Nexthops As Separate Objects

Improving Route Scalability: Nexthops as Separate Objects September 2019 David Ahern | Cumulus Networks Agenda Executive Summary ▪ If you remember nothing else about this talk … Driving use case Review legacy route API Dive into Nexthop API Benefits of the new API Performance with the Legacy Route API [Diagram: many routes, each carrying its own prefix/len, dev, and gateway] Splitting Next Hops from Routes [Diagram: Legacy Route API, where every route holds prefix/len, dev, and gateway, compared with routes with separate nexthop objects, where a route holds prefix/len and a nexthop id that refers either to a nexthop (dev, gateway) or to a nexthop group of nexthop[N]] Dramatically Improves Route Scalability … … with the Potential for Constant Insert Times Networking Operating System Using Linux APIs Routing daemon or utility manages entries in kernel FIBs via rtnetlink APIs ▪ Enables other control plane software to use Linux networking APIs Data path connections, stats, troubleshooting, … Management of hardware offload is separate ▪ Keeps hardware in sync with kernel Userspace driver with SDK leveraging kernel notifications [Diagram: userspace (switchd, ip, FRR, SDK); rtnetlink and FIB notifications; kernel (FIB, upper devices, tunnels, eth0, swp1, swp2, ... swpN, drivers); H/W (NIC, switch ASIC)] NOS with switchdev Driver In-kernel switchdev driver Leverages -

Checkpoint and Restoration of Micro-Service in Docker Containers

3rd International Conference on Mechatronics and Industrial Informatics (ICMII 2015) Checkpoint and Restoration of Micro-service in Docker Containers Chen Yang School of Information Security Engineering, Shanghai Jiao Tong University, China 200240 [email protected] Keywords: Lightweight Virtualization, Checkpoint/restore, Docker. Abstract. With the rapid adoption of micro-services, it is imperative to build a system that supports and ensures high performance and high availability for micro-services. Lightweight virtualization, also called containers, can run multiple isolated sets of processes under a single kernel instance. Because containers can achieve low overhead, with performance close to that of a bare server, container techniques such as OpenVZ, LXC, and Docker are widely used for micro-services [1]. In this paper, we present the high availability of micro-services in containers. We investigate the capabilities provided by containers (Docker, OpenVZ) to model and build the micro-service infrastructure, and we compare different checkpoint and restore technologies for high availability. Finally, we present preliminary performance results of the infrastructure tuned to micro-services. Introduction Lightweight virtualization, also known as operating-system-level virtualization, partitions a physical machine's resources, creating multiple isolated user-space instances. Each container acts exactly like a stand-alone server. A container can be rebooted independently and has its own root access, users, IP address, memory, processes, files, etc. Unlike traditional virtualization with a hypervisor layer, containerization takes place at the kernel level. Most modern operating system kernels now support the primitives necessary for containerization, including Linux with OpenVZ, VServer, and more recently LXC, Solaris with Zones, and FreeBSD with Jails [2]. -

Desktop Migration and Administration Guide

Red Hat Enterprise Linux 7 Desktop Migration and Administration Guide GNOME 3 desktop migration planning, deployment, configuration, and administration in RHEL 7 Last Updated: 2021-05-05 Marie Doleželová Red Hat Customer Content Services [email protected] Petr Kovář Red Hat Customer Content Services [email protected] Jana Heves Red Hat Customer Content Services Legal Notice Copyright © 2018 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

CST8207 – Linux O/S I

Mounting a Filesystem: Directory Structure, Fstab, Mount command CST8207 - Algonquin College Chapter 12: pages 467-496 The mount utility connects filesystems to the Linux directory hierarchy. The mount point is a directory in the local filesystem where you can access the mounted filesystem. This directory must exist before you can mount a filesystem. All filesystems visible on the system exist as mounted filesystems somewhere below the root (/) directory. A filesystem can be mounted manually ◦ it can be listed in /etc/fstab, but this is not necessary ◦ all mounting information can be supplied manually at the command line by the user or administrator A filesystem can be mounted automatically on startup ◦ it must be listed in /etc/fstab, with all appropriate information and options required Every filesystem, drive, or storage device is listed as a mounted filesystem associated with a directory somewhere under the root (/) directory. Benefits Scalable ◦ As new drives are added and new partitions are created, further filesystems can be mounted at various mount points as required. ◦ This means a Linux system does not need to worry about running out of disk space. Transparent ◦ No application would stop working if transferred to a different partition, because access to data is done via the mount point. ◦ Also transparent to the user All known filesystem volumes are typically listed in the /etc/fstab (static information about filesystems) file to help automate the mounting process. If a filesystem is not listed in /etc/fstab, then all appropriate information about it needs to be supplied manually at the command line. -

Security Assurance Requirements for Linux Application Container Deployments

NISTIR 8176 Security Assurance Requirements for Linux Application Container Deployments Ramaswamy Chandramouli Computer Security Division Information Technology Laboratory This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 October 2017 U.S. Department of Commerce Wilbur L. Ross, Jr., Secretary National Institute of Standards and Technology Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology National Institute of Standards and Technology Internal Report 8176 37 pages (October 2017) Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose. There may be references in this publication to other publications currently under development by NIST in accordance with its assigned statutory responsibilities. The information in this publication, including concepts and methodologies, may be used by federal agencies even before the completion of such companion publications. Thus, until each publication is completed, current requirements, guidelines, and procedures, where they exist, remain operative. For planning and transition purposes, federal agencies may wish to closely follow the development of these new publications by NIST. -

An Introduction to Linux IPC

An introduction to Linux IPC Michael Kerrisk © 2013 linux.conf.au 2013 http://man7.org/ Canberra, Australia [email protected] 2013-01-30 http://lwn.net/ [email protected] Goal ● Limited time! ● Get a flavor of main IPC methods Me ● Programming on UNIX & Linux since 1987 ● Linux man-pages maintainer ● http://www.kernel.org/doc/man-pages/ ● Kernel + glibc API ● Author of: Further info: http://man7.org/tlpi/ You ● Can read a bit of C ● Have a passing familiarity with common syscalls ● fork(), open(), read(), write() There's a lot of IPC ● Pipes ● FIFOs ● Pseudoterminals ● Sockets (Stream vs Datagram (vs Seq. packet); UNIX vs Internet domain) ● POSIX message queues ● POSIX shared memory ● POSIX semaphores (Named, Unnamed) ● System V message queues ● System V shared memory ● System V semaphores ● Shared memory mappings (File vs Anonymous) ● Cross-memory attach (process_vm_readv() / process_vm_writev()) ● Signals (Standard, Realtime) ● Eventfd ● Futexes ● Record locks ● File locks ● Mutexes ● Condition variables ● Barriers ● Read-write locks It helps to classify -

Linux and Free Software: What and Why?

Linux and Free Software: What and Why? (What are Linux and Free Software, and how does their use help businesses achieve economic productivity and technical advantages?) Jugo Creativo Michael Kerrisk Universidad de Santander UDES © 2012 Bucaramanga, Colombia [email protected] 7 June 2012 http://man7.org/ Who am I? ● Programmer, educator, and writer ● UNIX since 1987; Linux since late 1990s ● Linux man-pages maintainer since 2004 ● Author of a book on Linux programming Overview ● What is Linux? ● How are Linux and Free Software created? ● History ● Where is Linux used today? ● What is Free Software? ● Source code; Software licensing ● Importance and advantages of Free Software and Software Freedom ● Concluding remarks What is Linux? ● An operating system (sistema operativo) ● (Operating System = OS) ● Examples of other operating systems: ● Windows ● Mac OS X Penguins are the Linux mascot But, what's an operating system? ● Two definitions: ● Kernel ● Kernel + package of common programs OS Definition 1: Kernel ● Computer scientists' definition: ● Operating System = Kernel (núcleo) ● Kernel = fundamental program on which all other programs depend Programs can live -

In Search of the Ideal Storage Configuration for Docker Containers

In Search of the Ideal Storage Configuration for Docker Containers Vasily Tarasov (1), Lukas Rupprecht (1), Dimitris Skourtis (1), Amit Warke (1), Dean Hildebrand (1), Mohamed Mohamed (1), Nagapramod Mandagere (1), Wenji Li (2), Raju Rangaswami (3), Ming Zhao (2) (1) IBM Research - Almaden (2) Arizona State University (3) Florida International University Abstract: Containers are a widely successful technology today popularized by Docker. Containers improve system utilization by increasing workload density. Docker containers enable seamless deployment of workloads across development, test, and production environments. Docker's unique approach to data management, which involves frequent snapshot creation and removal, presents a new set of exciting challenges for storage systems. At the same time, storage management for Docker containers has remained largely unexplored with a dizzying array of solution choices and configuration options. In this paper we unravel the multi-faceted nature of Docker storage and demonstrate its impact on system and workload performance. As we uncover new properties of the popular Docker storage drivers, this is a sobering reminder that widespread use of new technologies can often precede their careful evaluation. [Introduction, excerpt begins mid-sentence] ... every running container. This would cause a great burden on the I/O subsystem and make container start time unacceptably high for many workloads. As a result, copy-on-write (CoW) storage and storage snapshots are popularly used and images are structured in layers. A layer consists of a set of files and layers with the same content can be shared across images, reducing the amount of storage required to run containers. With Docker, one can choose Aufs [6], Overlay2 [7], Btrfs [8], or device-mapper (dm) [9] as storage drivers which provide the required snapshotting and CoW capabilities for images. None of these solutions, however, were designed with Docker in mind and their effectiveness for Docker has not been -

MesaLock Linux: Towards a Memory-Safe Linux Distribution

MesaLock Linux Towards a memory-safe Linux distribution Mingshen Sun MesaLock Linux Maintainer | Baidu X-Lab, USA Shanghai Jiao Tong University, 2018 whoami • Senior Security Researcher at Baidu X-Lab, Baidu USA • PhD, The Chinese University of Hong Kong • System security, mobile security, IoT security, and car hacking • MesaLock Linux, TaintART, Pass for iOS, etc. • mssun @ GitHub | https://mssun.me MesaLock Linux • Why • What • How Why • Memory corruption occurs in a computer program when the contents of a memory location are unintentionally modified; this is termed violating memory safety. • Memory safety is the state of being protected from various software bugs and security vulnerabilities when dealing with memory access, such as buffer overflows and dangling pointers. Stack Buffer Overflow • https://youtu.be/T03idxny9jE Types of memory errors • Access errors • Buffer overflow • Race condition • Use after free • Uninitialized variables • Memory leak • Double free Memory-safety in user space • CVE-2017-13089 wget: Stack-based buffer overflow in HTTP protocol handling • A stack-based buffer overflow when processing chunked, encoded HTTP responses was found in wget. By tricking an unsuspecting user into connecting to a malicious HTTP server, an attacker could exploit this flaw to potentially execute arbitrary code. • https://bugzilla.redhat.com/show_bug.cgi?id=1505444 • POC: https://github.com/r1b/CVE-2017-13089 What • Linux distribution • Memory-safe user space Linux Distribution • A Linux distribution (often abbreviated as distro) is an operating system made from a software collection, which is based upon the Linux kernel and, often, a package management system. Linux Distros • Server: CentOS, Fedora, RedHat, Debian • Desktop: Ubuntu • Mobile: Android • Embedded: OpenWRT, Yocto • Hard-core: Arch Linux, Gentoo • Misc: ChromeOS, Alpine Linux Security and Safety? • Gentoo Hardened: enables several risk-mitigating options in the toolchain, supports PaX, grSecurity, SELinux, TPE and more. -

1. D-Bus: According to the D-Bus FAQ, D-Bus Is an Interprocess Communication Protocol and Its Reference Implementation

The Udev / D-Bus system: the foundation of modern desktop Linux. The D-Bus system is present in every modern Linux distribution. It has become one of the most fundamental technologies of Linux and of other UNIX-like, and even non-UNIX, systems (especially the variants that run on the desktop). Knowing it gives system administrators several extremely useful tricks, and for application developers knowing it is simply MANDATORY. Why is D-Bus so important? What does it do? Among other things, D-Bus-based technology makes it possible for us, logged in to our favorite desktop environment as ordinary users, to carry out tasks that the kernel would permit only to the root user. Mounting a USB drive? Using NetworkManager to configure Wi-Fi, 3G internet, or any other network interface, and connecting to a network? Receiving a notification from the system that new software updates have arrived, and then installing them? Hibernating or suspending the machine? In most such cases today we are using D-Bus-based technology. D-Bus allows software components that are started independently of one another, typically under different UIDs, to use each other's services in a standard and secure way. If there is ever a professional desktop firewall or antivirus solution for Linux, then as things currently stand it will also have to use D-Bus technology. The most important inspiration for D-Bus was KDE's DCOP system, and by now D-Bus has replaced DCOP, as well as GNOME's Bonobo technology. 1. D-Bus According to the D-Bus FAQ, D-Bus is an interprocess communication protocol and its reference implementation. One component of this reference implementation, the libdbus library, helps implement communication that conforms to the D-Bus standard. Another component, dbus-daemon, is responsible for routing and broadcasting D-Bus messages. -

Hypervisors Vs. Lightweight Virtualization: a Performance Comparison

2015 IEEE International Conference on Cloud Engineering Hypervisors vs. Lightweight Virtualization: a Performance Comparison Roberto Morabito, Jimmy Kjällman, and Miika Komu Ericsson Research, NomadicLab Jorvas, Finland [email protected], [email protected], [email protected] Abstract: Virtualization of operating systems provides a common way to run different services in the cloud. Recently, the lightweight virtualization technologies claim to offer superior performance. In this paper, we present a detailed performance comparison of traditional hypervisor based virtualization and new lightweight solutions. In our measurements, we use several benchmarks tools in order to understand the strengths, weaknesses, and anomalies introduced by these different platforms in terms of processing, storage, memory and network. Our results show that containers achieve generally better performance when compared with traditional virtual machines and other recent solutions. Albeit containers offer clearly more dense deployment of virtual machines, the performance difference with other technologies is in many cases relatively small. [Introduction, excerpt begins mid-sentence] ... container and alternative solutions. The idea is to quantify the level of overhead introduced by these platforms and the existing gap compared to a non-virtualized environment. The remainder of this paper is structured as follows: in Section II, literature review and a brief description of all the technologies and platforms evaluated is provided. The methodology used to realize our performance comparison is introduced in Section III. The benchmark results are presented in Section IV. Finally, some concluding remarks and future work are provided in Section V. II. BACKGROUND AND RELATED WORK In this section, we provide an overview of the different technologies included in the performance comparison. -

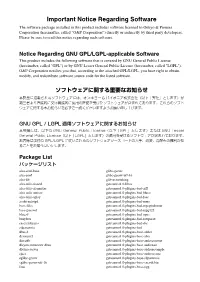

Important Notice Regarding Software

Important Notice Regarding Software The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter called "O&P Corporation") directly or indirectly by third party developers. Please be sure to read this notice regarding such software. Notice Regarding GNU GPL/LGPL-applicable Software This product includes the following software that is covered by the GNU General Public License (hereinafter called "GPL") or by the GNU Lesser General Public License (hereinafter called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have the right to obtain, modify, and redistribute the software source code for the listed software. Package List: alsa-conf-base, alsa-conf, alsa-lib, alsa-utils-alsactl, alsa-utils-alsamixer, alsa-utils-amixer, alsa-utils-aplay, avahi-autoipd, base-files, base-passwd, bluez5, busybox, glibc-gconv, glibc-gconv-utf-16, glib-networking, gstreamer1.0-libav, gstreamer1.0-plugins-bad-aiff, gstreamer1.0-plugins-bad-bluez, gstreamer1.0-plugins-bad-faac, gstreamer1.0-plugins-bad-mms, gstreamer1.0-plugins-bad-mpegtsdemux, gstreamer1.0-plugins-bad-mpg123, gstreamer1.0-plugins-bad-opus, gstreamer1.0-plugins-bad-rawparse