7 Passthrough+: Real-Time Stereoscopic View Synthesis for Mobile Mixed Reality

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Cybersickness in Head-Mounted Displays Is Caused by Differences in the User's Virtual and Physical Head Pose

University of Wollongong Research Online. Faculty of Arts, Social Sciences and Humanities - Papers, January 2020. Stephen Palmisano (University of Wollongong), Robert S. Allison, Juno Kim.

Recommended Citation: Palmisano, Stephen; Allison, Robert S.; and Kim, Juno, "Cybersickness in Head-Mounted Displays Is Caused by Differences in the User's Virtual and Physical Head Pose" (2020). Faculty of Arts, Social Sciences and Humanities - Papers. 389. https://ro.uow.edu.au/asshpapers/389

Abstract: Sensory conflict, eye-movement, and postural instability theories each have difficulty accounting for the motion sickness experienced during head-mounted display based virtual reality (HMD VR). In this paper we review the limitations of existing theories in explaining cybersickness and propose a practical alternative approach. We start by providing a clear operational definition of provocative motion stimulation during active HMD VR. In this situation, whenever the user makes a head movement, his/her virtual head will tend to trail its true position and orientation due to the display lag (or motion to photon latency). Importantly, these differences in virtual and physical head pose (DVP) will vary over time.
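The idea that the virtual head trails the physical head by the display lag can be illustrated with a small numerical sketch. This is not the authors' model: it assumes a hypothetical sinusoidal head turn and assumes the displayed pose is simply the physical pose from `lag_s` seconds earlier. The function names, amplitude, and frequency are all illustrative choices.

```python
import math


def head_yaw_deg(t, amplitude_deg=30.0, freq_hz=0.5):
    """Hypothetical physical head yaw in degrees: a smooth side-to-side turn."""
    return amplitude_deg * math.sin(2.0 * math.pi * freq_hz * t)


def dvp_yaw_deg(t, lag_s):
    """Difference between virtual and physical yaw at time t.

    Assumption: the virtual head shows the physical pose from lag_s seconds ago.
    """
    return head_yaw_deg(t - lag_s) - head_yaw_deg(t)


def dvp_series(lag_s, duration_s=2.0, step_s=0.01):
    """Sample the yaw difference over one stretch of head movement."""
    n = int(round(duration_s / step_s)) + 1
    return [dvp_yaw_deg(i * step_s, lag_s) for i in range(n)]


if __name__ == "__main__":
    for lag_ms in (2, 20, 100):
        series = dvp_series(lag_ms / 1000.0)
        peak = max(abs(v) for v in series)
        print(f"lag {lag_ms} ms: peak yaw difference {peak:.3f} deg")
```

Under these assumptions the difference is zero only when the head is momentarily matched, and its peak grows with the lag, which is consistent with the excerpt's point that DVP varies over time during head movement.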

Lab Report Template

VIRTUAL AND AUGMENTED REALITY. Juan Jose Castano Moreno, Department of Engineering, Elizabethtown College, Elizabethtown, PA 17022 USA

I. ABSTRACT
Augmented and virtual reality have been booming in recent decades. However, their differences and characteristics are not commonly discussed or well known. This paper will provide a deeper insight into what exactly these technologies are. Furthermore, their current applications and developments will also be discussed. Based on all of this information, the future scope of these technologies will be analyzed as well.

II. INTRODUCTION
In recent decades, two of the technologies that have been most developed are Virtual Reality (VR) and Augmented Reality (AR). It is of special interest to many companies, like Apple Inc., to take these technologies to the next level.

On the one hand, virtual reality consists of a computer-generated virtual environment. It is the use of a specialized headset to get immersed in a totally digital world. It is widely used in the videogame industry and also in learning environments. On the other hand, augmented reality is the combination of computer-generated data with the real world. In AR, the real world serves as a base to be enhanced by the virtual elements added by the computer, as in Pokémon Go. According to Dawait Amit Vyas and Dvijesh Bhatt [2], “One can envision AR as a technology in which one could see more than others see, hear more than what others hear and perhaps even touch and feel, smell and taste things that other people cannot feel.” These definitions are crucial to understand the ...

Laser Scanning for Bim and Results Visualization Using Vr

The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-5/W2, 2019 Measurement, Visualisation and Processing in BIM for Design and Construction Management, 24–25 September 2019, Prague, Czech Republic LASER SCANNING FOR BIM AND RESULTS VISUALIZATION USING VR K. Pavelka, jr.1, B. Michalík 2 1 Czech Technical University in Prague, Faculty of Civil Engineering, dept. of Geomatics, Thakurova 7, Prague 6,16629 [email protected] 2 G4D s.r.o., Prague, Czech Republic, Hauptova 594 156 00 Praha 5-Zbraslav, Czech Republic, [email protected] KEY WORDS: Virtual reality, photogrammetry, laser scanning, BIM, documentation ABSTRACT: Virtual Reality (VR) is a highly topical subject in many branches of science and industry. Thanks to the rapid development and advancement of computer technology in recent years, it can now be used to a large extent, with more detail to show and is now more affordable than before. The use of virtual reality is currently devoted to many disciplines and it can be expected that its popularity will grow progressively over the next few years. The Laboratory of Photogrammetry at the Czech Technical University in Prague is also interested in VR and focuses mainly on documentation and visualization of historical buildings and objects. Our opinion is that in the field of virtual reality there is great potential and extensive possibilities. 3D models of historical objects, primarily created by photogrammetric IBRM technology (image based modelling and rendering) or by laser scanning, gain a completely different perspective in VR. In general, most of the newly designed buildings are now being implemented into BIM. -

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces

TECHNOLOGY AND CODE, published: 04 June 2021, doi: 10.3389/frvir.2021.684498

Off-The-Shelf Stylus: Using XR Devices for Handwriting and Sketching on Physically Aligned Virtual Surfaces. Florian Kern*, Peter Kullmann, Elisabeth Ganal, Kristof Korwisi, René Stingl, Florian Niebling and Marc Erich Latoschik. Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany

Edited by: Daniel Zielasko, University of Trier, Germany. Reviewed by: Wolfgang Stuerzlinger, Simon Fraser University, Canada; Thammathip Piumsomboon, University of Canterbury, New Zealand. *Correspondence: Florian Kern

This article introduces the Off-The-Shelf Stylus (OTSS), a framework for 2D interaction (in 3D) as well as for handwriting and sketching with digital pen, ink, and paper on physically aligned virtual surfaces in Virtual, Augmented, and Mixed Reality (VR, AR, MR: XR for short). OTSS supports self-made XR styluses based on consumer-grade six-degrees-of-freedom XR controllers and commercially available styluses. The framework provides separate modules for three basic but vital features: 1) The stylus module provides stylus construction and calibration features. 2) The surface module provides surface calibration and visual feedback features for virtual-physical 2D surface alignment using our so-called 3ViSuAl procedure, and surface interaction features. 3) The evaluation suite provides a comprehensive test bed combining technical measurements for precision, accuracy, and latency with extensive usability evaluations including handwriting and sketching tasks based on established visuomotor, graphomotor, and handwriting research. The framework’s development is accompanied by an extensive open source reference implementation targeting the Unity game engine using an Oculus Rift S headset and Oculus Touch controllers. The development compares three low-cost and low-tech options to equip controllers with a tip and includes a web browser-based surface providing support for interacting, handwriting, and sketching.

UCLA Electronic Theses and Dissertations

UCLA UCLA Electronic Theses and Dissertations Title Transcoded Identities: Identification in Games and Play Permalink https://escholarship.org/uc/item/0394m0xb Author Juliano, Linzi Publication Date 2015 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA Los Angeles Transcoded Identities: Identification in Games and Play A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Theater and Performance Studies By Linzi Michel Juliano 2015 © Copyright by Linzi Michel Juliano 2015 ABSTRACT OF THE DISSERTATION Transcoded Identities: Identification in Games and Play By Linzi Michel Juliano Doctor of Philosophy in Theater and Performance Studies University of California, Los Angeles, 2015 Professor Sue-Ellen Case, Chair This work foregrounds how technologies create and emerge from sociocultural, economic and political discourses. My use of transcode, a term introduced by the semiotician A.J. Greimas and carried into the digital realm by Lev Manovich, refers to how cultural elements such as assumptions, biases, priorities emerge within programming code and software. It demonstrates how cultural norms persist across different mediums and posits that, in many ways, the capacity to be flexible defines cultural ideologies. At the software level, programming languages work like performative speech: grammar which produces effects. When cast as speech, coming from a body (or bodies) instead of hardware, information structures can be perceived as acting within regimes of corporeality; when cast as software, information structures demonstrate and advertise the capabilities of hardware. Although often aligned with veracity and stability in its proximity to (computer) science, software is not culturally neutral. -

PL 53N4 Julyaug14.Pdf

Every Child Ready to Read®, Second Edition materials are based on research from the PLA/ALSC Early Literacy Initiative, a project of the Association for Library Service to Children (ALSC) and the Public Library Association (PLA), divisions of the American Library Association.

Feature Articles
24 Simple Steps to Starting a Seed Library, by Emily Weak. Outlines Mountain View (Calif.) Library’s process of beginning their seed library, from simple plant exchange to a robust deployment of community resources.
27 YA Spaces and the End of Postural Tyranny, by Anthony Bernier & Mike Males. Studies the importance of accommodating YA-specific spatial needs in existing library spaces.
37 Time to Rethink Readers’ Advisory Education? by Bill Crowley. Examines how readers’ advisory theory and practice may produce a service philosophy out of sync with contemporary culture.

Departments
3 PLA News, Kathleen Hughes
5 President’s Message, Larry P. Neal
10 Tales from the Front, Mary Rzepczynski
12 Perspectives, James LaRue
18 The Wired Library, R. Toby Greenwalt
20 Fundraising Beyond Book Sales, Liz Boyd
22 Forward Thinking, John Spears
44 By the Book, Catherine Hakala-Ausperk
46 New Product News, Heather Teysko & Tanya Novak
48 Under the Radar, Kaite Mediatore Stover & Jessica Moyer

Extras
2 Editor’s Note
7 Verso—Public Libraries: How to Save Them
9 Advertiser Index

Cover illustration by Jim Lange Design (jimlangedesign@sbcglobal.net)

EDITORIAL
EDITOR: Kathleen M. Hughes
CONTRIBUTING EDITORS: Liz Boyd, R. Toby Greenwalt, Catherine Hakala-Ausperk, Nanci Milone Hill, Kevin King, James LaRue, Jessica Moyer, Tanya Novak, Mary Rzepczynski, John Spears, Kaite Mediatore Stover, Heather Teysko
ADVISORY COMMITTEE: Joanne King, Queens (N.Y.) Library (Chair); Loida A. ...

Editor’s Note, by Kathleen M. Hughes, PL Editor: During the years I have worked on the magazine, I’ve been consistently amazed ...

Method for Estimating Display Lag in the Oculus Rift S and CV1

Jason Feng, Juno Kim, Wilson Luu: School of Optometry and Vision Science, UNSW Sydney, Australia. Stephen Palmisano: School of Psychology, University of Wollongong, Australia.

ABSTRACT
We validated an optical method for measuring the display lag of modern head-mounted displays (HMDs). The method used a high-speed digital camera to track landmarks rendered on a display panel of the Oculus Rift CV1 and S models. We used an Nvidia GeForce RTX 2080 graphics adapter and found that the minimum estimated baseline latency of both the Oculus CV1 and S was extremely short (~2 ms). Variability in lag was low, even when the lag was systematically inflated. Cybersickness was induced with the small baseline lag and increased as this lag was ...

... for a change in angular head orientation (i.e., motion-to-photon delay). Indeed, high display lag has been found to generate perceived scene instability and reduce presence [Kim et al. 2018] and increase cybersickness [Palmisano et al. 2017]. New systems like the Oculus Rift CV1 and S reduce display lag by invoking Asynchronous Time and Space Warp (ATW and ASW), which also significantly reduces perceived system lag by users [Freiwald et al. 2018]. Here, we devised a method based on a previous technique [Kim et al. 2015] to ascertain the best approach to estimating display lag and its effects on cybersickness in modern VR HMDs.

Platform Overview. December 2020 Contents

Platform Overview. December 2020

Contents
Contents 2
Introduction 3
Glue overview 4
  Glue Teams 4
    Authentication 4
  Glue Team Spaces 5
    Spatial audio 5
    3D avatars 5
    Objects 5
    Persistence

Remote Indoor Construction Progress Monitoring Using Extended Reality

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 23 November 2020 doi:10.20944/preprints202011.0585.v1 Article Remote Indoor Construction Progress Monitoring Using Extended Reality Ahmed Khairadeen Ali 1,2 , One Jae Lee 2, Doyeop Lee 1 and Chansik Park1,* 1 School of Architecture and Building Sciences, Chung-Ang University, Seoul 06974, Republic of Korea 2 Haenglim Architecture and Engineering Company, Republic of Korea; [email protected] * School of Architecture and Building Sciences, Chung-Ang University, Seoul 06974, Republic of Korea: [email protected] Abstract: Despite recent developments in monitoring and visualizing construction progress, the data exchange between construction jobsite and office lacks automation and real-time recording. To address this issue, a near real-time construction work inspection system called iVR is proposed; this system integrates 3D scanning, extended reality, and visual programming to visualize interactive onsite inspection for indoor activities and provide numeric data. iVR comprises five modules: iVR-location finder (finding laser scanner located in the construction site) iVR-scan (capture point cloud data of jobsite indoor activity), iVR-prepare (processes and convert 3D scan data into a 3D model), iVR-inspect (conduct immersive visual reality inspection in construction office), and iVR-feedback (visualize inspection feedback from jobsite using augmented reality). An experimental lab test is conducted to validate the applicability of iVR process; it successfully exchanges required information between construction jobsite and office in a specific time. This system is expected to assist Engineers and workers in quality assessment, progress assessments, and decision-making which can realize a productive and practical communication platform, unlike conventional monitoring or data capturing, processing, and storage methods, which involve storage, compatibility, and time-consumption issues. -

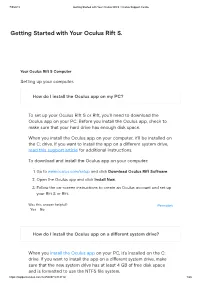

Getting Started with Your Oculus Rift S

7/5/2019 Getting Started with Your Oculus Rift S | Oculus Support Centre
https://support.oculus.com/1225089714318112/

Getting Started with Your Oculus Rift S. Your Oculus Rift S Computer. Setting up your computer.

How do I install the Oculus app on my PC?
To set up your Oculus Rift S or Rift, you'll need to download the Oculus app on your PC. Before you install the Oculus app, check to make sure that your hard drive has enough disk space. When you install the Oculus app on your computer, it'll be installed on the C: drive. If you want to install the app on a different system drive, read this support article for additional instructions.
To download and install the Oculus app on your computer:
1. Go to www.oculus.com/setup and click Download Oculus Rift Software.
2. Open the Oculus app and click Install Now.
3. Follow the on-screen instructions to create an Oculus account and set up your Rift S or Rift.

How do I install the Oculus app on a different system drive?
When you install the Oculus app on your PC, it's installed on the C: drive. If you want to install the app on a different system drive, make sure that the new system drive has at least 4 GB of free disk space and is formatted to use the NTFS file system.
To install the Oculus app on a different system drive:
1.
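The free-space precondition mentioned above (at least 4 GB on the target drive) can be checked before installing. The following is a minimal sketch using only the Python standard library; it is not part of any Oculus tooling, the function name is hypothetical, "4 GB" is read here as 4 GiB, and the NTFS requirement is not checked.

```python
import shutil
import sys

# Assumption: "at least 4 GB" is interpreted as 4 GiB.
REQUIRED_FREE_BYTES = 4 * 1024**3


def has_enough_free_space(path, required_bytes=REQUIRED_FREE_BYTES):
    """Return True if the drive containing `path` has enough free space."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return usage.free >= required_bytes


if __name__ == "__main__":
    # Pass a drive or folder, e.g. D:\ on Windows; defaults to the current folder.
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    if has_enough_free_space(target):
        print(f"{target}: enough free space")
    else:
        print(f"{target}: less than 4 GB free")
```

The file system type still has to be confirmed separately, for example in the drive's properties dialog.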

Access to Platforms and Headset Compatibility 77Th Venice International Film Festival

Access to platforms and Headset Compatibility 77th Venice International Film Festival Accreditation Free access access Venice VR Expanded Platform on VRChat designed by VRrOOM Arrival experience √ √ Exhibition hall (44 works complete selection) √ √ 3DoF screenings in streaming √ √ 6DoF previews √ √ Garden √ Social, XR professional meetings, panels, √ talks and events Opening party and closing ceremony √ Viveport 6DoF projects (see program details) √ √ Viveport Video 3DoF projects (see program details) √ √ Oculus Store Quest only 6DoF projects (see program √ details) Oculus TV 3DoF projects (see program details) √ √ Other apps 6DoF projects (see program details) √ Access to platforms and Headset Compatibility 77th Venice International Film Festival program Details VRrOOm/ Viveport Viveport Video Oculus store Oculus TV Standalone app Standalone app VRChat Compatible device PC – PCVR Vive, Vive Pro, PC – HTC Vive Oculus Quest Oculus Quest Oculus Rift Smartphones Oculus Quest Rift, Rift S devices via key Oculus Rift, Oculus Oculus Rift S – Android Rift S, Oculus Go Desktop – Mac and IOS * Vive Cosmos and Windows Headphones needed 3DoF Full Experience Full Experience Full Experience Full Experience 4 Feet High √ √ √ Au pays du cancre mou (In the Land √ √ √ of the Flabby Schnook) OM DEVI: Sheroes Revolution √ √ √ Penggantian (Replacements) √ √ √ Recoding Entropia √ √ √ Wo Sheng Ming Zhong De 60 Miao √ √ √ (One More Minute) 1st Step – From Earth to the Moon √ √ √ Jiou Jia (Home) √ √ √ Meet Mortaza VR √ √ √ Access to platforms and Headset Compatibility -

Immersive Technologies in Education

Immersive Technologies in Education. Talking Teaching Seminar. Omar K. Matar, @MatarLab, http://www.imperial.ac.uk/matar-fluids-group/

Chem. Eng. Fluid Mechanics Teaching: Poiseuille Flow; Flow Past a Sphere; Rising Bubble; Mixing. https://www.youtube.com/watch?v=ccb0Lth1k2g

CRI Program for Medical Students
• Collaboration: Digital Learning Hub
• Objective: Assess the impact of VR in learning
• Three medical students conducted studies on 30+ subjects.
• Poster Title: Does VR increase learning gain when compared to a similar traditional learning experience?
[Image: CRI VR session in progress] [Image: figure from poster]

Molecular Dynamics
• Collaboration: Prof. Erich Muller, ChemEng
• Objective: Simulate the dynamics of molecules in VR (solid, liquid, vapor phase transition)
• Based on: Lennard-Jones potential model
VR MD Simulation

VR at Earth Sciences
• Collaboration: Mark Sutton, Royal School of Mines
• Objective: Assess the impact of VR in learning of Palaeobiology and Earth Science.
• Cases: VR Miller Indices, VR Field Work, VR Showcase

Cognitive Education and Training Framework using Virtual Reality
• Collaboration: Shell (under discussion)
• Objective: Determine whether VR can be effective for safety training along with or in lieu of conventional methods.
• Hypothesis: VR-based training would be feasible and effective, in terms of human learning and recall in identifying and assessing safety risks, and would be equivalent to training using conventional methods.
• Proposal: A VR training platform that can measure and non-invasively track cognitive engagement and load using a Brain Computing Interface (BCI), and can adapt and evaluate the training process.
[Image: proposed platform figure]
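The Molecular Dynamics slide names the Lennard-Jones potential as the basis of the simulation. As a point of reference, the standard 12-6 form is V(r) = 4ε[(σ/r)^12 − (σ/r)^6]. The sketch below evaluates it in reduced units; the function name and default parameters are illustrative, and nothing here is taken from the seminar's actual VR implementation.

```python
def lennard_jones(r, epsilon=1.0, sigma=1.0):
    """Standard 12-6 Lennard-Jones pair potential.

    r: separation between two particles (must be positive)
    epsilon: depth of the potential well
    sigma: separation at which the potential crosses zero
    """
    if r <= 0:
        raise ValueError("separation r must be positive")
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


if __name__ == "__main__":
    r_min = 2 ** (1 / 6)  # location of the minimum, in units of sigma
    for r in (0.95, 1.0, r_min, 1.5, 2.5):
        print(f"r = {r:.3f}  V = {lennard_jones(r):+.4f}")
```

The potential is strongly repulsive at short range, crosses zero at r = σ, and reaches its minimum of −ε at r = 2^(1/6) σ, which is what lets a single pair model reproduce solid, liquid, and vapor behaviour as temperature and density change.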