Symposium on Telescope Science

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Annualreport2005 Web.Pdf

Vision Statement: The Space Science Institute is a thriving center of talented, entrepreneurial scientists, educators, and other professionals who make outstanding contributions to humankind’s understanding and appreciation of planet Earth, the Solar System, the galaxy, and beyond. Space Science Institute | Annual Report 2005. From Our Director: Excite. Explore. Discover. These words aptly describe what we do in the research realm as well as in education. In fact, they define the essence of our mission. Our mission is facilitated by a unique blend of on- and off-site researchers coupled with an extensive portfolio of education and public outreach (EPO) projects. This past year has seen SSI grow from $4.1M to over $4.3M in grants, an increase of nearly 6%. We now have over fifty full and part-time staff. SSI’s support comes mostly from NASA and the National Science Foundation. Our Board of Directors now numbers eight. Their guidance and vision, along with that of senior management, have created an environment that continues to draw world-class scientists to the Institute and allows us to develop education and outreach programs that benefit millions of people worldwide. SSI has a robust scientific research program that includes robotic missions such as the Mars Exploration Rovers, flight missions such as Cassini and the Spitzer Space Telescope, Hubble Space Telescope (HST), and ground-based programs. Dr. Tom McCord joined the Institute in 2005 as a Senior Research Scientist. He directs the Bear Fight Center, a 3,000 square-foot research and meeting facility in Washington state.

The Minor Planet Bulletin

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 36, NUMBER 3, A.D. 2009 JULY-SEPTEMBER, page 77. PHOTOMETRIC MEASUREMENTS OF 343 OSTARA AND OTHER ASTEROIDS AT HOBBS OBSERVATORY. Lyle Ford, George Stecher, Kayla Lorenzen, and Cole Cook, Department of Physics and Astronomy, University of Wisconsin-Eau Claire, Eau Claire, WI 54702-4004, [email protected] (Received: 2009 Feb 11). We observed 343 Ostara on 2008 October 4 and obtained R and V standard magnitudes. The period was found to be significantly greater than the previously reported value of 6.42 hours. Measurements of 2660 Wasserman and (17010) 1999 CQ72 made on 2008 March 25 are also reported. We made R band and V band photometric measurements of 343 Ostara on 2008 October 4 using the 0.6 m “Air Force” Telescope located at Hobbs Observatory (MPC code 750) near Fall Creek, Wisconsin. Our data can be obtained from http://www.uwec.edu/physics/asteroid/. Acknowledgements: We thank the Theodore Dunham Fund for Astrophysics, the National Science Foundation (award number 0519006), the University of Wisconsin-Eau Claire Office of Research and Sponsored Programs, and the University of Wisconsin-Eau Claire Blugold Fellow and McNair programs for financial support. References: Binzel, R.P. (1987). “A Photoelectric Survey of 130 Asteroids”, Icarus 72, 135-208. Stecher, G.J., Ford, L.A., and Elbert, J.D. (1999). “Equipping a 0.6 Meter Alt-Azimuth Telescope for Photometry”, IAPPP Comm, 76, 68-74. Warner, B.D. (2006). A Practical Guide to Lightcurve Photometry and Analysis. Springer, New York, NY.

Galactic Observer, John J

Galactic Observer, John J. McCarthy Observatory, Volume 5, No. 2, February 2012. Belly of the Beast: At the center of the Milky Way galaxy, a gas cloud is on a perilous journey into a supermassive black hole. As the cloud stretches and accelerates, it gives away the location of its silent predator. For more information, see page 9 inside, or go to http://www.nasa.gov/centers/goddard/news/topstory/2008/blackhole_slumber.html. Credit: NASA/CXC/MIT/Frederick K. Baganoff et al. The John J. McCarthy Observatory, New Milford High School, 388 Danbury Road, New Milford, CT 06776. Phone/Voice: (860) 210-4117. Phone/Fax: (860) 354-1595. www.mccarthyobservatory.org. Galactic Observer Editorial Committee: Managing Editor: Bill Cloutier. Production & Design: Allan Ostergren. Website Development: John Gebauer, Marc Polansky, Josh Reynolds. Technical Support: Bob Lambert, Dr. Parker Moreland. JJMO Staff: It is through their efforts that the McCarthy Observatory has established itself as a significant educational and recreational resource within the western Connecticut community. Steve Barone, Colin Campbell, Dennis Cartolano, Mike Chiarella, Jeff Chodak, Bill Cloutier, Charles Copple, Randy Fender, John Gebauer, Elaine Green, Tina Hartzell, Tom Heydenburg, Phil Imbrogno, Bob Lambert, Dr. Parker Moreland, Allan Ostergren, Cecilia Page, Joe Privitera, Bruno Ranchy, Josh Reynolds, Barbara Richards, Monty Robson, Don Ross, Ned Sheehey, Gene Schilling, Diana Shervinskie, Katie Shusdock, Jon Wallace, Bob Willaum, Paul Woodell, Amy Ziffer. In This Issue: THE YEAR OF THE SOLAR SYSTEM; OUT THE WINDOW ON YOUR LEFT; FRA MAURA; SUNRISE AND SUNSET; ASTRONOMICAL AND HISTORICAL EVENTS; REFERENCES ON DISTANCES

Comet Section Observing Guide

Comet Section Observing Guide. The British Astronomical Association Comet Section, www.britastro.org/comet. BAA Comet Section Observing Guide. Front cover image: C/1995 O1 (Hale-Bopp) by Geoffrey Johnstone on 1997 April 10. Back cover image: C/2011 W3 (Lovejoy) by Lester Barnes on 2011 December 23. © The British Astronomical Association 2018. 2018 December (rev 4). CONTENTS: 1 Foreword; 2 An introduction to comets; 2.1 Anatomy and origins; 2.2 Naming; 2.3 Comet orbits; 2.4 Orbit evolution; 2.5 Magnitudes; 3 Basic visual observation

Asteroid Regolith Weathering: a Large-Scale Observational Investigation

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange Doctoral Dissertations Graduate School 5-2019 Asteroid Regolith Weathering: A Large-Scale Observational Investigation Eric Michael MacLennan University of Tennessee, [email protected] Follow this and additional works at: https://trace.tennessee.edu/utk_graddiss Recommended Citation MacLennan, Eric Michael, "Asteroid Regolith Weathering: A Large-Scale Observational Investigation. " PhD diss., University of Tennessee, 2019. https://trace.tennessee.edu/utk_graddiss/5467 This Dissertation is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. To the Graduate Council: I am submitting herewith a dissertation written by Eric Michael MacLennan entitled "Asteroid Regolith Weathering: A Large-Scale Observational Investigation." I have examined the final electronic copy of this dissertation for form and content and recommend that it be accepted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, with a major in Geology. Joshua P. Emery, Major Professor We have read this dissertation and recommend its acceptance: Jeffrey E. Moersch, Harry Y. McSween Jr., Liem T. Tran Accepted for the Council: Dixie L. Thompson Vice Provost and Dean of the Graduate School (Original signatures are on file with official student records.) Asteroid Regolith Weathering: A Large-Scale Observational Investigation A Dissertation Presented for the Doctor of Philosophy Degree The University of Tennessee, Knoxville Eric Michael MacLennan May 2019 © by Eric Michael MacLennan, 2019 All Rights Reserved.

Taxonomic Classification of Asteroids (Clasificación Taxonómica de Asteroides)

Taxonomic Classification of Near-Earth Asteroids (Clasificación Taxonómica de Asteroides Cercanos a la Tierra), by Ana Victoria Ojeda Vera. Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Space Science and Technology at the Instituto Nacional de Astrofísica, Óptica y Electrónica. August 2019, Tonantzintla, Puebla. Supervised by: Dr. José Ramón Valdés Parra, Senior Researcher, INAOE, and Dr. José Silviano Guichard Romero, Senior Researcher, INAOE. © INAOE 2019. The author grants INAOE permission to reproduce and distribute copies of this thesis in whole or in part. Dedication: To my family, with great affection. To my nephews Ian and Nahil, and to my little Lia. Acknowledgments: Thank you to my family for their unconditional support. To my mom Tere, for teaching me to be persevering and dedicated, and for her thousands of signs of affection. To my sister Fernanda, for giving me the time, advice, and affection I needed. To my partner Odi, for his love and affection these three years, for his support, patience, and many hours of help during the master's program, but above all for giving me the best gift in the world, our little Lia. Thank you to my advisors Dr. José R. Valdés and Dr. José S. Guichard, promoters of this thesis, for their patience, advice, and supervision, and for teaching me everything related to this work through their fun and motivating classes. To the committee members, Dr. Raquel Díaz, Dr. Raúl Mújica, and Dr. Sergio Camacho, for taking the time to review and evaluate my work. I am very grateful to all of them for their constructive criticism and suggestions.

Aqueous Alteration on Main Belt Primitive Asteroids: Results from Visible Spectroscopy

Aqueous alteration on main belt primitive asteroids: results from visible spectroscopy. S. Fornasier (1,2), C. Lantz (1,2), M.A. Barucci (1), M. Lazzarin (3). (1) LESIA, Observatoire de Paris, CNRS, UPMC Univ Paris 06, Univ. Paris Diderot, 5 Place J. Janssen, 92195 Meudon Principal Cedex, France; (2) Univ. Paris Diderot, Sorbonne Paris Cité, 4 rue Elsa Morante, 75205 Paris Cedex 13; (3) Department of Physics and Astronomy of the University of Padova, Via Marzolo 8, 35131 Padova, Italy. Submitted to Icarus: November 2013, accepted on 28 January 2014. e-mail: [email protected]; fax: +33145077144; phone: +33145077746. Manuscript pages: 38; Figures: 13; Tables: 5. Running head: Aqueous alteration on primitive asteroids. Send correspondence to: Sonia Fornasier, LESIA-Observatoire de Paris, Batiment 17, 5, Place Jules Janssen, 92195 Meudon Cedex, France. arXiv:1402.0175v1 [astro-ph.EP] 2 Feb 2014. Title footnote: Based on observations carried out at the European Southern Observatory (ESO), La Silla, Chile, ESO proposals 062.S-0173 and 064.S-0205 (PI M. Lazzarin). Preprint submitted to Elsevier September 27, 2018. Abstract: This work focuses on the study of the aqueous alteration process which acted in the main belt and produced hydrated minerals on the altered asteroids. Hydrated minerals have been found mainly on the surface of Mars, on main belt primitive asteroids, and possibly also on a few TNOs. These materials have been produced by hydration of pristine anhydrous silicates during the aqueous alteration process, which, to be active, needed the presence of liquid water under low temperature conditions (below 320 K) to chemically alter the minerals.

Creation and Application of Routines for Determining Physical Properties of Asteroids and Exoplanets from Low Signal-To-Noise Data Sets

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2014 Creation and Application of Routines for Determining Physical Properties of Asteroids and Exoplanets from Low Signal-To-Noise Data Sets Nathaniel Lust University of Central Florida Part of the Astrophysics and Astronomy Commons, and the Physics Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Lust, Nathaniel, "Creation and Application of Routines for Determining Physical Properties of Asteroids and Exoplanets from Low Signal-To-Noise Data Sets" (2014). Electronic Theses and Dissertations, 2004-2019. 4635. https://stars.library.ucf.edu/etd/4635 CREATION AND APPLICATION OF ROUTINES FOR DETERMINING PHYSICAL PROPERTIES OF ASTEROIDS AND EXOPLANETS FROM LOW SIGNAL-TO-NOISE DATA-SETS by NATE B LUST B.S. University of Central Florida, 2007 A dissertation submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy in Physics in the Department of Physics in the College of Sciences at the University of Central Florida Orlando, Florida Fall Term 2014 Major Professor: Daniel Britt © 2014 Nate B Lust ABSTRACT Astronomy is a data heavy field driven by observations of remote sources reflecting or emitting light. These signals are transient in nature, which makes it very important to fully utilize every observation.

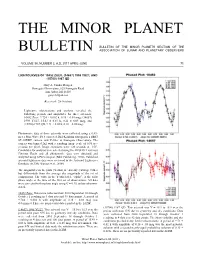

The Minor Planet Bulletin

THE MINOR PLANET BULLETIN: BULLETIN OF THE MINOR PLANETS SECTION OF THE ASSOCIATION OF LUNAR AND PLANETARY OBSERVERS. VOLUME 38, NUMBER 2, A.D. 2011 APRIL-JUNE, page 71. LIGHTCURVES OF 10452 ZUEV, (14657) 1998 YU27, AND (15700) 1987 QD. Gary A. Vander Haagen, Stonegate Observatory, 825 Stonegate Road, Ann Arbor, MI 48103, [email protected] (Received: 28 October). Lightcurve observations and analysis revealed the following periods and amplitudes for three asteroids: 10452 Zuev, 9.724 ± 0.002 h, 0.38 ± 0.03 mag; (14657) 1998 YU27, 15.43 ± 0.03 h, 0.21 ± 0.05 mag; and (15700) 1987 QD, 9.71 ± 0.02 h, 0.16 ± 0.05 mag. Photometric data of three asteroids were collected using a 0.43-meter PlaneWave f/6.8 corrected Dall-Kirkham astrograph, an SBIG ST-10XME camera, and a V filter at Stonegate Observatory. The camera was binned 2x2 with a resulting image scale of 0.95 arc-seconds per pixel. Image exposures were 120 seconds at -15 °C. Candidates for analysis were selected using the MPO2011 Asteroid Viewing Guide and all photometric data were obtained and analyzed using MPO Canopus (Bdw Publishing, 2010). Published asteroid lightcurve data were reviewed in the Asteroid Lightcurve Database (LCDB; Warner et al., 2009). The magnitudes in the plots (Y-axis) are not sky (catalog) values but differentials from the average sky magnitude of the set of comparisons. The value in the Y-axis label, “alpha”, is the solar phase angle at the time of the first set of observations. All data were corrected to this phase angle using G = 0.15, unless otherwise stated.
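
The phase-angle correction mentioned at the end of this excerpt can be illustrated with a minimal sketch. It assumes the standard two-parameter H-G phase function in its commonly used exponential approximation; the excerpt does not say which formula MPO Canopus applies, so the function names and the exact form below are illustrative assumptions, with only G = 0.15 taken from the text.

```python
import math


def hg_phase_term(alpha_deg, g=0.15):
    """Magnitude dimming at solar phase angle alpha (degrees), H-G approximation.

    Assumed form: -2.5 * log10((1 - G) * phi1 + G * phi2), with the usual
    exponential approximations for phi1 and phi2. Returns 0 at alpha = 0.
    """
    t = math.tan(math.radians(alpha_deg) / 2.0)
    phi1 = math.exp(-3.33 * t ** 0.63)
    phi2 = math.exp(-1.87 * t ** 1.22)
    return -2.5 * math.log10((1.0 - g) * phi1 + g * phi2)


def correct_to_phase(mag, alpha_obs_deg, alpha_ref_deg, g=0.15):
    """Shift a magnitude observed at alpha_obs to the reference phase angle.

    Hypothetical helper: removes the dimming at the observed phase angle and
    applies the dimming expected at the reference phase angle.
    """
    return mag - hg_phase_term(alpha_obs_deg, g) + hg_phase_term(alpha_ref_deg, g)


if __name__ == "__main__":
    # Example values are made up for illustration, not taken from the paper.
    m = correct_to_phase(15.20, alpha_obs_deg=12.0, alpha_ref_deg=10.0)
    print(f"magnitude referred to alpha = 10 deg: {m:.3f}")
```

With a scheme like this, observations from different nights share one reference phase angle, so their differential magnitudes can be combined into a single lightcurve.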

RASNZ Occultation Section Circular CN2009/1 April 2013 NOTICES

ISSN 1176-5038 (Print), ISSN 2324-1853 (Online). RASNZ OCCULTATION SECTION CIRCULAR CN2009/1, April 2013. Lunar limb profile produced by Dave Herald's Occult program showing 63 events for the lunar graze of a bright, multiple star ZC2349 (aka Al Niyat, sigma Scorpii) observed on 31 July 2009 by two teams of observers from Wellington and Christchurch. The lunar profile is drawn using data from the Kaguya lunar surveyor, which became available after this event. The path the star followed across the lunar landscape is shown for one set of observers (Murray Forbes and Frank Andrews) by the trail of white circles. There are several instances where a stepped event was seen, due to the two brightest components disappearing or reappearing. See page 61 for more details. Visit the Occultation Section website at http://www.occultations.org.nz/. Newsletter of the Occultation Section of the Royal Astronomical Society of New Zealand. Table of Contents: From the Director; Notices; Seventh Trans-Tasman Symposium on Occultations; Important Notice re Report File Naming; Observing Occultations using Video: A Beginner's Guide

A Study of Asteroid Pole-Latitude Distribution Based on an Extended

Astronomy & Astrophysics manuscript no. aa_2009, © ESO 2018, August 22, 2018. A study of asteroid pole-latitude distribution based on an extended set of shape models derived by the lightcurve inversion method. J. Hanuš (1,*), J. Ďurech (1), M. Brož (1), B. D. Warner (2), F. Pilcher (3), R. Stephens (4), J. Oey (5), L. Bernasconi (6), S. Casulli (7), R. Behrend (8), D. Polishook (9), T. Henych (10), M. Lehký (11), F. Yoshida (12), and T. Ito (12). (1) Astronomical Institute, Faculty of Mathematics and Physics, Charles University in Prague, V Holešovičkách 2, 18000 Prague, Czech Republic, (*) e-mail: [email protected]; (2) Palmer Divide Observatory, 17995 Bakers Farm Rd., Colorado Springs, CO 80908, USA; (3) 4438 Organ Mesa Loop, Las Cruces, NM 88011, USA; (4) Goat Mountain Astronomical Research Station, 11355 Mount Johnson Court, Rancho Cucamonga, CA 91737, USA; (5) Kingsgrove, NSW, Australia; (6) Observatoire des Engarouines, 84570 Mallemort-du-Comtat, France; (7) Via M. Rosa, 1, 00012 Colleverde di Guidonia, Rome, Italy; (8) Geneva Observatory, CH-1290 Sauverny, Switzerland; (9) Benoziyo Center for Astrophysics, The Weizmann Institute of Science, Rehovot 76100, Israel; (10) Astronomical Institute, Academy of Sciences of the Czech Republic, Fričova 1, CZ-25165 Ondřejov, Czech Republic; (11) Severni 765, CZ-50003 Hradec Kralove, Czech Republic; (12) National Astronomical Observatory, Osawa 2-21-1, Mitaka, Tokyo 181-8588, Japan. Received 17-02-2011 / Accepted 13-04-2011. ABSTRACT. Context. In the past decade, more than one hundred asteroid models were derived using the lightcurve inversion method. Measured by the number of derived models, lightcurve inversion has become the leading method for asteroid shape determination.

Spin States of Asteroids in the Eos Collisional Family

Spin states of asteroids in the Eos collisional family. J. Hanuš (a,*), M. Delbo’ (b), V. Alí-Lagoa (c), B. Bolin (b), R. Jedicke (d), J. Ďurech (a), H. Cibulková (a), P. Pravec (e), P. Kušnirák (e), R. Behrend (f), F. Marchis (g), P. Antonini (h), L. Arnold (i), M. Audejean (j), M. Bachschmidt (i), L. Bernasconi (k), L. Brunetto (l), S. Casulli (m), R. Dymock (n), N. Esseiva (o), M. Esteban (p), O. Gerteis (i), H. de Groot (q), H. Gully (i), H. Hamanowa (r), H. Hamanowa (r), P. Krafft (i), M. Lehký (a), F. Manzini (s), J. Michelet (t), E. Morelle (u), J. Oey (v), F. Pilcher (w), F. Reignier (x), R. Roy (y), P.A. Salom (p), B.D. Warner (z). (a) Astronomical Institute, Faculty of Mathematics and Physics, Charles University, V Holešovičkách 2, 18000 Prague, Czech Republic; (b) Université Côte d’Azur, OCA, CNRS, Lagrange, France; (c) Max-Planck-Institut für extraterrestrische Physik, Giessenbachstraße, Postfach 1312, 85741 Garching, Germany; (d) Institute for Astronomy, University of Hawaii at Manoa, Honolulu, HI 96822, USA; (e) Astronomical Institute, Academy of Sciences of the Czech Republic, Fričova 1, CZ-25165 Ondřejov, Czech Republic; (f) Geneva Observatory, CH-1290 Sauverny, Switzerland; (g) SETI Institute, Carl Sagan Center, 189 Bernado Avenue, Mountain View CA 94043, USA; (h) Observatoire des Hauts Patys, F-84410 Bédoin, France; (i) Aix Marseille Université, CNRS, OHP (Observatoire de Haute Provence), Institut Pythéas (UMS 3470) 04870 Saint-Michel-l’Observatoire, France; (j) Observatoire de Chinon, Mairie de Chinon, 37500 Chinon, France; (k) Observatoire des Engarouines, 1606 chemin de Rigoy, F-84570 Malemort-du-Comtat, France; (l) Le Florian, Villa 4, 880 chemin de Ribac-Estagnol,