Understanding Bias in Facial Recognition Technologies: An Explainer

Total Page:16

Recommended publications

Synthesizing Images of Humans in Unseen Poses

Guha Balakrishnan (MIT), Amy Zhao (MIT), Adrian V. Dalca (MIT and MGH), Fredo Durand (MIT), John Guttag (MIT)

Abstract: We address the computational problem of novel human pose synthesis. Given an image of a person and a desired pose, we produce a depiction of that person in that pose, retaining the appearance of both the person and background. We present a modular generative neural network that synthesizes unseen poses using training pairs of images and poses taken from human action videos. Our network separates a scene into different body part and background layers, moves body parts to new locations and refines their appearances, and composites the new foreground with a hole-filled background. These subtasks, implemented with separate modules, are trained jointly using only a single target image as a supervised label. We use an adversarial discriminator to force our network to synthesize realistic details conditioned on pose. We demonstrate image synthesis results on three action classes: golf, yoga/workouts ...

Figure 1 (panels: Source Image, Target Pose, Synthesized Image). Our method takes an input image along with a desired target pose, and automatically synthesizes a new image depicting the person in that pose. We retain the person’s appearance as well as filling in appropriate background textures.

... part details consistent with the new pose. Differences in poses can cause complex changes in the image space, involving several moving parts and self-occlusions. Subtle details such as shading and edges should perceptually agree with the body’s configuration.

The Meanings of Marimba Music in Rural Guatemala

Sergio J. Navarrete Pellicer. PhD Thesis in Social Anthropology, University College London, University of London, October 1999. ProQuest Number: U643819. Published by ProQuest LLC (2016). Copyright of the Dissertation is held by the Author.

Abstract: This thesis investigates the social and ideological process of the marimba musical tradition in rural Guatemalan society. A basic assumption of the thesis is that “making music” and “talking about music” are forms of communication whose meanings arise from the social and cultural context in which they occur. From this point of view, the main aim of this investigation is the analysis of the roles played by music within society and the construction of its significance as part of the social and cultural process of adaptation, continuity and change of Achi society. For instance, the thesis elucidates how the dynamic of continuity and change affects the transmission of a musical tradition. The influence of the radio and its popular music on the teaching methods, music genres and styles of marimba music is part of a changing Indian society; nevertheless, marimba music remains an important symbol of locality and ethnic identity.

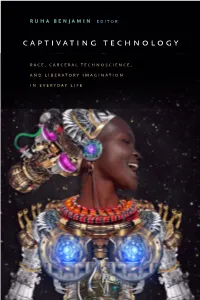

Captivating Technology

Captivating Technology: Race, Carceral Technoscience, and Liberatory Imagination in Everyday Life. Ruha Benjamin, editor. Duke University Press, Durham and London, 2019.

© 2019 Duke University Press. All rights reserved. Printed in the United States of America on acid-free paper ∞. Designed by Kim Bryant. Typeset in Merope and Scala Sans by Westchester Publishing Services.

Library of Congress Cataloging-in-Publication Data. Names: Benjamin, Ruha, editor. Title: Captivating technology : race, carceral technoscience, and liberatory imagination in everyday life / Ruha Benjamin, editor. Description: Durham : Duke University Press, 2019. | Includes bibliographical references and index. Identifiers: LCCN 2018042310 (print) | LCCN 2018056888 (ebook) | ISBN 9781478004493 (ebook) | ISBN 9781478003236 (hardcover : alk. paper) | ISBN 9781478003816 (pbk. : alk. paper). Subjects: LCSH: Prisons, United States. | Electronic surveillance, Social aspects, United States. | Racial profiling in law enforcement, United States. | Discrimination in criminal justice administration, United States. | African Americans, Social conditions, 21st century. | United States, Race relations, 21st century. | Privacy, Right of, United States. Classification: LCC HV9471 (ebook) | LCC HV9471 .C2825 2019 (print) | DDC 364.028/4 dc23. LC record available at https://lccn.loc.gov/2018042310

An earlier version of chapter 1, “Naturalizing Coercion,” by Britt Rusert, was published as “‘A Study of Nature’: The Tuskegee Experiments and the New South Plantation,” in Journal of Medical Humanities 30, no. 3 (summer 2009): 155–71. The author thanks Springer Nature for permission to publish an updated essay. Chapter 13, “Scratch a Theory, You Find a Biography,” the interview of Troy Duster by Alondra Nelson, originally appeared in the journal Public Culture 24, no.

How Big Data Enables Economic Harm to Consumers, Especially to Low-Income and Other Vulnerable Sectors of the Population

The author of these comments, Nathan Newman, has been writing for twenty years about the impact of technology on society, including his 2002 book Net Loss: Internet Prophets, Private Profits and the Costs to Community, based on doctoral research on rising regional economic inequality in Silicon Valley and the nation and the role of Internet policy in shaping economic opportunity. He has been writing extensively about big data as a research fellow at the New York University Information Law Institute for the last two years, including authoring two law review articles this year on the subject. These comments are adapted with some additional research from one of those articles, “The Costs of Lost Privacy: Consumer Harm and Rising Economic Inequality in the Age of Google,” published in the William Mitchell Law Review. He has a J.D. from Yale Law School and a Ph.D. from UC-Berkeley’s Sociology Department.

Executive Summary: “Big Data” platforms such as Google and Facebook are becoming dominant institutions organizing information not just about the world but about consumers themselves, thereby reshaping a range of markets based on empowering a narrow set of corporate advertisers and others to prey on consumers based on behavioral profiling. While big data can benefit consumers in certain instances, there is a range of new consumer harms to users from its unregulated use by increasingly centralized data platforms.

A. These harms start with the individual surveillance of users by employers, financial institutions, the government and other players that these platforms allow, but also extend to forms of what has been called “algorithmic profiling” that allow corporate institutions to discriminate and exploit consumers as categorical groups.

Memristor-Based Approximated Computation

Boxun Li¹, Yi Shan¹, Miao Hu², Yu Wang¹, Yiran Chen², Huazhong Yang¹. ¹Dept. of E.E., TNList, Tsinghua University, Beijing, China. ²Dept. of E.C.E., University of Pittsburgh, Pittsburgh, USA.

Abstract: The cessation of Moore’s Law has limited further improvements in power efficiency. In recent years, the physical realization of the memristor has demonstrated a promising solution to ultra-integrated hardware realization of neural networks, which can be leveraged for better performance and power efficiency gains. In this work, we introduce a power efficient framework for approximated computations by taking advantage of the memristor-based multilayer neural networks. A programmable memristor approximated computation unit (Memristor ACU) is introduced first to accelerate approximated computation and a memristor-based approximated computation framework with scalability is proposed on top of the Memristor ACU. We also introduce a parameter configuration algorithm of the Memristor ACU and a feedback state tuning circuit to pro...

... architectures, which not only provide a promising hardware solution to neuromorphic system but also help drastically close the gap of power efficiency between computing systems and the brain. The memristor is one of those promising devices. The memristor is able to support a large number of signal connections within a small footprint by taking the advantage of the ultra-integration density [7]. And most importantly, the nonvolatile feature that the state of the memristor could be tuned by the current passing through itself makes the memristor a potential, perhaps even the best, device to realize neuromorphic computing systems with picojoule level energy consumption [8], [9].

A Survey of Autonomous Driving: Common Practices and Emerging Technologies

Accepted March 22, 2020. Digital Object Identifier 10.1109/ACCESS.2020.2983149

Ekim Yurtsever¹ (Member, IEEE), Jacob Lambert¹, Alexander Carballo¹ (Member, IEEE), and Kazuya Takeda¹ ² (Senior Member, IEEE). ¹Nagoya University, Furo-cho, Nagoya, 464-8603, Japan. ²Tier4 Inc., Nagoya, Japan. Corresponding author: Ekim Yurtsever.

ABSTRACT: Automated driving systems (ADSs) promise a safe, comfortable and efficient driving experience. However, fatalities involving vehicles equipped with ADSs are on the rise. The full potential of ADSs cannot be realized unless the robustness of state-of-the-art is improved further. This paper discusses unsolved problems and surveys the technical aspect of automated driving. Studies regarding present challenges, high-level system architectures, emerging methodologies and core functions including localization, mapping, perception, planning, and human machine interfaces, were thoroughly reviewed. Furthermore, many state-of-the-art algorithms were implemented and compared on our own platform in a real-world driving setting. The paper concludes with an overview of available datasets and tools for ADS development.

INDEX TERMS: Autonomous Vehicles, Control, Robotics, Automation, Intelligent Vehicles, Intelligent Transportation Systems

I. INTRODUCTION: According to a recent technical report by the National Highway Traffic Safety Administration (NHTSA), 94% of road accidents are caused by human errors [1]. Against this backdrop, Automated Driving Systems (ADSs) are being developed with the promise of ...

... necessary here. Eureka Project PROMETHEUS [11] was carried out in Europe between 1987-1995, and it was one of the earliest major automated driving studies. The project led to the development of VITA II by Daimler-Benz, which succeeded in automatically driving on highways [12].

Vinyls-Collection.com Page 1/222 - Total: 8629 Vinyls as of 05/10/2021 - Collection "Artistes divers toutes catégorie

Collection "Artistes divers toutes catégorie. TOUT FORMATS." by yvinyl

| Artist | Title | Format | Ref | Country of pressing |
|---|---|---|---|---|
| !!! | !!! | LP | GSL39 | United States |
| 10cc | Windows In The Jungle | LP | MERL 28 | United Kingdom |
| 10cc | The Original Soundtrack | LP | 9102 500 | France |
| 10cc | Ten Out Of 10 | LP | 6359 048 | France |
| 10cc | Look Hear? | LP | 6310 507 | Germany |
| 10cc | Live And Let Live | 2LP | 6641 698 | United Kingdom |
| 10cc | How Dare You! | LP | 9102.501 | France |
| 10cc | Deceptive Bends | LP | 9102 502 | France |
| 10cc | Bloody Tourists | LP | 9102 503 | France |
| 12°5 | 12°5 | LP | BAL 13015 | France |
| 13th Floor Elevators | The Psychedelic Sounds | LP | LIKP 003 | Unknown |
| 13th Floor Elevators | Live | LP | LIKP 002 | Unknown |
| 13th Floor Elevators | Easter Everywhere | LP | IA 5 | United States |
| 18 Karat Gold | All-bumm | LP | UAS 29 559 1 | Germany |
| 20/20 | 20/20 | LP | 83898 | Netherlands |
| 20th Century Steel Band | Yellow Bird Is Dead | LP | UAS 29980 | France |
| 3 Hur-el | Hürel Arsivi | LP | 002 | Unknown |
| 38 Special | Wild Eyed Southern Boys | LP | 64835 | Netherlands |
| 38 Special | W.w. Rockin' Into The Night | LP | 64782 | Netherlands |
| 38 Special | Tour De Force | LP | SP 4971 | United States |
| 38 Special | Strength In Numbers | LP | SP 5115 | United States |
| 38 Special | Special Forces | LP | 64888 | Netherlands |
| 38 Special | Special Delivery | LP | SP-3165 | United States |
| 38 Special | Rock & Roll Strategy | LP | SP 5218 | United States |
| 45s (the) | 45s | CD | hag 009 | Unknown |
| A Cid Symphony | Ernie Fischbach And Charles Ew... | 3LP | AK 090/3 | Italy |
| A Euphonius Wail | A Euphonius Wail | LP | KS-3668 | United States |
| A Foot In Coldwater | Or All Around Us | LP | 7E-1025 | United States |
| A's (the A's) | The A's | LP | AB 4238 | United States |
| A.b. | | | | |

When Diving Deep Into Operationalizing the Ethical Development of Artificial Intelligence, One Immediately Runs Into the “Fractal Problem”

When diving deep into operationalizing the ethical development of artificial intelligence, one immediately runs into the “fractal problem”. This may also be called the “infinite onion” problem.1 That is, each problem within development that you pinpoint expands into a vast universe of new complex problems. It can be hard to make any measurable progress as you run in circles among different competing issues, but one of the paths forward is to pause at a specific point and detail what you see there. Here I list some of the complex points that I see at play in the firing of Dr. Timnit Gebru, and why it will remain forever after a really, really, really terrible decision.

The Punchline. The firing of Dr. Timnit Gebru is not okay, and the way it was done is not okay. It appears to stem from the same lack of foresight that is at the core of modern technology,2 and so itself serves as an example of the problem. The firing seems to have been fueled by the same underpinnings of racism and sexism that our AI systems, when in the wrong hands, tend to soak up. How Dr. Gebru was fired is not okay, what was said about it is not okay, and the environment leading up to it was -- and is -- not okay. Every moment where Jeff Dean and Megan Kacholia do not take responsibility for their actions is another moment where the company as a whole stands by silently as if to intentionally send the horrifying message that Dr. Gebru deserves to be treated this way.

Bias and Fairness in NLP

Margaret Mitchell (Google Brain), Kai-Wei Chang (UCLA), Vicente Ordóñez Román (University of Virginia), Vinodkumar Prabhakaran (Google Brain)

Tutorial Outline
● Part 1: Cognitive Biases / Data Biases / Bias laundering
● Part 2: Bias in NLP and Mitigation Approaches
● Part 3: Building Fair and Robust Representations for Vision and Language
● Part 4: Conclusion and Discussion

“Bias Laundering”: Cognitive Biases, Data Biases, and ML
Vinodkumar Prabhakaran (Google Brain), Margaret Mitchell (Google Brain)
Andrew Zaldivar, Emily Denton, Simone Wu, Parker Barnes, Lucy Vasserman, Ben Hutchinson, Elena Spitzer, Deb Raji, Timnit Gebru, Adrian Benton, Brian Zhang, Dirk Hovy, Josh Lovejoy, Alex Beutel, Blake Lemoine, Hee Jung Ryu, Hartwig Adam, Blaise Agüera y Arcas

What’s in this tutorial
● Motivation for Fairness research in NLP
● How and why NLP models may be unfair
● Various types of NLP fairness issues and mitigation approaches
● What can/should we do?

What’s NOT in this tutorial
● Definitive answers to fairness/ethical questions
● Prescriptive solutions to fix ML/NLP (un)fairness

What do you see?
● Bananas
● Stickers
● Dole Bananas
● Bananas at a store
● Bananas on shelves
● Bunches of bananas

Issues, Challenges, and Solutions: Big Data Mining

Jaseena K.U. and Julie M. David, Department of Computer Applications, M.E.S College, Marampally, Aluva, Cochin, India

ABSTRACT: Data has become an indispensable part of every economy, industry, organization, business function and individual. Big Data is a term used to identify datasets whose size is beyond the ability of typical database software tools to store, manage and analyze. Big Data introduces unique computational and statistical challenges, including scalability and storage bottlenecks, noise accumulation, spurious correlation and measurement errors. These challenges are distinctive and require a new computational and statistical paradigm. This paper presents a literature review of Big Data mining and its issues and challenges, with emphasis on the distinguishing features of Big Data. It also discusses some methods to deal with big data.

KEYWORDS: Big data mining, Security, Hadoop, MapReduce

1. INTRODUCTION: Data is the collection of values and variables related in some sense and differing in some other sense. In recent years the sizes of databases have increased rapidly. This has led to a growing interest in the development of tools capable of the automatic extraction of knowledge from data [1]. Data are collected and analyzed to create information suitable for making decisions. Hence data provide a rich resource for knowledge discovery and decision support. A database is an organized collection of data so that it can easily be accessed, managed, and updated. Data mining is the process of discovering interesting knowledge such as associations, patterns, changes, anomalies and significant structures from large amounts of data stored in databases, data warehouses or other information repositories.

CSE 152: Computer Vision Manmohan Chandraker

Lecture 15: Optimization in CNNs

Recap
• Engineered against learned features: convolutional filters are trained in a supervised manner by back-propagating classification error. [Figure: an engineered pipeline (Image, Feature extraction, Pooling, Classifier, Label) beside a learned one (Image, stacked Convolution + pool layers, Dense layers, Label). Credit: Jia-Bin Huang and Derek Hoiem, UIUC]
• Two-layer perceptron network (slide credit: Pieter Abbeel and Dan Klein)
• Neural networks: non-linearity, activation functions, multi-layer neural network
• From fully connected to convolutional networks: convolutional layer (slide: Lazebnik)
• Spatial filtering is convolution

Convolutional Neural Networks (slides credit: Efstratios Gavves)
• 2D spatial filters
• Filters over the whole image
• Weight sharing
• Insight: images have similar features at various spatial locations!

Key operations in a CNN (source: R. Fergus, Y. LeCun; slide: Lazebnik)
• Convolution (learned), acting as a feature extractor from the input image to the input feature map
• Non-linearity: Rectified Linear Unit (ReLU)
• Spatial pooling: max
• Feature maps

Pooling operations
• Aggregate multiple values into a single value
• Invariance to small transformations
• Keep only most important information for next layer
• Reduces the size of the next layer
• Fewer parameters, faster computations
• Observe larger receptive field in next layer
• Hierarchically extract more abstract features
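
The pooling step named in the lecture excerpt above ("aggregate multiple values into a single value") can be sketched in a few lines. This is only an illustrative max-pooling routine in plain Python, not code from the course; the function name `max_pool_2d`, its `size` and `stride` parameters, and the toy feature map are assumptions made for the example.

```python
from typing import List

Matrix = List[List[float]]


def max_pool_2d(feature_map: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Slide a size x size window over the map and keep the maximum of each window."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled: Matrix = []
    for r in range(0, rows - size + 1, stride):
        out_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect the values covered by the current window.
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            out_row.append(max(window))
        pooled.append(out_row)
    return pooled


# Toy 4x4 feature map (invented values, for illustration only).
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 7, 8],
]

pooled = max_pool_2d(feature_map)
print(pooled)  # [[6, 2], [2, 9]]
```

With a 2x2 window and stride 2, the 4x4 map shrinks to 2x2, which is the "reduces the size of the next layer" effect the slide lists. Swapping values inside a single window leaves the output unchanged, a small instance of the invariance the slide mentions.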

Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification∗

Proceedings of Machine Learning Research 81:1–15, 2018. Conference on Fairness, Accountability, and Transparency.

Joy Buolamwini, MIT Media Lab, 75 Amherst St., Cambridge, MA 02139. Timnit Gebru, Microsoft Research, 641 Avenue of the Americas, New York, NY 10011. Editors: Sorelle A. Friedler and Christo Wilson.

Abstract: Recent studies demonstrate that machine learning algorithms can discriminate based on classes like race and gender. In this work, we present an approach to evaluate bias present in automated facial analysis algorithms and datasets with respect to phenotypic subgroups. Using the dermatologist approved Fitzpatrick Skin Type classification system, we characterize the gender and skin type distribution of two facial analysis benchmarks, IJB-A and Adience. We find that these datasets are overwhelmingly composed of lighter-skinned subjects (79.6% for IJB-A and 86.2% for Adience) and introduce a new facial analysis dataset which is balanced by gender and skin type.

... who is hired, fired, granted a loan, or how long an individual spends in prison, decisions that have traditionally been performed by humans are rapidly made by algorithms (O'Neil, 2017; Citron and Pasquale, 2014). Even AI-based technologies that are not specifically trained to perform high-stakes tasks (such as determining how long someone spends in prison) can be used in a pipeline that performs such tasks. For example, while face recognition software by itself should not be trained to determine the fate of an individual in the criminal justice system, it is very likely that such software is used to identify suspects. Thus, an error in the output of a face recognition algorithm used as input for other tasks can have se...
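
The two measurements the abstract describes, the composition of a benchmark by gender and skin type and the evaluation of a classifier per phenotypic subgroup, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function `subgroup_report`, the record layout, and the six toy records are invented here and do not reproduce any figure from the paper.

```python
from collections import Counter, defaultdict


def subgroup_report(records):
    """Summarise a labelled benchmark per (gender, skin_type) subgroup.

    Each record is a hypothetical tuple (gender, skin_type, predicted_gender).
    Returns, per subgroup, its share of the dataset and the classifier accuracy.
    """
    counts = Counter()
    correct = defaultdict(int)
    for gender, skin_type, predicted_gender in records:
        group = (gender, skin_type)
        counts[group] += 1
        correct[group] += int(predicted_gender == gender)
    total = sum(counts.values())
    return {
        group: {
            "share": counts[group] / total,
            "accuracy": correct[group] / counts[group],
        }
        for group in counts
    }


# Invented toy records, for illustration only (not data from the paper).
records = [
    ("female", "darker", "male"),
    ("female", "darker", "female"),
    ("female", "lighter", "female"),
    ("male", "darker", "male"),
    ("male", "lighter", "male"),
    ("male", "lighter", "male"),
]

report = subgroup_report(records)
for group in sorted(report):
    stats = report[group]
    print(group, f"share={stats['share']:.2f}", f"accuracy={stats['accuracy']:.2f}")
```

Reporting accuracy per intersectional subgroup rather than one aggregate figure is the design point: a single overall number can hide a subgroup on which the classifier performs much worse.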