Investigating Diagrammatic Reasoning with Deep Neural Networks

Recommended publications

MGF 1106 Learning Objectives

Learning Objectives

L01 Set Concepts and Subsets (2.1, 2.2)

1. Indicate sets by the description method, roster method, and by using set builder notation.
2. Determine if a set is well defined.
3. Determine if a set is finite or infinite.
4. Determine if sets are equal, equivalent, or neither.
5. Find the cardinal number of a set.
6. Determine if a set is equal to the empty set.
7. Use the proper notation for the empty set.
8. Give an example of a universal set and list all of its subsets and all of its proper subsets.
9. Properly use notation for element, subset, and proper subset.
10. Determine if a number represents a cardinal number or an ordinal number.
11. Be able to determine the number of subsets and proper subsets that can be formed from a universal set without listing them.

L02 Venn Diagrams and Set Operations (2.3, 2.4)

1. Determine if sets are disjoint.
2. Find the complement of a set.
3. Find the intersection of two sets.
4. Find the union of two sets.
5. Find the difference of two sets.
6. Apply several set operations involved in a statement.
7. Determine sets from a Venn diagram.
8. Use the formula that yields the cardinal number of a union.
9. Construct a Venn diagram given two sets.
10. Construct a Venn diagram given three sets.

L03 Equality of Sets; Applications of Sets (2.4, 2.5)

1. Determine if set statements are equal by using Venn diagrams or DeMorgan's laws.
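Several of the objectives above (counting subsets without listing them, the cardinal number of a union, and DeMorgan's laws) can be checked mechanically. The following is a minimal Python sketch, not part of the course material; the sets `U`, `A`, `B`, and `small` are hypothetical examples chosen only for illustration.

```python
from itertools import combinations


def subsets(universe):
    """Return every subset of `universe` as a list of frozensets."""
    items = list(universe)
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]


# Hypothetical example sets (assumptions, not taken from the course).
U = frozenset(range(1, 9))
A = frozenset({1, 2, 3, 4})
B = frozenset({3, 4, 5, 6})

# A set with n elements has 2**n subsets and 2**n - 1 proper subsets.
small = frozenset({"a", "b", "c"})
all_subsets = subsets(small)
proper_subsets = [s for s in all_subsets if s != small]

# Cardinal number of a union: n(A ∪ B) = n(A) + n(B) - n(A ∩ B).
union_size = len(A) + len(B) - len(A & B)

# DeMorgan's laws, with complements taken relative to U.
de_morgan_1 = (U - (A | B)) == ((U - A) & (U - B))
de_morgan_2 = (U - (A & B)) == ((U - A) | (U - B))
```

Listing the subsets is done here only to confirm the count; the objective itself asks for the count `2**n` without enumeration.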

Intuitionistic Euler-Venn Diagrams

Intuitionistic Euler-Venn Diagrams

Sven Linker [ORCID 0000-0003-2913-7943], University of Liverpool, UK, [email protected]

Abstract. We present an intuitionistic interpretation of Euler-Venn diagrams with respect to Heyting algebras. In contrast to classical Euler-Venn diagrams, we treat shaded and missing zones differently, to have diagrammatic representations of conjunction, disjunction and intuitionistic implication. Furthermore, we need to add new syntactic elements to express these concepts. We present a cut-free sequent calculus for this language, and prove it to be sound and complete. Furthermore, we show that the rules of cut, weakening and contraction are admissible.

Keywords: intuitionistic logic · Euler-Venn diagrams · proof theory

1 Introduction

Most visualisations for logical systems, like Peirce's Existential Graphs [6] and the Venn systems of Shin [16], are dedicated to some form of classical reasoning. However, for example, within Computer Science, constructive reasoning in the form of intuitionistic logic is very important as well, due to the Curry-Howard correspondence of constructive proofs and programs, or, similarly, of formulas and types. That is, each formula corresponds to a unique type, and a proof of the formula corresponds to the execution of a function of this type. Hence, a visualisation of intuitionistic logic would be beneficial not only from the perspective of formal logic, but also for visualising program types and their relations. Typical semantics of intuitionistic logic are given in the form of Heyting algebras, a slight generalisation of Boolean algebras, and an important subclass of Heyting algebras is induced by topologies: the set of open sets of a topology forms a Heyting algebra.
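The closing remark, that the open sets of a topology form a Heyting algebra, can be illustrated on a very small example. The sketch below is not taken from the paper: the three-point topology `OPENS` and the helper names `interior`, `implies`, and `neg` are assumptions made for illustration. It uses the standard definition of Heyting implication on open sets, namely the interior of the union of the complement of `a` with `b`.

```python
# A hypothetical finite topology on X = {1, 2, 3}; the opens form a chain.
X = frozenset({1, 2, 3})
OPENS = [frozenset(), frozenset({1}), frozenset({1, 2}), X]


def interior(s):
    """Largest open set contained in s (union of all opens inside s)."""
    result = frozenset()
    for o in OPENS:
        if o <= s:
            result |= o
    return result


def implies(a, b):
    """Heyting implication: the largest open c with (c & a) <= b."""
    return interior((X - a) | b)


def neg(a):
    """Intuitionistic negation, defined as a -> empty set."""
    return implies(a, frozenset())


a = frozenset({1})
excluded_middle = a | neg(a)   # join of a and its negation
double_negation = neg(neg(a))
```

In this topology `neg(a)` is empty, so `a | neg(a)` is only `{1}` rather than all of `X`: the law of excluded middle fails, which is what distinguishes a Heyting algebra from a Boolean one.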

Introducing 3D Venn and Euler Diagrams

Introducing 3D Venn and Euler Diagrams

Peter Rodgers (1), Jean Flower (2), and Gem Stapleton (3)

(1) University of Kent, UK, [email protected]; (2) Autodesk, UK; (3) Visual Modelling Group, University of Brighton, UK, [email protected]

Abstract. In 2D, Venn and Euler diagrams consist of labelled simple closed curves and have been widely studied. The advent of 3D display and interaction mechanisms means that extending these diagrams to 3D is now feasible. However, 3D versions of these diagrams have not yet been examined. Here, we begin the investigation into 3D Euler diagrams by defining them to comprise labelled, orientable closed surfaces. As in 2D, these 3D Euler diagrams visually represent the set-theoretic notions of intersection, containment and disjointness. We extend the concept of wellformedness to the 3D case and compare it to wellformedness in the 2D case. In particular, we demonstrate that some data can be visualized with wellformed 3D diagrams that cannot be visualized with wellformed 2D diagrams. We also note that whilst there is only one topologically distinct embedding of wellformed Venn-3 in 2D, there are four such embeddings in 3D when the surfaces are topologically equivalent to spheres. Furthermore, we hypothesize that all data sets can be visualized with 3D Euler diagrams whereas this is not the case for 2D Euler diagrams, unless non-simple curves and/or duplicated labels are permitted. As this paper is the first to consider 3D Venn and Euler diagrams, we include a set of open problems and conjectures to stimulate further research.

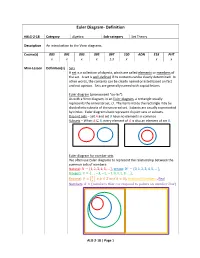

Euler Diagram- Definition

Euler Diagram- Definition #ALG-2-18

Category: Algebra

Sub-category: Set Theory

Description: An introduction to the Venn diagrams.

Course(s): 085 091 093 095 097 100 ADN E18 PHT x x x x 1.3 x x x

Mini-Lesson

Definition(s)

Sets: A set is a collection of objects, which are called elements or members of the set. A set is well-defined if its contents can be clearly determined. In other words, the contents can be clearly named or listed based on fact and not opinion. Sets are generally named with capital letters.

Euler diagram (pronounced “oy-ler”): As with a Venn diagram, in an Euler diagram, a rectangle usually represents the universal set, U. The items inside the rectangle may be divided into subsets of the universal set. Subsets are usually represented by circles. Euler diagrams best represent disjoint sets or subsets.

Disjoint sets: Set A and set B have no elements in common.

Subsets: When A ⊆ B, every element of A is also an element of set B.

Euler diagram for number sets: We often use Euler diagrams to represent the relationship between the common sets of numbers:

Natural: N = {1, 2, 3, 4, 5, …}

Whole: W = {0, 1, 2, 3, 4, 5, …}

Integers: Z = {…, −3, −2, −1, 0, 1, 2, 3, …}

Rational: Q = {a/b | a, b ∈ Z and b ≠ 0}

Irrational Numbers

Real Numbers: R = {numbers that correspond to points on the number line}

Rule: Familiarize yourself with Euler Diagrams.

Example: Consider the set of numbers: {−10, −0.

Constructing Internal Diagrammatic Proofs from External Logic Diagrams

Constructing internal diagrammatic proofs from external logic diagrams

Yuri Sato, Koji Mineshima, and Ryo Takemura, Department of Philosophy, Keio University, {sato, minesima, takemura}@abelard.flet.keio.ac.jp

Abstract

Internal syntactic operations on diagrams play a key role in accounting for efficacy of diagram use in reasoning. However, it is often held that in the case of complex deductive reasoning, diagrams can serve merely as an auxiliary source of information in interpreting sentences or constructing models. Based on experiments comparing subjects’ performances in syllogism solving where logic diagrams of several different forms are used, we argue that internal manipulations of diagrams, or what we call internal constructions of diagrammatic proofs, actually exist, and that such constructions are naturally triggered even for users without explicit prior knowledge of their inference rules or strategies.

Keywords: External representation; Diagrammatic reasoning; Logic diagram; Deductive reasoning

Introduction

[…] “No C are A”. In what follows, we call such a syntactic manipulation of diagrams to derive a conclusion of deductive reasoning a construction of a diagrammatic proof. The point here is that by unifying two diagrams in premises and observing the topological relationship between the circles, one can automatically read off the correct conclusion. Shimojima (1996) calls this a “free ride” property, and shows that it can be seen to exist in other kinds of diagram use in reasoning and problem solving.

In general, a deductive reasoning task would be easy if it could be replaced with a task of constructing a concrete diagrammatic proof. Typically, such a construction is supposed to be triggered by external diagrams and carried out internally, without actual drawing or movement of physical objects.

Interactive Visualization for Interdisciplinary Research

©2016 Society for Imaging Science and Technology

Interactive Visualization for Interdisciplinary Research

Naomi Keena*, Mohamed Aly Etman*1, Josh Draper, Paulo Pinheiro, Anna Dyson; Center for Architecture Science and Ecology (CASE), Rensselaer Polytechnic Institute (RPI), New York, New York.

Abstract

Studies show that many multi-scalar research problems cannot easily be addressed from the confines of individual disciplines for they require the participation of many experts, each viewing the problem from their distinctive disciplinary perspective. The bringing together of disparate experts or fields of expertise is known as interdisciplinary research. The benefit of such an approach is that discourse and collaboration among experts in distinct fields can generate new insights to the research problem at hand. With this approach comes large amounts of multivariate data and understanding the possible relationships between variables and their corresponding relevance to the problem is in itself a challenge.

[…] an interdisciplinary context the need to reveal relationships between constituents presents a complex representational problem. A successful visualization can make the relationships between datasets comprehendible, offering a shorter route to help guide the decision making process and become a tool to convey information critically [5]. Studies show that the difficulty of interdisciplinary work is often disciplinarily in nature, in that a discipline is seen as an identity or boundary condition of specification not differentiation. In order for interdisciplinary work to occur such boundaries or stereotypes need to be broken. To help break these boundaries and allow researchers to better understand how their knowledge can enhance the interdisciplinary process by assisting in the completion of a holistic evaluation of an interdisciplinary problem, studies in this area

Drawing Area-Proportional Venn and Euler Diagrams

Drawing Area-Proportional Venn and Euler Diagrams

Stirling Chow and Frank Ruskey, Department of Computer Science, University of Victoria, Canada, {schow,fruskey}@cs.uvic.ca

Abstract. We consider the problem of drawing Venn diagrams for which each region’s area is proportional to some weight (e.g., population or percentage) assigned to that region. These area-proportional Venn diagrams have an enhanced ability over traditional Venn diagrams to visually convey information about data sets with interacting characteristics. We develop algorithms for drawing area-proportional Venn diagrams for any population distribution over two characteristics using circles and over three characteristics using rectangles and near-rectangular polygons; modifications of these algorithms are then presented for drawing the more general Euler diagrams. We present results concerning which population distributions can be drawn using specific shapes. A program to aid further investigation of area-proportional Venn diagrams is also described.

1 Introduction

In 1880, John Venn introduced a notation for representing logical propositions using intersecting Jordan curves [11]. These Venn diagrams were specialized instances of a more general notation for representing set relations which Leonhard Euler developed in the 18th century [5]. Many people encounter the familiar 2-set and 3-set Venn diagrams shown in Fig. 1 at some point in their mathematical education; they are often used to introduce students to set theory. Informally, an n-set Venn diagram is comprised of n Jordan curves. The curves divide the plane into 2^n connected sets of points called regions which uniquely represent all possible intersections of the (open) interiors and exteriors of the curves.
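For the two-characteristic case with circles, one way to picture the problem is as follows: fix each circle's radius from its total weight, then choose the distance between centres so that the overlap (lens) area equals the weight of the shared region. The Python sketch below is an illustration under that assumption and is not claimed to be the authors' algorithm; the function names and the use of bisection are choices made here. The lens area uses the standard formula for the intersection of two circles.

```python
import math


def lens_area(r1, r2, d):
    """Area of the intersection of two circles with radii r1, r2
    whose centres are a distance d apart."""
    if d >= r1 + r2:                      # circles do not overlap
        return 0.0
    if d <= abs(r1 - r2):                 # one circle lies inside the other
        return math.pi * min(r1, r2) ** 2

    def clamp(x):
        # Guard acos against tiny floating-point overshoot.
        return max(-1.0, min(1.0, x))

    a1 = math.acos(clamp((d * d + r1 * r1 - r2 * r2) / (2 * d * r1)))
    a2 = math.acos(clamp((d * d + r2 * r2 - r1 * r1) / (2 * d * r2)))
    prod = (-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2)
    return r1 * r1 * a1 + r2 * r2 * a2 - 0.5 * math.sqrt(max(0.0, prod))


def two_circle_layout(only_a, only_b, both):
    """Return (r1, r2, d) so that the three region areas match the weights.

    Bisection works because the lens area decreases as d grows.
    """
    r1 = math.sqrt((only_a + both) / math.pi)
    r2 = math.sqrt((only_b + both) / math.pi)
    lo, hi = abs(r1 - r2), r1 + r2
    for _ in range(100):
        mid = (lo + hi) / 2
        if lens_area(r1, r2, mid) > both:
            lo = mid                      # too much overlap: move apart
        else:
            hi = mid                      # too little overlap: move closer
    return r1, r2, (lo + hi) / 2


# Hypothetical weights: 30 only in A, 20 only in B, 10 in both.
r1, r2, d = two_circle_layout(30.0, 20.0, 10.0)
```

With two circles every non-negative weight triple can be matched this way, because the overlap can take any value between zero and the area of the smaller circle.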

SPEULER: Semantics-Preserving Euler Diagrams

To appear in IEEE Transactions on Visualization and Computer Graphics

SPEULER: Semantics-preserving Euler Diagrams

Rebecca Kehlbeck, Jochen Görtler, Yunhai Wang, and Oliver Deussen

[Figure 1, three panels: (a) Original (https://xkcd.com/2122/); (b) Reconstructed using our method; (c) Well-matched result using our method]

Fig. 1: Venn diagrams are often used to highlight complex interactions of sets. This example from xkcd.com shows which adjectives can be used in combination (a). Using our method, we can recreate this manually created Venn diagram (b).

Topological Venn Diagrams and Graphs

International Journal of Science and Research (IJSR), ISSN: 2319-7064, ResearchGate Impact Factor (2018): 0.28 | SJIF (2018): 7.426

Topological Venn Diagrams and Graphs

T. Jean Brice (1), G. Easwara Prasad (2), G. Selvam (3)

(1) Department of Mathematics, Research Scholar, S.T.Hindu College, Nagercoil; (2) Department of Mathematics, Head of the Department, S.T.Hindu College, Nagercoil; (3) Department of Mathematics, Vinayaka Mission’s, Kirupananda Variyar Engineering College, Vinayaka Mission’s Research Foundation, (Deemed to be University), Salem 636 308, India

Abstract: This paper is an attempt to expound Venn diagrams and their applications in Topology and Graph Theory. John Venn introduced Venn diagrams in 1880. Venn diagrams can also be called Eulerian circles; Euler invented these in the 18th century. A Venn diagram consists of curves and circles. Venn diagrams are similar to Euler diagrams. Edwards discusses how the dual graph of a Venn diagram is a maximal planar subgraph. Edwards-Venn diagrams are topologically equivalent to diagrams with graphs. 2D and 3D Venn diagrams consist of labeled simple closed curves. 3D Venn diagrams and 3D Euler diagrams are all combinations of surface intersections. In brief, this paper will give a vivid picture of Venn diagrams and their close relationship with Topology and Graph Theory.

Keywords: Venn diagram – Euler diagram – planar Venn diagram – Topologically faithful Venn diagram – 2D and 3D Venn diagram

1. Introduction

A Venn diagram is a diagram that shows all possible logical relations between a finite collection of different sets.

[…] by a subset of 1, 2, …, n, indicating the subset of the indices of the curves whose interiors are included in the intersection. Pairs of curves are assumed to intersect only at

Logic Made Easy: How to Know When Language Deceives

LOGIC MADE EASY: How to Know When Language Deceives You

Deborah J. Bennett

Also by Deborah J. Bennett: Randomness

W. W. Norton & Company, New York, London

Copyright © 2004 by Deborah J. Bennett. All rights reserved. Printed in the United States of America. First Edition.

For information about permission to reproduce selections from this book, write to Permissions, W. W. Norton & Company, Inc., 500 Fifth Avenue, New York, NY 10110.

Manufacturing by The Haddon Craftsmen, Inc. Book design by Margaret M. Wagner. Production manager: Julia Druskin.

Library of Congress Cataloging-in-Publication Data: Bennett, Deborah J., 1950- Logic made easy : how to know when language deceives you / Deborah J. Bennett.— 1st ed. p. cm. Includes bibliographical references and index. ISBN 0-393-05748-8 1. Reasoning. 2. Language and logic. I. Title. BC177 .B42 2004 160—dc22 2003026910

W. W. Norton & Company, Inc., 500 Fifth Avenue, New York, N.Y. 10110, www.wwnorton.com

W. W. Norton & Company Ltd., Castle House, 75/76 Wells Street, London W1T 3QT

1234567890

CONTENTS

INTRODUCTION: LOGIC IS RARE. The mistakes we make; Logic should be everywhere; How history can help

1 PROOF. Consistency is all I ask; Proof by contradiction; Disproof

2 ALL. All S are P; Vice Versa; Familiarity—help or hindrance?; Clarity or brevity?

3 A NOT TANGLES EVERYTHING UP. The trouble with not; Scope of the negative; A and E propositions; When no means yes—the "negative pregnant" and double negative

4 SOME IS PART OR ALL OF ALL. Some

Exact and Approximate Area-Proportional Circular Venn and Euler Diagrams

Exact and Approximate Area-proportional Circular Venn and Euler Diagrams

Leland Wilkinson

Abstract: Scientists conducting microarray and other experiments use circular Venn and Euler diagrams to analyze and illustrate their results. As one solution to this problem, this article introduces a statistical model for fitting area-proportional Venn and Euler diagrams to observed data. The statistical model outlined in this report includes a statistical loss function and a minimization procedure that enables formal estimation of the Venn/Euler area-proportional model for the first time. A significance test of the null hypothesis is computed for the solution. Residuals from the model are available for inspection. As a result, this algorithm can be used for both exploration and inference on real datasets. A Java program implementing this algorithm is available under the Mozilla Public License. An R function venneuler() is available as a package in CRAN and a plugin is available in Cytoscape.

Index Terms: visualization, bioinformatics, statistical graphics

1 INTRODUCTION

Venn diagrams are collections of n simple closed curves dividing the plane into 2^n nonempty connected regions uniquely representing all possible intersections of the interiors and exteriors of the curves [51]. The requirement that the curves be simple means that no more than two curves may intersect in a single point.

[…] researchers use Venn and Euler diagrams to locate genes induced or repressed above a user-defined threshold. Consistencies across experiments are expected to yield large overlapping areas. An informal survey of 72 Venn/Euler diagrams published in articles from the 2009 volumes of Science, Nature, and online affiliated journals shows these diagrams have several common features: 1) almost all of them (65/72)
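The 2^n regions mentioned in the introduction correspond to the 2^n ways of being inside or outside each of the n curves, and the number of data items falling in each region is the quantity an area-proportional diagram tries to match. The Python sketch below only illustrates that bookkeeping; it is not the venneuler() implementation, and the names `venn_regions` and `region_counts` as well as the sample data are hypothetical.

```python
from itertools import product


def venn_regions(labels):
    """Return the 2**n regions of an n-set Venn diagram.

    Each region is the frozenset of labels whose interior contains it;
    the empty frozenset is the region outside every curve.
    """
    regions = []
    for inside in product([False, True], repeat=len(labels)):
        regions.append(frozenset(
            label for label, flag in zip(labels, inside) if flag))
    return regions


def region_counts(named_sets):
    """Count how many elements of the data fall in each Venn region."""
    counts = {region: 0 for region in venn_regions(sorted(named_sets))}
    universe = set().union(*named_sets.values())
    for x in universe:
        region = frozenset(
            name for name, members in named_sets.items() if x in members)
        counts[region] += 1
    return counts


# Hypothetical data: identifiers that passed a threshold in two experiments.
data = {"A": {1, 2, 3}, "B": {3, 4}}
counts = region_counts(data)
```

Regions with a count of zero are the ones an Euler diagram may omit, whereas a Venn diagram must still draw them.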

Well-Matchedness in Euler Diagrams

Well-matchedness in Euler Diagrams

Mithileysh Sathiyanarayanan and John Howse, Visual Modelling Group, University of Brighton, UK, fM.Sathiyanarayanan,[email protected]

Abstract. Euler diagrams are used for visualizing set-based information. Closed curves represent sets and the relationships between the curves correspond to relationships between sets. A notation is well-matched to meaning when its syntactic relationships are reflected in the semantic relationships being represented. Euler diagrams are said to be well-matched to meaning because, for example, curve containment corresponds to the subset relationship. In this paper we explore the concept of well-matchedness in Euler diagrams, considering different levels of well-matchedness. We also discuss how the properties, sometimes called well-formedness conditions, of an Euler diagram relate to the levels of well-matchedness.

1 Introduction

An Euler diagram is a collection of closed curves in the plane. Curves represent sets and the spatial relationships between the curves represent relationships between the sets. The lefthand diagram in fig. 1 represents the set-theoretic relationships C is a subset of A and A is disjoint from B. The righthand diagram in fig. 1 is a Venn diagram and has the same semantics as this Euler diagram. A Venn diagram contains all possible intersections of curves and shading is used to indicate empty sets. For example, the intersection between the curves labelled A and B is shaded indicating that the sets A and B are disjoint. The only part of the curve labelled C that is unshaded is that part within A and outside B indicating that C is a subset of A.
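The correspondence between the Euler diagram and the shaded Venn diagram described above can be spelled out by listing the eight zones over A, B, and C and shading those that the two relationships rule out. The following Python sketch is only an illustration of that reading; the zone representation (a set of the curve labels the zone lies inside) and the helper names are assumptions made here, not notation from the paper.

```python
from itertools import combinations

LABELS = ("A", "B", "C")


def all_zones(labels):
    """Every Venn zone, written as the set of curves it lies inside."""
    return [frozenset(c)
            for r in range(len(labels) + 1)
            for c in combinations(labels, r)]


def violates_subset(zone, sub, sup):
    """A zone inside `sub` but outside `sup` must be empty if sub ⊆ sup."""
    return sub in zone and sup not in zone


def violates_disjoint(zone, x, y):
    """A zone inside both x and y must be empty if x and y are disjoint."""
    return x in zone and y in zone


# Relationships from the example: C is a subset of A, A is disjoint from B.
shaded = [z for z in all_zones(LABELS)
          if violates_subset(z, "C", "A") or violates_disjoint(z, "A", "B")]
unshaded = [z for z in all_zones(LABELS) if z not in shaded]

# The unshaded zones inside C; per the text, only "within A, outside B".
unshaded_in_c = [z for z in unshaded if "C" in z]
```

The four unshaded zones are exactly the zones that the Euler diagram draws, which is one concrete sense in which the two diagrams have the same semantics.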