Scattered Data Interpolation for Computer Graphics V1.0

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

On Multivariate Interpolation

On Multivariate Interpolation

Peter J. Olver†, School of Mathematics, University of Minnesota, Minneapolis, MN 55455, U.S.A. [email protected] http://www.math.umn.edu/~olver

Abstract. A new approach to interpolation theory for functions of several variables is proposed. We develop a multivariate divided difference calculus based on the theory of non-commutative quasi-determinants. In addition, intriguing explicit formulae that connect the classical finite difference interpolation coefficients for univariate curves with multivariate interpolation coefficients for higher dimensional submanifolds are established.

† Supported in part by NSF Grant DMS 11–08894. April 6, 2016

1. Introduction.

Interpolation theory for functions of a single variable has a long and distinguished history, dating back to Newton’s fundamental interpolation formula and the classical calculus of finite differences, [7, 47, 58, 64]. Standard numerical approximations to derivatives and many numerical integration methods for differential equations are based on the finite difference calculus. However, historically, no comparable calculus was developed for functions of more than one variable. If one looks up multivariate interpolation in the classical books, one is essentially restricted to rectangular, or, slightly more generally, separable grids, over which the formulae are a simple adaptation of the univariate divided difference calculus. See [19] for historical details.

Starting with G. Birkhoff, [2] (who was, coincidentally, my thesis advisor), recent years have seen a renewed level of interest in multivariate interpolation among both pure and applied researchers; see [18] for a fairly recent survey containing an extensive bibliography. De Boor and Ron, [8, 12, 13], and Sauer and Xu, [61, 10, 65], have systematically studied the polynomial case.

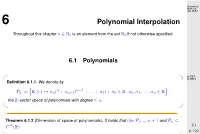

Polynomial Interpolation

Numerical Methods 401-0654

6 Polynomial Interpolation

Throughout this chapter $n \in \mathbb{N}_0$ is an element from the set $\mathbb{N}_0$ if not otherwise specified.

6.1 Polynomials

Definition 6.1.1. We denote by

$$\mathcal{P}_n := \{\, \mathbb{R} \ni t \mapsto \alpha_n t^n + \alpha_{n-1} t^{n-1} + \dots + \alpha_1 t + \alpha_0 \in \mathbb{R} \;:\; \alpha_0, \alpha_1, \dots, \alpha_n \in \mathbb{R} \,\}$$

the $\mathbb{R}$-vector space of polynomials with degree $\le n$.

Theorem 6.1.2 (Dimension of space of polynomials). It holds that $\dim \mathcal{P}_n = n + 1$ and $\mathcal{P}_n \subset C^\infty(\mathbb{R})$.

Remark 6.1.1 (Polynomials in Matlab). MATLAB: $\alpha_n t^n + \alpha_{n-1} t^{n-1} + \dots + \alpha_0$ ➙ vector $(\alpha_n, \alpha_{n-1}, \dots, \alpha_0)$ (ordered!).

Remark 6.1.2 (Horner scheme). Evaluation of a polynomial in monomial representation: Horner scheme

$$p(t) = t\bigl(\cdots t\bigl(t(\alpha_n t + \alpha_{n-1}) + \alpha_{n-2}\bigr) + \cdots + \alpha_1\bigr) + \alpha_0 .$$

Code 6.1: Horner scheme, polynomial in MATLAB format

    function y = polyval(p,x)
    y = p(1); for i=2:length(p), y = x*y+p(i); end

Asymptotic complexity: $O(n)$. Use: MATLAB “built-in” function polyval(p,x).

6.2 Polynomial Interpolation: Theory

Goal: (re-)construction of a polynomial (function) from pairs of values (fit).

Lagrange polynomial interpolation problem. Given the nodes $-\infty < t_0 < t_1 < \dots < t_n < \infty$ and the values $y_0, \dots, y_n \in \mathbb{R}$, compute $p \in \mathcal{P}_n$ such that

$$\forall j \in \{0, 1, \dots, n\}: \quad p(t_j) = y_j .$$

6.2.1 Lagrange polynomials

Definition 6.2.1.
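The Horner scheme of Remark 6.1.2 can be sketched in Python as follows. This is a minimal illustration rather than the lecture's own code: the function name `horner` is hypothetical, and the coefficient ordering $(\alpha_n, \dots, \alpha_0)$ is taken from Remark 6.1.1.

```python
def horner(coeffs, t):
    """Evaluate a polynomial at t with the Horner scheme.

    coeffs is ordered from the highest power down: (alpha_n, ..., alpha_0).
    The name `horner` is a hypothetical choice for this sketch.
    """
    y = coeffs[0]
    for a in coeffs[1:]:
        # One multiplication and one addition per coefficient: O(n) overall.
        y = t * y + a
    return y


# p(t) = 2 t^2 + 3 t + 1 evaluated at t = 2
print(horner([2.0, 3.0, 1.0], 2.0))
```

The loop performs one multiplication and one addition per coefficient, which is where the $O(n)$ complexity comes from.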

Sparse Polynomial Interpolation and Testing

Sparse Polynomial Interpolation and Testing by Andrew Arnold. A thesis presented to the University of Waterloo in fulfillment of the thesis requirement for the degree of Doctor of Philosophy in Computer Science. Waterloo, Ontario, Canada, 2016. © Andrew Arnold 2016

I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public.

Abstract

Interpolation is the process of learning an unknown polynomial f from some set of its evaluations. We consider the interpolation of a sparse polynomial, i.e., where f is comprised of a small, bounded number of terms. Sparse interpolation dates back to work in the late 18th century by the French mathematician Gaspard de Prony, and was revitalized in the 1980s due to advancements by Ben-Or and Tiwari, Blahut, and Zippel, amongst others. Sparse interpolation has applications to learning theory, signal processing, error-correcting codes, and symbolic computation. Closely related to sparse interpolation are two decision problems. Sparse polynomial identity testing is the problem of testing whether a sparse polynomial f is zero from its evaluations. Sparsity testing is the problem of testing whether f is in fact sparse. We present effective probabilistic algebraic algorithms for the interpolation and testing of sparse polynomials. These algorithms assume black-box evaluation access, whereby the algorithm may specify the evaluation points. We measure algorithmic costs with respect to the number and types of queries to a black-box oracle.

Sparse Polynomial Interpolation Codes and Their Decoding Beyond Half the Minimum Distance *

Sparse Polynomial Interpolation Codes and their Decoding Beyond Half the Minimum Distance *

Erich L. Kaltofen, Dept. of Mathematics, NCSU, Raleigh, NC 27695, USA. [email protected] www4.ncsu.edu/~kaltofen

Clément Pernet, U. J. Fourier, LIP-AriC, CNRS, Inria, UCBL, ÉNS de Lyon, 46 Allée d’Italie, 69364 Lyon Cedex 7, France. [email protected] http://membres-liglab.imag.fr/pernet/

ABSTRACT

We present algorithms performing sparse univariate polynomial interpolation with errors in the evaluations of the polynomial. Based on the initial work by Comer, Kaltofen and Pernet [Proc. ISSAC 2012], we define the sparse polynomial interpolation codes and state that their minimal distance is precisely the code-word length divided by twice the sparsity. At ISSAC 2012, we have given a decoding algorithm for as much as half the minimal distance and a list decoding algorithm up to the minimal distance. Our new polynomial-time list decoding algorithm uses sub-sequences of the received evaluations indexed by an arithmetic progression, allowing the decoding for a larger radius, that is, more errors in the evaluations

General Terms: Algorithms, Reliability

Keywords: sparse polynomial interpolation, Blahut's algorithm, Prony's algorithm, exact polynomial fitting with errors.

1. INTRODUCTION

Evaluation-interpolation schemes are a key ingredient in many of today's computations. Model fitting for empirical data sets is a well-known one, where additional information on the model helps improving the fit. In particular, models of natural phenomena often happen to be sparse, which has motivated a wide range of research including compressive sensing [4], and sparse interpolation of polynomials [23, 1, 15, 13, 9, 11].

Chinese Remainder Theorem

THE CHINESE REMAINDER THEOREM

KEITH CONRAD

We should thank the Chinese for their wonderful remainder theorem. Glenn Stevens

1. Introduction

The Chinese remainder theorem says we can uniquely solve every pair of congruences having relatively prime moduli.

Theorem 1.1. Let $m$ and $n$ be relatively prime positive integers. For all integers $a$ and $b$, the pair of congruences

$$x \equiv a \bmod m, \qquad x \equiv b \bmod n$$

has a solution, and this solution is uniquely determined modulo $mn$.

What is important here is that $m$ and $n$ are relatively prime. There are no constraints at all on $a$ and $b$.

Example 1.2. The congruences $x \equiv 6 \bmod 9$ and $x \equiv 4 \bmod 11$ hold when $x = 15$, and more generally when $x \equiv 15 \bmod 99$, and they do not hold for other $x$. The modulus 99 is $9 \cdot 11$.

We will prove the Chinese remainder theorem, including a version for more than two moduli, and see some ways it is applied to study congruences.

2. A proof of the Chinese remainder theorem

Proof. First we show there is always a solution. Then we will show it is unique modulo $mn$.

Existence of Solution. To show that the simultaneous congruences

$$x \equiv a \bmod m, \qquad x \equiv b \bmod n$$

have a common solution in $\mathbf{Z}$, we give two proofs.

First proof: Write the first congruence as an equation in $\mathbf{Z}$, say $x = a + my$ for some $y \in \mathbf{Z}$. Then the second congruence is the same as

$$a + my \equiv b \bmod n.$$

Subtracting $a$ from both sides, we need to solve for $y$ in

$$my \equiv b - a \bmod n. \tag{2.1}$$

Since $(m, n) = 1$, we know $m \bmod n$ is invertible.
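The first existence proof is constructive, so it can be turned into a short computation. The sketch below follows the substitution $x = a + my$ and solves $my \equiv b - a \bmod n$ by inverting $m$ modulo $n$. The function name `crt_pair` is a hypothetical choice, and the modular inverse relies on Python's built-in three-argument `pow` with exponent `-1` (available from Python 3.8), which is an implementation choice rather than anything the source prescribes.

```python
def crt_pair(a, m, b, n):
    """Solve x = a (mod m), x = b (mod n) for relatively prime m and n.

    Returns (x, m*n) with 0 <= x < m*n. The name `crt_pair` is hypothetical.
    """
    # Write x = a + m*y; then m*y = b - a (mod n).
    # m is invertible mod n because gcd(m, n) = 1;
    # pow raises ValueError if no inverse exists.
    m_inv = pow(m, -1, n)
    y = ((b - a) * m_inv) % n
    return (a + m * y) % (m * n), m * n


# Example 1.2: x = 6 mod 9 and x = 4 mod 11
x, modulus = crt_pair(6, 9, 4, 11)
print(x, modulus)  # 15 99
```

Running it on Example 1.2 reproduces the solution $x \equiv 15 \bmod 99$.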

ANL-7826: Chinese Remainder and Interpolation Algorithms

ANL-7826 CHINESE REMAINDER AND INTERPOLATION ALGORITHMS

John D. Lipson

ARGONNE NATIONAL LABORATORY, ARGONNE, ILLINOIS. Prepared for the U.S. ATOMIC ENERGY COMMISSION under contract W-31-109-Eng-38

The facilities of Argonne National Laboratory are owned by the United States Government. Under the terms of a contract (W-31-109-Eng-38) between the U. S. Atomic Energy Commission, Argonne Universities Association and The University of Chicago, the University employs the staff and operates the Laboratory in accordance with policies and programs formulated, approved and reviewed by the Association.

MEMBERS OF ARGONNE UNIVERSITIES ASSOCIATION

The University of Arizona; Carnegie-Mellon University; Case Western Reserve University; The University of Chicago; University of Cincinnati; Illinois Institute of Technology; University of Illinois; Indiana University; Iowa State University; The University of Iowa; Kansas State University; The University of Kansas; Loyola University; Marquette University; Michigan State University; The University of Michigan; University of Minnesota; University of Missouri; Northwestern University; University of Notre Dame; The Ohio State University; Ohio University; The Pennsylvania State University; Purdue University; Saint Louis University; Southern Illinois University; The University of Texas at Austin; Washington University; Wayne State University; The University of Wisconsin

NOTICE

This report was prepared as an account of work sponsored by the United States Government. Neither the United States nor the United States Atomic Energy Commission, nor any of their employees, nor any of their contractors, subcontractors, or their employees, makes any warranty, express or implied, or assumes any legal liability or responsibility for the accuracy, completeness or usefulness of any information, apparatus, product or process disclosed, or represents that its use would not infringe privately-owned rights.

Polynomial Interpolation: Summary

Polynomial Interpolation: Summary

• Statement of problem. We are given a table of $n + 1$ data points $(x_i, y_i)$:

| $x$ | $x_0$ | $x_1$ | $x_2$ | $\dots$ | $x_n$ |
|---|---|---|---|---|---|
| $y$ | $y_0$ | $y_1$ | $y_2$ | $\dots$ | $y_n$ |

and seek a polynomial $p$ of lowest degree such that $p(x_i) = y_i$ for $0 \le i \le n$. Such a polynomial is said to interpolate the data.

• Finding the interpolating polynomial using the Vandermonde matrix. Here, $p_n(x) = a_0 + a_1 x + a_2 x^2 + \dots + a_n x^n$, where the coefficients are found by imposing $p(x_i) = y_i$ for each $i = 0$ to $n$. The resulting system is

$$\begin{pmatrix} 1 & x_0 & x_0^2 & \cdots & x_0^n \\ 1 & x_1 & x_1^2 & \cdots & x_1^n \\ 1 & x_2 & x_2^2 & \cdots & x_2^n \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & x_n & x_n^2 & \cdots & x_n^n \end{pmatrix} \begin{pmatrix} a_0 \\ a_1 \\ a_2 \\ \vdots \\ a_n \end{pmatrix} = \begin{pmatrix} y_0 \\ y_1 \\ y_2 \\ \vdots \\ y_n \end{pmatrix}$$

The matrix above is called the Vandermonde matrix. If it were singular, then for some nonzero set of coefficients the associated polynomial of degree $\le n$ would have $n + 1$ distinct zeros. This is impossible, so the matrix equation can be solved for the unknown coefficients of the polynomial.

• The Lagrange interpolation polynomial.

$$p_n(x) = y_0 \ell_0(x) + y_1 \ell_1(x) + \dots + y_n \ell_n(x) \quad \text{where} \quad \ell_i(x) = \prod_{\substack{j = 0 \\ j \ne i}}^{n} \frac{x - x_j}{x_i - x_j}$$

• The Newton interpolation polynomial (Divided Differences)

$$p_n(x) = c_0 + c_1 (x - x_0) + c_2 (x - x_0)(x - x_1) + \dots + c_n (x - x_0)(x - x_1) \cdots (x - x_{n-1})$$

where these coefficients will be found using divided differences. It is fairly clear however that $c_0 = y_0$ and $c_1 = \dfrac{y_1 - y_0}{x_1 - x_0}$.
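To make the Newton form concrete, the following sketch computes the coefficients $c_0, \dots, c_n$ with the standard in-place divided-difference table and evaluates $p_n$ by nested multiplication. The function names `divided_differences` and `newton_eval` are hypothetical, and the sample data are chosen only for illustration.

```python
def divided_differences(xs, ys):
    """Return Newton coefficients c_0..c_n for distinct nodes xs and values ys."""
    c = list(ys)
    n = len(xs)
    for k in range(1, n):
        # Update from the bottom up so that c[i - 1] still holds the
        # order-(k-1) difference when c[i] is overwritten.
        for i in range(n - 1, k - 1, -1):
            c[i] = (c[i] - c[i - 1]) / (xs[i] - xs[i - k])
    return c


def newton_eval(xs, c, x):
    """Evaluate c_0 + c_1 (x - x_0) + ... + c_n (x - x_0)...(x - x_{n-1})."""
    result = c[-1]
    for k in range(len(c) - 2, -1, -1):
        result = result * (x - xs[k]) + c[k]
    return result


# Samples of p(x) = x^2 + x + 1 at x = 0, 1, 2
xs, ys = [0, 1, 2], [1, 3, 7]
c = divided_differences(xs, ys)
print(c)                      # c_0 = y_0 = 1, c_1 = (y_1 - y_0) / (x_1 - x_0) = 2
print(newton_eval(xs, c, 3))  # 13.0, since p(3) = 9 + 3 + 1
```

The first two coefficients agree with the closed forms $c_0 = y_0$ and $c_1 = (y_1 - y_0)/(x_1 - x_0)$ stated in the summary.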

Polynomial Interpolation: Standard Lagrange Interpolation, Barycentric Lagrange Interpolation and Chebyshev Interpolation

9 Polynomial Interpolation

Lab Objective: Learn and compare three methods of polynomial interpolation: standard Lagrange interpolation, Barycentric Lagrange interpolation and Chebyshev interpolation. Explore Runge's phenomenon and how the choice of interpolating points affects the results. Use polynomial interpolation to study air pollution by approximating graphs of particulates in air.

Polynomial Interpolation

Polynomial interpolation is the method of finding a polynomial that matches a function at specific points in its range. More precisely, if $f(x)$ is a function on the interval $[a, b]$ and $p(x)$ is a polynomial, then $p(x)$ interpolates the function $f(x)$ at the points $x_0, x_1, \dots, x_n$ if $p(x_j) = f(x_j)$ for all $j = 0, 1, \dots, n$. In this lab most of the discussion is focused on using interpolation as a means of approximating functions or data; however, polynomial interpolation is useful in a much wider array of applications.

Given a function $f(x)$ and a set of unique points $\{x_i\}_{i=0}^{n}$, it can be shown that there exists a unique interpolating polynomial $p(x)$. That is, there is one and only one polynomial of degree $n$ that interpolates $f(x)$ through those points. This uniqueness property is why, for the remainder of this lab, an interpolating polynomial is referred to as the interpolating polynomial. One approach to finding the unique interpolating polynomial of degree $n$ is Lagrange interpolation.

Lagrange interpolation

Given a set $\{x_i\}_{i=1}^{n}$ of $n$ points to interpolate, a family of $n$ basis functions with the following property is constructed:

$$L_j(x_i) = \begin{cases} 0 & \text{if } i \ne j \\ 1 & \text{if } i = j \end{cases}$$

The Lagrange form of this family of basis functions is

$$L_j(x) = \frac{\displaystyle\prod_{k=1,\, k \ne j}^{n} (x - x_k)}{\displaystyle\prod_{k=1,\, k \ne j}^{n} (x_j - x_k)} \tag{9.1}$$
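The basis functions in (9.1) translate directly into code. The sketch below is a plain, unoptimized version of standard Lagrange interpolation, not the lab's reference solution: the function names are hypothetical, and Python's 0-based indexing replaces the 1-based indices of the text.

```python
def lagrange_basis(xs, j, x):
    """Evaluate the j-th Lagrange basis function L_j at x (0-based j)."""
    num = 1.0
    den = 1.0
    for k, xk in enumerate(xs):
        if k != j:
            num *= x - xk        # product of (x - x_k), k != j
            den *= xs[j] - xk    # product of (x_j - x_k), k != j
    return num / den


def lagrange_interpolate(xs, ys, x):
    """Evaluate the interpolating polynomial sum_j y_j L_j(x)."""
    return sum(y * lagrange_basis(xs, j, x) for j, y in enumerate(ys))


xs = [-1.0, 0.0, 1.0]
ys = [x * x for x in xs]                   # samples of f(x) = x^2
print(lagrange_basis(xs, 1, 0.0))          # 1.0 at its own node
print(lagrange_basis(xs, 1, 1.0))          # 0.0 at another node
print(lagrange_interpolate(xs, ys, 0.5))   # 0.25, matching f(0.5)
```

Each evaluation costs $O(n^2)$ operations in this form, which is one motivation for the barycentric variant named in the lab objective.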

Change of Basis in Polynomial Interpolation

NUMERICAL LINEAR ALGEBRA WITH APPLICATIONS. Numer. Linear Algebra Appl. 2000; 00:1–6. Prepared using nlaauth.cls [Version: 2000/03/22 v1.0]

Change of basis in polynomial interpolation

W. Gander, Institute of Computational Science, ETH Zurich, CH-8092 Zurich, Switzerland

SUMMARY

Several representations for the interpolating polynomial exist: Lagrange, Newton, orthogonal polynomials etc. Each representation is characterized by some basis functions. In this paper we investigate the transformations between the basis functions which map a specific representation to another. We show that for this purpose the LU- and the QR decomposition of the Vandermonde matrix play a crucial role. Copyright © 2000 John Wiley & Sons, Ltd.

key words: Polynomial interpolation, basis change

1. REPRESENTATIONS OF THE INTERPOLATING POLYNOMIAL

Given function values

| $x$ | $x_0$ | $x_1$ | $\cdots$ | $x_n$ |
|---|---|---|---|---|
| $f(x)$ | $f_0$ | $f_1$ | $\cdots$ | $f_n$ |

with $x_i \ne x_j$ for $i \ne j$, there exists a unique polynomial $P_n$ of degree less than or equal to $n$ which interpolates these values, i.e.

$$P_n(x_i) = f_i, \quad i = 0, 1, \dots, n. \tag{1}$$

Several representations of $P_n$ are known; in this paper we present the basis transformations among four of them.

1.1. Monomial Basis

We consider first the monomials $m(x) = (1, x, x^2, \dots, x^n)^T$ and the representation

$$P_n(x) = a_0 + a_1 x + \dots + a_{n-1} x^{n-1} + a_n x^n .$$

† Please ensure that you use the most up to date class file, available from the NLA Home Page at http://www.interscience.wiley.com/jpages/1070-5325/

Contract/grant sponsor: Publishing Arts Research Council; contract/grant number: 98–1846389. Received ??

Projection and Its Importance in Scientific Computing

Projection and its Importance in Scientific Computing

Stan Tomov, EECS Department, The University of Tennessee, Knoxville. March 22, 2017. COCS 594 Lecture Notes. Slide 1 / 41

Contact information. office: Claxton 317; phone: (865) 974-6317; email: [email protected]

Additional reference materials:

[1] R. Barrett, M. Berry, T. F. Chan, J. Demmel, J. Donato, J. Dongarra, V. Eijkhout, R. Pozo, C. Romine, and H. Van der Vorst, Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods (2nd edition) http://netlib2.cs.utk.edu/linalg/html_templates/Templates.html

[2] Yousef Saad, Iterative methods for sparse linear systems (1st edition) http://www-users.cs.umn.edu/~saad/books.html

Topics as related to high-performance scientific computing. [Diagram: Projection in Scientific Computing; Sparse matrices, parallel implementations; PDEs, Numerical solution, Tools, etc.; Iterative Methods]

Topics on new architectures – multicore, GPUs (CUDA & OpenCL), MIC. [Diagram: Projection in Scientific Computing; Sparse matrices, parallel implementations; PDEs, Numerical solution, Tools, etc.; Iterative Methods]

Outline. Part I – Fundamentals. Part II – Projection in Linear Algebra. Part III – Projection in Functional Analysis (e.g. PDEs). HPC with Multicore and GPUs.

Part I: Fundamentals

Projection in Scientific Computing [an example – in solvers for PDE discretizations]. A model leading to self-consistent iteration with need for high-performance diagonalization

Polynomial, Lagrange, and Newton Interpolation

Polynomial, Lagrange, and Newton Interpolation

Mridul Aanjaneya. November 14, 2017

Interpolation

We are often interested in a certain function $f(x)$, but despite the fact that $f$ may be defined over an entire interval of values $[a, b]$ (which may be the entire real line) we only know its precise value at select points $x_1, x_2, \dots, x_N$.

[Figure: selected value samples of the actual function $f(x)$]

There may be several good reasons why we could only have a limited number of values for $f(x)$, instead of its entire graph:

• Perhaps we do not have an analytic formula for $f(x)$ because it is the result of a complex process that is only observed experimentally. For example, $f(x)$ could correspond to a physical quantity (temperature, density, concentration, velocity, etc.) which varies over time in a laboratory experiment. Instead of an explicit formula, we use a measurement device to capture sample values of $f$ at predetermined points in time.

• Or, perhaps we do have a formula for $f(x)$, but this formula is not trivially easy to evaluate. Consider for example:

$$f(x) = \sin(x) \quad \text{or} \quad f(x) = \ln(x) \quad \text{or} \quad f(x) = \int_0^x e^{-t^2}\, dt$$

Perhaps evaluating $f(x)$ with such a formula is a very expensive operation and we want to consider a less expensive way to obtain a “crude approximation”. In fact, in years when computers were not as ubiquitous as today, trigonometric tables were very popular. For example:

| Angle | $\sin(\theta)$ | $\cos(\theta)$ |
|---|---|---|
| ... | ... | ... |
| 44° | 0.695 | 0.719 |
| 45° | 0.707 | 0.707 |
| 46° | 0.719 | 0.695 |
| 47° | 0.731 | 0.682 |
| ... | ... | ... |

If we were asked to approximate the value of $\sin(44.6°)$, it would be natural to consider deriving an estimate from these tabulated values, rather than attempting to write an analytic expression for this quantity.
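One simple way to derive such an estimate is linear interpolation between the two neighboring table entries. The excerpt does not say which scheme the notes use at this point, so the choice of linear interpolation, like the helper name `linear_interpolate`, is an assumption made for this sketch.

```python
import math


def linear_interpolate(x0, y0, x1, y1, x):
    """Value at x of the straight line through (x0, y0) and (x1, y1)."""
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Tabulated values: sin(44 deg) = 0.695 and sin(45 deg) = 0.707
estimate = linear_interpolate(44.0, 0.695, 45.0, 0.707, 44.6)
exact = math.sin(math.radians(44.6))
print(estimate)               # close to 0.7022
print(abs(estimate - exact))  # error below 1e-3, comparable to the table's rounding
```

With three-digit table entries, the rounding of the tabulated values limits the accuracy at least as much as the interpolation scheme does.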

Interpolation

Chapter 11 Interpolation

So far we have derived methods for analyzing functions $f$, e.g. finding their minima and roots. Evaluating $f(\vec{x})$ at a particular $\vec{x} \in \mathbb{R}^n$ might be expensive, but a fundamental assumption of the methods we developed in previous chapters is that we can obtain $f(\vec{x})$ when we want it, regardless of $\vec{x}$.

There are many contexts when this assumption is not realistic. For instance, if we take a photograph with a digital camera, we receive an $n \times m$ grid of pixel color values sampling the continuum of light coming into a camera lens. We might think of a photograph as a continuous function from image position $(x, y)$ to color $(r, g, b)$, but in reality we only know the image value at $nm$ separated locations on the image plane. Similarly, in machine learning and statistics, often we only are given samples of a function at points where we collected data, and we must interpolate it to have values elsewhere; in a medical setting we may monitor a patient’s response to different dosages of a drug but only can predict what will happen at a dosage we have not tried explicitly.

In these cases, before we can minimize a function, find its roots, or even compute values $f(\vec{x})$ at arbitrary locations $\vec{x}$, we need a model for interpolating $f(\vec{x})$ to all of $\mathbb{R}^n$ (or some subset thereof) given a collection of samples $f(\vec{x}_i)$. Of course, techniques solving this interpolation problem are inherently approximate, since we do not know the true values of $f$, so instead we seek for the interpolated function to be smooth and serve as a “reasonable” prediction of function values.