HMMER User's Guide

Total Page:16


Recommended publications


To Find Information About Arabidopsis Genes Leonore Reiser1, Shabari

UNIT 1.11 Using The Arabidopsis Information Resource (TAIR) to Find Information About Arabidopsis Genes. Leonore Reiser1, Shabari Subramaniam1, Donghui Li1, and Eva Huala1. 1Phoenix Bioinformatics, Redwood City, CA, USA. ABSTRACT: The Arabidopsis Information Resource (TAIR; http://arabidopsis.org) is a comprehensive Web resource of Arabidopsis biology for plant scientists. TAIR curates and integrates information about genes, proteins, gene function, orthologs, gene expression, mutant phenotypes, biological materials such as clones and seed stocks, genetic markers, genetic and physical maps, genome organization, images of mutant plants, protein sub-cellular localizations, publications, and the research community. The various data types are extensively interconnected and can be accessed through a variety of Web-based search and display tools. This unit primarily focuses on some basic methods for searching, browsing, visualizing, and analyzing information about Arabidopsis genes and the genome. Additionally, we describe how members of the community can share data using TAIR’s Online Annotation Submission Tool (TOAST) to make their published research more accessible and visible. Keywords: Arabidopsis ● databases ● bioinformatics ● data mining ● genomics. INTRODUCTION: The Arabidopsis Information Resource (TAIR; http://arabidopsis.org) is a comprehensive Web resource for the biology of Arabidopsis thaliana (Huala et al., 2001; Garcia-Hernandez et al., 2002; Rhee et al., 2003; Weems et al., 2004; Swarbreck et al., 2008; Lamesch et al., 2010; Berardini et al., 2016). The TAIR database contains information about genes, proteins, gene expression, mutant phenotypes, germplasms, clones, genetic markers, genetic and physical maps, genome organization, publications, and the research community. In addition, seed and DNA stocks from the Arabidopsis Biological Resource Center (ABRC; Scholl et al., 2003) are integrated with genomic data and can be ordered through TAIR.

Homology & Alignment

Protein Bioinformatics, Johns Hopkins Bloomberg School of Public Health, 260.655. Thursday, April 1, 2010. Jonathan Pevsner.

Outline for today
1. Homology and pairwise alignment
2. BLAST
3. Multiple sequence alignment
4. Phylogeny and evolution

Learning objectives: homology & alignment
1. You should know the definitions of homologs, orthologs, and paralogs
2. You should know how to determine whether two genes (or proteins) are homologous
3. You should know what a scoring matrix is
4. You should know how alignments are performed
5. You should know how to align two sequences using the BLAST tool at NCBI

Pairwise sequence alignment is the most fundamental operation of bioinformatics
• It is used to decide if two proteins (or genes) are related structurally or functionally
• It is used to identify domains or motifs that are shared between proteins
• It is the basis of BLAST searching (next topic)
• It is used in the analysis of genomes
Example pair: myoglobin (NP_005359, 2MM1) and beta globin (NP_000509, 2HHB) (Page 49)

Pairwise alignment: protein sequences can be more informative than DNA
• protein is more informative (20 vs 4 characters); many amino acids share related biophysical properties
• codons are degenerate: changes in the third position often do not alter the amino acid that is specified
• protein sequences offer a longer “look-back” time
• DNA sequences can be translated into protein, and then used in pairwise alignments

Find BLAST from the home page of NCBI and select protein BLAST… (Page 52)
Choose align two or more sequences… (Page 52)
Enter the two sequences (as accession numbers or in the fasta format) and click BLAST.

Assembly Exercise

Assembly Exercise: Turning reads into genomes

Where we are
• 13:30-14:00 – Primer Design to Amplify Microbial Genomes for Sequencing
• 14:00-14:15 – Primer Design Exercise
• 14:15-14:45 – Molecular Barcoding to Allow Multiplexed NGS
• 14:45-15:15 – Processing NGS Data – de novo and mapping assembly
• 15:15-15:30 – Break
• 15:30-15:45 – Assembly Exercise
• 15:45-16:15 – Annotation
• 16:15-16:30 – Annotation Exercise
• 16:30-17:00 – Submitting Data to GenBank

Log onto ILRI cluster
• Log in to HPC using ILRI instructions
• NOTE: All the commands here are also in the file assembly_hands_on_steps.txt
• If you are like me, it may be easier to cut and paste Linux commands from this file instead of typing them in from the slides

Start an interactive session on larger servers
• The interactive command will start a session on a server better equipped to do genome assembly
  $ interactive
• Switch to csh (I use some csh features)
  $ csh
• Set up Newbler software that will be used
  $ module load 454

A norovirus sample sequenced on both 454 and Illumina
• The vendors use different file formats
  unknown_norovirus_454.GACT.sff
  unknown_norovirus_illumina.fastq
• I have converted these files to additional formats for use with the assembly tools
  unknown_norovirus_454_convert.fasta
  unknown_norovirus_454_convert.fastq
  unknown_norovirus_illumina_convert.fasta

Set up and run the Newbler de novo assembler
• Create a new de novo assembly project
  $ newAssembly de_novo_assembly
• Add read data to the project
  $ addRun de_novo_assembly unknown_norovirus_454.GACT.sff

HMMER User's Guide

HMMER User’s Guide: Biological sequence analysis using profile hidden Markov models. http://hmmer.org/ Version 3.0rc1; February 2010. Sean R. Eddy for the HMMER Development Team, Janelia Farm Research Campus, 19700 Helix Drive, Ashburn VA 20147 USA. http://eddylab.org/ Copyright (C) 2010 Howard Hughes Medical Institute. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are retained on all copies. HMMER is licensed and freely distributed under the GNU General Public License version 3 (GPLv3). For a copy of the License, see http://www.gnu.org/licenses/. HMMER is a trademark of the Howard Hughes Medical Institute.

Contents
1 Introduction
  How to avoid reading this manual
  How to avoid using this software (links to similar software)
  What profile HMMs are
  Applications of profile HMMs
  Design goals of HMMER3
  What’s still missing in HMMER3
  How to learn more about profile HMMs
2 Installation
  Quick installation instructions
  System requirements
  Multithreaded parallelization for multicores is the default
  MPI parallelization for clusters is optional
  Using build directories
  Makefile targets
3 Tutorial
  The programs in HMMER
  Files used in the tutorial
  Searching a sequence database with a single profile HMM
    Step 1: build a profile HMM with hmmbuild
    Step 2: search the sequence database with hmmsearch
  Searching a profile HMM database with a query sequence
    Step 1: create an HMM database flatfile
    Step 2: compress and index the flatfile with hmmpress

The Uniprot Knowledgebase BLAST

Introduction to bioinformatics The UniProt Knowledgebase BLAST UniProtKB Basic Local Alignment Search Tool A CRITICAL GUIDE Version: 1 August 2018 A Critical Guide to BLAST

BLAST Overview: This Critical Guide provides an overview of the BLAST similarity search tool, briefly examining the underlying algorithm and its rise to popularity. Several Web-based and stand-alone implementations are reviewed, and key features of typical search results are discussed.

Teaching Goals & Learning Outcomes: This Guide introduces concepts and theories embodied in the sequence database search tool, BLAST, and examines features of search outputs important for understanding and interpreting BLAST results. On reading this Guide, you will be able to:
• search a variety of Web-based sequence databases with different query sequences, and alter search parameters;
• explain a range of typical search parameters, and the likely impacts on search outputs of changing them;
• analyse the information conveyed in search outputs and infer the significance of reported matches;
• examine and investigate the annotations of reported matches, and their provenance; and
• compare the outputs of different BLAST implementations and evaluate the implications of any differences.

1 Introduction: From the advent of the first molecular sequence repositories in the 1980s, tools for searching databases became essential. Database searching is essentially a ‘pairwise alignment’ problem, in which the … finding short words – k-tuples – common to the sequences being compared, and using heuristics to join those closest to each other, including the short mis-matched regions between them. BLAST4 was the second major example of this type of algorithm, and rapidly exceeded the popularity of FastA, owing to its efficiency and built-in statistics.

Multiple Alignments, Blast and Clustalw

Multiple alignments, blast and clustalW

1. Blast idea:
   a. Filter out low complexity regions (tandem repeats and the like) [optional]
   b. Compile a list of high-scoring strings (words, in BLAST jargon) of fixed length in the query (threshold T)
   c. Extend alignments (high-scoring pairs)
   d. Report high-scoring pairs: score at least S (or an E value lower than some threshold)
2. Multiple Sequence Alignments:
   a. Attempts to extend dynamic programming techniques to multiple sequences run into problems after only a few proteins (8 average proteins were a problem in the early 2000s)
   b. Heuristic approach
   c. Idea (Progressive Approach):
      i. Homologous sequences are evolutionarily related
      ii. Build the multiple alignment by a series of pairwise alignments based on some phylogenetic tree (the initial tree or the guide tree)
      iii. Add in more distantly related sequences
   d. Progressive sequence alignment example:
      1. NYLS & NKYLS: align N-YLS with NKYLS, giving N(K|-)YLS
      2. NFS & NFLS: align NF-S with NFLS, giving NF(L|-)S
      3. N(K|-)YLS & NF(L|-)S: align N-YLS, NKYLS, N-F-S and N-FLS, giving N(K|-)(Y|F)(L|-)S
   e. Assessment:
      i. Works great for fairly similar sequences
      ii. Not so well for highly divergent ones
   f. Two Problems:
      i. Local minimum problem: the algorithm greedily adds sequences based on the tree and might miss the global solution
      ii. Alignment parameters: mistakes (misaligned regions) early in the procedure can’t be corrected later
   g. ClustalW does multiple alignments and attempts to solve the alignment parameter problem
      i. Gap costs are dynamically varied based on position and amino acid
      ii. Weight matrices are changed as the level of divergence between sequences increases (say going from PAM30 -> PAM60)
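Step 1b of the BLAST idea above (compiling fixed-length words from the query and locating them in a subject sequence) can be sketched as follows. This is a minimal illustration only: it finds exact word matches, whereas BLAST itself also admits similar words scoring above the threshold T and then extends each hit. The function names `word_index` and `seed_hits` are hypothetical and not part of any BLAST API.

```python
from collections import defaultdict


def word_index(query, w):
    """Map every length-w word in the query to the positions where it starts."""
    index = defaultdict(list)
    for i in range(len(query) - w + 1):
        index[query[i:i + w]].append(i)
    return dict(index)


def seed_hits(query, subject, w=3):
    """Return (query_pos, subject_pos) pairs where a query word occurs exactly.

    Simplification: only identical words count as hits; no scoring matrix,
    no neighbourhood words, and no extension step.
    """
    index = word_index(query, w)
    hits = []
    for j in range(len(subject) - w + 1):
        for i in index.get(subject[j:j + w], []):
            hits.append((i, j))
    return hits


if __name__ == "__main__":
    # The two sequences from the progressive alignment example share the word YLS.
    print(seed_hits("NKYLS", "NYLS", w=3))
```

Each hit is a candidate starting point; an extension step would then grow it in both directions into a high-scoring pair.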

Validation and Annotation of Virus Sequence Submissions to Genbank Alejandro A

Schäffer et al. BMC Bioinformatics (2020) 21:211 https://doi.org/10.1186/s12859-020-3537-3 SOFTWARE Open Access. VADR: validation and annotation of virus sequence submissions to GenBank. Alejandro A. Schäffer1,2, Eneida L. Hatcher2, Linda Yankie2, Lara Shonkwiler2,3, J. Rodney Brister2, Ilene Karsch-Mizrachi2 and Eric P. Nawrocki2*. *Correspondence: [email protected] 2National Center for Biotechnology Information, National Library of Medicine, National Institutes of Health, Bethesda, MD, 20894 USA. Full list of author information is available at the end of the article.

Abstract
Background: GenBank contains over 3 million viral sequences. The National Center for Biotechnology Information (NCBI) previously made available a tool for validating and annotating influenza virus sequences that is used to check submissions to GenBank. Before this project, there was no analogous tool in use for non-influenza viral sequence submissions.
Results: We developed a system called VADR (Viral Annotation DefineR) that validates and annotates viral sequences in GenBank submissions. The annotation system is based on the analysis of the input nucleotide sequence using models built from curated RefSeqs. Hidden Markov models are used to classify sequences by determining the RefSeq they are most similar to, and feature annotation from the RefSeq is mapped based on a nucleotide alignment of the full sequence to a covariance model. Predicted proteins encoded by the sequence are validated with nucleotide-to-protein alignments using BLAST. The system identifies 43 types of “alerts” that (unlike the previous BLAST-based system) provide deterministic and rigorous feedback to researchers who submit sequences with unexpected characteristics. VADR has been integrated into GenBank’s submission processing pipeline, allowing viral submissions that pass all tests to be accepted and annotated automatically, without the need for any human (GenBank indexer) intervention.

BLAST Practice

Using BLAST

BLAST (Basic Local Alignment Search Tool) is an online search tool provided by NCBI (National Center for Biotechnology Information). It allows you to “find regions of similarity between biological sequences” (nucleotide or protein). The NCBI maintains a huge database of biological sequences, against which it compares the query sequences to find the most similar ones. Using BLAST, you can input a gene sequence of interest and search entire genomic libraries for identical or similar sequences in a matter of seconds. The amount of information on the BLAST website is a bit overwhelming, even for the scientists who use it on a frequent basis! You are not expected to know every detail of the BLAST program.

BLAST results have the following fields:
E value: The E value (expected value) is a number that describes how many times you would expect a match by chance in a database of that size. The lower the E value is, the more significant the match.
Percent Identity: The percent identity is a number that describes how similar the query sequence is to the target sequence (how many characters in each sequence are identical). The higher the percent identity is, the more significant the match.
Query Cover: The query cover is a number that describes how much of the query sequence is covered by the target sequence. If the target sequence in the database spans the whole query sequence, then the query cover is 100%. This tells us how long the sequences are, relative to each other.

FASTA format: FASTA format is used to represent either nucleotide or peptide sequences.
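The percent identity and query cover fields described above can be illustrated with a small calculation. This is a sketch under stated assumptions: identity is taken as identical columns divided by alignment length, gaps are written as "-", and query coordinates are 1-based and inclusive. The function names are hypothetical, and the NCBI website may compute these values with additional rules.

```python
def percent_identity(aligned_query, aligned_target):
    """Percentage of alignment columns where both sequences carry the same residue."""
    if len(aligned_query) != len(aligned_target):
        raise ValueError("aligned sequences must have equal length")
    if not aligned_query:
        return 0.0
    matches = sum(
        1 for q, t in zip(aligned_query, aligned_target) if q == t and q != "-"
    )
    return 100.0 * matches / len(aligned_query)


def query_cover(query_length, query_start, query_end):
    """Percentage of the query spanned by the alignment (1-based, inclusive)."""
    return 100.0 * (query_end - query_start + 1) / query_length


if __name__ == "__main__":
    # Four of five columns are identical; the gap column does not count.
    print(percent_identity("N-YLS", "NKYLS"))
    # An alignment covering residues 1..150 of a 200-residue query.
    print(query_cover(200, 1, 150))
```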

RNA Ontology Consortium January 8-9, 2011

UC San Diego UC San Diego Previously Published Works Title Meeting report of the RNA Ontology Consortium January 8-9, 2011. Permalink https://escholarship.org/uc/item/0kw6h252 Journal Standards in genomic sciences, 4(2) ISSN 1944-3277 Authors Birmingham, Amanda Clemente, Jose C Desai, Narayan et al. Publication Date 2011-04-01 DOI 10.4056/sigs.1724282 Peer reviewed eScholarship.org Powered by the California Digital Library University of California Standards in Genomic Sciences (2011) 4:252-256 DOI:10.4056/sigs.1724282 Meeting report of the RNA Ontology Consortium January 8-9, 2011 Amanda Birmingham1, Jose C. Clemente2, Narayan Desai3, Jack Gilbert3,4, Antonio Gonzalez2, Nikos Kyrpides5, Folker Meyer3,6, Eric Nawrocki7, Peter Sterk8, Jesse Stombaugh2, Zasha Weinberg9,10, Doug Wendel2, Neocles B. Leontis11, Craig Zirbel12, Rob Knight2,13, Alain Laederach14 1 Thermo Fisher Scientific, Lafayette, CO, USA 2 Department of Chemistry and Biochemistry, University of Colorado, Boulder, CO, USA 3 Argonne National Laboratory, Argonne, IL, USA 4 Department of Ecology and Evolution, University of Chicago, Chicago, IL, USA 5 DOE Joint Genome Institute, Walnut Creek, CA, USA 6 Computation Institute, University of Chicago, Chicago, IL, USA 7 Janelia Farm Research Campus, Howard Hughes Medical Institute, Ashburn, VA, USA 8 Wellcome Trust Sanger Institute, Wellcome Trust Genome Campus, Hinxton, Cambridge, UK 9 Department of Molecular, Cellular and Developmental, Yale University, New Haven, CT, USA 10 Howard Hughes Medical Institute, Yale University, New -

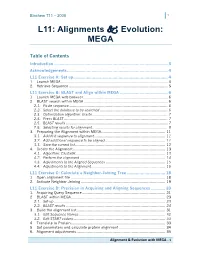

L11: Alignments 5 Evolution: MEGA

Biochem 711 – 2008. L11: Alignments Evolution: MEGA

Table of Contents
Introduction
Acknowledgements
L11 Exercise A: Set up
  1. Launch MEGA
  2. Retrieve Sequence
L11 Exercise B: BLAST and Align within MEGA
  1. Launch MEGA web browser
  2. BLAST search within MEGA
    2.1. Paste sequence
    2.2. Select the database to be searched
    2.3. Optimization algorithm: blastn
    2.4. Press BLAST
    2.5. BLAST results
    2.6. Selecting results for alignment
  3. Preparing

Teachers Notes(.Pdf, 116.2

FUNCTION FINDERS BLAST Teacher’s notes

BACKGROUND TO ACTIVITY
Function Finders BLAST provides a hands-on exercise that introduces the concept of genes coding for proteins. The activity involves translating DNA sequences into amino acid chains and using this information to find a matching protein with the correct corresponding sequence. Estimated duration: 30 – 45 minutes

MATERIALS TO RUN THE ACTIVITY
- Student worksheets
- Codon Wheel sheets
- Function Finders presentation files
- Computer (one between two students)
- Internet connection for each computer
Optional animations (recommended for AS Level): DNA to protein animation: www.yourgenome.org/video/from-dna-to-protein
Optional activity: View the proteins in 3D. Rasmol (available from www.rasmol.org) is molecular modelling software that can be used to view proteins in 3D. It can be used by the teacher or students to view the proteins they have identified and initiate discussions about the tertiary structures of proteins. It is not an essential part of the activity.

ACTIVITY PREPARATION
The following activity components need to be prepared before the activity starts. Working in pairs, students require:
- One Function Finders worksheet
- One Codon Wheel sheet
- One computer
We recommend one computer between two students, but the activity can be run with groups of three students.

OPTIONAL ACTIVITY PREPARATION
1. Download Rasmol program (optional): If you wish to use the Rasmol program to view the protein structure, download the program from www.rasmol.org and select “Latest windows installer” at the top of the page.
2. Save protein files: If you plan to use the Rasmol program, ensure you have the protein files saved in an accessible folder on the laptops.
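The translation step that students perform by hand with the Codon Wheel can be sketched in code. This is an illustrative sketch, not part of the activity materials: it assumes the standard genetic code, reads the DNA from its first base in a single frame, and stops at the first stop codon. The names `CODON_TABLE` and `translate` are hypothetical.

```python
from itertools import product

# Standard genetic code, listed with the bases in T, C, A, G order.
BASES = "TCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): amino_acid
    for codon, amino_acid in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(dna):
    """Translate a DNA coding sequence into one-letter amino acid codes.

    Reading starts at the first base; translation stops at the first stop
    codon. Codons containing unknown letters are reported as 'X'.
    """
    dna = dna.upper()
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE.get(dna[i:i + 3], "X")
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


if __name__ == "__main__":
    print(translate("ATGAAATGGTAG"))
```

The resulting amino acid string is what would be pasted into a protein BLAST search to look for a matching protein.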

Tools for Simulating Evolution of Aligned Genomic Regions with Integrated Parameter Estimation Avinash Varadarajan*, Robert K Bradley and Ian H Holmes

Software. Open Access. 2008, Varadarajan et al., Volume 9, Issue 10, Article R147. Tools for simulating evolution of aligned genomic regions with integrated parameter estimation. Avinash Varadarajan*, Robert K Bradley and Ian H Holmes. Addresses: *Computer Science Division, University of California, Berkeley, CA 94720-1776, USA. Biophysics Graduate Group, University of California, Berkeley, CA 94720-3200, USA. Department of Bioengineering, University of California, Berkeley, CA 94720-1762, USA. Correspondence: Ian H Holmes. Email: [email protected] Received: 20 June 2008. Revised: 21 August 2008. Accepted: 8 October 2008. Published: 8 October 2008. Genome Biology 2008, 9:R147 (doi:10.1186/gb-2008-9-10-r147). The electronic version of this article is the complete one and can be found online at http://genomebiology.com/2008/9/10/R147 © 2008 Varadarajan et al.; licensee BioMed Central Ltd. This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Simulation of genome evolution: Three tools for simulating genome evolution are presented: for neutrally evolving DNA, for phylogenetic context-free grammars and for richly structured syntenic blocks of genome sequence.

Abstract: Controlled simulations of genome evolution are useful for benchmarking tools. However, many simulators lack extensibility and cannot measure parameters directly from data. These issues are addressed by three new open-source programs: GSIMULATOR (for neutrally evolving DNA), SIMGRAM (for generic structured features) and SIMGENOME (for syntenic genome blocks). Each offers algorithms for parameter measurement and reconstruction of ancestral sequence.