Operators on Hilbert Space

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Adjoint of Unbounded Operators on Banach Spaces

November 5, 2013. ADJOINT OF UNBOUNDED OPERATORS ON BANACH SPACES. M.T. NAIR. Banach spaces considered below are over the field $K$, which is either $\mathbb{R}$ or $\mathbb{C}$. Let $X$ be a Banach space. Following Kato [2], $X^*$ denotes the linear space of all continuous conjugate linear functionals on $X$. We shall denote $\langle f, x\rangle := f(x)$, $x \in X$, $f \in X^*$. On $X^*$, $f \mapsto \|f\| := \sup_{\|x\|=1} |\langle f, x\rangle|$ defines a norm. Definition 1. The space $X^*$ is called the adjoint space of $X$. Note that if $K = \mathbb{R}$, then $X^*$ coincides with the dual space $X'$. It can be shown, analogously to the case of $X'$, that $X^*$ is a Banach space. Let $X$ and $Y$ be Banach spaces, and let $A : D(A) \subseteq X \to Y$ be a densely defined linear operator. Now, we set out to define the adjoint of $A$ as in Kato [2]. Theorem 2. There exists a linear operator $A^* : D(A^*) \subseteq Y^* \to X^*$ such that $\langle f, Ax\rangle = \langle A^*f, x\rangle$ for all $x \in D(A)$, $f \in D(A^*)$, and for any other linear operator $B : D(B) \subseteq Y^* \to X^*$ satisfying $\langle f, Ax\rangle = \langle Bf, x\rangle$ for all $x \in D(A)$, $f \in D(B)$, we have $D(B) \subseteq D(A^*)$ and $B$ is a restriction of $A^*$. Proof. Suppose $D(A)$ is dense in $X$. Let $S := \{f \in Y^* : x \mapsto \langle f, Ax\rangle \text{ is continuous on } D(A)\}$. For $f \in S$, define $g_f : D(A) \to K$ by $g_f(x) = \langle f, Ax\rangle$ for all $x \in D(A)$. Since $D(A)$ is dense in $X$, $g_f$ has a unique continuous conjugate linear extension to all of $X$, preserving the norm.

Surjective Isometries on Grassmann Spaces

SURJECTIVE ISOMETRIES ON GRASSMANN SPACES. FERNANDA BOTELHO, JAMES JAMISON, AND LAJOS MOLNÁR. Abstract. Let $H$ be a complex Hilbert space, $n$ a given positive integer and let $P_n(H)$ be the set of all projections on $H$ with rank $n$. Under the condition $\dim H \geq 4n$, we describe the surjective isometries of $P_n(H)$ with respect to the gap metric (the metric induced by the operator norm). 1. Introduction. The study of isometries of function spaces or operator algebras is an important research area in functional analysis with history dating back to the 1930's. The most classical results in the area are the Banach-Stone theorem describing the surjective linear isometries between Banach spaces of complex-valued continuous functions on compact Hausdorff spaces and its noncommutative extension for general $C^*$-algebras due to Kadison. This has the surprising and remarkable consequence that the surjective linear isometries between $C^*$-algebras are closely related to algebra isomorphisms. More precisely, every such surjective linear isometry is a Jordan *-isomorphism followed by multiplication by a fixed unitary element. We point out that in the aforementioned fundamental results the underlying structures are algebras and the isometries are assumed to be linear. However, descriptions of isometries of non-linear structures also play important roles in several areas. We only mention one example which is closely related to the subject matter of this paper. This is the famous Wigner's theorem that describes the structure of quantum mechanical symmetry transformations and plays a fundamental role in the probabilistic aspects of quantum theory.

Linear Differential Equations of nth Order

Ordinary Differential Equations, Second Course, Babylon University, 2016-2017. Linear Differential Equations of nth Order. An nth-order linear differential equation has the form … (1), where the coefficients … (j = 0, 1, 2, …, n−1) and … depend solely on the variable … (not on … or any derivative of …). If … then Eq. (1) is homogeneous; if not, then it is nonhomogeneous … (2). A linear differential equation has constant coefficients if all the coefficients are constants; if one or more is not constant, then it has variable coefficients. Examples of linear differential equations: first order nonhomogeneous; second order homogeneous; third order nonhomogeneous; fifth order homogeneous; second order nonhomogeneous. Theorem 1: Consider the initial value problem given by the linear differential equation (1) and the n initial conditions … (3). Define the differential operator L(y) by … (4), where … (j = 0, 1, 2, …, n−1) is continuous on some interval of interest; then L(y) = … and a linear homogeneous differential equation is written as L(y) = 0. Definition (Linearly Independent Solutions): A set of functions … is linearly dependent on … if there exist constants …, not all zero, such that … (5) on …. A set of functions is linearly dependent if there exists a set of constants, not all zero, that satisfies Eq. (5). If the only solution to Eq. (5) is …, then the set of functions is linearly independent on …. The functions … are linearly independent. Theorem 2: If the functions y1, y2, …, yn are solutions for differential

The Wronskian

Jim Lambers, MAT 285, Spring Semester 2012-13, Lecture 16 Notes. These notes correspond to Section 3.2 in the text. The Wronskian. Now that we know how to solve a linear second-order homogeneous ODE $y'' + p(t)y' + q(t)y = 0$ in certain cases, we establish some theory about general equations of this form. First, we introduce some notation. We define a second-order linear differential operator $L$ by $L[y] = y'' + p(t)y' + q(t)y$. Then, an initial value problem with a second-order homogeneous linear ODE can be stated as $L[y] = 0$, $y(t_0) = y_0$, $y'(t_0) = z_0$. We state a result concerning existence and uniqueness of solutions to such ODE, analogous to the Existence-Uniqueness Theorem for first-order ODE. Theorem (Existence-Uniqueness). The initial value problem $L[y] = y'' + p(t)y' + q(t)y = g(t)$, $y(t_0) = y_0$, $y'(t_0) = z_0$ has a unique solution on an open interval $I$ containing the point $t_0$ if $p$, $q$ and $g$ are continuous on $I$. The solution is twice differentiable on $I$. Now, suppose that we have obtained two solutions $y_1$ and $y_2$ of the equation $L[y] = 0$. Then $y_1'' + p(t)y_1' + q(t)y_1 = 0$ and $y_2'' + p(t)y_2' + q(t)y_2 = 0$. Let $y = c_1y_1 + c_2y_2$, where $c_1$ and $c_2$ are constants. Then
$$\begin{aligned} L[y] &= L[c_1y_1 + c_2y_2] \\ &= (c_1y_1 + c_2y_2)'' + p(t)(c_1y_1 + c_2y_2)' + q(t)(c_1y_1 + c_2y_2) \\ &= c_1y_1'' + c_2y_2'' + c_1p(t)y_1' + c_2p(t)y_2' + c_1q(t)y_1 + c_2q(t)y_2 \\ &= c_1\bigl(y_1'' + p(t)y_1' + q(t)y_1\bigr) + c_2\bigl(y_2'' + p(t)y_2' + q(t)y_2\bigr) = 0. \end{aligned}$$
We have just established the following theorem.
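The superposition argument in the snippet above can be checked numerically. The following is a minimal sketch, not taken from the lecture notes: the equation y'' - 3y' + 2y = 0, its solutions e^t and e^{2t}, the constants c1 and c2, and the helper name `apply_L` are all assumptions chosen for illustration.

```python
import math


def apply_L(y, dy, d2y, p, q):
    """Return the function t -> y''(t) + p(t) y'(t) + q(t) y(t)."""
    return lambda t: d2y(t) + p(t) * dy(t) + q(t) * y(t)


# Assumed example equation: y'' - 3 y' + 2 y = 0, i.e. p(t) = -3, q(t) = 2.
def p(t):
    return -3.0


def q(t):
    return 2.0


# Two known solutions of the assumed equation: y1 = e^t and y2 = e^(2t).
def y1(t):
    return math.exp(t)


def y2(t):
    return math.exp(2 * t)


# Arbitrary constants for the linear combination y = c1*y1 + c2*y2.
c1, c2 = 2.5, -1.75


def y(t):
    return c1 * y1(t) + c2 * y2(t)


def dy(t):
    # y1' = e^t and y2' = 2 e^(2t)
    return c1 * math.exp(t) + c2 * 2 * math.exp(2 * t)


def d2y(t):
    # y1'' = e^t and y2'' = 4 e^(2t)
    return c1 * math.exp(t) + c2 * 4 * math.exp(2 * t)


residual = apply_L(y, dy, d2y, p, q)

# L[y] should vanish (up to rounding) at every sample point in [-2, 2].
max_residual = max(abs(residual(0.1 * k)) for k in range(-20, 21))
print(max_residual)
```

The derivatives are supplied in closed form so that the residual reflects only floating-point rounding, not finite-difference error.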

Functional Analysis Lecture Notes Chapter 2. Operators on Hilbert Spaces

FUNCTIONAL ANALYSIS LECTURE NOTES, CHAPTER 2. OPERATORS ON HILBERT SPACES. CHRISTOPHER HEIL. 1. Elementary Properties and Examples. First recall the basic definitions regarding operators. Definition 1.1 (Continuous and Bounded Operators). Let $X$, $Y$ be normed linear spaces, and let $L\colon X \to Y$ be a linear operator. (a) $L$ is continuous at a point $f \in X$ if $f_n \to f$ in $X$ implies $Lf_n \to Lf$ in $Y$. (b) $L$ is continuous if it is continuous at every point, i.e., if $f_n \to f$ in $X$ implies $Lf_n \to Lf$ in $Y$ for every $f$. (c) $L$ is bounded if there exists a finite $K \geq 0$ such that $\|Lf\| \leq K\|f\|$ for all $f \in X$. Note that $\|Lf\|$ is the norm of $Lf$ in $Y$, while $\|f\|$ is the norm of $f$ in $X$. (d) The operator norm of $L$ is $\|L\| = \sup_{\|f\|=1} \|Lf\|$. (e) We let $\mathcal{B}(X, Y)$ denote the set of all bounded linear operators mapping $X$ into $Y$, i.e., $\mathcal{B}(X, Y) = \{L\colon X \to Y : L \text{ is bounded and linear}\}$. If $X = Y$ then we write $\mathcal{B}(X) = \mathcal{B}(X, X)$. (f) If $Y = \mathbb{F}$ then we say that $L$ is a functional. The set of all bounded linear functionals on $X$ is the dual space of $X$, and is denoted $X' = \mathcal{B}(X, \mathbb{F}) = \{L\colon X \to \mathbb{F} : L \text{ is bounded and linear}\}$. We saw in Chapter 1 that, for a linear operator, boundedness and continuity are equivalent.
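In finite dimensions the operator norm of item (d) can be estimated directly from its definition as a supremum over unit vectors. The sketch below is an illustration under stated assumptions, not part of Heil's notes: the 2x2 matrix `A`, the sampling of the unit circle, and the helper names are hypothetical. For this particular matrix the exact value is sqrt(45), the square root of the largest eigenvalue of the transpose of A times A.

```python
import math


def apply(matrix, vector):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def norm(vector):
    """Euclidean norm."""
    return math.sqrt(sum(x * x for x in vector))


def operator_norm_2x2(matrix, samples=20000):
    """Estimate sup over unit vectors f of ||L f|| by sampling the unit circle."""
    best = 0.0
    for k in range(samples):
        theta = 2 * math.pi * k / samples
        unit = [math.cos(theta), math.sin(theta)]
        best = max(best, norm(apply(matrix, unit)))
    return best


# Assumed example operator on R^2 with the Euclidean norm.
A = [[3.0, 0.0], [4.0, 5.0]]

estimate = operator_norm_2x2(A)
exact = math.sqrt(45.0)  # largest eigenvalue of A^T A is 45 for this matrix
print(estimate, exact)

# Boundedness as in item (c): ||A f|| <= K ||f|| with K = ||A||.
f = [-1.2, 0.7]
print(norm(apply(A, f)) <= exact * norm(f))
```

Sampling only approaches the supremum from below, so the estimate never exceeds the exact norm; in higher dimensions a singular value computation would be the usual choice instead.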

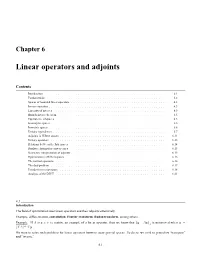

Linear Operators and Adjoints

Chapter 6: Linear operators and adjoints. Contents: Introduction; Fundamentals; Spaces of bounded linear operators; Inverse operators; Linearity of inverses; Banach inverse theorem; Equivalence of spaces; Isomorphic spaces; Isometric spaces; Unitary equivalence; Adjoints in Hilbert spaces; Unitary operators; Relations between the four spaces; Duality relations for convex cones; Geometric interpretation of adjoints; Optimization in Hilbert spaces; The normal equations; The dual problem; Pseudo-inverse operators; Analysis of the DTFT. 6.1 Introduction. The field of optimization uses linear operators and their adjoints extensively. Example: differentiation, convolution, Fourier transform,

A Note on Riesz Spaces with Property-$b$

Ş. Alpay; B. Altin; C. Tonyali. A note on Riesz spaces with property-b. Czechoslovak Mathematical Journal, Vol. 56 (131) (2006), No. 2, 765–772. Persistent URL: http://dml.cz/dmlcz/128103. Ş. Alpay, B. Altin and C. Tonyali, Ankara (Received February 6, 2004). Abstract. We study an order boundedness property in Riesz spaces and investigate Riesz spaces and Banach lattices enjoying this property. Keywords: Riesz spaces, Banach lattices, b-property. MSC 2000: 46B42, 46B28. 1. Introduction and preliminaries. All Riesz spaces considered in this note have separating order duals. Therefore we will not distinguish between a Riesz space $E$ and its image in the order bidual $E^{\sim\sim}$. In all undefined terminology concerning Riesz spaces we will adhere to [3]. The notions of a Riesz space with property-b and b-order boundedness of operators between Riesz spaces were introduced in [1]. Definition. Let $E$ be a Riesz space. A set $A \subset E$ is called b-order bounded in $E$ if it is order bounded in $E^{\sim\sim}$. A Riesz space $E$ is said to have property-b if each subset $A \subset E$ which is order bounded in $E^{\sim\sim}$ remains order bounded in $E$.

1 Lecture 09

Notes for Functional Analysis. Wang Zuoqin (typed by Xiyu Zhai). September 29, 2015. 1 Lecture 09. 1.1 Equicontinuity. First let's recall the concept of "equicontinuity" for a family of functions that we learned in classical analysis: A family of continuous functions defined on a region $\Omega$, $\Lambda = \{f_\alpha\} \subset C(\Omega)$, is called an equicontinuous family if for every $\varepsilon > 0$ there exists $\delta > 0$ such that for any $x_1, x_2 \in \Omega$ with $|x_1 - x_2| < \delta$, and for any $f_\alpha \in \Lambda$, we have $|f_\alpha(x_1) - f_\alpha(x_2)| < \varepsilon$. This concept of equicontinuity can be easily generalized to maps between topological vector spaces. For simplicity we only consider linear maps between topological vector spaces, in which case the continuity (and in fact the uniform continuity) at an arbitrary point is reduced to the continuity at 0. Definition 1.1. Let $X$, $Y$ be topological vector spaces, and $\Lambda$ be a family of continuous linear operators. We say $\Lambda$ is an equicontinuous family if for any neighborhood $V$ of 0 in $Y$, there is a neighborhood $U$ of 0 in $X$, such that $L(U) \subset V$ for all $L \in \Lambda$. Remark 1.2. Equivalently, this means if $x_1, x_2 \in X$ and $x_1 - x_2 \in U$, then $L(x_1) - L(x_2) \in V$ for all $L \in \Lambda$. Remark 1.3. If $\Lambda = \{L\}$ contains only one continuous linear operator, then it is always equicontinuous. If $\Lambda$ is an equicontinuous family, then each $L \in \Lambda$ is continuous and thus bounded. In fact, this boundedness is uniform: Proposition 1.4. Let $X$, $Y$ be topological vector spaces, and $\Lambda$ be an equicontinuous family of linear operators from $X$ to $Y$.

Quantum Mechanics, Schrödinger Operators and Spectral Theory

Quantum mechanics, Schrödinger operators and spectral theory. Spectral theory of Schrödinger operators has been my original field of expertise. It is a wonderful mix of functional analysis, PDE and Fourier analysis. In quantum mechanics, every physical observable is described by a self-adjoint operator - an infinite dimensional symmetric matrix. For example, $-\Delta$ is a self-adjoint operator in $L^2(\mathbb{R}^d)$ when defined on an appropriate domain (Sobolev space $H^2$). In quantum mechanics it corresponds to a free particle - just traveling in space. What does it mean, exactly? Well, in quantum mechanics, position of a particle is described by a wave function, $\phi(x, t) \in L^2(\mathbb{R}^d)$. The physical meaning of the wave function is that the probability to find it in a region at time $t$ is equal to the integral of $|\phi(x, t)|^2\,dx$ over that region. You can't know for sure where the particle is. If initially the wave function is given by $\phi(x, 0) = \phi_0(x)$, the Schrödinger equation says that its evolution is given by $\phi(x, t) = e^{-i\Delta t}\phi_0$. But how to compute what is $e^{-i\Delta t}$? This is where Fourier transform comes in handy. Differentiation becomes multiplication on the Fourier transform side, and so $e^{-i\Delta t}\phi_0(x) = \int_{\mathbb{R}^d} e^{ikx - i|k|^2 t}\,\hat{\phi}_0(k)\,dk$, where $\hat{\phi}_0(k) = \int_{\mathbb{R}^d} e^{-ikx}\phi_0(x)\,dx$ is the Fourier transform of $\phi_0$. I omitted some $\pi$'s since they do not change the picture. Stationary phase methods lead to very precise estimates on free Schrödinger evolution.
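The remark that differentiation becomes multiplication on the Fourier side can be illustrated on a periodic grid. This is a minimal sketch under assumptions that are not in the snippet: a 2π-periodic test function sin(3x), a naive discrete Fourier transform written with the standard library, and the helper names `dft`, `idft` and `spectral_derivative`. It demonstrates only the derivative rule, not the Schrödinger evolution itself.

```python
import cmath
import math


def dft(values):
    """Naive discrete Fourier transform, O(n^2)."""
    n = len(values)
    return [
        sum(values[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        for k in range(n)
    ]


def idft(coeffs):
    """Inverse of dft above."""
    n = len(coeffs)
    return [
        sum(coeffs[k] * cmath.exp(2j * math.pi * k * j / n) for k in range(n)) / n
        for j in range(n)
    ]


def spectral_derivative(values):
    """Differentiate samples of a 2*pi-periodic function by multiplying
    each Fourier coefficient by i*k, where k is the integer wavenumber."""
    n = len(values)
    coeffs = dft(values)
    # Indices above n/2 represent negative wavenumbers.
    wavenumbers = [k if k < n // 2 else k - n for k in range(n)]
    scaled = [1j * k * c for k, c in zip(wavenumbers, coeffs)]
    return [z.real for z in idft(scaled)]


n = 32
grid = [2 * math.pi * j / n for j in range(n)]
samples = [math.sin(3 * x) for x in grid]

derivative = spectral_derivative(samples)

# Compare with the exact derivative 3*cos(3x) at the grid points.
max_error = max(abs(d - 3 * math.cos(3 * x)) for d, x in zip(derivative, grid))
print(max_error)
```

Applying the multiplier twice gives the second derivative, which is how the Laplacian turns into multiplication by $-|k|^2$ in the formula quoted above.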

An Introduction to Pseudo-Differential Operators

An introduction to pseudo-differential operators. Jean-Marc Bouclet, Université de Toulouse 3, Institut de Mathématiques de Toulouse. Contents: 1 Background on analysis on manifolds. 2 The Weyl law: statement of the problem. 3 Pseudodifferential calculus: 3.1 The Fourier transform; 3.2 Definition of pseudo-differential operators; 3.3 Symbolic calculus; 3.4 Proofs. 4 Some tools of spectral theory: 4.1 Hilbert-Schmidt operators; 4.2 Trace class operators; 4.3 Functional calculus via the Helffer-Sjöstrand formula. 5 $L^2$ bounds for pseudo-differential operators: 5.1 $L^2$ estimates; 5.2 Hilbert-Schmidt estimates; 5.3 Trace class estimates. 6 Elliptic parametrix and applications: 6.1 Parametrix on $\mathbb{R}^n$; 6.2 Localization of the parametrix. 7 Proof of the Weyl law: 7.1 The resolvent of the Laplacian on a compact manifold; 7.2 Diagonalization of $\Delta_g$; 7.3 Proof of the Weyl law. A Proof of the Peetre Theorem. Introduction. The spirit of these notes is to use the famous Weyl law (on the asymptotic distribution of eigenvalues of the Laplace operator on a compact manifold) as a case study to introduce and illustrate one of the many applications of the pseudo-differential calculus. The material presented here corresponds to a 24-hour course taught in Toulouse in 2012 and 2013. We introduce all tools required to give a complete proof of the Weyl law, mainly the semiclassical pseudo-differential calculus, and then of course prove it! The price to pay is that we avoid presenting many classical concepts or results which are not necessary for our purpose (such as Borel summations, principal symbols, invariance by diffeomorphism or the Gårding inequality).

Operator Algebras: an Informal Overview

OPERATOR ALGEBRAS: AN INFORMAL OVERVIEW. FERNANDO LLEDÓ. arXiv:0901.0232v1 [math.OA] 2 Jan 2009. Contents: 1. Introduction. 2. Operator algebras: 2.1. What are operator algebras?; 2.2. Differences and analogies between C*- and von Neumann algebras; 2.3. Relevance of operator algebras. 3. Different ways to think about operator algebras: 3.1. Operator algebras as non-commutative spaces; 3.2. Operator algebras as a natural universe for spectral theory; 3.3. Von Neumann algebras as symmetry algebras. 4. Some classical results: 4.1. Operator algebras in functional analysis; 4.2. Operator algebras in harmonic analysis; 4.3. Operator algebras in quantum physics. References. Abstract. In this article we give a short and informal overview of some aspects of the theory of C*- and von Neumann algebras. We also mention some classical results and applications of these families of operator algebras. 1. Introduction. Any introduction to the theory of operator algebras, a subject that has deep interrelations with many mathematical and physical disciplines, will miss out important elements of the theory, and this introduction is no exception. The purpose of this article is to give a brief and informal overview on C*- and von Neumann algebras which are the main actors of this summer school. We will also mention some of the classical results in the theory of operator algebras that have been crucial for the development of several areas in mathematics and mathematical physics. Being an overview we cannot provide details. Precise definitions, statements and examples can be found in [1] and references cited therein.

Multiple Operator Integrals: Development and Applications

Multiple Operator Integrals: Development and Applications, by Anna Tomskova. A thesis submitted for the degree of Doctor of Philosophy at the University of New South Wales, School of Mathematics and Statistics, Faculty of Science, May 2017. Abstract. Double operator integrals, originally introduced by Y.L. Daletskii and S.G. Krein in 1956, have become an indispensable tool in perturbation and scattering theory. Such an operator integral is a special mapping defined on the space of all bounded linear operators on a Hilbert space or, when it makes sense, on some operator ideal. Throughout the last 60 years the double and multiple operator integration theory has been greatly expanded in different directions and several definitions of operator integrals have been introduced reflecting the nature of a particular problem under investigation. The present thesis develops multiple operator integration theory and demonstrates how this theory applies to solving several deep problems in Noncommutative Analysis. The first part of the thesis considers double operator integrals. Here we present the key definitions and prove several important properties of this mapping. In addition, we give a solution of the Arazy conjecture, which was made by J. Arazy in 1982. In this part we also discuss the theory in the setting of Banach spaces and, as an application, we study the operator Lipschitz estimate problem in the space of all bounded linear operators on classical Lp-spaces of scalar sequences.