On Statistical Analysis of Synchronous Stream Ciphers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Differential Fault Attack on MICKEY

A Differential Fault Attack on MICKEY 2.0 Subhadeep Banik and Subhamoy Maitra Applied Statistics Unit, Indian Statistical Institute Kolkata, 203, B.T. Road, Kolkata-108. s.banik [email protected], [email protected] Abstract. In this paper we present a differential fault attack on the stream cipher MICKEY 2.0 which is in eStream's hardware portfolio. While fault attacks have already been reported against the other two eStream hardware candidates Trivium and Grain, no such analysis is known for MICKEY. Using the standard assumptions for fault attacks, we show that if the adversary can induce random single bit faults in the internal state of the cipher, then by injecting around 216:7 faults and performing 232:5 computations on an average, it is possible to recover the entire internal state of MICKEY at the beginning of the key-stream generation phase. We further consider the scenario where the fault may affect at most three neighbouring bits and in that case we require around 218:4 faults on an average. Keywords: eStream, Fault attacks, MICKEY 2.0, Stream Cipher. 1 Introduction The stream cipher MICKEY 2.0 [4] was designed by Steve Babbage and Matthew Dodd as a submission to the eStream project. The cipher has been selected as a part of eStream's final hardware portfolio. MICKEY is a synchronous, bit- oriented stream cipher designed for low hardware complexity and high speed. After a TMD tradeoff attack [16] against the initial version of MICKEY (ver- sion 1), the designers responded by tweaking the design by increasing the state size from 160 to 200 bits and altering the values of some control bit tap loca- tions. -

Failures of Secret-Key Cryptography D

Failures of secret-key cryptography D. J. Bernstein University of Illinois at Chicago & Technische Universiteit Eindhoven http://xkcd.com/538/ 2011 Grigg{Gutmann: In the past 15 years \no one ever lost money to an attack on a properly designed cryptosystem (meaning one that didn't use homebrew crypto or toy keys) in the Internet or commercial worlds". 2011 Grigg{Gutmann: In the past 15 years \no one ever lost money to an attack on a properly designed cryptosystem (meaning one that didn't use homebrew crypto or toy keys) in the Internet or commercial worlds". 2002 Shamir:\Cryptography is usually bypassed. I am not aware of any major world-class security system employing cryptography in which the hackers penetrated the system by actually going through the cryptanalysis." Do these people mean that it's actually infeasible to break real-world crypto? Do these people mean that it's actually infeasible to break real-world crypto? Or do they mean that breaks are feasible but still not worthwhile for the attackers? Do these people mean that it's actually infeasible to break real-world crypto? Or do they mean that breaks are feasible but still not worthwhile for the attackers? Or are they simply wrong: real-world crypto is breakable; is in fact being broken; is one of many ongoing disaster areas in security? Do these people mean that it's actually infeasible to break real-world crypto? Or do they mean that breaks are feasible but still not worthwhile for the attackers? Or are they simply wrong: real-world crypto is breakable; is in fact being broken; is one of many ongoing disaster areas in security? Let's look at some examples. -

LNCS 9065, Pp

Combined Cache Timing Attacks and Template Attacks on Stream Cipher MUGI Shaoyu Du1,4, , Zhenqi Li1, Bin Zhang1,2, and Dongdai Lin3 1 Trusted Computing and Information Assurance Laboratory, Institute of Software, Chinese Academy of Sciences, Beijing, China 2 State Key Laboratory of Computer Science, Institute of Software, Chinese Academy of Sciences, Beijing, China 3 State Key Laboratory of Information Security, Institute of Information Engineering, Chinese Academy of Sciences, Beijing, China 4 University of Chinese Academy of Sciences, Beijing, China du [email protected] Abstract. The stream cipher MUGI was proposed by Hitachi, Ltd. in 2002 and it was specified as ISO/IEC 18033-4 for keystream genera- tion. Assuming that noise-free cache timing measurements are possible, we give the cryptanalysis of MUGI under the cache attack model. Our simulation results show that we can reduce the computation complexity of recovering all the 1216-bits internal state of MUGI to about O(276) when it is implemented in processors with 64-byte cache line. The at- tack reveals some new inherent weaknesses of MUGI’s structure. The weaknesses can also be used to conduct a noiseless template attack of O(260.51 ) computation complexity to restore the state of MUGI. And then combining these two attacks we can conduct a key-recovery attack on MUGI with about O(230) computation complexity. To the best of our knowledge, it is the first time that the analysis of cache timing attacks and template attacks are applied to full version of MUGI and that these two classes of attacks are combined to attack some cipher. -

Detection and Exploitation of Small Correlations in Stream Ciphers

Detection and Exploitation of Small Correlations in Stream Ciphers Masterthesis conducted under the guidance of Prof. Dr. Joachim Rosenthal and Dr. Gérard Maze Institute of Mathematics, University of Zurich 2008 Urs Wagner Outline This thesis gives an overview of stream ciphers based on linear feedback shift registers (LFSR) and their vulnerability to correlation attacks. In the rst chapter, a short introduction to symmetric key ciphers is given. The main focus hereby is on LFSR based stream ciphers. Further, the principles of LFSR are presented. The chapter is then closed by a stream cipher example, the Gee Generator. The second chapter treats the general approach of correlation attacks. Moreover a correlation attack is mounted on the Gee Generator and the practical results are presented. Boolean functions play an important role in stream cipher designs. The Walsh transform, a tool to analyze the cryptographic properties of Boolean functions, is introduced in chapter 3. Additionally, the cryptographic properties themselves are discussed. In the fourth chapter, an improved kind of correlation attack -the fast correlation attack- is presented. It exploits the same weaknesses in the stream cipher designs as the correlation attack, the mode of operation is however dierent. In the last chapter, the insights gained in the previous chapters are used to suggest an attack on a stream cipher by Philips, named Hitag 2. 1 Acknowledgments This thesis was written in the course of my master's studies at the University of Zurich. I am grateful to Prof. Joachim Rosenthal who gave me the opportunity to write my master thesis in cryptography. Special thanks go to Dr. -

Analysis of Selected Block Cipher Modes for Authenticated Encryption

Analysis of Selected Block Cipher Modes for Authenticated Encryption by Hassan Musallam Ahmed Qahur Al Mahri Bachelor of Engineering (Computer Systems and Networks) (Sultan Qaboos University) – 2007 Thesis submitted in fulfilment of the requirement for the degree of Doctor of Philosophy School of Electrical Engineering and Computer Science Science and Engineering Faculty Queensland University of Technology 2018 Keywords Authenticated encryption, AE, AEAD, ++AE, AEZ, block cipher, CAESAR, confidentiality, COPA, differential fault analysis, differential power analysis, ElmD, fault attack, forgery attack, integrity assurance, leakage resilience, modes of op- eration, OCB, OTR, SHELL, side channel attack, statistical fault analysis, sym- metric encryption, tweakable block cipher, XE, XEX. i ii Abstract Cryptography assures information security through different functionalities, es- pecially confidentiality and integrity assurance. According to Menezes et al. [1], confidentiality means the process of assuring that no one could interpret infor- mation, except authorised parties, while data integrity is an assurance that any unauthorised alterations to a message content will be detected. One possible ap- proach to ensure confidentiality and data integrity is to use two different schemes where one scheme provides confidentiality and the other provides integrity as- surance. A more compact approach is to use schemes, called Authenticated En- cryption (AE) schemes, that simultaneously provide confidentiality and integrity assurance for a message. AE can be constructed using different mechanisms, and the most common construction is to use block cipher modes, which is our focus in this thesis. AE schemes have been used in a wide range of applications, and defined by standardisation organizations. The National Institute of Standards and Technol- ogy (NIST) recommended two AE block cipher modes CCM [2] and GCM [3]. -

Breaking Crypto Without Keys: Analyzing Data in Web Applications Chris Eng

Breaking Crypto Without Keys: Analyzing Data in Web Applications Chris Eng 1 Introduction – Chris Eng _ Director of Security Services, Veracode _ Former occupations . 2000-2006: Senior Consulting Services Technical Lead with Symantec Professional Services (@stake up until October 2004) . 1998-2000: US Department of Defense _ Primary areas of expertise . Web Application Penetration Testing . Network Penetration Testing . Product (COTS) Penetration Testing . Exploit Development (well, a long time ago...) _ Lead developer for @stake’s now-extinct WebProxy tool 2 Assumptions _ This talk is aimed primarily at penetration testers but should also be useful for developers to understand how your application might be vulnerable _ Assumes basic understanding of cryptographic terms but requires no understanding of the underlying math, etc. 3 Agenda 1 Problem Statement 2 Crypto Refresher 3 Analysis Techniques 4 Case Studies 5 Q & A 4 Problem Statement 5 Problem Statement _ What do you do when you encounter unknown data in web applications? . Cookies . Hidden fields . GET/POST parameters _ How can you tell if something is encrypted or trivially encoded? _ How much do I really have to know about cryptography in order to exploit implementation weaknesses? 6 Goals _ Understand some basic techniques for analyzing and breaking down unknown data _ Understand and recognize characteristics of bad crypto implementations _ Apply techniques to real-world penetration tests 7 Crypto Refresher 8 Types of Ciphers _ Block Cipher . Operates on fixed-length groups of bits, called blocks . Block sizes vary depending on the algorithm (most algorithms support several different block sizes) . Several different modes of operation for encrypting messages longer than the basic block size . -

Key Differentiation Attacks on Stream Ciphers

Key differentiation attacks on stream ciphers Abstract In this paper the applicability of differential cryptanalytic tool to stream ciphers is elaborated using the algebraic representation similar to early Shannon’s postulates regarding the concept of confusion. In 2007, Biham and Dunkelman [3] have formally introduced the concept of differential cryptanalysis in stream ciphers by addressing the three different scenarios of interest. Here we mainly consider the first scenario where the key difference and/or IV difference influence the internal state of the cipher (∆key, ∆IV ) → ∆S. We then show that under certain circumstances a chosen IV attack may be transformed in the key chosen attack. That is, whenever at some stage of the key/IV setup algorithm (KSA) we may identify linear relations between some subset of key and IV bits, and these key variables only appear through these linear relations, then using the differentiation of internal state variables (through chosen IV scenario of attack) we are able to eliminate the presence of corresponding key variables. The method leads to an attack whose complexity is beyond the exhaustive search, whenever the cipher admits exact algebraic description of internal state variables and the keystream computation is not complex. A successful application is especially noted in the context of stream ciphers whose keystream bits evolve relatively slow as a function of secret state bits. A modification of the attack can be applied to the TRIVIUM stream cipher [8], in this case 12 linear relations could be identified but at the same time the same 12 key variables appear in another part of state register. -

Ray Bradbury Creative Contest Literary Journal

32nd Annual Ray Bradbury Creative Contest Literary Journal 2016 Val Mayerik Val Ray Bradbury Creative Contest A contest of writing and art by the Waukegan Public Library. This year’s literary journal is edited, designed, and produced by the Waukegan Public Library. Table of Contents Elementary School Written page 1 Middle School Written page 23 High School Written page 52 Adult Written page 98 Jennifer Herrick – Designer Rose Courtney – Staff Judge Diana Wence – Staff Judge Isaac Salgado – Staff Judge Yareli Facundo – Staff Judge Elementary School Written The Haunted School Alexis J. In one wonderful day there was a school-named “Hyde Park”. One day when, a kid named Logan and his friend Mindy went to school they saw something new. Hyde Park is hotel now! Logan and Mindy Went inside to see what was going on. So they could not believe what they say. “Hyde Park is also now haunted! When Logan took one step they saw Slender Man. Then they both walk and there was a scary mask. Then mummies started coming out of the grown and zombies started coming from the grown and they were so stinky yuck! Ghost came out all over the school and all the doors were locked. Now Mindy had a plan to scare all the monsters away. She said “we should put all the monsters we saw all together. So they make Hyde Park normal again. And they live happy ever after and now it is back as normal. THE END The Haunted House Angel A. One day it was night. And it was so dark a lot of people went on a house called “dead”. -

(SMC) MODULE of RC4 STREAM CIPHER ALGORITHM for Wi-Fi ENCRYPTION

InternationalINTERNATIONAL Journal of Electronics and JOURNAL Communication OF Engineering ELECTRONICS & Technology (IJECET),AND ISSN 0976 – 6464(Print), ISSN 0976 – 6472(Online), Volume 6, Issue 1, January (2015), pp. 79-85 © IAEME COMMUNICATION ENGINEERING & TECHNOLOGY (IJECET) ISSN 0976 – 6464(Print) IJECET ISSN 0976 – 6472(Online) Volume 6, Issue 1, January (2015), pp. 79-85 © IAEME: http://www.iaeme.com/IJECET.asp © I A E M E Journal Impact Factor (2015): 7.9817 (Calculated by GISI) www.jifactor.com VHDL MODELING OF THE SRAM MODULE AND STATE MACHINE CONTROLLER (SMC) MODULE OF RC4 STREAM CIPHER ALGORITHM FOR Wi-Fi ENCRYPTION Dr.A.M. Bhavikatti 1 Mallikarjun.Mugali 2 1,2Dept of CSE, BKIT, Bhalki, Karnataka State, India ABSTRACT In this paper, VHDL modeling of the SRAM module and State Machine Controller (SMC) module of RC4 stream cipher algorithm for Wi-Fi encryption is proposed. Various individual modules of Wi-Fi security have been designed, verified functionally using VHDL-simulator. In cryptography RC4 is the most widely used software stream cipher and is used in popular protocols such as Transport Layer Security (TLS) (to protect Internet traffic) and WEP (to secure wireless networks). While remarkable for its simplicity and speed in software, RC4 has weaknesses that argue against its use in new systems. It is especially vulnerable when the beginning of the output key stream is not discarded, or when nonrandom or related keys are used; some ways of using RC4 can lead to very insecure cryptosystems such as WEP . Many stream ciphers are based on linear feedback shift registers (LFSRs), which, while efficient in hardware, are less so in software. -

Most Popular ■ CORN STALK RUNNER at Flock Together This 2017 TROY TURKEY TROT

COVERING FREE! UPSTATE NY NOVEMBER SINCE 2000 2018 Flock Together this Thanksgiving ■ 5K START AT 2013 TROY TURKEY TROT. JOIN THE CONTENTS 1 Running & Walking Most Popular ■ CORN STALK RUNNER AT Flock Together this 2017 TROY TURKEY TROT. Thanksgiving! 3 Alpine Skiing & Boarding Strutting Day! Ready for Ski Season! By Laura Clark 5 News Briefs 5 From the Publishers hat I enjoy most about Thanksgiving is that it is a teams are encouraged and die-hards relaxed, all-American holiday. And what is more are invited to try for the individual 50K option. 6-9 CALENDAR OF EVENTS WAmerican than our plucky, ungainly turkey? Granted, Proceeds benefit the Regional Food Bank of Northeastern New although Ben Franklin lost his bid to elevate our native species to November to January York, enabling them to ensure a bountiful Thanksgiving for every- national symbol status, the turkey gets the last cackle. For when one. (fleetfeetalbany.com) Things to Do was the last time you celebrated eagle day? On Thanksgiving Day, Thursday, November 22, get ready for 11 Hiking, Snowshoeing In a wishbone world, Thanksgiving gives the least offense. the most popular running day of the whole year. Sample one of Sure, it is a worry for turkeys but a trade-off if you consider all the these six races in our area. & Camping free publicity. While slightly distasteful for vegetarians, there are While most trots cater to the 5K crowd, perfect for strollers, West Stony Creek: Well-Suited all those yummy sides and desserts to consider. Best of all is the aspiring turklings and elders, the premiere 71st annual Troy emphasis on family members, from toms to hens to the littlest Turkey Trot is the only area race where it is still possible to double for Late Fall/Early Winter turklings (think ducklings). -

Cryptanalysis of Stream Ciphers Based on Arrays and Modular Addition

KATHOLIEKE UNIVERSITEIT LEUVEN FACULTEIT INGENIEURSWETENSCHAPPEN DEPARTEMENT ELEKTROTECHNIEK{ESAT Kasteelpark Arenberg 10, 3001 Leuven-Heverlee Cryptanalysis of Stream Ciphers Based on Arrays and Modular Addition Promotor: Proefschrift voorgedragen tot Prof. Dr. ir. Bart Preneel het behalen van het doctoraat in de ingenieurswetenschappen door Souradyuti Paul November 2006 KATHOLIEKE UNIVERSITEIT LEUVEN FACULTEIT INGENIEURSWETENSCHAPPEN DEPARTEMENT ELEKTROTECHNIEK{ESAT Kasteelpark Arenberg 10, 3001 Leuven-Heverlee Cryptanalysis of Stream Ciphers Based on Arrays and Modular Addition Jury: Proefschrift voorgedragen tot Prof. Dr. ir. Etienne Aernoudt, voorzitter het behalen van het doctoraat Prof. Dr. ir. Bart Preneel, promotor in de ingenieurswetenschappen Prof. Dr. ir. Andr´eBarb´e door Prof. Dr. ir. Marc Van Barel Prof. Dr. ir. Joos Vandewalle Souradyuti Paul Prof. Dr. Lars Knudsen (Technical University, Denmark) U.D.C. 681.3*D46 November 2006 ⃝c Katholieke Universiteit Leuven { Faculteit Ingenieurswetenschappen Arenbergkasteel, B-3001 Heverlee (Belgium) Alle rechten voorbehouden. Niets uit deze uitgave mag vermenigvuldigd en/of openbaar gemaakt worden door middel van druk, fotocopie, microfilm, elektron- isch of op welke andere wijze ook zonder voorafgaande schriftelijke toestemming van de uitgever. All rights reserved. No part of the publication may be reproduced in any form by print, photoprint, microfilm or any other means without written permission from the publisher. D/2006/7515/88 ISBN 978-90-5682-754-0 To my parents for their unyielding ambition to see me educated and Prof. Bimal Roy for making cryptology possible in my life ... My Gratitude It feels awkward to claim the thesis to be singularly mine as a great number of people, directly or indirectly, participated in the process to make it see the light of day. -

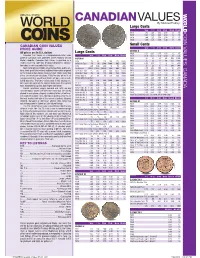

*UPDATED Canadian Values 07-04 201 7/26/2016 4:42:21 PM *UPDATED Canadian Values 07-04 202 COIN VALUES: CANADA 02 .0 .0 12

CANADIAN VALUES By Michael Findlay Large Cents VG-8 F-12 VF-20 EF-40 MS-60 MS-63R 1917 1.00 1.25 1.50 2.50 13. 45. CANADA COIN VALUES: 1918 1.00 1.25 1.50 2.50 13. 45. 1919 1.00 1.25 1.50 2.50 13. 45. 1920 1.00 1.25 1.50 3.00 18. 70. CANADIAN COIN VALUES Small Cents PRICE GUIDE VG-8 F-12 VF-20 EF-40 MS-60 MS-63R GEORGE V All prices are in U.S. dollars LargeL Cents C t 1920 0.20 0.35 0.75 1.50 12. 45. Canadian Coin Values is a comprehensive retail value VG-8 F-12 VF-20 EF-40 MS-60 MS-63R 1921 0.50 0.75 1.50 4.00 30. 250. guide of Canadian coins published online regularly at Coin VICTORIA 1922 20. 23. 28. 40. 200. 1200. World’s website. Canadian Coin Values is provided as a 1858 70. 90. 120. 200. 475. 1800. 1923 30. 33. 42. 55. 250. 2000. reader service to collectors desiring independent informa- 1858 Coin Turn NI NI 2500. 5000. BNE BNE 1924 6.00 8.00 11. 16. 120. 800. tion about a coin’s potential retail value. 1859 4.00 5.00 6.00 10. 50. 200. 1925 25. 28. 35. 45. 200. 900. Sources for pricing include actual transactions, public auc- 1859 Brass 16000. 22000. 30000. BNE BNE BNE 1926 3.50 4.50 7.00 12. 90. 650. tions, fi xed-price lists and any additional information acquired 1859 Dbl P 9 #1 225.