Automated Standard-Based Security Assessment for IoT

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Creating Awareness for the Use of OpenSource Systems in the Public Sector in Afghanistan

A service of the Leibniz-Informationszentrum Wirtschaft / Leibniz Information Centre for Economics (ZBW). EconStor: Make Your Publications Visible.

Hussini, Sayed Jahed (Ed.); Finke, Wolfgang F. (Ed.). Research Report. Creating Awareness for the Use of OpenSource Systems in the Public Sector in Afghanistan. Workshop Proceedings, Sept. 15-17, 2012, Kabul/Afghanistan. Wirtschaftswissenschaftliche Schriften, No. 02/2013.

Provided in cooperation with: Ernst-Abbe-Hochschule Jena – University of Applied Sciences, Department of Business Administration.

Suggested citation: Hussini, Sayed Jahed (Ed.); Finke, Wolfgang F. (Ed.) (2013): Creating Awareness for the Use of OpenSource Systems in the Public Sector in Afghanistan. Workshop Proceedings, Sept. 15-17, 2012, Kabul/Afghanistan, Wirtschaftswissenschaftliche Schriften, No. 02/2013, ISBN 3-939046-32-9, Ernst-Abbe-Fachhochschule, Fachbereich Betriebswirtschaft, Jena, http://nbn-resolving.de/urn:nbn:de:gbv:27-20130408-110626-4

This version is available at: http://hdl.handle.net/10419/71197

Terms of use: Documents in EconStor may be saved and copied for your personal and scholarly purposes. You are not to copy documents for public or commercial purposes, to exhibit the documents publicly, to make them publicly available on the internet, or to distribute or otherwise use the documents in public. If the documents have been made available under an Open Content Licence (especially Creative Commons Licences), the usage rights granted in the licence named there apply in place of these terms of use.

Vulnerability Scanners and Penetration Testing

Introduction

Objective: CompTIA Security+ Domain 3: Threats and Vulnerabilities. Objective mapping: Objective 3.8: Explain the proper use of penetration testing versus vulnerability scanning.

Overview: In this lab, you will scan a host to discover security holes. Discovering vulnerabilities is critical so they can be patched before attackers compromise your system.

Key terms:

OpenVAS: an open source vulnerability scanner
Privilege escalation: gaining a higher level of access (possibly administrative access) from an account with fewer permissions and rights
Zenmap: a GUI front end for nmap that allows you to scan for open ports and services
Metasploit: a framework that contains exploits for various information systems
nmap: a port scanner that indicates whether ports are open or closed on a remote system

Nmap and OpenVAS

1. Click on the External Kali 2 Linux icon on the topology. Type root for the username, and click the Next button. If Kali Linux is displaying the time, and not the logon box, press the Enter key. [Figures: Topology machines; External Kali 2 username]
2. For the password, type toor (root spelled backwards), and click the Sign In button. [Figure: External Kali 2 password]
3. Click the terminal icon (second from the top) to launch the Linux terminal. [Figure: Opening the Kali 2 terminal]
4. Type the following command to scan the firewall for open ports, then press Enter. [Figure: nmap]
   root@kali2:~# nmap 203.0.113.100
5. Type the following command, then press Enter to open Zenmap. After Zenmap opens, type 203.0.113.100 in the Target box and then click the Scan button to launch an intense scan.
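To make the idea of "scanning a host for open ports" in the lab steps above more concrete, here is a minimal Python sketch of a plain TCP connect check. It is not how nmap itself works internally (nmap offers several scan techniques and many more features); the function name `scan_ports` and the timeout value are assumptions chosen for illustration.

```python
import socket


def scan_ports(host, ports, timeout=0.5):
    """Return the ports from `ports` that accept a TCP connection on `host`.

    Hypothetical helper for illustration: a simple connect check,
    far less capable than a real scanner such as nmap.
    """
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

In the lab environment, a call such as `scan_ports("203.0.113.100", range(1, 1025))` would check the first 1024 TCP ports of the firewall address used in step 4. Only scan hosts you are authorized to test.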

List of Nmap Scripts for Use with the nmap --script Option

acarsd-info: Retrieves information from a listening acarsd daemon. Acarsd decodes ACARS (Aircraft Communication Addressing and Reporting System) data in real time. The information retrieved by this script includes the daemon version, API version, administrator e-mail address and listening frequency.
address-info: Shows extra information about IPv6 addresses, such as embedded MAC or IPv4 addresses when available.
afp-brute: Performs password guessing against Apple Filing Protocol (AFP).
afp-ls: Attempts to get useful information about files from AFP volumes. The output is intended to resemble the output of ls.
afp-path-vuln: Detects the Mac OS X AFP directory traversal vulnerability, CVE-2010-0533.
afp-serverinfo: Shows AFP server information. This information includes the server's hostname, IPv4 and IPv6 addresses, and hardware type (for example Macmini or MacBookPro).
afp-showmount: Shows AFP shares and ACLs.
ajp-auth: Retrieves the authentication scheme and realm of an AJP service (Apache JServ Protocol) that requires authentication.
ajp-brute: Performs brute force password auditing against the Apache JServ protocol. The Apache JServ Protocol is commonly used by web servers to communicate with back-end Java application server containers.
ajp-headers: Performs a HEAD or GET request against either the root directory or any optional directory of an Apache JServ Protocol server and returns the server response headers.
ajp-methods: Discovers which options are supported by the AJP (Apache JServ Protocol) server by sending an OPTIONS request and lists potentially risky methods.
ajp-request: Requests a URI over the Apache JServ Protocol and displays the result (or stores it in a file).
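As a small illustration of how script names from the list above are passed to nmap, the sketch below assembles a command line using the `--script` option, which accepts a comma-separated list of script names. The helper name `build_nmap_command` and the target address 192.0.2.10 (a documentation-only address) are assumptions for this example; the command is only built and printed, not executed.

```python
def build_nmap_command(target, scripts):
    """Build an argv list of the form: nmap --script <a,b,c> <target>.

    Hypothetical helper for illustration; it does not run nmap.
    """
    if not scripts:
        raise ValueError("at least one script name is required")
    return ["nmap", "--script", ",".join(scripts), target]


# Two of the AFP scripts from the list, against a placeholder target.
AFP_SCRIPTS = ["afp-serverinfo", "afp-showmount"]
command = build_nmap_command("192.0.2.10", AFP_SCRIPTS)
print(" ".join(command))
```

Building the argument list separately from running it keeps the example safe to execute anywhere; the list could later be handed to `subprocess.run` on a machine where nmap is installed and scanning is authorized.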

OpenVAS Reporting Documentation, Release 1.4.4

TheGroundZero, May 23, 2021

Contents

1 Table of contents
1.1 Changelog
1.2 Usage
1.2.1 Installation
1.2.2 Command line usage
2 What's OpenVAS Reporting?
3 Why create this tool?
4 Aren't there other tools to achieve this?
5 How can I help?
6 TODO list

A tool to convert OpenVAS XML into reports.

Chapter 1: Table of contents

1.1 Changelog

1.4.2 - Fixed "ValueError: Unknown format code 'f' for object of type 'str'"
1.4.1 - Small bugfixes and code refactoring
1.4.0 - Use Word template for report building
1.3.1 - Add charts to Word document using matplotlib. Some code clean-up and small lay-out changes in Excel.
1.3.0 - Fix retrieval of description and other useful info by parsing <tags> instead of <description>
1.2.3 - Implement https://github.com/cr0hn/openvas_to_report/pull/12
1.2.2 - Fix bug where port info was not correctly extracted
1.2.1 - Fix bug where affected hosts were added on wrong row in Excel export
1.2.0 - Functional export to Word document (.docx). Includes some formatting. TODO: graphs
1.1.0a - Support for exporting to Word document (.docx). Limited formatting, needs more testing
1.0.1a - Small updates, preparing for export to other formats
1.0.0 - First official release, supports export to Excel with graphs, ToC and worksheet per vulnerability

1.2 Usage

1.2.1 Installation

You can install this package directly from source by cloning the Git repository.

SPI Annual Report 2015

Software in the Public Interest, Inc. 2015 Annual Report

July 12, 2016

To the membership, board and friends of Software in the Public Interest, Inc:

As mandated by Article 8 of the SPI Bylaws, I respectfully submit this annual report on the activities of Software in the Public Interest, Inc. and extend my thanks to all of those who contributed to the mission of SPI in the past year.

Martin Michlmayr, SPI Secretary

Contents

1 President's Welcome
2 Committee Reports
2.1 Membership Committee
2.1.1 Statistics
3 Board Report
3.1 Board Members
3.2 Board Changes
3.3 Elections
4 Treasurer's Report
4.1 Income Statement
4.2 Balance Sheet
5 Member Project Reports
5.1 New Associated Projects
5.2 Updates from Associated Projects
5.2.1 0 A.D.
5.2.2 Chakra
5.2.3 Debian
5.2.4 Drizzle
5.2.5 FFmpeg
5.2.6 GNU TeXmacs
5.2.7 Jenkins
5.2.8 LibreOffice
5.2.9 OFTC
5.2.10 PostgreSQL
5.2.11 Privoxy
5.2.12 The Mana World
A About SPI

Chapter 1: President's Welcome

SPI continues to focus on our core services, quietly and competently supporting the activities of our associated projects. A huge thank-you to everyone, particularly our board and other key volunteers, whose various contributions of time and attention over the last year made continued SPI operations possible!

Bdale Garbee, SPI President

Chapter 2: Committee Reports

2.1 Membership Committee

2.1.1 Statistics

On January 1, 2015 we had 512 contributing and 501 non-contributing members.

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Securing Your Home Routers: Understanding Attacks and Defense Strategies

Joey Costoya, Ryan Flores, Lion Gu, and Fernando Mercês
Trend Micro Forward-Looking Threat Research (FTR) Team
A TrendLabs℠ Research Paper

Contents

Entry Points: How Threats Can Infiltrate Your Home Router
Postcompromise: Threats to Home Routers

TREND MICRO LEGAL DISCLAIMER

The information provided herein is for general information and educational purposes only. It is not intended and should not be construed to constitute legal advice. The information contained herein may not be applicable to all situations and may not reflect the most current situation. Nothing contained herein should be relied on or acted upon without the benefit of legal advice based on the particular facts and circumstances presented and nothing herein should be construed otherwise. Trend Micro reserves the right to modify the contents of this document at any time without prior notice.

Translations of any material into other languages are intended solely as a convenience. Translation accuracy is not guaranteed nor implied. If any questions arise related to the accuracy of a translation, please refer to the original language official version of the document. Any discrepancies or differences created in the translation are not binding and have no legal effect for compliance or enforcement purposes.

Although Trend Micro uses reasonable efforts to include accurate and up-to-date information herein, Trend Micro makes no warranties or representations of any kind as to its accuracy, currency, or completeness. You agree that access to and use of and reliance on this document and the content thereof is at your own risk.

Report of the 2014 NSF Cybersecurity Summit for Large Facilities and Cyberinfrastructure

Large Facility Cybersecurity Challenges and Responses

August 26 - August 28, Westin Arlington Gateway, Arlington, VA
http://trustedci.org/2014summit

December 19, 2014. For Public Distribution.

Craig Jackson, James Marsteller, Von Welch

Acknowledgements

The organizers wish to thank all those who attended the summit. Special gratitude goes to all those who responded to the CFP, spoke, provided training, and actively participated, including the 2014 Program Committee (highlighted in Section 3), without whom the event would not have been the same. Our sincere thanks go to the National Science Foundation and Indiana University's Center for Applied Cybersecurity Research for making this community event possible. The authors wish to extend particular thanks to the following individuals for their detailed assistance with this report: Ardoth Hassler, Drew Simshaw, Jim Basney.

This event was supported in part by the National Science Foundation under Grant Number 1234408. Any opinions, findings, and conclusions or recommendations expressed at the event or in this report are those of the authors and do not necessarily reflect the views of the National Science Foundation.

About this Report

Drafts of this report were circulated for comment to the Program Committee (November 5, 2014) and summit participants (December 3, 2014).

Citing this Report

Please cite as: Craig Jackson, James Marsteller, Von Welch. Report of the 2014 NSF Cybersecurity Summit for Cyberinfrastructure

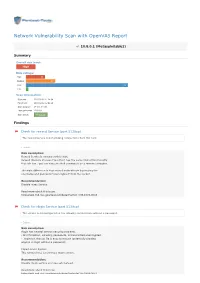

Network Vulnerability Scan with OpenVAS Report

Target: 10.8.0.1 (Metasploitable2)

Summary

Overall risk level: High
Risk ratings: High: 13, Medium: 20, Low: 69, Info: 1

Scan information:
Start time: 2018-03-02 11:24:54
Finish time: 2018-03-02 12:02:48
Scan duration: 37 min, 54 sec
Tests performed: 103/103
Scan status: Finished

Findings

Check for rexecd Service (port 512/tcp)

The rexecd service is not allowing connections from this host.

Details
Risk description: The rexecd service is running on this host. Rexecd (Remote Process Execution) has the same kind of functionality as rsh: you can execute shell commands on a remote computer. The main difference is that rexecd authenticates by reading the username and password *unencrypted* from the socket.
Recommendation: Disable the rexec service.
Read more about this issue: https://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-1999-0618

Check for rlogin Service (port 513/tcp)

The service is misconfigured, so it allows connections without a password.

Details
Risk description: rlogin has several serious security problems:
- All information, including passwords, is transmitted unencrypted.
- The .rlogin (or .rhosts) file is easy to misuse (potentially allowing anyone to log in without a password).
Impact level: System
This remote host is running an rlogin service.
Recommendation: Disable the rlogin service and use ssh instead.
Read more about this issue:
https://web.nvd.nist.gov/view/vuln/detail?vulnId=CVE-1999-0651
http://en.wikipedia.org/wiki/Rlogin
http://www.ietf.org/rfc/rfc1282.txt

DistCC Detection (port 3632/tcp)

No evidence

Details
Risk description: DistCC is a program to distribute builds of C, C++, Objective C or Objective C++ code across several machines on a network.
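The summary figures in this report can be cross-checked with a few lines of arithmetic. The sketch below recomputes the scan duration from the start and finish timestamps and sums the risk-rating counts. The values are copied from the report; the variable names are illustrative, and treating each test as producing exactly one rated finding is an assumption, not something the report states.

```python
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S"

# Timestamps copied from the report's scan information.
start = datetime.strptime("2018-03-02 11:24:54", FMT)
finish = datetime.strptime("2018-03-02 12:02:48", FMT)
minutes, seconds = divmod(int((finish - start).total_seconds()), 60)

# Risk-rating counts copied from the report's summary.
ratings = {"High": 13, "Medium": 20, "Low": 69, "Info": 1}
total_findings = sum(ratings.values())

print(f"Scan duration: {minutes} min, {seconds} sec")  # 37 min, 54 sec
print(f"Rated findings: {total_findings}")             # 103
```

The recomputed duration agrees with the reported 37 min, 54 sec, and the four rating counts add up to 103, the same number as the tests performed.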

Security Guide: SUSE Linux Enterprise Server 12 SP4

Introduces basic concepts of system security, covering both local and network security aspects. Shows how to use the product's inherent security software, such as AppArmor or the auditing system, which reliably collects information about any security-relevant events.

Publication Date: September 24, 2021

SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA
https://documentation.suse.com

Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

Contents

About This Guide
1 Available Documentation
2 Giving Feedback
3 Documentation Conventions
4 Product Life Cycle

2010-2011 Annual Report

Software in the Public Interest, Inc. 2010-2011 Annual Report

1st July 2011

To the membership, board and friends of Software in the Public Interest, Inc:

As mandated by Article 8 of the SPI Bylaws, I respectfully submit this annual report on the activities of Software in the Public Interest, Inc. and extend my thanks to all of those who contributed to the mission of SPI in the past year.

Jonathan McDowell, SPI Secretary

Contents

1 President's Welcome
2 Committee Reports
2.1 Membership Committee
2.1.1 Statistics
3 Board Report
3.1 Board Members
3.2 Board Changes
3.3 Elections
4 Treasurer's Report
4.1 Income Statement
4.2 Balance Sheet
5 Member Project Reports
5.1 New Associated Projects
5.1.1 LibreOffice
5.1.2 Open64
5.1.3 Jenkins
5.1.4 ankur.org.in
A About SPI

Chapter 1: President's Welcome

In the time that has passed since I originally agreed to serve as President several years ago, SPI has made significant and enduring progress resolving long-standing procedural problems that once impeded our ability to meet the basic service expectations of our associated projects. These accomplishments are of course not my own, but the collective result of work contributed by a dedicated core group of volunteers including the rest of our officers and board. A measure of our success is the steady stream of new SPI associated projects in recent years, including those we invited to join us in the last year listed later in this report.

2016 Annual Report

Software in the Public Interest, Inc. 2016 Annual Report

July 9, 2017

To the membership, board and friends of Software in the Public Interest, Inc:

As mandated by Article 8 of the SPI Bylaws, I respectfully submit this annual report on the activities of Software in the Public Interest, Inc. and extend my thanks to all of those who contributed to the mission of SPI in the past year.

Martin Michlmayr, SPI President

Contents

1 President's Welcome
2 Committee Reports
2.1 Membership Committee
2.1.1 Statistics
2.1.2 Active membership clean up
3 Board Report
3.1 Board Members
3.2 Board Changes
3.3 Elections
3.4 Face-to-face Meeting
4 Treasurer's Report
4.1 Income Statement
4.2 Balance Sheet
5 Member Project Reports
5.1 New Associated Projects
5.1.1 ArduPilot
5.1.2 Glucosio
5.1.3 NTPsec
5.1.4 Open MPI
5.1.5 OpenZFS
5.1.6 Performance Co-Pilot
5.1.7 Torch
5.1.8 X.Org
5.2 Updates from Associated Projects
5.2.1 0 A.D.
5.2.2 Chakra
5.2.3 Debian
5.2.4 Drizzle
5.2.5 FFmpeg
5.2.6 Jenkins
5.2.7 LibreOffice
5.2.8 NTPsec
5.2.9 Open Bioinformatics Foundation
5.2.10 SproutCore
5.2.11 Tux4Kids