Workflow Specification and Scheduling with Security

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

AIB 2010 Annual Meeting Rio De Janeiro, Brazil June 25-29, 2010

AIB 2010 Annual Meeting Rio de Janeiro, Brazil June 25-29, 2010 Registered Attendees For The 2010 Meeting The alphabetical list below shows the final list of registered delegates for the 2010 AIB Annual Conference in Rio de Janeiro, Brazil. Final Registrant Count: 895 A Esi Abbam Elliot, University of Illinois, Chicago Ashraf Abdelaal Mahmoud Abdelaal, University of Rome Tor vergata Majid Abdi, York University (Institutional Member) Monica Abreu, Universidade Federal do Ceara Kofi Afriyie, New York University Raj Aggarwal, The University of Akron Ruth V. Aguilera, University of Illinois at Urbana-Champaign Yair Aharoni, Tel Aviv University Niklas Åkerman, Linneaus School of Business and Economics Ian Alam, State University of New York Hadi Alhorr, Saint Louis University Andreas Al-Laham, University of Mannheim Gayle Allard, IE University Helena Allman, University of South Carolina Victor Almeida, COPPEAD / UFRJ Patricia Almeida Ashley,Universidade Federal Fluminense Ilan Alon, Rollins College Marcelo Alvarado-Vargas, Florida International University Flávia Alvim, Fundação Dom Cabral Mohamed Amal, Universidade Regional de Blumenau- FURB Marcos Amatucci, Escola Superior de Propaganda e Marketing de SP Arash Amirkhany, Concordia University Poul Houman Andersen, Aarhus University Ulf Andersson, Copenhagen Business School Naoki Ando, Hosei University Eduardo Bom Angelo,LAZAM MDS Madan Annavarjula, Bryant University Chieko Aoki,Blue Tree Hotels Masashi Arai, Rikkyo University Camilo Arbelaez, Eafit University Harvey Arbeláez, Monterey Institute -

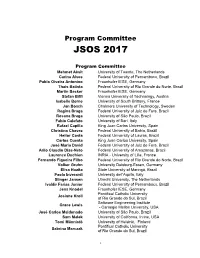

JSOS 2017 Program Committee

JSOS 2017 Program Committee Mehmet Aksit University of Twente, The Netherlands Carina Alves Federal University of Pernambuco, Brazil Pablo Oliveira Antonino Fraunhofer IESE, Germany Thais Batista Federal University of Rio Grande do Norte, Brazil Martin Becker Fraunhofer IESE, Germany Stefan Biffl Vienna University of Technology, Austria Isabelle Borne University of South Brittany, France Jan Bosch Chalmers University of Technology, Sweden Regina Braga Federal University of Juiz de Fora, Brazil Rosana Braga University of São Paulo, Brazil Fabio Calefato University of Bari, Italy Rafael Capilla King Juan Carlos University, Spain Christina Chavez Federal University of Bahia, Brazil Heitor Costa Federal University of Lavras, Brazil Carlos Cuesta King Juan Carlos University, Spain José Maria David Federal University of Juiz de Fora, Brazil Arilo Claudio Dias-Neto Federal University of Amazonas, Brazil Laurence Duchien INRIA - University of Lille, France Fernando Figueira Filho Federal University of Rio Grande do Norte, Brazil Volker Gruhn University Duisburg-Essen, Germany Elisa Huzita State University of Maringá, Brazil Paola Inverardi University dell'Aquila, Italy Slinger Jansen Utrecht University, The Netherlands Ivaldir Farias Junior Federal University of Pernambuco, Brazil Jens Knodel Fraunhofer IESE, Germany Josiane Kroll Pontifical Catholic University of Rio Grande do Sul, Brazil Grace Lewis Software Engineering Institute - Carnegie Mellon University, USA José Carlos Maldonado University of São Paulo, Brazil Sam Malek -

A Framework for Threat-Driven Cyber Security Verification of Iot Systems

Conference Organizers General Chairs Edson Midorikawa (Univ. de Sao Paulo - Brazil) Liria Matsumoto Sato (Univ. de Sao Paulo - Brazil) Program Committee Chairs Ricardo Bianchini (Rutgers University - USA) Wagner Meira Jr. (Univ. Federal de Minas Gerais - Brazil) Steering Committee Alberto F. De Souza (UFES, Brazil) Claudio L. Amorim (UFRJ, Brazil) Edson Norberto Cáceres (UFMS, Brazil) Jairo Panetta (INPE, Brazil) Jean-Luc Gaudiot (UCI, USA) Liria M. Sato (USP, Brazil) Philippe O. A. Navaux (UFRGS, Brazil) Rajkumar Buyya (University of Melbourne, Australia) Siang W. Song (USP, Brazil) Viktor Prasanna (USC, USA) Vinod Rabello (UFF, Brazil) Walfredo Cirne (Google, USA) Organizing Committee Artur Baruchi (USP-Brazil) Calebe de Paula Bianchini (Mackenzie – Brazil) Denise Stringhini (Mackenzie – Brazil) Edson Toshimi Midorikawa (USP-Brazil) Francisco Isidro Massetto (USP-Brazil) Gabriel Pereira da Silva (UFRJ - Brazil) Hélio Crestana Guardia (UFSCar – Brazil) Francisco Ribacionka (USP-Brazil) Hermes Senger (UFSCar – Brazil) Liria Matsumoto Sato (USP-Brazil) Ricardo Bianchini (Rutgers University - USA) Wagner Meira Jr. (UFMG - Brazil) Program Committee Alfredo Goldman vel Lejbman (USP-Brazil) Ismar Frango Silveira (Mackenzie-Brazil) Nicolas Kassalias (USJT-Brazil) Computer Architecture Track Vice-chair: David Brooks (Harvard) Claudio L. Amorim (Federal University of Rio de Janeiro) Nader Bagherzadeh (University of California at Irvine) Mauricio Breternitz Jr. (Intel) Patrick Crowley (Washington University) Cesar De Rose (PUC Rio Grande do Sul) Alberto Ferreira De Souza (Federal University of Espirito Santo) Jean-Luc Gaudiot (University of California at Irvine) Timothy Jones (University of Edinburgh) David Kaeli (Northeastern University) Jose Moreira (IBM Research) Philippe Navaux (Federal University of Rio Grande do Sul) Yale Patt (University of Texas at Austin) Gabriel P. -

Acta Cirúrgica Brasileira: Representação Interinstitucional E

1 - EDITORIAL Acta Cirúrgica Brasileira Representação interinstitucional e interdisciplinar Alberto GoldenbergI, Tânia Pereira Morais FinoII Saul GoldenbergIII, I Editor Científico Acta Cir Bras II Secretaria Acta Cir Bras III Fundador e Editor Chefe Acta Cir Bras Em editorial no fascículo no 1, janeiro-fevereiro de 2009, o número de artigos do exterior e o número de suplementos no apresentou-se o desempenho da Acta Cirúrgica Brasileira referentes período. aos anos de 1986 a 20001 e de 2001 a 20052, nos quais se destacava Decidimos investigar a participação interinstitucional e a distribuição geográfica dos autores, o número de artigos nacionais, interdisciplinar da revista nos anos de 2007 e 2008. ORIGEM INSTITUCIONAL DOS ARTIGOS INSTITUIÇÕES NACIONAIS Bahia Experimental Research Center, Faculty of Medicine, Federal University of Bahia[UFBA] Operative Technique and Experimental Surgery, Bahia School of Medicine Brasília Laboratory of Experimental Surgery, School of Medicine, University of Brasilia, Brazil. Ceará Department of Physiology and Pharmacology, Faculty of Medicine, Federal University of Ceará. Experimental Surgical Research Laboratory, Department of Surgery, Federal Univesity of Ceará, Brazil. Espírito Santo Laboratory Division of Surgical Principles, Department of Surgery, School of Sciences, Espirito Santo. Goiás Department of Veterinary Medicine, Veterinary Medicine College, Federal University of Goiás. 
Maranhão Experimental Research, Department of Surgery, Federal University of Maranhão Mato Grosso Department of Surgery,Federal University of Mato Grosso[UFMT] Mato Grosso do Sul Laboratory of Research, Mato Grosso do Sul Federal University Minas Gerais Department of Surgery, Experimental Laboratory, School of Medicine, Federal University of Minas Gerais Laboratory of Apoptosis, Department of General Pathology, Institute of Biological Science, Federal University of Minas Gerais Wild Animals Research Laboratory, Federal University of Uberlândia, Minas Gerais, Brazil. -

Signals and Systems: U.S. Colleges and Universities (308)

Signals and Systems: U.S. Colleges and Universities (308) Edmonds College, Lynnwood, WA American Military University, APU, Charles Town, WV Embry Riddle Aeronautical University, Daytona Beach, FL Amherst College, MA Embry Riddle Aeronautical University, Prescott, AZ Arizona State University, Tempe, AZ Five Towns College, Dix Hills, NY Arkansas State University, Jonesboro, AR Florida A&M University, Tallahassee, FL Arkansas Tech University, Russellville, AR Florida Atlantic University, Boca Raton, FL Auburn University, AL Florida International University, Miami, FL Austin Peay State University, Clarksville, TN Florida Polytechnic University, Lakeland, FL Baker College, Owosso, MI Florida State University, Tallahassee, FL Baylor University, Waco, TX Florida State University, Panama City, FL Benedictine College, Atchison, KS Gannon University, Erie, PA Boise State University, ID George Mason University, Fairfax, VA Boston University, MA The George Washington University, Washington, D.C. Bradley University, Peoria, IL Georgia Southern University, Statesboro, GA Brigham Young University, Provo, UT Georgia Tech, Atlanta, GA Brown University, Providence, RI Gonzaga University, Spokane, WA Bucknell University, Lewisburg, PA Grand Rapids Community College, MI Cal Poly, Pomona, CA Grand Valley State University, Grand Rapids, MI Cal Poly, San Luis Obispo, CA Grand Rapids Community College, MI Cal Tech, Pasadena, CA Hampton University, VA California State University, Chico, CA Hanover College, IN California State University, Fresno, CA Harvard University, -

Brazil Academic Webuniverse Revisited: a Cybermetric Analysis

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by E-LIS Brazil academic webuniverse revisited: A cybermetric analysis 1 ISIDRO F. AGUILLO 1 JOSÉ L. ORTEGA 1 BEGOÑA GRANADINO 1 CINDOC-CSIC. Joaquín Costa, 22. 28002 Madrid. Spain {isidro;jortega;bgranadino}@cindoc.csic.es Abstract The analysis of the web presence of the universities by means of cybermetric indicators has been shown as a relevant tool for evaluation purposes. The developing countries in Latin-American are making a great effort for publishing electronically their academic and scientific results. Previous studies done in 2001 about Brazilian Universities pointed out that these institutions were leaders for both the region and the Portuguese speaking community. A new data compilation done in the first months of 2006 identify an exponential increase of the size of the universities’ web domains. There is also an important increase in the web visibility of these academic sites, but clearly of much lower magnitude. After five years Brazil is still leader but it is not closing the digital divide with developed world universities. Keywords: Cybermetrics, Brazilian universities, Web indicators, Websize, Web visibility 1. Introduction. The Internet Lab (CINDOC-CSIC) has been working on the development of web indicators for the Latin-American academic sector since 1999 (1,2,3,4,5,6,7,8). The region is especially interesting due to the presence of several very large developing countries that are perfect targets for case studies and the use of Spanish and Portuguese languages in opposition to English usually considered the scientific “lingua franca”. -

Performance of Students Admitted Through Affirmative Action in Brazil. Latin American Research Review

Valente, R R and Berry, B J L. Performance of Students Admitted through Affirmative Action in Brazil. Latin American Research Review. 2017; 52(1), pp. 18-34. DOI: https://doi.org/10.25222/larr.50 SOCIOLOGY Performance of Students Admitted through Affirmative Action in Brazil Rubia R. Valente and Brian J. L. Berry University of Texas at Dallas, US Corresponding author: Rubia R. Valente ([email protected]) Following the implementation of the Lei das Cotas (Affirmative Action Law) in Brazil, there has been debate as to whether students who were admitted through affirmative action perform at the same level as students admitted through traditional methods. This article examines the results of the Exame Nacional de Desempenho dos Estudantes (ENADE) from 2009 to 2012 to determine whether there is a relationship between students’ performance at the university level and the manner of their admittance. We find that students admitted to public universities under affirmative action perform at similar levels to students who were not, whereas quota students in private universities perform slightly better than students admitted through traditional methods. Com a implementação das Lei das Cotas no Brasil, tem se debatido se estudantes admitidos através de ação afirmativa demonstram o mesmo nível acadêmico dos estudantes admitidos através de métodos tradicionais. Este artigo analisa os resultados do Exame Nacional de Desempenho dos Estudantes (ENADE) durante o período de 2009 á 2012 para determinar se existe uma relação entre o desempenho dos alunos no âmbito universitário e as modalidades da sua admissão. Nossa análise revela que os estudantes que foram admitidos em universidades públicas através de ações afirmativas têm o mesmo desempenho acadêmico de estudantes que não se beneficiam de ações afirmativas, enquanto alunos cotistas em universidades privadas têm um desempenho ligeiramente melhor do que alunos admitidos através de métodos tradicionais. -

The Pilotis As Sociospatial Integrator: the Urban Campus of the Catholic University of Pernambuco

THE PILOTIS AS SOCIOSPATIAL INTEGRATOR: THE URBAN CAMPUS OF THE CATHOLIC UNIVERSITY OF PERNAMBUCO Robson Canuto da Silva Universidade Católica de Pernambuco [email protected] Maria de Lourdes da Cunha Nóbrega Universidade Católica de Pernambuco [email protected] Andreyna Raphaella Sena Cordeiro de Lima Universidade Católica de Pernambuco [email protected] 8º CONGRESSO LUSO-BRASILEIRO PARA O PLANEAMENTO URBANO, REGIONAL, INTEGRADO E SUSTENTÁVEL (PLURIS 2018) Cidades e Territórios - Desenvolvimento, atratividade e novos desafios Coimbra – Portugal, 24, 25 e 26 de outubro de 2018 THE PILOTIS AS SOCIOSPATIAL INTEGRATOR: THE URBAN CAMPUS OF THE CATHOLIC UNIVERSITY OF PERNAMBUCO R. Canuto, M.L.C.C. Nóbrega e A. Sena ABSTRACT This study aims to investigate space configuration factors that promote patterns of pedestrian movement and social interactions on the campus of the Catholic University of Pernambuco, located in Recife, in the Brazilian State of Pernambuco. The campus consists of four large urban blocks and a number of modern buildings with pilotis, whose configuration facilitates not only pedestrian movement through the urban blocks, but also various other types of activity. The methodology is based on the Social Logic of Space Theory, better known as Space Syntax. The study is structured in three parts: (1) The Urban Campus, which presents an overview of the evolution of universities and relations between campus and city; (2) Paths of an Urban Campus, which presents a spatial analysis on two scales (global and local); and (3) The Role of pilotis as a Social Integrator, which discusses the socio-spatial role of pilotis. Space Syntax techniques (axial maps of global and local integration) were used to shed light on the nature of pedestrian movement and its social performance. -

A Case Study on Recent Developments

Academic Freedom in Brazil A Case Study on Recent Developments By CONRADO HÜBNER MENDES with ADRIANE SANCTIS DE BRITO, BRUNA ANGOTTI, FERNANDO ROMANI SALES, LUCIANA SILVA REIS and NATALIA PIRES DE VASCONCELOS Freedom of expression, freedom of thought, the freedom to teach and to learn, and PUBLISHED university autonomy are all protected by the Brazilian Constitution. Yet a closer look September 2020 at the state of academic freedom in the country reveals that these constitutional rights have come under attack. In recent years, the difficult political climate in Brazil has strained the country’s academic landscape, and its deeply polarized politics have aggravated pre-existing problems in the regulation and governance of higher education. Based on an analysis of media reporting, assessments by various research organizations as well as preliminary survey data, this case study investigates to what extent different dimensions of academic freedom have come under threat in Brazil. It also sheds light on recent efforts to promote academic freedom before concluding with several recommendations for Brazilian and international policymakers. gppi.net We are grateful to the anonymous reviewers who offered invaluable comments to the first version of this study. We also thank Janika Spannagel, Katrin Kinzelbach, Ilyas Saliba, and Alissa Jones Nelson for the substantial feedback along the way, and Leonardo Rosa for important conversations on federal universities regulation and ways to improve academic freedom in Brazil. Finally, we would like to thank the Global Public Policy Institute (GPPi) for the opportunity to draft and publish this case study as part of GPPi’s ongoing project on academic freedom. -

Partner Universities

COUNTRY CITY UNIVERSITY Argentina Buenos Aires Universidad Argentina de la Empresa (UADE) Argentina Buenos Aires Universidad del Salvador (USAL) Australia Brisbane Queensland University of Technology Australia Brisbane Queensland University of Technology QUT Australia Brisbane University of Queensland Australia Joondalup Edith Cowan University, ECU International Australia Melbourne Royal Melbourne Institute of Technology (RMIT) Australia Perth Curtin University Australia Toowoomba University of Southern Queensland, Toowoomba Australia Newcastle Newcastle University Austria Dornbirn FH VORARLBERG University of Applied Sciences Austria Graz FH Joanneum University of applied sciences Austria Innsbruck FHG-Zentrum fur Gesundheitsberufe Tirol GmbH Austria Linz University of Education in Upper Austria Austria Vienna Fachhochschule Wien Austria Vienna FH Campus Wien Austria Vienna University of Applied Sciences of BFI Vienna Austria Vienna University of Applied Sciences WKW Vienna Belgium Antwerp Artesis Plantijn Hogeschool van de Provincie Antwerpen Belgium Antwerp De Universiteit van Antwerpen Belgium Antwerp Karel de Grote Hogeschool, Antwerp Belgium Antwerp Karel de Grote University College Belgium Antwerp Thomas More Belgium Antwerp University of Antwerp Belgium Bruges Vives University College Belgium Brussel LUCA School of Arts Belgium Brussel Hogeschool Universiteit Brussel Belgium Brussels Erasmushogeschool Brussel Belgium Brussels ICHEC Bruxelles Belgium Brussels Odisee Belgium Ghent Artevelde Hogeschool Belgium Ghent Hogeschool Gent/ -

Redalyc.O ENIGMA DO AUTISMO: CONTRIBUIÇÕES SOBRE A

Psicologia em Estudo ISSN: 1413-7372 [email protected] Universidade Estadual de Maringá Brasil Mouta Fadda, Gisella; Engler Cury, Vera O ENIGMA DO AUTISMO: CONTRIBUIÇÕES SOBRE A ETIOLOGIA DO TRANSTORNO Psicologia em Estudo, vol. 21, núm. 3, julio-septiembre, 2016, pp. 411-423 Universidade Estadual de Maringá Maringá, Brasil Available in: http://www.redalyc.org/articulo.oa?id=287148579006 How to cite Complete issue Scientific Information System More information about this article Network of Scientific Journals from Latin America, the Caribbean, Spain and Portugal Journal's homepage in redalyc.org Non-profit academic project, developed under the open access initiative Doi: 10.4025/psicolestud.v21i3.30709 THE ENIGMA OF AUTISM: CONTRIBUTIONS TO THE ETIOLOGY OF THE DISORDER 1 Gisella Mouta Fadda Vera Engler Cury Pontifical Catholic University of Campinas (PUC-Campinas), Brazil. ABSTRACT. The lack of a definitive explanation for the causes of autism in children is an enigma that creates significant suffering among parents and difficulties for health professionals. This study is a critical review of the possible causes of autism, currently known as Autism Spectrum Disorder (ASD), spanning the period from the first description of the syndrome in 1943 until 2015. The objective of this article is to outline the current scenario of studies about this type of disorder in order to emphasize the points of convergence and the differences between the positions taken by the researchers who have dedicated themselves to this topic. The analysis suggests four main paradigms that attempt to encompass the etiology of autism: 1) the Biological-Genetic Paradigm; 2) the Relational Paradigm; 3) the Environmental Paradigm; and 4) the Neurodiversity Paradigm. -

30Th Brazilian Society for Virology 2019 Annual Meeting—Cuiabá, Mato Grosso, Brazil

viruses Conference Report 30th Brazilian Society for Virology 2019 Annual Meeting—Cuiabá, Mato Grosso, Brazil Renata Dezengrini Slhessarenko 1,* , Marcelo Adriano Mendes dos Santos 2, Michele Lunardi 3, Bruno Moreira Carneiro 4 , Juliana Helena Chavez-Pavoni 4, Daniel Moura de Aguiar 5 , Ana Claudia Pereira Terças Trettel 6,7 , Carla Regina Andrighetti 8 , Flávio Guimarães da Fonseca 9 , João Pessoa Araújo Junior 10 , Fabrício Souza Campos 11 , Luciana Barros de Arruda 12 ,Jônatas Santos Abrahão 9 and Fernando Rosado Spilki 13,* 1 Laboratório de Virologia, Faculdade de Medicina, Universidade Federal de Mato Grosso (UFMT), Cuiabá, MT 78060-900, Brazil 2 Faculdade de Ciências da Saúde, Universidade do Estado de Mato Grosso (UNEMAT), Caceres, MT 78200-000, Brazil; [email protected] 3 Laboratory of Veterinary Microbiology, Universidade de Cuiabá (UNIC), Cuiabá, MT 78065-900, Brazil; [email protected] 4 Instituto de Ciências Exatas e Naturais, Universidade Federal de Rondonópolis (UFR), Rondonópolis, MT 787350-900, Brazil; [email protected] (B.M.C.); [email protected] (J.H.C.-P.) 5 Laboratorio de Virologia e Rickettiososes, Faculdade de Medicina Veterinária, Universidade Federal de Mato Grosso (UFMT), Cuiabá, MT 78060-900, Brazil; [email protected] 6 Nursing Department, Universidade do Estado de Mato Grosso (UNEMAT), Tangará da Serra, MT 78300-000, Brazil; [email protected] 7 Collective Health Institute, Universidade Federal de Mato Grosso (UFMT), Cuiabá, MT 78060-900, Brazil 8 Instituto de Ciências da Saúde, Universidade