6 File Systems

Recommended publications

Filesystems HOWTO

Filesystems HOWTO, by Martin Hinner <[email protected]>, http://martin.hinner.info. Table of contents: 1. Introduction; 2. Volumes; 3. DOS FAT 12/16/32, VFAT; 4. High Performance FileSystem (HPFS); 5. New Technology FileSystem (NTFS); 6. Extended filesystems (Ext, Ext2, Ext3); 7. Macintosh Hierarchical Filesystem - HFS; 8. ISO 9660 - CD-ROM filesystem; 9. Other filesystems ...

File Allocation Table - Wikipedia, the Free Encyclopedia

File Allocation Table (FAT) is a file system developed by Microsoft for MS-DOS and is the primary file system for consumer versions of Microsoft Windows up to and including Windows Me. FAT as it applies to flexible/floppy and optical disc cartridges (FAT12 and FAT16 without long filename support) has been standardized as ECMA-107 and ISO/IEC 9293. The file system is partially patented. The FAT file system is relatively uncomplicated and is supported by virtually all existing operating systems for personal computers. This ubiquity makes it an ideal format for floppy disks and solid-state memory cards, and a convenient way of sharing data between disparate operating systems installed on the same computer (a dual boot environment). The most common implementations have a serious drawback: when files are deleted and new files are written to the media, directory fragments tend to become scattered over the entire disk, making reading and writing slow. Defragmentation is one solution, but it is often a lengthy process in itself and has to be performed regularly to keep the FAT file system clean. Defragmentation should not be performed on solid-state memory cards, since the additional writes wear them down. Contents: 1 History; 1.1 FAT12; 1.2 Directories; 1.3 Initial FAT16; 1.4 Extended partition and logical drives; 1.5 Final FAT16; 1.6 Long File Names (VFAT, LFNs); 1.7 FAT32; 1.8 Fragmentation; 1.9 Third party ...

Filesystem On-Disk Structures for FAT, HPFS, NTFS, JFS, EXTn and ReiserFS

On-disk filesystem structures, by Jan van Wijk. Filesystem on-disk structures for FAT, HPFS, NTFS, JFS, EXTn and ReiserFS. FS-info: FAT, HPFS, NTFS, JFS, EXTn, Reiser © 2019 JvW.

Presentation contents: generic filesystem architecture; (Enhanced) FAT(32), File Allocation Table variants; HPFS, High Performance FileSystem (OS/2 only); NTFS, New Technology FileSystem (Windows); JFS, Journaled File System (IBM classic or bootable); EXT2, EXT3 and EXT4 Linux filesystems; ReiserFS, Linux filesystem.

Who am I? Jan van Wijk, software engineer (C, C++, Rexx, PHP, Assembly). Founded FSYS Software in 2001, developing and supporting DFSee from version 4 to 16.x. First OS/2 experience in 1987, developing parts of OS/2 1.0 EE (Query Manager, later DB2). Used to be a systems-integration architect at a large bank with 500 servers and 7500 workstations. Developing embedded software for machine control and appliances from 2008 onwards. Home page: https://www.dfsee.com/

Information in a filesystem: generic volume information (boot sector, super blocks, special files ...); file and directory descriptive info (directories, FNODEs, INODEs, MFT-records); tree hierarchy of files and directories; free space versus used areas (allocation-table, bitmap); used disk-sectors for each file/directory (allocation-table, run-list, bitmap).

File Allocation Table: the FAT filesystem was derived from older CP/M filesystems for the first (IBM) PC. Designed for diskettes and small hard ...

4 Memory Management

4 MEMORY MANAGEMENT. Memory is an important resource that must be carefully managed. While the average home computer nowadays has a thousand times as much memory as the IBM 7094, the largest computer in the world in the early 1960s, programs are getting bigger faster than memories. To paraphrase Parkinson’s law, “Programs expand to fill the memory available to hold them.” In this chapter we will study how operating systems manage memory. Ideally, what every programmer would like is an infinitely large, infinitely fast memory that is also nonvolatile, that is, does not lose its contents when the electric power fails. While we are at it, why not also ask for it to be inexpensive, too? Unfortunately technology does not provide such memories. Consequently, most computers have a memory hierarchy, with a small amount of very fast, expensive, volatile cache memory, tens of megabytes of medium-speed, medium-price, volatile main memory (RAM), and tens or hundreds of gigabytes of slow, cheap, nonvolatile disk storage. It is the job of the operating system to coordinate how these memories are used. The part of the operating system that manages the memory hierarchy is called the memory manager. Its job is to keep track of which parts of memory are in use and which parts are not in use, to allocate memory to processes when they need it and deallocate it when they are done, and to manage swapping between main memory and disk when main memory is too small to hold all the processes. In this chapter we will investigate a number of different memory management schemes, ranging from very simple to highly sophisticated.

Operating Systems in Depth

OPERATING SYSTEMS IN DEPTH. Thomas W. Doeppner, Brown University. John Wiley & Sons, Inc. Vice-president & executive publisher: Donald Fowley. Executive editor: Beth Lang Golub. Executive marketing manager: Christopher Ruel. Production editor: Barbara Russiello. Editorial program assistant: Mike Berlin. Senior marketing assistant: Diana Smith. Executive media editor: Thomas Kulesa. Cover design: Wendy Lai. Cover photo: Thomas W. Doeppner. Cover photo is of Banggai Cardinalfish (Pterapogon kauderni), taken in the Lembeh Strait, North Sulawesi, Indonesia. This book was set in 10/12 Times Roman. The book was composed by MPS Limited, A Macmillan Company and printed and bound by Hamilton Printing Company. This book is printed on acid free paper. Founded in 1807, John Wiley & Sons, Inc. has been a valued source of knowledge and understanding for more than 200 years, helping people around the world meet their needs and fulfill their aspirations. Our company is built on a foundation of principles that include responsibility to the communities we serve and where we live and work. In 2008, we launched a Corporate Citizenship Initiative, a global effort to address the environmental, social, economic, and ethical challenges we face in our business. Among the issues we are addressing are carbon impact, paper specifications and procurement, ethical conduct within our business and among our vendors, and community and charitable support. For more information, please visit our ...

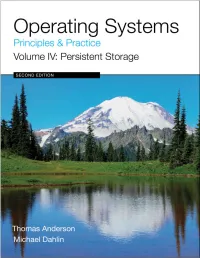

Operating Systems Principles and Practice, Volume 4

Operating Systems Principles & Practice, Volume IV: Persistent Storage, Second Edition. Thomas Anderson, University of Washington; Mike Dahlin, University of Texas and Google. Recursive Books, recursivebooks.com. Operating Systems: Principles and Practice (Second Edition), Volume IV: Persistent Storage, by Thomas Anderson and Michael Dahlin. Copyright © Thomas Anderson and Michael Dahlin, 2011-2015. ISBN 978-0-9856735-6-7. Publisher: Recursive Books, Ltd., http://recursivebooks.com/ Cover: Reflection Lake, Mt. Rainier. Cover design: Cameron Neat. Illustrations: Cameron Neat. Copy editors: Sandy Kaplan, Whitney Schmidt. Ebook design: Robin Briggs. Web design: Adam Anderson. SUGGESTIONS, COMMENTS, and ERRORS. We welcome suggestions, comments and error reports, by email to [email protected] Notice of rights. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form by any means (electronic, mechanical, photocopying, recording, or otherwise) without the prior written permission of the publisher. For information on getting permissions for reprints and excerpts, contact [email protected] Notice of liability. The information in this book is distributed on an “As Is” basis, without warranty. Neither the authors nor Recursive Books shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the information or instructions contained in this book or by the computer software and hardware products described in it. Trademarks: Throughout this book trademarked names are used. Rather than put a trademark symbol in every occurrence of a trademarked name, we state we are using the names only in an editorial fashion and to the benefit of the trademark owner with no intention of infringement of the trademark.

Download The

Seventh Edition. Abraham Silberschatz, Yale University; Peter Baer Galvin, Corporate Technologies, Inc.; Greg Gagne, Westminster College. John Wiley & Sons, Inc.

Contents

Chapter 9 Virtual Memory: 9.1 Background; 9.2 Demand Paging; 9.3 Copy-on-Write; 9.4 Page Replacement; 9.5 Allocation of Frames; 9.6 Thrashing; 9.7 Memory-Mapped Files; 9.8 Allocating Kernel Memory; 9.9 Other Considerations; 9.10 Operating-System Examples; 9.11 Summary; Exercises; Bibliographical Notes.

PART FOUR: STORAGE MANAGEMENT

Chapter 10 File-System Interface: 10.1 File Concept; 10.2 Access Methods; 10.3 Directory Structure; 10.4 File-System Mounting; 10.5 File Sharing; 10.6 Protection; 10.7 Summary; Exercises; Bibliographical Notes.

Chapter 11 File-System Implementation: 11.1 File-System Structure; 11.2 File-System Implementation; 11.3 Directory Implementation; 11.4 Allocation Methods; 11.5 Free-Space Management; 11.6 Efficiency and Performance; 11.7 Recovery; 11.8 Log-Structured File Systems; 11.9 NFS; 11.10 Example: The WAFL File System; 11.11 Summary; Exercises; Bibliographical Notes.

Chapter 12 Mass-Storage Structure: 12.1 Overview of Mass-Storage ...

Doctoral Thesis

Charles University in Prague, Faculty of Mathematics and Physics. DOCTORAL THESIS. Mikuláš Patočka: Design and Implementation of the Spad Filesystem. Department of Software Engineering. Advisor: RNDr. Filip Zavoral, Ph.D.

Title: Design and Implementation of the Spad Filesystem. Author: Mgr. Mikuláš Patočka, email: [email protected]ff.cuni.cz. Department: Department of Software Engineering, Faculty of Mathematics and Physics, Charles University in Prague, Czech Republic. Advisor: RNDr. Filip Zavoral, Ph.D., email: Filip.Zavoral@mff.cuni.cz. Mailing address (author): Mikuláš Patočka, Voskovcova 37, 152 00 Prague, Czech Republic. Mailing address (advisor): Dept. of Software Engineering, Charles University in Prague, Malostranské nám. 25, 118 00 Prague, Czech Republic. WWW: http://artax.karlin.mff.cuni.cz/~mikulas/spadfs/

Abstract: This thesis describes design and implementation of the Spad filesystem. I present my novel method for maintaining filesystem consistency: crash counts. I describe architecture of other filesystems and present my own design decisions in directory management, file allocation information, free space management, block allocation strategy and filesystem checking algorithm. I experimentally evaluate performance of the filesystem. I evaluate performance of the same filesystem on two different operating systems, enabling the reader to make a conclusion on how much the performance of various tasks is affected by operating system and how much by physical layout of data on disk.

Keywords: filesystem, operating system, crash counts, extendible hashing, SpadFS

Acknowledgments: I would like to thank my advisor Filip Zavoral for supporting my work and for reading and making comments on this thesis. I would also like to thank to colleague Leo Galamboš for testing my filesystem on his search engine with 1TB RAID array, which led to fixing some bugs and improving performance.

Local Filesystems

Local Filesystems. Jacques-Charles Lafoucriere, ENSIIE, ASE (S3-2018, 11/5/2018).

Lecture outline. Day 1: Definitions, Storage Media, Linux VFS, FUSE, DOS. Day 2: FFS, LFS, ExtN, NOVA, LTFS.

File system: definitions. What is a file system? A storage medium is a long stream of bytes, so data need to be organized, split in parts, and named. A FS is an organization of data to achieve some goals (speed, flexibility, integrity, security) and the software used to control how data is stored and retrieved. It can be optimized for some media: ISO 9660 for optical discs, tmpfs for memory.

FS concepts. Space management: allocate and free space in blocks; organize files and directories; keep track of which media belong to which file and which space is free. FS fragmentation: the space allocated to a file is not contiguous, which implies lots of jumps and decreases performance; it comes from file writes and removals when the media is close to full.

FS layers in the OS. Logical FS: the API for file operations. Virtual FS: a common interface that allows support of multiple physical FS and implements shared services for all of them. Physical FS: manages the physical operations on the device and implements all the logic specific to the FS.

FS concepts. Filenames: identify a file; may have some limits (length, case sensitive or not, forbidden characters such as the directory separator). Today most FS support ...

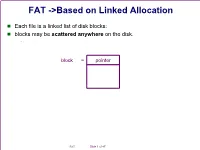

FAT: Based on Linked Allocation

FAT is based on linked allocation (slides 1 to 6 of 47). In linked allocation, each file is a linked list of disk blocks; the blocks may be scattered anywhere on the disk, and each block holds a pointer to the next one.

FAT is modified linked allocation combined with contiguous allocation. There are two modifications. First, all file pointers are extracted from the blocks and collected in a special table, called the FAT (File Allocation Table). Second, the allocation unit becomes extent-based: instead of allocating one block at a time, the file system allocates a multiple-block unit called a cluster, which is contiguous on disk.

FAT file system disk volume structures. Keyword: file allocation table. Minimal disk unit: disk block (512 bytes). Minimal file unit: cluster. A cluster is the base unit of a file and is a multiple of the block size (2K to 32K). Volume layout: boot block, FAT #1, FAT #2, data area.

FAT example. The volume holds the boot record, FAT #1, FAT #2, the root directory, and the data area of clusters. A directory entry (FCB) holds: file name, extension, file attribute, file size, creation time, modification time, accessed time, first cluster.

Master Boot Record (MBR). Every hard disk must have a consistent "starting point" where key information about the disk is stored, such as how many partitions it has and what sort of partitions they are. There also needs to be somewhere from which the BIOS can load the initial boot program that starts the process of loading the operating system. The place where this information is stored is called the master boot record (MBR). It is also sometimes called the master boot sector or even just the boot sector.
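The table-based linked allocation described in the FAT slides can be illustrated with a minimal Python sketch. It models the FAT as an in-memory list in which entry `i` holds the number of the cluster that follows cluster `i`. The `FREE` and `END_OF_CHAIN` values, the function name `cluster_chain`, and the toy table are assumptions made for illustration; real FAT variants use reserved numeric ranges and an on-disk layout that this sketch does not parse.

```python
# Simplified in-memory model of FAT-style linked allocation (illustrative only).
FREE = 0            # hypothetical marker for an unallocated cluster
END_OF_CHAIN = -1   # hypothetical marker for the last cluster of a file


def cluster_chain(fat, first_cluster):
    """Follow the table from a file's first cluster and return its cluster list."""
    chain = []
    seen = set()
    cluster = first_cluster
    while cluster != END_OF_CHAIN:
        # Clusters 0 and 1 are treated as reserved here, so data starts at 2.
        if not 2 <= cluster < len(fat):
            raise ValueError(f"invalid cluster number {cluster}")
        if cluster in seen:
            raise ValueError("loop detected in cluster chain")
        seen.add(cluster)
        chain.append(cluster)
        cluster = fat[cluster]  # the table entry plays the role of the block pointer
    return chain


# Toy table with two files: one starting at cluster 2, one at cluster 4.
fat = [FREE] * 10
fat[2], fat[5], fat[3] = 5, 3, END_OF_CHAIN
fat[4], fat[7] = 7, END_OF_CHAIN

print(cluster_chain(fat, 2))  # clusters of the first file, in order
print(cluster_chain(fat, 4))  # clusters of the second file, in order
```

A directory entry only needs to store the first cluster; everything else about the file's placement is recovered from the table, which is why the slides list "first cluster" among the directory entry fields.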

Lecture 5: Structure of Popular File Systems

Dr hab. inż. Janusz Bobulski, prof. PCz. [email protected] Department of Computer Science (Katedra Informatyki). Elements of Computer Forensics. Lecture 5: Structure of popular file systems. Classes are conducted as part of the project Integrated Development Programme of Częstochowa University of Technology (POWR.03.05.00-00-Z008/18-00), co-financed by the European Union from the European Social Fund under the Operational Programme Knowledge Education Development.

File system model: file system. A file system is stored on a volume and describes the files kept in it together with their associated metadata. At the file system layer you can also find elements such as metadata that is specific to a given type of file system and used solely to ensure its correct operation; a good example is the superblocks found in Ext2.

File system model: data unit. A data unit is the smallest independent block of data available in a given file system. In systems derived from UNIX these data units are called blocks. Such blocks usually have a size that is a multiple of the size of a single sector. Not so long ago disk sectors were 512 bytes in size, but in modern file systems the smallest addressable data units are 4096 bytes (4 kB) or larger. The information available at this layer of the file system model is simple: it is the content of the individual data units. For example, if a given data unit is assigned to a photo in JPEG format, that data unit stores a fragment of the JPEG image data. If a given data unit is assigned to a text file, it simply stores a fragment of that file's content.

Windows. Windows supports two basic file systems: FAT and NTFS.
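The relationship between sectors and data units described in this excerpt comes down to integer arithmetic. The following Python sketch assumes the 512-byte sector and 4096-byte data unit sizes quoted in the text; the function name `locate` and the sample offset are hypothetical and chosen only for illustration.

```python
SECTOR_SIZE = 512   # bytes, the older sector size quoted in the text
BLOCK_SIZE = 4096   # bytes, a 4 kB data unit as quoted in the text


def locate(byte_offset, block_size=BLOCK_SIZE, sector_size=SECTOR_SIZE):
    """Map a byte offset on a volume to (block index, offset in block, first sector)."""
    if block_size % sector_size != 0:
        raise ValueError("a data unit must be a whole number of sectors")
    block_index, offset_in_block = divmod(byte_offset, block_size)
    sectors_per_block = block_size // sector_size
    first_sector = block_index * sectors_per_block
    return block_index, offset_in_block, first_sector


# Byte 10000 lies in block 2 (bytes 8192..12287), 1808 bytes into that block,
# and block 2 starts at sector 16 because each block spans 8 sectors.
print(locate(10000))
```

The check at the top reflects the statement that a data unit is a multiple of the sector size; a forensic tool working at this layer would use the same arithmetic to translate a file offset into the sectors to read.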

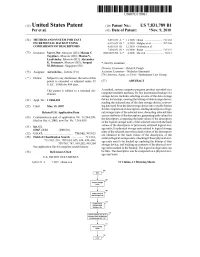

Method and System for Fast Incremental Backup Using Comparison of Descriptors

US007831789B1. (12) United States Patent, Per et al. (10) Patent No.: US 7,831,789 B1. (45) Date of Patent: *Nov. 9, 2010.

(54) METHOD AND SYSTEM FOR FAST INCREMENTAL BACKUP USING COMPARISON OF DESCRIPTORS

(75) Inventors: Yuri S. Per, Moscow (RU); Maxim V. Tsypliaev, Moscow (RU); Maxim V. Lyadvinsky, Moscow (RU); Alexander G. Tormasov, Moscow (RU); Serguei M. Beloussov, Singapore (SG).

(73) Assignee: Acronis Inc., Tortola (VG).

(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 494 days. This patent is subject to a terminal disclaimer.

(21) Appl. No.: 11/686,454. (22) Filed: Mar. 15, 2007.

Related U.S. Application Data: (63) Continuation-in-part of application No. ...

References cited: 5,835,953 A * 11/1998 Ohran, 711/162; 6,625,623 B1 * 9/2003 Midgley et al., 707/204; 6,665,815 B1 12/2003 Goldstein et al.; 7,434,052 B1 * 10/2008 Rump, 713/171; 2006/0075294 A1 * 4/2006 Ma et al., 714/13. (* cited by examiner)

Primary Examiner: Brian R Peugh. Assistant Examiner: Nicholas Simonetti. (74) Attorney, Agent, or Firm: Bardmesser Law Group.

(57) ABSTRACT: A method, system computer program product recorded on a computer readable medium, for fast incremental backup of a storage device includes selecting an area of the data storage device for backup; creating the bitmap of data storage device; reading the selected area of the data storage device; converting data read from the data storage device into a usable format for the comparison of descriptors; reading descriptors of logical storage units of the selected area; discarding selected data access attributes of the descriptors; generating hash values for ...
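The general idea named in the patent title, detecting changed storage units by comparing descriptors, can be sketched in Python. This is not the patented method: the abstract is cut off after the hashing step, so the comparison logic below is an assumption, and the descriptor fields, the `VOLATILE_ATTRS` set, and both function names are hypothetical. The sketch drops access-related attributes, hashes what remains, and reports units whose hash differs from the one recorded at the previous backup.

```python
import hashlib
import json

# Hypothetical attribute names treated as access-only and therefore ignored.
VOLATILE_ATTRS = {"last_access_time"}


def descriptor_hash(descriptor):
    """Hash a descriptor after discarding attributes that change on mere access."""
    stable = {k: v for k, v in descriptor.items() if k not in VOLATILE_ATTRS}
    encoded = json.dumps(stable, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def changed_units(previous_hashes, current_descriptors):
    """Return the sorted ids of units that are new or whose descriptor hash changed."""
    changed = []
    for unit_id, descriptor in current_descriptors.items():
        if previous_hashes.get(unit_id) != descriptor_hash(descriptor):
            changed.append(unit_id)
    return sorted(changed)


# State recorded at the previous backup.
old = {
    "a.txt": {"size": 10, "mtime": 100, "last_access_time": 500},
    "b.txt": {"size": 20, "mtime": 100, "last_access_time": 500},
}
previous = {name: descriptor_hash(d) for name, d in old.items()}

# Current state: a.txt was only read, b.txt was modified, c.txt is new.
new = {
    "a.txt": {"size": 10, "mtime": 100, "last_access_time": 900},
    "b.txt": {"size": 25, "mtime": 200, "last_access_time": 500},
    "c.txt": {"size": 5, "mtime": 300, "last_access_time": 300},
}

print(changed_units(previous, new))  # only b.txt and c.txt need backing up
```

Discarding the access attribute before hashing is what keeps a file that was merely read from being copied again, which is the point of the "discarding selected data access attributes" step in the abstract.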