Network Attack and Defence

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ESET Threat Report Q3 2020: ESET Researchers Reveal That Bugs Similar to KrØØk Affect More Chip Brands Than Previously Thought

Threat Report Q3 2020. WeLiveSecurity.com, @ESETresearch, ESET GitHub.

Contents: Featured Story, News from the Lab, APT Group Activity, Statistics & Trends, Top 10 malware detections, Downloaders, Banking malware, Ransomware, Cryptominers, Spyware & backdoors, Exploits.

Foreword: Welcome to the Q3 2020 issue of the ESET Threat Report! As the world braces for a pandemic-ridden winter, COVID-19 appears to be losing steam, at least in the cybercrime arena. With coronavirus-related lures played out, crooks seem to have gone “back to basics” in Q3 2020. An area where the effects of the pandemic persist, however, is remote work with its many security challenges.

This is especially true for attacks targeting Remote Desktop Protocol (RDP), which grew throughout H1. In Q3, RDP attack attempts climbed by a further 37% in terms of unique clients targeted, likely a result of the growing number of poorly secured systems connected to the internet during the pandemic, and possibly of other criminals taking inspiration from ransomware gangs in targeting RDP.

The ransomware scene, closely tracked by ESET specialists, saw a first this quarter: an attack investigated as a homicide after the death of a patient at a ransomware-struck hospital. Another surprising twist was the revival of cryptominers, which had been declining for seven consecutive quarters. There was a lot more happening in Q3: Emotet returning to the scene, Android banking malware surging, and new waves of emails impersonating major delivery and logistics companies.

This quarter’s research findings were equally rich, with ESET researchers uncovering more Wi-Fi chips vulnerable to KrØØk-like bugs, exposing Mac malware bundled with a cryptocurrency trading application, discovering CDRThief targeting Linux VoIP softswitches, and delving into KryptoCibule, a triple threat in regard to cryptocurrencies.

KR00K - CVE-2019-15126: A Serious Vulnerability in Wi-Fi Encryption

ESET Research white papers. TLP: WHITE

KR00K - CVE-2019-15126: A serious vulnerability in Wi-Fi encryption

Authors: Miloš Čermák, ESET Malware Researcher; Štefan Svorenčík, ESET Head of Experimental Research and Detection; Róbert Lipovský, ESET Senior Malware Researcher
In cooperation with: Ondrej Kubovič, Security Awareness Specialist
February 2020

Table of contents
Executive summary
Introduction
Technical background
Discovery of the KR00K vulnerability
Attack vector: exploiting the vulnerability
Unauthorized reading: data encryption
Affected devices
Vulnerable access points
Relationship between KR00K and KRACK
How to crack the Amazon Echo LED with KR00K
Comparison of KRACK and KR00K
Conclusion
Acknowledgements
Discovery timeline and disclosure history
About ESET

Executive summary
ESET researchers discovered a previously unknown vulnerability in Wi-Fi chips and named it Kr00k. This serious vulnerability, assigned CVE-2019-15126, causes vulnerable devices to encrypt part of the user's communication with an all-zero encryption key. If an attack exploiting it succeeds, the attacker can decrypt some of the wireless network packets transmitted by a vulnerable device.

Kr00k affects devices that carry Wi-Fi chips by Broadcom and Cypress and have not yet been patched. These are the most common Wi-Fi chips used in current Wi-Fi-capable devices such as smartphones, tablets, laptops, and IoT gadgets.

Not only client devices but also Wi-Fi access points and routers with Broadcom chips are affected by the vulnerability, which makes the environments of many client devices vulnerable.

Our tests confirmed that, prior to patching, some client devices by Amazon (Echo, Kindle), Apple (iPhone, iPad, MacBook), Google (Nexus), Samsung (Galaxy), Raspberry (Pi 3), and Xiaomi (RedMi), as well as some access points by Asus and Huawei, were vulnerable to Kr00k. A conservative estimate…
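The executive summary above turns on one point: data encrypted with an all-zero key is readable by anyone, because the "secret" is a publicly known constant. The sketch below illustrates only that point. It is an assumption-laden toy: the stream cipher is built from SHA-256 for the sake of a dependency-free example and is not the cipher that real Wi-Fi hardware uses, and the names `keystream`, `xor_cipher`, `session_key`, `nonce`, and `frame` are hypothetical, not taken from the white paper.

```python
import hashlib


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 over key, nonce and a block counter (illustration only)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def xor_cipher(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """XOR data with the keystream; the same call both encrypts and decrypts."""
    ks = keystream(key, nonce, len(data))
    return bytes(a ^ b for a, b in zip(data, ks))


ZERO_KEY = bytes(16)  # sixteen 0x00 bytes: the all-zero key described in the summary
session_key = bytes.fromhex("00112233445566778899aabbccddeeff")  # hypothetical real key
nonce = b"frame-0001"  # hypothetical per-frame value, visible to any listener
frame = b"GET /index.html"  # hypothetical plaintext payload

# Normal case: the frame is protected with the negotiated session key.
protected = xor_cipher(session_key, nonce, frame)

# Faulty case: the same frame is sent under the all-zero key instead.
leaked = xor_cipher(ZERO_KEY, nonce, frame)

# A listener who knows nothing but the constant zero key recovers the plaintext.
recovered = xor_cipher(ZERO_KEY, nonce, leaked)

# The zero key does not help against the properly protected frame.
failed_attempt = xor_cipher(ZERO_KEY, nonce, protected)
```

In this toy, `recovered` equals the original `frame`, while `failed_attempt` does not. That matches the summary's statement that only some packets, those sent under the zeroed key, become readable.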

Cyber Roundup – March 2020

Cyber Roundup – March 2020
By Thomas J. DeMayo, Principal, Cyber Risk Management

With the exception of the last bulleted item about the proposed federal Data Protection Agency, this month’s edition of Cyber Roundup soberly sets out various cybercrimes that are morphing into even more destructive acts, resulting in ever-expanding harm to businesses and individuals. The ingenious evil that allows these cyber criminals to swindle money, wreck reputations and cause chaos speaks to the need for vigilance and to the engagement of professionals to help insulate your IT systems, devices and procedures. In order to succeed in the long term, vigilance and professional help must be ongoing. Call us. We also call your attention to our special e-newsletter on the coronavirus in case you missed it.

Key Cyber Events
The following is a rundown of what happened during the month of February 2020. We welcome your comments, insights and questions.
• According to the FBI’s 2019 Internet Crime Report, business email compromise (BEC) accounted for almost half of reported cybercrime losses in 2019. Business email compromise is the method used by cyber criminals to trick victims into transferring funds. The tactic is usually accomplished by impersonating a legitimate person or company with which the business has a relationship. In total, the FBI received 467,361 complaints with losses totaling more than $3.5 billion. Approximately $1.77 billion is attributable to BEC.
• The Puerto Rican government reported that it fell victim to a business email compromise that resulted in the loss of $2.6 million. The money was transferred after an email was received claiming a change to banking information associated with a remittance payment.

Annual Report on Attacks and Vulnerabilities Seen in 2020

Annual Report on Attacks and Vulnerabilities seen in 2020
Released in February 2021

Table of contents
1 Introduction
2 What happened in 2020?
3 Analysis of the most striking phenomena of 2020
3.1 The cyber threat induced by the Covid-19 crisis
3.2 Ransomware's Attacks against companies
3.3 Attacks against VPN Access and Exposed Appliances
3.4 Orion SolarWinds and Supply-chain attacks
3.5 DDOS attacks
3.6 Increasingly Sophisticated State Attacks
3.7 Technical developments observed in 2020
3.7.1 Attacks against Exchange, SharePoint and IIS

View the Index

INDEX Symbols & Numbers Adafruit FT232H Breakout, 401–402 * character, 255, 263 adb (Android Debug Bridge), 360, 402 8N1 UART configuration, 158 adb logcat, 367–368 802.11 protocols, 288 adb pull command, 361 802.11w, 289 AddPortMapping command, 125–126, 130 addressing layer, UPnP, 119 address search macro, 204 A address space layout randomization, 345 AAAA records, 138–139 admin credentials, Netgear D6000, 213–214 A-ASSOCIATE abort message, 96 ADV_IND PDU type, 271 A-ASSOCIATE accept message, 96 ADV_NONCONN_IND PDU type, 271 A-ASSOCIATE reject message, 96 advanced persistent threat (APT) attacks, 26 A-ASSOCIATE request message, 96 adversaries, 6 C-ECHO requests dissector, building, aes128_cmac function, 325 101–105 AES 128-bit keys, 323, 325, 326 defining structure, 112–113 AFI (Application Family Identifier), 244 overview, 96 -afre argument, 135–136 parsing responses, 113–114 aftermarket security, 5 structure of, 101–102 Aircrack-ng, 289, 300–301, 402 writing contexts, 110–111 Aireplay-ng, 290 ABP (Activation by Personalization), 324, Airmon-ng, 289–290, 297–298 326–327, 330–331 Airodump-ng, 290, 301 Abstract Syntax, 111 Akamai, 118 access bits, 251–252 Akerun Smart Lock Robot app for iOS, 357 access controls, testing, 49–50 alarms, jamming wireless, 375–379 access points (APs), 287 Alfa Atheros AWUS036NHA, 402 cracking WPA Enterprise, 304–305 altering RFID tags, 255–256 cracking WPA/WPA2, 299–300 Amazon S3 buckets, 209–210 general discussion, 287–288 Amcrest IP camera, 147–152 overview, 299 amplification attacks, 94 access port, 60 analysis phase, network protocol account privileges, testing, 51 inspections, 92–93 ACK spoofing, 331 Andriesse, Dennis, 218 Activation by Personalization (ABP), 324, Android apps.

ESET Threat Report Q2 2020: ESET Researchers Reveal the Modus Operandi of the Elusive InvisiMole Group, Including Newly Discovered Ties with the Gamaredon Group

Threat Report Q2 2020. WeLiveSecurity.com, @ESETresearch, ESET GitHub.

Contents: Featured Story, News from the Lab, APT Group Activity, Statistics & Trends, Top 10 malware detections, Downloaders, Banking malware, Ransomware, Cryptominers, Spyware & backdoors.

Foreword: Welcome to the Q2 2020 issue of the ESET Threat Report! With half a year passed from the outbreak of COVID-19, the world is now trying to come to terms with the new normal. But even with the initial panic settled, and many countries easing up on their lockdown restrictions, cyberattacks exploiting the pandemic showed no sign of slowing down in Q2 2020.

Our specialists saw a continued influx of COVID-19 lures in web and email attacks, with fraudsters trying to make the most out of the crisis. ESET telemetry also showed a spike in phishing emails targeting online shoppers by impersonating one of the world’s leading package delivery services, a tenfold increase compared to Q1. The rise in attacks targeting Remote Desktop Protocol (RDP), the security of which still often remains neglected, continued in Q2, with persistent attempts to establish RDP connections more than doubling since the beginning of the year.

One of the most rapidly developing areas in Q2 was the ransomware scene, with some operators abandoning the still quite new trend of doxing and random data leaking, and moving to auctioning the stolen data on dedicated underground sites, and even forming “cartels” to attract more buyers. Ransomware also made an appearance on the Android platform, targeting Canada under the guise of a COVID-19 tracing app.

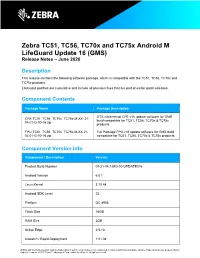

Zebra TC51, TC56, TC70x and TC75x Android M LifeGuard Update 16 (GMS) Release Notes – June 2020

Zebra TC51, TC56, TC70x and TC75x Android M LifeGuard Update 16 (GMS) Release Notes – June 2020

Description
This release contains the following software package, which is compatible with the TC51, TC56, TC70x and TC75x products. LifeGuard patches are cumulative and include all previous fixes that are part of earlier patch releases.

Component Contents (Package Name: Package Description)
CFE-TC51_TC56_TC70x_TC75x-M-XX-21-04.01-G-00-16.zip: OTA incremental CFE v16 update software for GMS build compatible for TC51, TC56, TC70x & TC75x products.
FPU-TC51_TC56_TC70x_TC75x-M-XX-21-04.01-G-00-16.zip: Full Package FPU v16 update software for GMS build compatible for TC51, TC56, TC70x & TC75x products.

Component Version Info (Component / Description: Version)
Product Build Number: 01-21-04.1-MG-00-UPDATE016
Android Version: 6.0.1
Linux Kernel: 3.10.84
Android SDK Level: 23
Platform: QC 8956
Flash Size: 16GB
RAM Size: 2GB
Active Edge: 2.5.13
Airwatch / Rapid Deployment: 1.01.04
Analytics Mgr: 2.4.0.1140
App Gallery: 3.0.1.7
Audio (Microphone and Speaker): 0.31.3.0
Battery Management: 1.3.8
Bluetooth Pairing Utility: 3.7.1
Data Analytics Engine: 1.0.2.2091
DataWedge: 7.0.4
Elemez-B2M: 1.0.0.347
EMDK: 7.0.0.2000
Enterprise Keyboard: 1.9.0.9
GMS: R12
MX version: 8.2.4.10
NFC: 4.3.0_M
OSX: QCT.60.6.8.1
PTT: 3.1.32
RxLogger: 4.60.2.0
Scanner: 19.53.37.1
SimulScan: SimulScanDemo 2.9, SimulScanRes 1.14.11
SMARTMU: 2.1
SOTI / MobiControl: 13.3.0 Build 1059
StageNow: 3.1.1.1019
Touch Panel: 1.8-Stylus-2-0, 1.8-Glove-2-0 (TC51/56); 1.9-Stylus-1-0, 2.0-Glove-1-0 (TC75X/70X)

ZEBRA and the stylized Zebra head are trademarks of Zebra Technologies Corp., registered in many jurisdictions worldwide. All other trademarks are the property of their respective owners. ©2020 Zebra Technologies Corp. and/or its affiliates. All rights reserved.

ESET Threat Report Q1 2020

Threat Report Q1 2020. WeLiveSecurity.com, @ESETresearch, ESET GitHub.

Contents: Featured Story, News from the Lab, APT Group Activity, Statistics & Trends, Top 10 malware detections, Downloaders, Banking malware, Ransomware, Cryptominers, Spyware & backdoors, Exploits, Mac threats, Android threats.

Foreword: Welcome to the first quarterly ESET Threat Report! The first quarter of 2020 was, without a doubt, defined by the outbreak of COVID-19, now a pandemic that has put much of the world under lockdown, disrupting people’s lives in unprecedented ways.

In the face of these developments, many businesses were forced to swiftly adopt work-from-home policies, thereby facing numerous new challenges. Soaring demand for remote access and videoconferencing applications attracted cybercriminals who quickly adjusted their attack strategies to profit from the shift.

Cybercriminals also haven’t hesitated to exploit public concerns surrounding the pandemic. In March 2020, we saw a surge in scam and malware campaigns using the coronavirus pandemic as a lure, trying to capitalize on people’s fears and hunger for information.

Even under lockdown, our analysts, detection engineers and security specialists continued to keep a close eye on this quarter’s developments. Some threat types, such as cryptominers or Android malware, saw a decrease in detections compared with the previous quarter; others, such as web threats and stalkerware, were on the rise. Web threats in particular have seen the largest increase in terms of overall numbers of detections, a possible side effect of coronavirus lockdowns.

ESET Research Labs also did not stop investigating threats: Q1 2020 saw them dissect obfuscation techniques in Stantinko’s new cryptomining module; detail the workings of advanced Brazil-targeting banking trojan Guildma; uncover new campaigns by the infamous Winnti Group and Turla; and uncover KrØØk, a previously unknown vulnerability affecting the encryption of over a billion Wi-Fi devices.

It's Time for Security Now!. Steve Gibson Is Here. Lots to Talk About

Security Now! Transcript of Episode #756: Kr00k

Description: This week we look at a significant milestone for Let's Encrypt; the uncertain future of Facebook, Google, Twitter and others in Pakistan; some revealing information about the facial image scraping and recognition company Clearview AI; the Swiss government's reaction to the Crypto AG revelations; a "must patch now" emergency for Apache Tomcat servers; a revisit of OCSP stapling; a tried and true means of increasing your immunity to viruses; an update on SpinRite; and the latest serious vulnerability in our WiFi infrastructure, known as Kr00k.

High quality (64 kbps) mp3 audio file URL: http://media.GRC.com/sn/SN-756.mp3
Quarter size (16 kbps) mp3 audio file URL: http://media.GRC.com/sn/sn-756-lq.mp3

SHOW TEASE: It's time for Security Now!. Steve Gibson is here. Lots to talk about. We've got Let's Encrypt's one-billionth certificate, just in time for a major security problem that they're rushing to fix. We'll have the details on that. Also, the new Kr00k vulnerability at WPA3. Steve has a prescription that'll keep you safe. And we're going to talk about Clearview AI, the face recognition that was used by a lot more people than anybody thought. It's all coming up next on Security Now!.

Leo Laporte: This is Security Now! with Steve Gibson, Episode 756, recorded Tuesday, March 3rd, 2020: Kr00k. It's time for Security Now!, the show where we cover your security, your privacy, your health, your happiness, your welfare, online and off.

Cyber Threat Monitor Report 2019-20

Cyber Threat Monitor Report 2019-20
K7 Cyber Threat Monitor

Contents
Deciphering The Indian Cybersecurity Threat Landscape
Regional Infection Profile
Enterprise Insecurity
Case Study: Spurt In RDP Attacks
COVID-19 Themed Attacks
Safety Recommendations
Vulnerabilities Galore
Curveball Aka Windows Crypto API Spoofing Vulnerability
Ghostcat Vulnerability
Unauthenticated Meeting Join Vulnerability In Webex Meetings
SMBghost
Prevalent Remote Code Exploitation
RCE Exploitation Using Shortcut Files
RCE Vulnerability In Microsoft Exchange Server
Danger In The Internet Of Things
Healthcare Sector Wakes Up To MDhex
Zero-Day Vulnerabilities Discovered In Cisco Discovery Protocol (CDP)
Kr00k - The