An Expansion-Based QBF Solver for Negation Normal Form

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Mathematical Logic, an Introduction

Mathematical Logic, an Introduction, by Peter Koepke. Bonn, Winter 2019/20.

"When should mathematics ever reach a beginning if it were to wait until philosophy had arrived at clarity and unanimity about our basic concepts? Our only salvation is the formalist standpoint: to place undefined concepts (such as number, point, thing, set) at the head, whose actual or psychological or intuitive meaning does not concern us, and likewise unproved propositions (axioms), whose actual correctness is no business of ours. From these primitive concepts and judgments we obtain others by definition and deduction, and only this derivation is our work and our goal." (Felix Hausdorff, 12 January 1918)

Table of contents:

- 1 Introduction
- I First-order Logic and the Gödel Completeness Theorem
- 2 The Syntax of first-order logic: Symbols, words, and formulas
- 2.1 Motivation: a mathematical statement
- 2.2 Symbols
- 2.3 Words
- 2.4 Terms
- 2.5 Formulas
- 3 Semantics
- 4 The satisfaction relation
- 5 Logical implication and propositional connectives
- 6 Substitution and term rules
- 7 A sequent calculus
- 8 Derivable sequent rules
- 8.1 Auxiliary derived rules
- 8.2 Introduction and elimination of […]
- 8.3 Formal proofs about […]
- 9 Consistency
- 10 Term models and Henkin sets
- 11 Constructing Henkin sets
- 12 The completeness theorem
- 13 The compactness theorem
- II Herbrand's Theorem and Automatic Theorem Proving
- 14 Normal forms
- 14.1 Negation normal form
- 14.2 Conjunctive and disjunctive normal form …

Solving the Boolean Satisfiability Problem Using the Parallel Paradigm

Philosophiæ doctor thesis. Hoessen Benoît. Solving the Boolean Satisfiability problem using the parallel paradigm.

Jury composition:

- PhD director: Audemard Gilles, Professor at Université d'Artois
- PhD co-director: Jabbour Saïd, Assistant Professor at Université d'Artois
- PhD co-director: Piette Cédric, Assistant Professor at Université d'Artois
- Examiner: Simon Laurent, Professor at University of Bordeaux
- Examiner: Dequen Gilles, Professor at University of Picardie Jules Verne
- Katsirelos George, Chargé de recherche at Institut national de la recherche agronomique, Toulouse

Abstract: This thesis presents different techniques for solving the Boolean satisfiability problem using parallel and distributed architectures. In order to provide a complete explanation, a careful presentation of the CDCL algorithm is given, followed by the state of the art in this domain. Two propositions are then made. The first is an improvement on a portfolio algorithm that allows more data to be exchanged without losing efficiency. The second is a complete library, with its API, that makes it easy to create a distributed SAT solver.

Keywords: SAT, parallelism, distributed, solver, logic

Résumé (translated from the French): This thesis presents different techniques for solving the satisfiability problem for Boolean formulas using parallelism and distributed computing. In order to give as complete an explanation as possible, a detailed presentation of the CDCL algorithm is given, followed by a survey of the state of the art. From this starting point, two directions are explored. The first is an improvement of a portfolio-type algorithm that allows more information to be exchanged without loss of efficiency. …

My Slides for a Course on Satisfiability

Satisfiability. Victor W. Marek, Computer Science, University of Kentucky, Spring Semester 2005.

Satisfiability story

- Satisfiability: invented in the 20s of the XX century by philosophers and mathematicians (Wittgenstein, Tarski)
- Shannon (late 20s): applications to what was then known as electrical engineering
- Fundamental developments in the […]0s and […]0s: both the mathematics of it and fundamental algorithms
- […]0s: progress in computing speed and in solving moderately large problems
- Emergence of "killer applications" in Computer Engineering, Bounded Model Checking

Current situation

- SAT solvers as a class of software
- Solving large cases generated by industrial applications
- Vibrant area of research both in Computer Science and in Computer Engineering
  - Various CS meetings (SAT, AAAI, CP)
  - Various CE meetings (CAV, FMCAD, DATE)
  - CE meetings stressing applications

This Course

- Providing mathematical and computer science foundations of SAT
  - General mathematical foundations
  - Two- and three-valued logics
  - Complete sets of functors
  - Normal forms (including ite)
  - Compactness of propositional logic
  - Resolution rule, completeness of resolution, semantical resolution
  - Fundamental algorithms for SAT
  - Craig Lemma

And if there is enough time and will...

- Easy cases of SAT
  - Horn
  - 2SAT
  - Linear formulas
- And if there is time (but notes will be provided anyway)
  - Expressing runs of polynomial-time NDTM as SAT, NP-completeness
  - "Mix and match"
  - Learning in SAT, partial closure under resolution
  - Bounded Model Checking

Various remarks

- Expected involvement of Dr. Truszczynski
- An extensive set of notes (> 200 pages), covering most of the topics, will be provided free of charge. …

Logic and Computation – CS 2800 Fall 2019

Logic and Computation – CS 2800 Fall 2019. Lecture 12: Propositional logic continued. CNF, DNF, complete Boolean bases. Stavros Tripakis.

Discuss homework 02 survey

- How many courses are you taking?
- How many hours per course would you like to be spending, ideally? (Including everything: attending lectures, reading, homeworks, projects, piazza, …)
- How many of those hours on homework?

Outline

- Properties of Boolean operators: read and assimilate Section 3.3 of lecture notes!
- Normal forms, DNF and CNF
- Complete Boolean bases

Properties of Boolean operators

- Review lecture notes, section 3.3

Normal forms, CNF, DNF

Negation Normal Form (NNF)

- "Push" all negations all the way to the leaves of the syntax tree of the formula (literals)
- Eliminate double negations
- Examples: […]

Disjunctive Normal Form (DNF)

- A formula is in DNF if it is a disjunction of conjunctions of literals
- Literal = either a variable or a negated variable
- Examples: which formulas below are in DNF? […]

Conjunctive Normal Form (CNF)

- A formula is in CNF if it is a conjunction of disjunctions of literals
- A disjunction of literals is also called a clause
- Examples: which formulas below are in CNF? […]

Can we transform any Boolean formula into DNF?

- Yes
- Brute-force method: 1. …
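
The NNF recipe in the snippet above (push negations to the leaves, eliminate double negations) can be sketched in a few lines of Python. This is only an illustrative sketch, not code from the lecture: the nested-tuple formula encoding (`"var"`, `"not"`, `"and"`, `"or"`) and the function name `to_nnf` are assumptions made for this example.

```python
# Hypothetical encoding (an assumption for this sketch):
#   ("var", name) | ("not", f) | ("and", f, g) | ("or", f, g)

def to_nnf(f):
    """Return an equivalent formula in which "not" wraps only variables."""
    op = f[0]
    if op == "var":
        return f
    if op in ("and", "or"):
        return (op, to_nnf(f[1]), to_nnf(f[2]))
    # op == "not": inspect the negated subformula
    g = f[1]
    if g[0] == "var":
        return f                      # already a literal
    if g[0] == "not":
        return to_nnf(g[1])           # eliminate the double negation
    # De Morgan: swap the connective and push the negation inward
    dual = "or" if g[0] == "and" else "and"
    return (dual, to_nnf(("not", g[1])), to_nnf(("not", g[2])))


a, b, c = ("var", "a"), ("var", "b"), ("var", "c")
# not(not a and b) or not c
formula = ("or", ("not", ("and", ("not", a), b)), ("not", c))
nnf = to_nnf(formula)
# nnf is ("or", ("or", a, ("not", b)), ("not", c))
```

Each recursive call either descends into a subformula or removes one negation from above a connective, so the procedure terminates and the result grows at most linearly in the size of the input.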

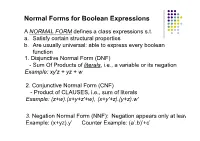

Normal Forms for Boolean Expressions

A normal form defines a class of expressions that:

- a. satisfy certain structural properties
- b. are usually universal: able to express every Boolean function

1. Disjunctive Normal Form (DNF): sum of products of literals, where a literal is a variable or its negation. Example: xy'z + yz + w
2. Conjunctive Normal Form (CNF): product of clauses, where a clause is a sum of literals. Examples: (z+w).(x+y+z'+w), (x+y'+z).(y+z).w'
3. Negation Normal Form (NNF): negation appears only at the leaves. Example: (x+yz).y'. Counterexample: (a'.b)'+c'

Propositional logic: decidability and complexity

- Theorem: Satisfiability of CNF formulas is NP-complete
- Theorem: Validity of DNF formulas is coNP-complete
- Theorem: For arbitrary Boolean formulas, satisfiability is NP-complete and validity is coNP-complete
- Intuition behind NP-completeness: transformation between normal forms can have exponential blow-up

2SAT satisfiability is polynomial time

Implication graph notes:

1. Each clause is an implication, e.g., x'+y = x → y
2. One vertex for each literal in a clause
3. One edge for each implication

For each variable X, check whether there is a path from X to X' as well as from X' to X; if both paths exist for some variable, the formula is unsatisfiable. Path checking on a graph is polynomial.

Reduction of 3SAT CNF to the Clique problem on graphs

- Theorem: 3SAT and above is NP-complete
- Note: Clique is NP-complete

Are we doomed then?

- No, there are efficient methods that work very well for large classes of formulas
- We study two techniques that are the basis for widely used tools in practice
- ROBDD: a compact canonical form for arbitrary Boolean functions
- SAT solving: an efficient heuristic-based …
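
The implication-graph check for 2SAT described in the snippet above can be sketched as follows. This is a minimal illustration under stated assumptions, not code from the slides: literals are encoded as nonzero integers (`3` for a variable, `-3` for its negation), each clause is a pair of literals, and the function names are hypothetical. It uses plain per-variable path checks, as the slide describes, rather than the faster strongly-connected-components formulation.

```python
from collections import defaultdict


def implication_graph(clauses):
    """Clause (a or b) contributes the implications not-a -> b and not-b -> a."""
    graph = defaultdict(set)
    for a, b in clauses:
        graph[-a].add(b)
        graph[-b].add(a)
    return graph


def reachable(graph, src, dst):
    """Depth-first search: is there a path from src to dst?"""
    stack, seen = [src], {src}
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return False


def two_sat_satisfiable(clauses):
    """Unsatisfiable iff some variable x has paths x -> not-x and not-x -> x."""
    graph = implication_graph(clauses)
    variables = {abs(lit) for clause in clauses for lit in clause}
    return not any(
        reachable(graph, v, -v) and reachable(graph, -v, v) for v in variables
    )


# (x or y), (not-x or y), (x or not-y), (not-x or not-y): no assignment works
contradictory = [(1, 2), (-1, 2), (1, -2), (-1, -2)]
# (x or y), (not-x or y): satisfied by y = True
consistent = [(1, 2), (-1, 2)]
```

With these definitions, `two_sat_satisfiable(contradictory)` evaluates to `False` and `two_sat_satisfiable(consistent)` to `True`. Each path check is linear in the size of the graph, so the whole test is polynomial, which is the point the slide makes.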

Propositional Logic: More on Normal Forms

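PROPOSITIONAL LOGIC: MORE ON NORMAL FORMS. VL Logic, Lecture 3, WS 20/21. Armin Biere, Martina Seidl, Institute for Formal Models and Verification, Johannes Kepler University Linz.

PROPOSITIONAL FORMULAS: SATISFIABILITY EQUIVALENCES (VL Logic Part I: Propositional Logic)

Recap: Semantic Equivalence

Two formulas $\phi$ and $\psi$ are semantically equivalent (written as $\phi \Leftrightarrow \psi$) iff for all interpretations $[\cdot]_\nu$ it holds that $[\phi]_\nu = [\psi]_\nu$.

- $\Leftrightarrow$ is a meta-symbol, i.e., it is not part of the language.
- $\phi \Leftrightarrow \psi$ iff $\phi \leftrightarrow \psi$ is valid, i.e., we can express semantics by means of syntactics.
- If $\phi$ and $\psi$ are not equivalent, we write $\phi \not\Leftrightarrow \psi$.

Example:

- $a \lor \neg a \not\Leftrightarrow b \to \neg b$
- $(a \lor b) \land \neg(a \lor b) \Leftrightarrow \bot$
- $a \lor \neg a \Leftrightarrow b \lor \neg b$
- $a \leftrightarrow (b \leftrightarrow c) \Leftrightarrow (a \leftrightarrow b) \leftrightarrow c$

Satisfiability Equivalence

Two formulas $\phi$ and $\psi$ are satisfiability-equivalent (written as $\phi \Leftrightarrow_{SAT} \psi$) iff both formulas are satisfiable or both are contradictory.

- Satisfiability-equivalent formulas are not necessarily satisfied by the same assignments.
- Satisfiability equivalence is a weaker property than semantic equivalence.
- Often sufficient for simplification rules: if the complicated formula is satisfiable, then the simplified formula is also satisfiable.

Example: Satisfiability Equivalence

Positive pure literal elimination rule: if a literal $x$ occurs in an NNF formula but $\neg x$ does not occur in this formula, then $x$ can be substituted by $\top$. The resulting formula is satisfiability-equivalent.

Example:

- $x \Leftrightarrow_{SAT} \top$, but $x \not\Leftrightarrow \top$
- $(a \land b) \lor (\neg c \land a) \Leftrightarrow_{SAT} b \lor \neg c$, but $(a \land b) \lor (\neg c \land a) \not\Leftrightarrow b \lor \neg c$

PROPOSITIONAL FORMULAS: FUNCTIONAL COMPLETENESS (VL Logic Part I: Propositional Logic)

Examples of Semantic Equivalences (1/2)

| Equivalence | Equivalence | Name |
|---|---|---|
| $\phi \land \psi \Leftrightarrow \psi \land \phi$ | $\phi \lor \psi \Leftrightarrow \psi \lor \phi$ | commutativity |
| $\phi \land (\psi \land \gamma) \Leftrightarrow (\phi \land \psi) \land \gamma$ | $\phi \lor (\psi \lor \gamma) \Leftrightarrow (\phi \lor \psi) \lor \gamma$ | associativity |
| $\phi \land (\phi \lor \psi) \Leftrightarrow \phi$ | $\phi \lor (\phi \land \psi) \Leftrightarrow \phi$ | absorption |
| $\phi \land (\psi \lor \gamma) \Leftrightarrow (\phi \land \psi) \lor (\phi \land \gamma)$ | $\phi \lor (\psi \land \gamma) \Leftrightarrow (\phi \lor \psi) \land (\phi \lor \gamma)$ | distributivity |
| $\neg(\phi \land \psi) \Leftrightarrow \neg\phi \lor \neg\psi$ | $\neg(\phi \lor \psi) \Leftrightarrow \neg\phi \land \neg\psi$ | laws of De Morgan |
| $\phi \leftrightarrow \psi \Leftrightarrow (\phi \to \psi) \land (\psi \to \phi)$ | $\phi \leftrightarrow \psi \Leftrightarrow (\phi \land \psi) \lor (\neg\phi \land \neg\psi)$ | synt. … |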
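
The difference between semantic equivalence and satisfiability equivalence in the snippet above can be checked by brute force over truth tables. The sketch below is an illustration only, not material from the lecture: formulas are modelled as Python functions over an assignment dictionary, and all function names are assumptions made for this example. It replays the pure-literal example, where `a` is replaced by true in (a ∧ b) ∨ (¬c ∧ a).

```python
from itertools import product


def assignments(variables):
    """Yield every truth assignment for the given variable names."""
    for values in product([False, True], repeat=len(variables)):
        yield dict(zip(variables, values))


def satisfiable(f, variables):
    return any(f(v) for v in assignments(variables))


def semantically_equivalent(f, g, variables):
    """Same truth value under every interpretation."""
    return all(f(v) == g(v) for v in assignments(variables))


def sat_equivalent(f, g, variables):
    """Both satisfiable, or both contradictory."""
    return satisfiable(f, variables) == satisfiable(g, variables)


names = ["a", "b", "c"]

def original(v):
    return (v["a"] and v["b"]) or (not v["c"] and v["a"])

def simplified(v):  # result of substituting true for the pure literal a
    return v["b"] or not v["c"]

same_sat = sat_equivalent(original, simplified, names)               # True
same_meaning = semantically_equivalent(original, simplified, names)  # False
```

The two formulas disagree, for instance, when `a` is false and `b` is true, which is why the rule preserves satisfiability but not meaning. The enumeration is exponential in the number of variables, so it is only useful for small examples like this one.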