The Complexity of Disjunction in Intuitionistic Logic

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

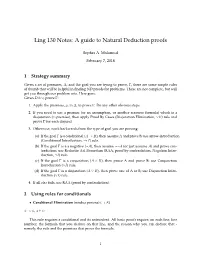

Ling 130 Notes: a Guide to Natural Deduction Proofs

Ling 130 Notes: A guide to Natural Deduction proofs. Sophia A. Malamud, February 7, 2018

1 Strategy summary

Given a set of premises, ∆, and the goal you are trying to prove, Γ, there are some simple rules of thumb that will be helpful in finding ND proofs for problems. These are not complete, but will get you through our problem sets. Here goes:

Given ∆, prove Γ:

1. Apply the premises, pᵢ in ∆, to prove Γ. Do any other obvious steps.

2. If you need to use a premise (or an assumption, or another resource formula) which is a disjunction (∨-premise), then apply the Proof By Cases (Disjunction Elimination, ∨E) rule and prove Γ for each disjunct.

3. Otherwise, work backwards from the type of goal you are proving:

(a) If the goal Γ is a conditional (A → B), then assume A and prove B; use the arrow-introduction (Conditional Introduction, →I) rule.

(b) If the goal Γ is a negative (¬A), then assume ¬¬A (or just assume A) and prove a contradiction; use the Reductio Ad Absurdum (RAA, proof by contradiction, Negation Introduction, ¬I) rule.

(c) If the goal Γ is a conjunction (A ∧ B), then prove A and prove B; use the Conjunction Introduction (∧I) rule.

(d) If the goal Γ is a disjunction (A ∨ B), then prove one of A or B; use the Disjunction Introduction (∨I) rule.

4. If all else fails, use RAA (proof by contradiction).

2 Using rules for conditionals

• Conditional Elimination (modus ponens) (→E): φ → ψ, φ ⊢ ψ. This rule requires a conditional and its antecedent.
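The rules of thumb in this excerpt can be sketched as Lean 4 proof terms. This is only an illustration, not part of the Ling 130 notes: the proposition names `A`, `B`, `C` and the hypothesis names are assumptions chosen here, and each `example` pairs one strategy step with the core Lean term that plays the same role.

```lean
-- Step 2, Proof By Cases (Disjunction Elimination):
-- from A ∨ B, prove the goal C once for each disjunct.
example (A B C : Prop) (h : A ∨ B) (hA : A → C) (hB : B → C) : C :=
  Or.elim h hA hB

-- Step 3(a), Conditional Introduction: assume A, then prove B.
example (A B : Prop) (hB : B) : A → B :=
  fun _ => hB

-- Step 3(b), Negation Introduction: assume A and derive a contradiction.
example (A B : Prop) (hAB : A → B) (hnB : ¬B) : ¬A :=
  fun hA => hnB (hAB hA)

-- Step 3(c), Conjunction Introduction: prove A and prove B.
example (A B : Prop) (hA : A) (hB : B) : A ∧ B :=
  ⟨hA, hB⟩

-- Step 3(d), Disjunction Introduction: prove one of the disjuncts.
example (A B : Prop) (hA : A) : A ∨ B :=
  Or.inl hA

-- Conditional Elimination (modus ponens): a conditional plus its antecedent.
example (A B : Prop) (h : A → B) (hA : A) : B :=
  h hA
```

None of these terms uses a classical axiom, so they go through in intuitionistic logic as well.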

Starting the Dismantling of Classical Mathematics

Australasian Journal of Logic

Starting the Dismantling of Classical Mathematics

Ross T. Brady, La Trobe University, Melbourne, Australia

Dedicated to Richard Routley/Sylvan, on the occasion of the 20th anniversary of his untimely death.

1 Introduction

Richard Sylvan (né Routley) has been the greatest influence on my career in logic. We met at the University of New England in 1966, when I was a Master's student and he was one of my lecturers in the M.A. course in Formal Logic. He was an inspirational leader, who thought his own thoughts and was not afraid to speak his mind. I hold him in the highest regard. He was very critical of the standard Anglo-American way of doing logic, the so-called classical logic, which can be seen in everything he wrote. One of his many critical comments was: “Gödel's (First) Theorem would not be provable using a decent logic”. This contribution, written to honour him and his works, will examine this point among some others.

Hilbert referred to non-constructive set theory based on classical logic as “Cantor's paradise”. In this historical setting, the constructive logic and mathematics concerned was that of intuitionism. (The Preface of Mendelson [2010] refers to this.) We wish to start the process of dismantling this classical paradise, and more generally classical mathematics. Our starting point will be the various diagonal-style arguments, where we examine whether the Law of Excluded Middle (LEM) is implicitly used in carrying them out. This will include the proof of Gödel's First Theorem, and also the proof of the undecidability of Turing's Halting Problem.

Ten Basic Rules of Inference

TEN BASIC RULES OF INFERENCE

• Negation Introduction (~I – indirect proof IP): Assume p; Get q & ~q ⊢ ~p
• Negation Elimination (~E – version of DN): ~~p → p
• Disjunction Introduction (vI – addition ADD): p ⊢ p v q
• Disjunction Elimination (vE – version of CD): p v q, p → r, q → r ⊢ r
• Conditional Introduction (→I – conditional proof CP): Assume p; Get q ⊢ p → q
• Conditional Elimination (→E – modus ponens MP): p → q, p ⊢ q
• Biconditional Introduction (↔I – version of ME): p → q, q → p ⊢ p ↔ q
• Biconditional Elimination (↔E – version of ME): p ↔ q ⊢ p → q or ⊢ q → p
• Conjunction Introduction (&I – conjunction CONJ): p, q ⊢ p & q
• Conjunction Elimination (&E – simplification SIMP): p & q ⊢ p

IMPORTANT DERIVED RULES OF INFERENCE

• Modus Tollens (MT): p → q, ~q ⊢ ~p
• Constructive Dilemma (CD): p v q, p → r, q → s ⊢ r v s
• Hypothetical Syllogism (HS): p → q, q → r ⊢ p → r
• Repeat (RE): p ⊢ p
• Disjunctive Syllogism (DS): p v q, ~p ⊢ q
• Contradiction (CON): p, ~p ⊢ Any wff
• Absorption (ABS): p → q ⊢ p → (p & q)
• Theorem Introduction (TI): Introduce any tautology, e.g., ~(P & ~P)

EQUIVALENCES

• De Morgan's Law (DM): ~(p & q) :: (~p v ~q); ~(p v q) :: (~p & ~q)
• Material Implication (MI): (p → q) :: (~p v q)
• Commutation (COM): (p v q) :: (q v p); (p & q) :: (q & p)
• Material Equivalence (ME): (p ↔ q) :: [(p & q) v (~p & ~q)]; (p ↔ q) :: [(p → q) & (q → p)]
• Association (ASSOC): [p v (q v r)] :: [(p v q) v r]; [p & (q & r)] :: [(p & q) & r]
• Exportation (EXP): [(p & q) → r] :: [p → (q → r)]
• Tautology (TAUT): p :: (p & p); p :: (p v p)
• Distribution (DIST): [p & (q v r)] :: [(p & q) v (p & r)]; [p v (q & r)] :: [(p v q) & (p v r)]
• Double Negation (DN): p :: ~~p
• Conditional-Biconditional Refutation Tree Rules: ~(p → q) :: (p & ~q); ~(p ↔ q) :: [(p & ~q) v (~p & q)]
• Transposition (TRANS): (p → q) :: (~q → ~p)

CATEGORICAL SYLLOGISM RULES (e.g., ∃x(Fx) / ⊢ Fy).
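A few of the derived rules on this sheet can be checked mechanically. The Lean 4 sketch below is an illustration under assumptions made here (the names `p`, `q`, `r`, `s` and the hypothesis names are not from the sheet). It also marks one point the sheet does not: the first four rules have proofs that need no classical axiom, while double negation elimination is proved here by appeal to `Classical.byContradiction`.

```lean
-- Modus Tollens (MT): p → q, ~q ⊢ ~p
example (p q : Prop) (h : p → q) (hnq : ¬q) : ¬p :=
  fun hp => hnq (h hp)

-- Hypothetical Syllogism (HS): p → q, q → r ⊢ p → r
example (p q r : Prop) (h₁ : p → q) (h₂ : q → r) : p → r :=
  fun hp => h₂ (h₁ hp)

-- Disjunctive Syllogism (DS): p v q, ~p ⊢ q
example (p q : Prop) (h : p ∨ q) (hnp : ¬p) : q :=
  Or.elim h (fun hp => absurd hp hnp) (fun hq => hq)

-- Constructive Dilemma (CD): p v q, p → r, q → s ⊢ r v s
example (p q r s : Prop) (h : p ∨ q) (hr : p → r) (hs : q → s) : r ∨ s :=
  Or.elim h (fun hp => Or.inl (hr hp)) (fun hq => Or.inr (hs hq))

-- Double negation elimination, proved here with a classical axiom.
example (p : Prop) (h : ¬¬p) : p :=
  Classical.byContradiction h
```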

Logical Verification Course Notes

Logical Verification Course Notes. Femke van Raamsdonk, Vrije Universiteit Amsterdam, autumn 2008

Contents

1 1st-order propositional logic: 1.1 Formulas; 1.2 Natural deduction for intuitionistic logic; 1.3 Detours in minimal logic; 1.4 From intuitionistic to classical logic; 1.5 1st-order propositional logic in Coq

2 Simply typed λ-calculus: 2.1 Types; 2.2 Terms; 2.3 Substitution; 2.4 Beta-reduction; 2.5 Curry-Howard-De Bruijn isomorphism; 2.6 Coq

3 Inductive types: 3.1 Expressivity; 3.2 Universes of Coq; 3.3 Inductive types; 3.4 Coq; 3.5 Inversion

4 1st-order predicate logic: 4.1 Terms and formulas; 4.2 Natural deduction (4.2.1 Intuitionistic logic; 4.2.2 Minimal logic); 4.3 Coq

5 Program extraction: 5.1 Program specification; 5.2 Proof of existence; 5.3 Program extraction; 5.4 Insertion sort; 5.5 Coq

6 λ-calculus with dependent types: 6.1 Dependent types: introduction; 6.2 λP; 6.3 Predicate logic in λP

7 Second-order propositional logic: 7.1 Formulas; 7.2 Intuitionistic logic; 7.3 Minimal logic; 7.4 Classical logic

8 Polymorphic λ-calculus: 8.1 Polymorphic types: introduction; 8.2 λ2; 8.3 Properties of λ2; 8.4 Expressiveness of λ2

Logic and Proof Release 3.18.4

Logic and Proof, Release 3.18.4. Jeremy Avigad, Robert Y. Lewis, and Floris van Doorn. Sep 10, 2021

CONTENTS

1 Introduction: 1.1 Mathematical Proof; 1.2 Symbolic Logic; 1.3 Interactive Theorem Proving; 1.4 The Semantic Point of View; 1.5 Goals Summarized; 1.6 About this Textbook

2 Propositional Logic: 2.1 A Puzzle; 2.2 A Solution; 2.3 Rules of Inference; 2.4 The Language of Propositional Logic; 2.5 Exercises

3 Natural Deduction for Propositional Logic: 3.1 Derivations in Natural Deduction; 3.2 Examples; 3.3 Forward and Backward Reasoning; 3.4 Reasoning by Cases; 3.5 Some Logical Identities; 3.6 Exercises

4 Propositional Logic in Lean: 4.1 Expressions for Propositions and Proofs; 4.2 More commands

Propositional Logic

Propositional logic

Readings: Sections 1.1 and 1.2 of Huth and Ryan.

In this module, we will consider propositional logic, which will look familiar to you from Math 135 and CS 251. The difference here is that we first define a formal proof system and practice its use before talking about a semantic interpretation (which will also be familiar) and showing that these two notions coincide.

Declarative sentences (1.1)

A proposition or declarative sentence is one that can, in principle, be argued as being true or false. Examples: “My car is green” or “Susan was born in Canada”. Many sentences are not declarative, such as “Help!”, “What time is it?”, or “Get me something to eat.” The declarative sentences above are atomic; they cannot be decomposed further. A sentence like “My car is green AND you do not have a car” is a compound sentence or compositional sentence.

To clarify the manipulations we perform in logical proofs, we will represent declarative sentences symbolically by atoms such as p, q, r. (We avoid t, f, T, F for reasons which will become evident.) Compositional sentences will be represented by formulas, which combine atoms with connectives. Formulas are intended to symbolically represent statements in the type of mathematical or logical reasoning we have done in the past. Our standard set of connectives will be ¬, ∧, ∨, and →. (In Math 135, you also used ↔, which we will not use.) Soon, we will describe the set of formulas as a formal language; for the time being, we use an informal description.

Isabelle for Philosophers∗

Isabelle for Philosophers∗. Ben Blumson, September 20, 2019

It is unworthy of excellent men to lose hours like slaves in the labour of calculation which could safely be relegated to anyone else if machines were used. Leibniz [11] p. 181.

This is an introduction to the Isabelle proof assistant aimed at philosophers and students of philosophy.¹

1 Propositional Logic

Imagine you are caught in an air raid in the Second World War. You might reason as follows: Either I will be killed in this raid or I will not be killed. Suppose that I will. Then even if I take precautions, I will be killed, so any precautions I take will be ineffective. But suppose I am not going to be killed. Then I won't be killed even if I neglect all precautions; so on this assumption, no precautions

∗ This draft is based on notes for students in my paradoxes and honours metaphysics classes; I'm grateful to the students for their help, especially Mark Goh, Zhang Jiang, Kee Wei Loo and Joshua Thong. I've also benefitted from discussion or correspondence on these issues with Zach Barnett, Sam Baron, David Braddon-Mitchell, Olivier Danvy, Paul Oppenheimer, Bruno Woltzenlogel Paleo, Michael Pelczar, David Ripley, Divyanshu Sharma, Manikaran Singh, Neil Sinhababu and Weng Hong Tang.

¹ I found a very useful introduction to be Nipkow [8]. Another still helpful, though unfortunately dated, introduction is Grechuk [6]. A person wishing to know how Isabelle works might first consult Paulson [9]. For the software itself and comprehensive documentation, see https://isabelle.in.tum.de/.

List of Rules of Inference

List of rules of inference

This is a list of rules of inference, logical laws that relate to mathematical formulae.

Introduction

Rules of inference are syntactical transform rules which one can use to infer a conclusion from a premise to create an argument. A set of rules can be used to infer any valid conclusion if it is complete, while never inferring an invalid conclusion, if it is sound. A sound and complete set of rules need not include every rule in the following list, as many of the rules are redundant, and can be proven with the other rules. Discharge rules permit inference from a subderivation based on a temporary assumption. Below, the notation indicates such a subderivation from the temporary assumption to .

Rules for classical sentential calculus

Sentential calculus is also known as propositional calculus.

Rules for negations: Reductio ad absurdum (or Negation Introduction); Reductio ad absurdum (related to the law of excluded middle); Noncontradiction (or Negation Elimination); Double negation elimination; Double negation introduction

Rules for conditionals: Deduction theorem (or Conditional Introduction); Modus ponens (or Conditional Elimination); Modus tollens

Rules for conjunctions: Adjunction (or Conjunction Introduction); Simplification (or Conjunction Elimination)

Rules for disjunctions: Addition (or Disjunction Introduction); Separation of Cases (or Disjunction Elimination); Disjunctive syllogism

Rules for biconditionals: Biconditional introduction; Biconditional Elimination

Rules of classical predicate calculus

In the following rules, is exactly like except for having the term everywhere has the free variable .

Universal Introduction (or Universal Generalization). Restriction 1: does not occur in .

The Systems of Relevance Logic

ARGUMENT Vol. 1 1/2011 pp. 87–102

The Systems of Relevance Logic

Ryszard MIREK, Kraków

ABSTRACT

The system R, or more precisely the pure implicational fragment R→, is considered by relevance logicians to be the most important. Another central system of relevance logic has been the logic E of entailment, which was supposed to capture strict relevant implication. The next system of relevance logic is RM, or R-mingle. The question is whether adding the mingle axiom to R→ yields the pure implicational fragment RM→ of the system. As concerns the weak systems, there are at least two approaches to the problem. First of all, it is possible to restrict the validity of some theorems. In another approach we can investigate even weaker logics which have no theorems and are characterized only by rules of deducibility.

1. THE SYSTEM OF NATURAL DEDUCTION

The central aim of relevance logicians has been to avoid the paradoxes of material and strict implication. In other words, according to them, the heart of logic lies in the notion “if […] then [...]”. Among the material paradoxes the following are known:

M1. α → (β → α) (positive paradox);
M2. ~ α → (α → β);
M3. (α → β) ∨ (β → α);
M4. (α → β) ∨ (β → γ).

In turn, among the strict paradoxes the following are known:

S1. α → (β → β);
S2. α → (β ∨ ~ β);
S3. (α ∧ ¬ α) → β (ex falso quodlibet).

Relevance logicians have claimed that these theses are counterintuitive. According to them, in each of them the antecedent seems irrelevant to the consequent.
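To see why relevance logicians treat these theses as a problem, it helps to check that they really are theorems of the standard systems. The Lean 4 sketch below is an illustration only, with proposition names chosen to match the labels in the excerpt. It shows that M1, M2, S1 and S3 have short proofs that use no classical axiom, while the proofs given here for M3 and S2 go through the law of excluded middle (`Classical.em`).

```lean
-- M1, the positive paradox: the proof ignores β entirely.
example (α β : Prop) : α → (β → α) :=
  fun ha _ => ha

-- M2: from ¬α, any conditional with antecedent α follows.
example (α β : Prop) : ¬α → (α → β) :=
  fun hna ha => absurd ha hna

-- S1: the antecedent α plays no role in the proof.
example (α β : Prop) : α → (β → β) :=
  fun _ hb => hb

-- S3, ex falso quodlibet.
example (α β : Prop) : (α ∧ ¬α) → β :=
  fun h => absurd h.left h.right

-- M3, proved here by cases on α using excluded middle.
example (α β : Prop) : (α → β) ∨ (β → α) :=
  Or.elim (Classical.em α)
    (fun ha => Or.inr (fun _ => ha))
    (fun hna => Or.inl (fun ha => absurd ha hna))

-- S2: the consequent is an instance of excluded middle.
example (α β : Prop) : α → (β ∨ ¬β) :=
  fun _ => Classical.em β
```

In the proofs of M1, S1 and S2 the bound hypothesis for the antecedent is discarded (`_`), which is one concrete way to read the complaint that the antecedent is irrelevant to the consequent.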

Rules of Inference

Appendix I: Rules of Inference

What follows are graphic representations for each of the 15 Rules of Inference, followed by a brief discussion, that will be used in this course. This supplement to the material in the Manual Part II is intended to provide an alternative explanation of what each rule requires and what results from its application, in a more condensed, visual way that may be more easily accessible for some learners.

Each rule is presented as an “input-output” machine. The “input(s)” represent the type of statement to which you can apply a given rule, or the type of statement or statements required to be “in play” in order to apply the rule. These statements could be premises that are given at the outset of the argument, derived statements or even assumptions. In the diagram the input is presented as a type of statement. Any substitution instance of this type can be a suitable input. The “output” is the result, the inference, or the conclusion you can draw forward when the rule is validly applied to the input(s). The output statement would be the statement you add to your left-hand derivation column, and justify with the rule you are applying. Within each box is an explanation of what the rule does to any given input to achieve the output.

Each rule works on a main logical operator, or with a quantifier. Each rule is unique, reflecting the specific meaning of the logical sign (quantifier or operator) addressed by the rule.

Quantifier rules

Universal Elimination E

Input: (x) Bx
1) ELIMINATE the universal quantifier
2) Change the variable (x, y, or z) to an individual
Output: Ba

Discussion: You can use this rule on ANY universally quantified statement, at any time in a proof.
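The input-output reading of Universal Elimination can be sketched in Lean 4, where a universally quantified statement behaves like a function from individuals to proofs. The predicate `B` and the individual `a` follow the diagram's `Bx` and `Ba`; the domain type `U` and the hypothesis name `h` are assumptions added here for illustration.

```lean
-- Input: h, a proof of (x) Bx. Output: a proof of Ba, obtained by
-- applying h to the chosen individual a.
example {U : Type} (B : U → Prop) (a : U) (h : ∀ x, B x) : B a :=
  h a

-- The rule can be reused at any point and for any individual.
example {U : Type} (B : U → Prop) (a b : U) (h : ∀ x, B x) : B a ∧ B b :=
  ⟨h a, h b⟩
```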

Chapter 6: Formal Proofs and Boolean Logic

Chapter 6: Formal Proofs and Boolean Logic

The Fitch program, like the system F, uses “introduction” and “elimination” rules. The ones we've seen so far deal with the logical symbol =. The next group of rules deals with the Boolean connectives ∧, ∨, and ¬.

§ 6.1 Conjunction rules

Conjunction Elimination (∧ Elim)

P1 ∧ … ∧ Pi ∧ … ∧ Pn

▷ Pi

This rule tells you that if you have a conjunction in a proof, you may enter, on a new line, any of its conjuncts. (Pi here represents any of the conjuncts, including the first or the last.) Notice this important point: the conjunction to which you apply ∧ Elim must appear by itself on a line in the proof. You cannot apply this rule to a conjunction that is embedded as part of a larger sentence. For example, this is not a valid use of ∧ Elim:

1. ¬(Cube(a) ∧ Large(a))

▷ 2. ¬Cube(a) ✗ ∧ Elim: 1

The reason this is not a valid use of the rule is that ∧ Elim can only be applied to conjunctions, and the line that this “proof” purports to apply it to is a negation. And it's a good thing that this move is not allowed, for the inference above is not valid: from the premise that a is not a large cube it does not follow that a is not a cube. a might well be a small cube (and hence not a large cube, but still a cube). This same restriction (the rule applies to the sentence on the entire line, and not to an embedded sentence) holds for all of the rules of F, by the way.
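The point about embedded conjunctions can be checked in Lean 4. This is a sketch for illustration, not part of the Fitch system: `P` and `Q` stand in for `Cube(a)` and `Large(a)`, and the counterexample instantiates them with `True` and `False` (a cube that is not large).

```lean
-- ∧ Elim on a top-level conjunction: either conjunct may be extracted.
example (P Q : Prop) (h : P ∧ Q) : P := h.left
example (P Q : Prop) (h : P ∧ Q) : Q := h.right

-- The premise of the bad inference can hold: True ∧ False is refutable.
example : ¬(True ∧ False) :=
  fun h => h.right

-- The step from ¬(P ∧ Q) to ¬P is not valid in general. If it were,
-- taking P := True and Q := False would yield ¬True, which is absurd.
example : ¬(∀ P Q : Prop, ¬(P ∧ Q) → ¬P) :=
  fun h => h True False (fun hpq => hpq.right) True.intro
```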

Introduction

Chapter 1 Introduction

According to Wikipedia, logic is the study of the principles of valid inferences and demonstration. From the breadth of this definition it is immediately clear that logic constitutes an important area in the disciplines of philosophy and mathematics. Logical tools and methods also play an essential role in the design, specification, and verification of computer hardware and software. It is these applications of logic in computer science which will be the focus of this course. In order to gain a proper understanding of logic and its relevance to computer science, we will need to draw heavily on the much older logical traditions in philosophy and mathematics. We will discuss some of the relevant history of logic and pointers to further reading throughout these notes. In this introduction, we give only a brief overview of the contents and approach of this class.

The course is divided into four parts:

I. Proofs as Evidence for Truth
II. Proofs as Programs
III. Proofs as Computations
IV. Proofs as Refutations

Proofs are central in all parts of the course, and give it its constructive nature. In each part, we will exhibit connections between proofs and forms of computations studied in computer science. These connections will take quite different forms, which shows the richness of logic as a foundational discipline at the nexus between philosophy, mathematics, and computer science.

In Part I we establish the basic vocabulary and systematically study propositions and proofs, mostly from a philosophical perspective. The treatment will be rather formal in order to permit an easy transition into computational applications.