A Study of Structure-Exploiting SQP Algorithms for an Optimal Control Problem with Coupled Hyperbolic and Ordinary Differential Equation Constraints

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


University of California, San Diego

UNIVERSITY OF CALIFORNIA, SAN DIEGO

Computational Methods for Parameter Estimation in Nonlinear Models

A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Physics with a Specialization in Computational Physics by Bryan Andrew Toth.

Committee in charge: Professor Henry D. I. Abarbanel (Chair), Professor Philip Gill, Professor Julius Kuti, Professor Gabriel Silva, Professor Frank Wuerthwein.

2011. Copyright Bryan Andrew Toth, 2011. All rights reserved.

The dissertation of Bryan Andrew Toth is approved, and it is acceptable in quality and form for publication on microfilm and electronically. University of California, San Diego, 2011.

DEDICATION: To my grandparents, August and Virginia Toth and Willem and Jane Keur, who helped put me on a lifelong path of learning.

EPIGRAPH: An Expert: One who knows more and more about less and less, until eventually he knows everything about nothing. (Source unknown)

TABLE OF CONTENTS

Signature Page
Dedication
Epigraph
Table of Contents
List of Figures
List of Tables
Acknowledgements
Vita and Publications
Abstract of the Dissertation
Chapter 1 Introduction
1.1 Dynamical Systems
1.1.1 Linear and Nonlinear Dynamics
1.1.2 Chaos
1.1.3 Synchronization
1.2 Parameter Estimation
1.2.1 Kalman Filters
1.2.2 Variational Methods
1.2.3 Parameter Estimation in Nonlinear Systems
1.3 Dissertation Preview
Chapter 2 Dynamical State and Parameter Estimation
2.1 Introduction
2.2 DSPE Overview
2.3 Formulation
2.3.1 Least Squares Minimization

Chapter 8 Constrained Optimization 2: Sequential Quadratic Programming, Interior Point and Generalized Reduced Gradient Methods

8.1 Introduction

In the previous chapter we examined the necessary and sufficient conditions for a constrained optimum. We did not, however, discuss any algorithms for constrained optimization. That is the purpose of this chapter. The three algorithms we will study are three of the most common. Sequential Quadratic Programming (SQP) is a very popular algorithm because of its fast convergence properties. It is available in MATLAB and is widely used. The Interior Point (IP) algorithm has grown in popularity over the past 15 years and recently became the default algorithm in MATLAB. It is particularly useful for solving large-scale problems. The Generalized Reduced Gradient method (GRG) has been shown to be effective on highly nonlinear engineering problems and is the algorithm used in Excel. SQP and IP share a common background. Both of these algorithms apply the Newton-Raphson (NR) technique for solving nonlinear equations to the KKT equations for a modified version of the problem. Thus we will begin with a review of the NR method.

8.2 The Newton-Raphson Method for Solving Nonlinear Equations

Before we get to the algorithms, there is some background we need to cover first. This includes reviewing the Newton-Raphson (NR) method for solving sets of nonlinear equations.

8.2.1 One Equation with One Unknown

The NR method is used to find the solution to sets of nonlinear equations. For example, suppose we wish to find the solution to the equation:

$$x + 2 = e^x$$

We cannot solve for x directly.
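The excerpt stops before the NR iteration itself is written down, so the following is only a minimal sketch of the standard one-variable update x_{k+1} = x_k - f(x_k)/f'(x_k). It assumes the example equation reads x + 2 = e^x, rewritten as f(x) = x + 2 - e^x = 0. The function name `newton_raphson`, the starting point and the tolerance are illustrative choices, not taken from the chapter.

```python
import math


def newton_raphson(f, df, x0, tol=1e-12, max_iter=50):
    """Find a root of f from the starting guess x0 with Newton-Raphson."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x
        # Linearize f at x and step to the root of the tangent line.
        x -= fx / df(x)
    raise RuntimeError("Newton-Raphson did not converge")


# Assumed example: x + 2 = e^x rewritten as f(x) = x + 2 - e^x = 0.
def f(x):
    return x + 2.0 - math.exp(x)


def df(x):
    return 1.0 - math.exp(x)


root = newton_raphson(f, df, x0=1.0)
print(root)
```

Starting from x0 = 1.0 the iteration settles on the positive root; this equation also has a negative root, and which one is found depends on the starting guess.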

A Lifted Linear Programming Branch-And-Bound Algorithm for Mixed Integer Conic Quadratic Programs Juan Pablo Vielma, Shabbir Ahmed, George L

A Lifted Linear Programming Branch-and-Bound Algorithm for Mixed Integer Conic Quadratic Programs. Juan Pablo Vielma, Shabbir Ahmed, George L. Nemhauser, H. Milton Stewart School of Industrial and Systems Engineering, Georgia Institute of Technology, 765 Ferst Drive NW, Atlanta, GA 30332-0205, USA.

This paper develops a linear programming based branch-and-bound algorithm for mixed integer conic quadratic programs. The algorithm is based on a higher dimensional or lifted polyhedral relaxation of conic quadratic constraints introduced by Ben-Tal and Nemirovski. The algorithm is different from other linear programming based branch-and-bound algorithms for mixed integer nonlinear programs in that it is not based on cuts from gradient inequalities and it sometimes branches on integer feasible solutions. The algorithm is tested on a series of portfolio optimization problems. It is shown that it significantly outperforms commercial and open source solvers based on both linear and nonlinear relaxations.

Key words: nonlinear integer programming; branch and bound; portfolio optimization

History: February 2007.

1. Introduction

This paper deals with the development of an algorithm for the class of mixed integer nonlinear programming (MINLP) problems known as mixed integer conic quadratic programming problems. This class of problems arises from adding integrality requirements to conic quadratic programming problems (Lobo et al., 1998), and is used to model several applications from engineering and finance. Conic quadratic programming problems are also known as second order cone programming problems, and together with semidefinite and linear programming (LP) problems are special cases of the more general conic programming problems (Ben-Tal and Nemirovski, 2001a).

Quadratic Programming GIAN Short Course on Optimization: Applications, Algorithms, and Computation

Quadratic Programming. GIAN Short Course on Optimization: Applications, Algorithms, and Computation. Sven Leyffer, Argonne National Laboratory, September 12-24, 2016.

Outline

1. Introduction to Quadratic Programming: Applications of QP in Portfolio Selection; Applications of QP in Machine Learning
2. Active-Set Method for Quadratic Programming: Equality-Constrained QPs; General Quadratic Programs
3. Methods for Solving EQPs: Generalized Elimination for EQPs; Lagrangian Methods for EQPs

Introduction to Quadratic Programming

Quadratic Program (QP):

$$
\begin{aligned}
\underset{x}{\text{minimize}} \quad & \tfrac{1}{2} x^T G x + g^T x \\
\text{subject to} \quad & a_i^T x = b_i, \quad i \in \mathcal{E}, \\
& a_i^T x \ge b_i, \quad i \in \mathcal{I},
\end{aligned}
$$

where $G \in \mathbb{R}^{n \times n}$ is a symmetric matrix (the QP can be reformulated to have a symmetric Hessian), and $\mathcal{E}$ and $\mathcal{I}$ are the sets of equality and inequality constraints.

- Like LPs, QPs can be solved in a finite number of steps.
- Important class of problems with many applications, e.g. the quadratic assignment problem.
- Main computational component of SQP: Sequential Quadratic Programming for nonlinear optimization.

Convexity and global solvability:

- No assumption on the eigenvalues of $G$.
- If $G \succeq 0$ (positive semi-definite), then the QP is convex, so a global minimum can be found (if it exists).
- If $G$ is indefinite, then the QP may be globally solvable, or not. If $A_{\mathcal{E}}$ has full rank, then there exists a null-space basis $Z_{\mathcal{E}}$. The QP is convex if the "reduced Hessian" is positive semi-definite, $Z_{\mathcal{E}}^T G Z_{\mathcal{E}} \succeq 0$, where $Z_{\mathcal{E}}^T A_{\mathcal{E}} = 0$; it is then globally solvable (eliminate some variables using the equations).

Feasible set may be empty ..
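The outline lists "Lagrangian Methods for EQPs" but the excerpt ends before describing them. As a rough illustration only, an equality-constrained QP can be solved by assembling the first-order (KKT) conditions G x - Σ λ_i a_i = -g, a_i^T x = b_i into one linear system. The sketch below uses only the Python standard library; the helper names `solve_linear` and `solve_eqp` and the small test problem are hypothetical and do not come from the slides.

```python
def solve_linear(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(map(float, row)) + [float(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-14:
            raise ValueError("singular KKT matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for r in reversed(range(n)):
        s = sum(aug[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (aug[r][n] - s) / aug[r][r]
    return z


def solve_eqp(G, g, A, b):
    """Minimize 1/2 x^T G x + g^T x subject to a_i^T x = b_i.

    A is a list of constraint vectors a_i, one per equality constraint.
    Returns (x, multipliers) from the KKT system
        G x - sum_i lambda_i a_i = -g,    a_i^T x = b_i.
    """
    n, m = len(g), len(b)
    K = [[0.0] * (n + m) for _ in range(n + m)]
    for i in range(n):
        for j in range(n):
            K[i][j] = G[i][j]
    for k, a in enumerate(A):
        for i in range(n):
            K[i][n + k] = -a[i]  # gradient of the constraint term
            K[n + k][i] = a[i]   # the constraint row itself
    sol = solve_linear(K, [-gi for gi in g] + list(b))
    return sol[:n], sol[n:]


# Hypothetical problem: minimize x1^2 + x2^2 - 2 x1 - 5 x2  s.t.  x1 + x2 = 1.
G = [[2.0, 0.0], [0.0, 2.0]]
g = [-2.0, -5.0]
A = [[1.0, 1.0]]
b = [1.0]
x, lam = solve_eqp(G, g, A, b)
print(x, lam)
```

Solving the dense KKT system directly is reasonable only for small problems. It yields the minimizer only when the reduced Hessian is positive definite; otherwise the solution is merely a stationary point.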

Linear Programming

Lecture 18: Linear Programming

18.1 Overview

In this lecture we describe a very general problem called linear programming that can be used to express a wide variety of different kinds of problems. We can use algorithms for linear programming to solve the max-flow problem, solve the min-cost max-flow problem, find minimax-optimal strategies in games, and many other things. We will primarily discuss the setting and how to code up various problems as linear programs. At the end, we will briefly describe some of the algorithms for solving linear programming problems. Specific topics include:

- The definition of linear programming and simple examples.
- Using linear programming to solve max flow and min-cost max flow.
- Using linear programming to solve for minimax-optimal strategies in games.
- Algorithms for linear programming.

18.2 Introduction

In the last two lectures we looked at:

- Bipartite matching: given a bipartite graph, find the largest set of edges with no endpoints in common.
- Network flow (more general than bipartite matching).
- Min-Cost Max-flow (even more general than plain max flow).

Today, we'll look at something even more general that we can solve algorithmically: linear programming. (Except we won't necessarily be able to get integer solutions, even when the specification of the problem is integral.) Linear Programming is important because it is so expressive: many, many problems can be coded up as linear programs (LPs). This especially includes problems of allocating resources and business supply-chain applications. In business schools and Operations Research departments there are entire courses devoted to linear programming.

Integer Linear Programs

20. Integer Linear Programs

Many linear programming problems require certain variables to have whole number, or integer, values. Such a requirement arises naturally when the variables represent entities like packages or people that can not be fractionally divided, at least not in a meaningful way for the situation being modeled. Integer variables also play a role in formulating equation systems that model logical conditions, as we will show later in this chapter.

In some situations, the optimization techniques described in previous chapters are sufficient to find an integer solution. An integer optimal solution is guaranteed for certain network linear programs, as explained in Section 15.5. Even where there is no guarantee, a linear programming solver may happen to find an integer optimal solution for the particular instances of a model in which you are interested. This happened in the solution of the multicommodity transportation model (Figure 4-1) for the particular data that we specified (Figure 4-2).

Even if you do not obtain an integer solution from the solver, chances are good that you'll get a solution in which most of the variables lie at integer values. Specifically, many solvers are able to return an "extreme" solution in which the number of variables not lying at their bounds is at most the number of constraints. If the bounds are integral, all of the variables at their bounds will have integer values; and if the rest of the data is integral, many of the remaining variables may turn out to be integers, too.

MINLP with Combined Interior Point and Active Set Methods

MINLP with Combined Interior Point and Active Set Methods. Jose L. Mojica, Adam D. Lewis, John D. Hedengren, Brigham Young University. INFORMS 2013, Minneapolis, MN, Oct 9, 2013. APMonitor.com, APOPT.com.

Presentation Overview

- NLP Benchmarking
- Hock-Schittkowski
- Dynamic optimization
- Biological models
- Combining Interior Point and Active Set
- MINLP Benchmarking
- MacMINLP
- MINLP Model Predictive Control
- Chiller Thermal Energy Storage
- Unmanned Aerial Systems
- Future Developments

Overview of Benchmark Testing

NLP Benchmark Testing. Solvers: APOPT [1], BPOPT [1], IPOPT [2], SNOPT [3], MINOS [3]. Problem characteristics: Hock-Schittkowski, Dynamic Opt, SBML; Nonlinear Programming (NLP); Differential Algebraic Equations (DAEs); APMonitor Modeling Language.

$$
\begin{aligned}
\min \quad & J(x, y, u) \\
\text{s.t.} \quad & 0 = f\left(\frac{\partial x}{\partial t}, x, y, u\right) \\
& 0 = g(x, y, u) \\
& 0 \le h(x, y, u) \\
& x, y \in \mathbb{R}^n, \quad u \in \mathbb{R}^m
\end{aligned}
$$

MINLP Benchmark Testing. Solvers: APOPT [1], BPOPT [1], BONMIN [2]. Problem characteristics: MacMINLP, Industrial Test Set; Mixed Integer Nonlinear Programming (MINLP); Mixed Integer Differential Algebraic Equations (MIDAEs); APMonitor & AMPL Modeling Language.

$$
\begin{aligned}
\min \quad & J(x, y, u, z) \\
\text{s.t.} \quad & 0 = f\left(\frac{\partial x}{\partial t}, x, y, u, z\right) \\
& 0 = g(x, y, u, z) \\
& 0 \le h(x, y, u, z) \\
& x, y \in \mathbb{R}^n, \quad u \in \mathbb{R}^m, \quad z \in \mathbb{Z}^m
\end{aligned}
$$

[1] APS, LLC; [2] EPL; [3] SBS, Inc.

NLP Benchmark: Summary (494)

[Plot not reproduced: benchmark results on 494 problems for APOPT+BPOPT, APOPT 1.0, BPOPT 1.0, IPOPT 3.10, IPOPT 2.3, SNOPT 6.1 and MINOS 5.5. Vertical axis: Percentage (%), 0 to 100. Horizontal axis, 0.5 to 5: "Not worse than 2 times slower than" (label truncated).]

Specifying “Logical” Conditions in AMPL Optimization Models

Specifying “Logical” Conditions in AMPL Optimization Models. Robert Fourer, AMPL Optimization, www.ampl.com, 773-336-AMPL. INFORMS Annual Meeting, Phoenix, Arizona, 14-17 October 2012. Session SA15, Software Demonstrations.

New and Forthcoming Developments in the AMPL Modeling Language and System

Optimization modelers are often stymied by the complications of converting problem logic into algebraic constraints suitable for solvers. The AMPL modeling language thus allows various logical conditions to be described directly. Additionally, a new interface to the ILOG CP solver handles logic in a natural way not requiring conventional transformations.

AMPL News

- Free AMPL book chapters
- AMPL for Courses
- Extended function library
- Extended support for “logical” conditions
- AMPL driver for CPLEX Opt Studio “Concert” C++ interface
- Support for ILOG CP constraint programming solver
- Support for “logical” constraints in CPLEX
- INFORMS Impact Prize to ... Originators of AIMMS, AMPL, GAMS, LINDO, MPL. Awards presented Sunday 8:30-9:45, Conv Ctr West 101. Doors close 8:45!

AMPL Book

Chapters now free for download: www.ampl.com/BOOK/download.html. Bound copies remain available: purchase from usual

Chapter 11: Basic Linear Programming Concepts

Linear programming is a mathematical technique for finding optimal solutions to problems that can be expressed using linear equations and inequalities. If a real-world problem can be represented accurately by the mathematical equations of a linear program, the method will find the best solution to the problem. Of course, few complex real-world problems can be expressed perfectly in terms of a set of linear functions. Nevertheless, linear programs can provide reasonably realistic representations of many real-world problems, especially if a little creativity is applied in the mathematical formulation of the problem.

The subject of modeling was briefly discussed in the context of regulation. The regulation problems you learned to solve were very simple mathematical representations of reality. This chapter continues this trek down the modeling path. As we progress, the models will become more mathematical and more complex. The real world is always more complex than a model. Thus, as we try to represent the real world more accurately, the models we build will inevitably become more complex. You should remember the maxim discussed earlier that a model should only be as complex as is necessary in order to represent the real world problem reasonably well. Therefore, the added complexity introduced by using linear programming should be accompanied by some significant gains in our ability to represent the problem and, hence, in the quality of the solutions that can be obtained. You should ask yourself, as you learn more about linear programming, what the benefits of the technique are and whether they outweigh the additional costs.

Gauss-Newton SQP

TEMPO Spring School: Theory and Numerics for Nonlinear Model Predictive Control. Exercise 3: Gauss-Newton SQP. J. Andersson, M. Diehl, J. Rawlings, M. Zanon. University of Freiburg, March 27, 2015.

Gauss-Newton sequential quadratic programming (SQP)

In the exercises so far, we solved the NLPs with IPOPT. IPOPT is a popular open-source primal-dual interior point code employing so-called filter line-search to ensure global convergence. Other NLP solvers that can be used from CasADi include SNOPT, WORHP and KNITRO. In the following, we will write our own simple NLP solver implementing sequential quadratic programming (SQP).

Starting from a given initial guess for the primal and dual variables $(x^{(0)}, \lambda_g^{(0)})$, SQP solves the NLP by iteratively computing local convex quadratic approximations of the NLP at the current iterate $(x^{(k)}, \lambda_g^{(k)})$ and solving them by using a quadratic programming (QP) solver. For an NLP of the form:

$$
\begin{aligned}
\underset{x}{\text{minimize}} \quad & f(x) \\
\text{subject to} \quad & \underline{x} \le x \le \overline{x}, \quad \underline{g} \le g(x) \le \overline{g},
\end{aligned}
\tag{1}
$$

these quadratic approximations take the form:

$$
\begin{aligned}
\underset{\Delta x}{\text{minimize}} \quad & \tfrac{1}{2} \Delta x^{\top} \nabla_x^2 L(x^{(k)}, \lambda_g^{(k)}, \lambda_x^{(k)}) \, \Delta x + \nabla_x f(x^{(k)})^{\top} \Delta x \\
\text{subject to} \quad & \underline{x} - x^{(k)} \le \Delta x \le \overline{x} - x^{(k)}, \\
& \underline{g} - g(x^{(k)}) \le \frac{\partial g}{\partial x}(x^{(k)}) \, \Delta x \le \overline{g} - g(x^{(k)}),
\end{aligned}
\tag{2}
$$

where $L(x, \lambda_g, \lambda_x) = f(x) + \lambda_g^{\top} g(x) + \lambda_x^{\top} x$ is the so-called Lagrangian function. By solving this QP, we get the (primal) step $\Delta x^{(k)} := x^{(k+1)} - x^{(k)}$ as well as the Lagrange multipliers $\lambda_g^{(k+1)}$ and $\lambda_x^{(k+1)}$.
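To make the iteration concrete, here is a heavily simplified sketch, not the exercise's solution. It assumes a least-squares objective f(x) = 1/2 ||R(x)||^2, replaces the Lagrangian Hessian by the Gauss-Newton approximation J^T J, handles a single equality constraint g(x) = 0 (lower and upper bound on g both zero), drops the bounds on x, and takes full steps without any line search. All function names and the toy problem are hypothetical, and plain Python stands in for CasADi and a QP solver.

```python
import math


def solve_linear(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(map(float, row)) + [float(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-14:
            raise ValueError("singular KKT matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for r in reversed(range(n)):
        s = sum(aug[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (aug[r][n] - s) / aug[r][r]
    return z


def gauss_newton_sqp(residual, jac_res, constraint, jac_con, x, iters=30):
    """Full-step SQP for min 1/2 ||R(x)||^2 s.t. g(x) = 0 (scalar g)."""
    n = len(x)
    lam = 0.0
    for _ in range(iters):
        R, J = residual(x), jac_res(x)
        gval, a = constraint(x), jac_con(x)
        m = len(R)
        # Gauss-Newton Hessian B = J^T J and objective gradient J^T R.
        B = [[sum(J[k][i] * J[k][j] for k in range(m)) for j in range(n)]
             for i in range(n)]
        grad = [sum(J[k][i] * R[k] for k in range(m)) for i in range(n)]
        # KKT system of the QP: B dx + lam * a = -grad,  a^T dx = -g(x).
        K = [B[i] + [a[i]] for i in range(n)] + [list(a) + [0.0]]
        sol = solve_linear(K, [-v for v in grad] + [-gval])
        x = [xi + di for xi, di in zip(x, sol[:n])]
        lam = sol[n]
    return x, lam


# Toy problem: closest point on the unit circle to the target (1.0, 0.5).
target = (1.0, 0.5)
x_opt, lam_opt = gauss_newton_sqp(
    residual=lambda x: [x[0] - target[0], x[1] - target[1]],
    jac_res=lambda x: [[1.0, 0.0], [0.0, 1.0]],
    constraint=lambda x: x[0] ** 2 + x[1] ** 2 - 1.0,
    jac_con=lambda x: [2.0 * x[0], 2.0 * x[1]],
    x=[0.0, 1.0],
)
print(x_opt, lam_opt)
```

The Gauss-Newton approximation ignores the curvature of the constraint, so it tends to work well when the residual at the solution is small, as in this toy problem. With a large residual the full-step iteration can converge slowly or diverge, which is one reason practical solvers add globalization such as a line search.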

Chapter 7. Linear Programming and Reductions

Chapter 7: Linear programming and reductions

Many of the problems for which we want algorithms are optimization tasks: the shortest path, the cheapest spanning tree, the longest increasing subsequence, and so on. In such cases, we seek a solution that (1) satisfies certain constraints (for instance, the path must use edges of the graph and lead from s to t, the tree must touch all nodes, the subsequence must be increasing); and (2) is the best possible, with respect to some well-defined criterion, among all solutions that satisfy these constraints.

Linear programming describes a broad class of optimization tasks in which both the constraints and the optimization criterion are linear functions. It turns out an enormous number of problems can be expressed in this way. Given the vastness of its topic, this chapter is divided into several parts, which can be read separately subject to the following dependencies.

[Dependency diagram not reproduced. Parts: Flows and matchings; Introduction to linear programming; Duality; Games and reductions; Simplex.]

7.1 An introduction to linear programming

In a linear programming problem we are given a set of variables, and we want to assign real values to them so as to (1) satisfy a set of linear equations and/or linear inequalities involving these variables and (2) maximize or minimize a given linear objective function.

Figure 7.1 (a) The feasible region for a linear program. (b) Contour lines of the objective function: x1 + 6x2 = c for different values of the profit c. [Figure not reproduced: both panels plot x2 against x1 on axes from 0 to 400; panel (b) shows the contours c = 600, c = 1200 and c = 1500 and marks the optimum point, profit = $1900.]

7.1.1 Example: profit maximization

A boutique chocolatier has two products: its flagship assortment of triangular chocolates, called Pyramide, and the more decadent and deluxe Pyramide Nuit.
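As a small illustration of "assign real values so as to satisfy linear inequalities and maximize a linear objective", the sketch below brute-forces a two-variable LP by checking every intersection of two constraint boundaries. The objective x1 + 6x2 comes from the figure caption. The constraints x1 ≤ 200, x2 ≤ 300, x1 + x2 ≤ 400 and x1, x2 ≥ 0 are not stated in this excerpt; they are assumed here purely for illustration and happen to reproduce the $1900 optimum quoted in the caption. The function name `solve_lp_2d` is hypothetical.

```python
from itertools import combinations


def solve_lp_2d(c, constraints, eps=1e-9):
    """Maximize c[0]*x1 + c[1]*x2 subject to rows (a1, a2, b): a1*x1 + a2*x2 <= b.

    Enumerates intersections of pairs of constraint boundaries and keeps the
    best feasible one. Only sensible for tiny, bounded two-variable problems.
    """
    best = None
    for (a1, a2, b1), (a3, a4, b2) in combinations(constraints, 2):
        det = a1 * a4 - a2 * a3
        if abs(det) < eps:
            continue  # parallel boundaries do not meet in a single point
        # Cramer's rule for the 2x2 system of the two boundary lines.
        x1 = (b1 * a4 - a2 * b2) / det
        x2 = (a1 * b2 - b1 * a3) / det
        if all(p * x1 + q * x2 <= r + eps for p, q, r in constraints):
            value = c[0] * x1 + c[1] * x2
            if best is None or value > best[0]:
                best = (value, x1, x2)
    return best


# Assumed constraints (not given in the excerpt), each as a1*x1 + a2*x2 <= b.
constraints = [
    (1.0, 0.0, 200.0),   # x1 <= 200
    (0.0, 1.0, 300.0),   # x2 <= 300
    (1.0, 1.0, 400.0),   # x1 + x2 <= 400
    (-1.0, 0.0, 0.0),    # x1 >= 0
    (0.0, -1.0, 0.0),    # x2 >= 0
]
best = solve_lp_2d((1.0, 6.0), constraints)
print(best)
```

Enumerating vertices scales combinatorially with the number of constraints, so this is a teaching device rather than a practical algorithm; it relies on the fact that a bounded, feasible LP attains its optimum at a vertex of the feasible region.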

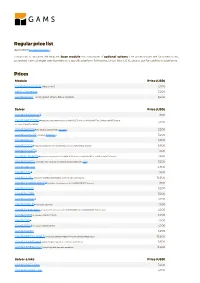

Standard Price List

Regular price list April 2021

This price list includes the required base module and a number of optional solvers. The prices shown are for unrestricted, perpetual named single user licenses on a specific platform (Windows, Linux, Mac OS X); please ask for additional platforms.

Prices

| Module | Price (USD) |
|---|---|
| GAMS/Base Module (required) | 3,200 |
| MIRO Connector | 3,200 |
| GAMS/Secure - encrypted Work Files Option | 3,200 |

| Solver | Price (USD) |
|---|---|
| GAMS/ALPHAECP [1] | 1,600 |
| GAMS/ANTIGONE [1] (requires the presence of a GAMS/CPLEX and a GAMS/SNOPT or GAMS/CONOPT license, includes GAMS/GLOMIQO) | 3,200 |
| GAMS/BARON [1] (for details please follow this link) | 3,200 |
| GAMS/CONOPT (includes CONOPT 4) | 3,200 |
| GAMS/CPLEX | 9,600 |
| GAMS/DECIS [1] (requires presence of a GAMS/CPLEX or a GAMS/MINOS license) | 9,600 |
| GAMS/DICOPT [1] | 1,600 |
| GAMS/GLOMIQO [1] (requires presence of a GAMS/CPLEX and a GAMS/SNOPT or GAMS/CONOPT license) | 1,600 |
| GAMS/IPOPTH (includes HSL-routines, for details please follow this link) | 3,200 |
| GAMS/KNITRO | 4,800 |
| GAMS/LGO [2] | 1,600 |
| GAMS/LINDO (includes GAMS/LINDOGLOBAL with no size restrictions) | 12,800 |
| GAMS/LINDOGLOBAL [2] (requires the presence of a GAMS/CONOPT license) | 1,600 |
| GAMS/MINOS | 3,200 |
| GAMS/MOSEK | 3,200 |
| GAMS/MPSGE [1] | 3,200 |
| GAMS/MSNLP [1] (includes LSGRG2) | 1,600 |
| GAMS/ODHeuristic (requires the presence of a GAMS/CPLEX or a GAMS/CPLEX-link license) | 3,200 |
| GAMS/PATH (includes GAMS/PATHNLP) | 3,200 |
| GAMS/SBB [1] | 1,600 |
| GAMS/SCIP [1] (includes GAMS/SOPLEX) | 3,200 |
| GAMS/SNOPT | 3,200 |
| GAMS/XPRESS-MINLP (includes GAMS/XPRESS-MIP and GAMS/XPRESS-NLP) | 12,800 |
| GAMS/XPRESS-MIP (everything but general nonlinear equations) | 9,600 |
| GAMS/XPRESS-NLP (everything but discrete variables) | 6,400 |

| Solver-Links | Price (USD) |
|---|---|
| GAMS/CPLEX Link | 3,200 |
| GAMS/GUROBI Link | 3,200 |
| GAMS/MOSEK Link | 1,600 |
| GAMS/XPRESS Link | 3,200 |

General information

The GAMS Base Module includes the GAMS Language Compiler, GAMS-APIs, and many utilities.