New York City Automated Decision System Task Force Comments Compilation

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Compliance with Special Education Regulations for the Provision of Services New York City Department of Education Report 2018-N-5 | September 2020

Compliance With Special Education Regulations for the Provision of Services New York City Department of Education Report 2018-N-5 | September 2020 Spotlight on Education Audit Highlights Objective To determine if the New York City Department of Education (DOE) arranged for eligible students to be placed in special education services and programs within the required time frames, and provided all of the services and programs recommended in the students’ Individualized Education Program (IEP). We also assessed the time frames for students to commence receiving services and programs. Our audit covered the period of July 1, 2016 through January 21, 2020, and included all students who were initially referred during the 2016-17 school year. About the Program Special education services and programs are designed to meet the educational and developmental needs of children with disabilities. Part 200 of the Regulations of the New York State Commissioner of Education (Regulations) stipulates the requirements for evaluating and placing students in special education. In New York City (NYC), DOE is responsible for determining eligibility for and providing special education services to such students. Specifically, the Regulations require that DOE arrange for the placement of eligible students in special education services and programs within 60 school days of receipt of parental consent to evaluate. However, arranging placement is not the end of the process as it is more important that eligible students be provided with the services and programs recommended in their IEPs in a timely manner. State Regulations do not define how long this should take. A report issued in August 2019 by New York University/Steinhardt’s Research Alliance for New York City Schools stated that the percentage of students with disabilities varies greatly across NYC – ranging from less than 5 percent in some areas to more than 25 percent in others – with disability rates higher in low-income neighborhoods. -

ACRONYM Reference Guide 2017.Docx FAMIS Security Unit

Richard Carlo Executive Director Financial Systems & Business Operations This collection of acronyms and jargon was produced by: Vivian M. Toro – Administrative Manager/Webmaster Financial Systems & Business Operations FAMIS Security Unit 65 Court Street, Brooklyn, NY 11201 [email protected] ACRONYM Reference Guide 2017.docx FAMIS Security Unit Quick Reference Guide Acronyms or jargon used within the NYC Department of Education A B C D E F G H I J K L M N O P Q R S T U V W X Y Z ACRONYM Reference Guide 2017.docx can be found at the following link: http://schools.nyc.gov/Offices/EnterpriseOperations/ChiefFinancialOfficer/DFSBO/KeyDocuments/default.htm For corrections or to add to this collection, please e-mail [email protected], webmaster for DFO & Financial Systems and Business Operations. A ACCES-VR - Adult Career & Continuing Education Services-Vocational Rehabilitation (aka VESID): Offers access to a full range of employment and independent living services that may be needed by persons with disabilities through their lives. AED - The Academy for Educational Development: AED PSO empowers schools to provide high expectations and high supports for ALL students by customizing professional development and coaching to the needs and interests of the principal and the entire school community. -

A Better Picture of Poverty What Chronic Absenteeism and Risk Load Reveal About NYC’s Lowest-Income Elementary Schools

A Better Picture of Poverty What Chronic Absenteeism and Risk Load Reveal About NYC’s Lowest-Income Elementary Schools by KIM NAUER, NICOLE MADER, GAIL ROBINSON AND TOM JACOBS WITH BRUCE CORY, JORDAN MOSS AND ARYN BLOODWORTH CENTER FOR NEW YORK CITY AFFAIRS THE MILANO SCHOOL OF INTERNATIONAL AFFAIRS, MANAGEMENT, AND URBAN POLICY November 2014 The Center for New York City Affairs is dedicated to advancing innovative public policies that strengthen neighborhoods, support families and reduce urban poverty. Our tools include rigorous analysis; journalistic research; candid public dialogue with stakeholders; and strategic planning with government officials, nonprofit practitioners and community residents. CONTENTS Executive Summary and Issue Highlights; Recommendations from the Field; Measuring the Weight of Poverty; A School’s Total Risk Load: 18 Factors; Back to School: Tackling Chronic Absenteeism; Three Years, Many Lessons: Mayor Bloomberg’s Task Force; Without a Home: Absenteeism Among Transient Students; School, Expanded: Mayor deBlasio’s New Community Schools; Do I Know You? The Need for Better Data-Sharing; Which Schools First? The Highest Poverty Schools Need Help; What Makes a Community School? Six Standards. Clara Hemphill, Interim Director; Kim Nauer, Education Research Director; Pamela Wheaton, Insideschools.org Managing Editor; Kendra Hurley, Senior Editor; Laura Zingmond, Senior Editor; Abigail Kramer, Associate Editor; Nicole Mader, Education Policy Research Analyst; Jacqueline Wayans, Events Coordinator; Additional -

Corrective Action Report Submission

Corinne Rello-Anselmi, Deputy Chancellor Division of Students with Disabilities and English Language Learners NEW YORK CITY DEPARTMENT OF EDUCATION OFFICE OF ENGLISH LANGUAGE LEARNERS 52 Chambers Street, Room 209 http://schools.nyc.gov/Academics/ELL Corrective Action Report Submission Ira Schwartz, Assistant Commissioner Office of Accountability 55 Hanson Place, Room 400 Brooklyn, New York 11217 January 28, 2013 Dear Ira, Below are the information and documents your office has requested as evidence of meeting targets and commitments as outlined in the Corrective Action Plan. After reviewing these items, if additional information is needed, please do not hesitate to let me know. As you will find, all first year targets have been met. We welcome feedback from the community and stakeholders as we continue to make strides and develop quality programs for English language learners. Sincerely, Angelica M. Infante, Chief Executive Officer, Office of English Language Learners 1 Summary of Major Accomplishments In September 2011, the New York City Department of Education and State Education Department reached an agreement as outlined in the Corrective Action Plan (CAP) for services for English Language Learners (ELLs). Issue Description CAP Target Status Steps Taken by the DOE Prior to Steps Taken by the DOE After CAP CAP 1) Some By August 31, 2012, Targets During the 2010-11 school During the 2011-12 school year, 98.2% of students were achieve a 75% met year, 93.2% of eligible students eligible students were administered the not reduction -

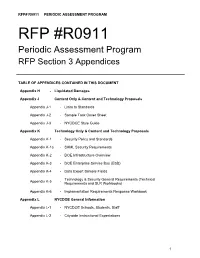

RFP #R0911 Periodic Assessment Program RFP Section 3 Appendices

RFP# R0911 PERIODIC ASSESSMENT PROGRAM RFP #R0911 Periodic Assessment Program RFP Section 3 Appendices TABLE OF APPENDICES CONTAINED IN THIS DOCUMENT Appendix H - Liquidated Damages Appendix J Content Only & Content and Technology Proposals Appendix J-1 - Links to Standards Appendix J-2 - Sample Task Cover Sheet Appendix J-3 - NYCDOE Style Guide Appendix K Technology Only & Content and Technology Proposals Appendix K-1 - Security Policy and Standards Appendix K-1a - SAML Security Requirements Appendix K-2 - DOE Infrastructure Overview Appendix K-3 - DOE Enterprise Service Bus (ESB) Appendix K-4 - Data Export Sample Fields Appendix K-5 - Technology & Security General Requirements (Technical Requirements and SLR Workbooks) Appendix K-6 - Implementation Requirements Response Workbook Appendix L NYCDOE General Information Appendix L-1 - NYCDOE Schools, Students, Staff Appendix L-2 - Citywide Instructional Expectations 1 RFP# R0911 PERIODIC ASSESSMENT PROGRAM Appendix H: LIQUIDATED DAMAGES 1.0. ASSESSMENT OF LIQUIDATED DAMAGES AS A REMEDY FOR NON-COMPLIANCE. 1.1. If the Contractor’s provision of any Services shall not comply with the terms, conditions and specifications of this Agreement as a result of any intentional, negligent and/or other act(s) of commission and/or omission of the Contractor and/or its agents, servants, employees, subcontractors, affiliates, partners (including, without limitation, general, limited, silent and apparent partners), directors, officers, volunteers, invitees, licensees, designees, assigns or any other representatives (expressed in this Appendix H jointly and severally as “Contractor Group”), the Contractor hereby stipulate and agree that the Board shall suffer losses and damages by reason of the inconvenience and impacts upon Board interests, operations and activities that shall result from any such act(s) of commission and/or omission. -

Empty Promises 6-15-09

Empty Promises: A Case Study of Restructuring and the Exclusion of English Language Learners in Two Brooklyn High Schools JUNE 2009 Advocates for Children of New York Table of Contents Executive Summary & Recommendations; Introduction; Methodology; The Impact of Restructuring Lafayette and Tilden on ELLs; I. The Closure of Lafayette and Tilden High Schools: A. History of Lafayette and Tilden; B. ELL Programs; C. Closures; D. Community Advocacy Around the Closures; II. The Fate of ELLs in the Restructuring of Lafayette and Tilden: A. Limited Access to Small Schools on Lafayette and Tilden Campuses; B. Poor Services for ELLs in Small Schools; C. Segregation of ELLs into Large Schools and ELL-Focused Small Schools; D. ELLs Left Behind: Service Cuts and Push Outs; Conclusion and Recommendations; Endnotes. EMPTY PROMISES: RESTRUCTURING & EXCLUSION OF ELLS IN TWO BROOKLYN HIGH SCHOOLS Acknowledgments Advocates for Children of New York (AFC) and Asian American Legal Defense and Education Fund (AALDEF) are grateful for the generous support to write this report provided by the Donors’ Education Collaborative, the Durst Family Foundation and the Ford Foundation. We would like to thank the many students, parents, school staff, community organizations and researchers who kindly gave their time and shared their stories, experiences and data with us. We would like to thank staff at Flanbwayan Haitian Literacy Project, the Haitian American Bilingual Education Technical Assistance Center, and the United Chinese Association of Brooklyn for sharing information about the schools we studied and for connecting us with parents and students. -

Department of Education (NYCDOE)

THE STATE EDUCATION DEPARTMENT / THE UNIVERSITY OF THE STATE OF NEW YORK / ALBANY, NY 12234 TO: P-12 Education Committee FROM: Charles A. Szuberla, Jr. SUBJECT: Charter Schools: Charter Renewal Recommendations for Charters Authorized by the Chancellor of the New York City Department of Education (NYCDOE) DATE: June 10, 2015 AUTHORIZATION(S): SUMMARY Issue for Decision Should the Regents approve the proposed renewal charters for the following charter schools authorized by the Chancellor of the NYCDOE: Achievement First Endeavor Charter School Community Roots Charter School International Leadership Charter School New York Center for Autism Charter School Renaissance Charter School Reason(s) for Consideration Required by New York State Law. Proposed Handling This issue will be before the Regents P-12 Education Committee and the Full Board for action at the June 2015 Regents meeting. Procedural History The Chancellor of the NYCDOE approved the renewal of the charter schools set forth below and submitted recommendations to the Regents for approval and issuance of the renewal charters as required by Article 56 of the Education Law, the New York Charter Schools Act. Background Information I forward the recommendations for the renewal charters of the following charter schools, as proposed by the Chancellor of the NYCDOE in her capacity as a charter school authorizer under Article 56 of the Education Law. The Chancellor asks that the charters be extended for the terms indicated. The summary of the NYCDOE’s 2014 Renewal Recommendation Report -

Implementation of the New York City Community Schools Initiative

Developing Community Schools at Scale Implementation of the New York City Community Schools Initiative William R. Johnston, Celia J. Gomez, Lisa Sontag-Padilla, Lea Xenakis, Brent Anderson CORPORATION For more information on this publication, visit www.rand.org/t/RR2100 Published by the RAND Corporation, Santa Monica, Calif. © Copyright 2017 RAND Corporation R® is a registered trademark. Cover: Getty Images / asiseeit Limited Print and Electronic Distribution Rights This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. RAND is nonprofit, nonpartisan, and committed to the public interest. RAND’s publications do not necessarily reflect the opinions of its research clients and sponsors. Support RAND Make a tax-deductible charitable contribution at www.rand.org/giving/contribute www.rand.org Preface With the launch of the New York City Community Schools Initiative (NYC-CS), New York has joined many other cities to recognize that addressing the social consequences of poverty must be done in tandem with efforts to improve teaching and learning to improve student outcomes. -

A-701 School Health Services 3/25/2021

A-701 SCHOOL HEALTH SERVICES 3/25/2021 Regulation of the Chancellor Number: A-701 Subject: SCHOOL HEALTH SERVICES Category: STUDENTS Issued: March 25, 2021 SUMMARY OF CHANGES This regulation updates and supersedes Chancellor’s Regulation A-701 dated August 15, 2012. Changes: • Updates information concerning student health forms, notices, and records, as well as definition of parent whenever used in this regulation (Section I). • Clarifies requirement to submit completed comprehensive medical examination upon admission to 3-K and pre-kindergarten programs and K-12 schools, and also if the student turns 5 years old after admission (Section II.A.1). • Clarifies that medical evaluations provided to the Committee on Special Education shall be conducted by the student’s primary care provider, not an OSH physician (Section II.A.2). • Updates the physical examination form requirements for participation in interscholastic sports (Section II.A.2). • Updates reporting requirements for student height and weight measurements (Section II.B). • Updates student vision screening requirements, responsibilities of DOHMH and DOE staff, reporting, and notice to parents (Section II.C). • Removes provisions concerning hearing screening (Sections I.B, II.C). • Updates state immunization mandates and removes religious exemption in accordance with New York Public Health Law § 2164, and specifies DOHMH system of record for immunization data (Section III). • Clarifies language concerning provisional immunization requirements for new entrants, and the rights of students in temporary housing (Section III.B). • Clarifies process and timelines for requesting medical exemptions to immunization requirements (Section III.C). • Adds New York City requirement for influenza immunizations for students aged 6 months to 59 months (Section III.F). -

Students with Interrupted Formal Education: a Challenge for the New York City Public Schools

Students with Interrupted Formal Education: A Challenge for the New York City Public Schools A report issued by Advocates for Children of New York May 2010 With contributions from: Flanbwayan Haitian Literacy Project, The New York Immigration Coalition, Sauti Yetu Center for African Women, YWCA of Queens Youth Center TABLE OF CONTENTS Acknowledgments; Executive Summary; I. Introduction: A. Defining SIFE; B. Reviewing the Numbers: Data on New York City’s SIFE; C. Taking a Closer Look; II. Beyond Numerical Data: Portraits of New York City’s SIFE: A. Meeting the Students; B. Identifying their Needs; III. Priorities for Reform -

New York City Principals' Satisfaction with the Public School Network

St. John Fisher College Fisher Digital Publications Education Doctoral Ralph C. Wilson, Jr. School of Education 8-2013 New York City Principals’ Satisfaction with the Public School Network Support at One At-Risk Network Over a Three-Year Period, 2009-2010, 2010-2011, and 2011-2012 Varleton McDonald St. John Fisher College Follow this and additional works at: https://fisherpub.sjfc.edu/education_etd Part of the Education Commons How has open access to Fisher Digital Publications benefited you? Recommended Citation McDonald, Varleton, "New York City Principals’ Satisfaction with the Public School Network Support at One At-Risk Network Over a Three-Year Period, 2009-2010, 2010-2011, and 2011-2012" (2013). Education Doctoral. Paper 151. Please note that the Recommended Citation provides general citation information and may not be appropriate for your discipline. To receive help in creating a citation based on your discipline, please visit http://libguides.sjfc.edu/citations. This document is posted at https://fisherpub.sjfc.edu/education_etd/151 and is brought to you for free and open access by Fisher Digital Publications at St. John Fisher College. For more information, please contact [email protected]. New York City Principals’ Satisfaction with the Public School Network Support at One At-Risk Network Over a Three-Year Period, 2009-2010, 2010-2011, and 2011-2012 Abstract This study investigated New York City (NYC) principals’ satisfaction with network school support provided by School Support Organizations (SSOs) in one network in the NYC public school system over a three-year period from 2009 through 2012. The SSOs or networks were created in New York City for the purpose of increasing student performance, enhancing graduation/promotion rates, and improving teacher pedagogy. -

Glossary of Terms

Glossary of Terms Active Register: The active register is the current register. School and class registers can fluctuate as principals and school-based personnel make changes to student schedules or audit the school’s overall register. For this reason it is very important to note the day on which any register data is reported. This report is based on a snapshot of the active register on January 28, 2015. For High Schools that organize their year into semesters, the report captures the core course classes and registers for the end of the first term. For High Schools that are not organized into semesters, the report captures a snapshot of the current term as of February 9, 2015. Automate The Schools (ATS): Automate the Schools (ATS) is a school-based administrative system that standardizes and automates the collection and reporting of data for all students in the New York City Public Schools. Bridge Class: A bridge class spans grade levels. This analysis does not include any class that spans more than one grade in grades K-8, unless the class is designated as a self-contained special education class. Child Assistance Program (CAP): This system tracks the process students go through when referred for possible special education services. Integrated Co-Teaching (Formerly Collaborative Team Teaching or CTT): Integrated Co-Teaching (ICT) ensures that students with disabilities are educated alongside age-appropriate peers in a general education setting. ICT classes consist of one general education teacher and one special education teacher, providing a reduced student/teacher ratio. Co-teachers provide individual attention to students and together they plan and prepare lessons, activities, and projects that take into account the learning differences among all students in the class.