Low Rank Approximation Lecture 1



Recommended publications


18.06 Linear Algebra, Problem Set 2 Solutions

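18.06 Problem Set 2 Solution. Total: 100 points.

Section 2.5, Problem 24: Use Gauss-Jordan elimination on $[U\ I]$ to find the upper triangular $U^{-1}$:
$$UU^{-1} = I: \qquad \begin{bmatrix} 1 & a & b \\ 0 & 1 & c \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x_1 & x_2 & x_3 \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}.$$
Solution (4 points): Row reduce $[U\ I]$ to get $[I\ U^{-1}]$ as follows (here $R_i$ stands for the $i$th row):
$$\left[\begin{array}{ccc|ccc} 1 & a & b & 1 & 0 & 0 \\ 0 & 1 & c & 0 & 1 & 0 \\ 0 & 0 & 1 & 0 & 0 & 1 \end{array}\right] \xrightarrow{\substack{R_1 = R_1 - aR_2 \\ R_2 = R_2 - cR_3}} \left[\begin{array}{ccc|ccc} 1 & 0 & b - ac & 1 & -a & 0 \\ 0 & 1 & 0 & 0 & 1 & -c \\ 0 & 0 & 1 & 0 & 0 & 1 \end{array}\right] \xrightarrow{R_1 = R_1 - (b - ac)R_3} \left[\begin{array}{ccc|ccc} 1 & 0 & 0 & 1 & -a & ac - b \\ 0 & 1 & 0 & 0 & 1 & -c \\ 0 & 0 & 1 & 0 & 0 & 1 \end{array}\right].$$

Section 2.5, Problem 40 (Recommended): $A$ is a 4 by 4 matrix with 1's on the diagonal and $-a, -b, -c$ on the diagonal above. Find $A^{-1}$ for this bidiagonal matrix.
Solution (12 points): Row reduce $[A\ I]$ to get $[I\ A^{-1}]$ as follows (here $R_i$ stands for the $i$th row):
$$\left[\begin{array}{cccc|cccc} 1 & -a & 0 & 0 & 1 & 0 & 0 & 0 \\ 0 & 1 & -b & 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & -c & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 & 0 & 0 & 0 & 1 \end{array}\right] \xrightarrow{\substack{R_1 = R_1 + aR_2 \\ R_2 = R_2 + bR_3 \\ R_3 = R_3 + cR_4}} \left[\begin{array}{cccc|cccc} 1 & 0 & -ab & 0 & 1 & a & 0 & 0 \\ 0 & 1 & 0 & -bc & 0 & 1 & b & 0 \\ 0 & 0 & 1 & 0 & 0 & 0 & 1 & c \\ 0 & 0 & 0 & 1 & 0 & 0 & 0 & 1 \end{array}\right] \xrightarrow{\substack{R_1 = R_1 + abR_3 \\ R_2 = R_2 + bcR_4}} \left[\begin{array}{cccc|cccc} 1 & 0 & 0 & 0 & 1 & a & ab & abc \\ 0 & 1 & 0 & 0 & 0 & 1 & b & bc \\ 0 & 0 & 1 & 0 & 0 & 0 & 1 & c \\ 0 & 0 & 0 & 1 & 0 & 0 & 0 & 1 \end{array}\right].$$
Alternatively, write $A = I - N$.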
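The row reductions above can be replayed mechanically. The sketch below is a plain Gauss-Jordan routine with exact `Fraction` arithmetic; the helper names `gauss_jordan_inverse` and `matmul` and the sample values $a, b, c = 2, 3, 5$ are illustrative assumptions, not part of the problem set. It checks the Problem 24 answer and then follows the alternative route for Problem 40: $N$ is strictly upper triangular, so $N^4 = 0$ and $(I - N)^{-1} = I + N + N^2 + N^3$.

```python
from fractions import Fraction

def gauss_jordan_inverse(M):
    """Invert a square matrix by row reducing [M I] to [I M^{-1}] (exact arithmetic)."""
    n = len(M)
    # Augmented matrix [M I]
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for col in range(n):
        # Swap in a row with a nonzero pivot in this column
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale so the pivot equals 1
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        # Clear this column in every other row: R_r = R_r - factor * R_col
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]  # the right half is M^{-1}

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

a, b, c = 2, 3, 5  # sample values for the symbols a, b, c

# Problem 24: expect [[1, -a, ac - b], [0, 1, -c], [0, 0, 1]]
U = [[1, a, b], [0, 1, c], [0, 0, 1]]
U_inv = gauss_jordan_inverse(U)
print(U_inv == [[1, -a, a * c - b], [0, 1, -c], [0, 0, 1]])  # True

# Problem 40 via A = I - N, with a, b, c on the superdiagonal of N
n = 4
I4 = [[int(i == j) for j in range(n)] for i in range(n)]
N = [[0, a, 0, 0], [0, 0, b, 0], [0, 0, 0, c], [0, 0, 0, 0]]
A = [[I4[i][j] - N[i][j] for j in range(n)] for i in range(n)]
N2 = matmul(N, N)
N3 = matmul(N2, N)  # N^4 = 0, so the series stops here
A_inv = [[I4[i][j] + N[i][j] + N2[i][j] + N3[i][j] for j in range(n)] for i in range(n)]
print(A_inv == gauss_jordan_inverse(A))  # True
print(A_inv)  # [[1, 2, 6, 30], [0, 1, 3, 15], [0, 0, 1, 5], [0, 0, 0, 1]]
```

The last row of output matches the pattern $1, a, ab, abc$ found by elimination.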


[Math.RA] 19 Jun 2003 Two Linear Transformations Each Tridiagonal with Respect to an Eigenbasis of the Other

Two linear transformations each tridiagonal with respect to an eigenbasis of the other; comments on the parameter array. Paul Terwilliger.

Abstract. Let $\mathbb{K}$ denote a field. Let $d$ denote a nonnegative integer and consider a sequence
$$p = (\theta_i, \theta^*_i,\ i = 0, \dots, d;\ \varphi_j, \phi_j,\ j = 1, \dots, d)$$
consisting of scalars taken from $\mathbb{K}$. We call $p$ a parameter array whenever:
(PA1) $\theta_i \neq \theta_j$, $\theta^*_i \neq \theta^*_j$ if $i \neq j$ $(0 \le i, j \le d)$;
(PA2) $\varphi_i \neq 0$, $\phi_i \neq 0$ $(1 \le i \le d)$;
(PA3) $\varphi_i = \phi_1 \sum_{h=0}^{i-1} \frac{\theta_h - \theta_{d-h}}{\theta_0 - \theta_d} + (\theta^*_i - \theta^*_0)(\theta_{i-1} - \theta_d)$ $(1 \le i \le d)$;
(PA4) $\phi_i = \varphi_1 \sum_{h=0}^{i-1} \frac{\theta_h - \theta_{d-h}}{\theta_0 - \theta_d} + (\theta^*_i - \theta^*_0)(\theta_{d-i+1} - \theta_0)$ $(1 \le i \le d)$;
(PA5) $(\theta_{i-2} - \theta_{i+1})(\theta_{i-1} - \theta_i)^{-1}$ and $(\theta^*_{i-2} - \theta^*_{i+1})(\theta^*_{i-1} - \theta^*_i)^{-1}$ are equal and independent of $i$ for $2 \le i \le d - 1$.
In [13] we showed the parameter arrays are in bijection with the isomorphism classes of Leonard systems. Using this bijection we obtain the following two characterizations of parameter arrays. Assume $p$ satisfies PA1, PA2. Let $A, B, A^*, B^*$ denote the matrices in $\mathrm{Mat}_{d+1}(\mathbb{K})$ which have entries $A_{ii} = \theta_i$, $B_{ii} = \theta_{d-i}$, $A^*_{ii} = \theta^*_i$, $B^*_{ii} = \theta^*_i$ $(0 \le i \le d)$, $A_{i,i-1} = 1$, $B_{i,i-1} = 1$, $A_{i-1,i} = \varphi_i$, $B_{i-1,i} = \phi_i$ $(1 \le i \le d)$, and all other entries 0. We show the following are equivalent: (i) $p$ satisfies PA3–PA5; (ii) there exists an invertible $G \in \mathrm{Mat}_{d+1}(\mathbb{K})$ such that $G^{-1}AG = B$ and $G^{-1}A^*G = B^*$; (iii) for $0 \le i \le d$ the polynomial
$$\sum_{n=0}^{i} \frac{(\lambda - \theta_0)(\lambda - \theta_1)\cdots(\lambda - \theta_{n-1})(\theta^*_i - \theta^*_0)(\theta^*_i - \theta^*_1)\cdots(\theta^*_i - \theta^*_{n-1})}{\varphi_1\varphi_2\cdots\varphi_n}$$
is a scalar multiple of the polynomial
$$\sum_{n=0}^{i} (\lambda - \theta_d)(\lambda - \theta_{d-1})\cdots(\lambda - \theta_{d-n+1})(\theta^*_i - \theta^*_0)(\theta^*_i - \theta^*_1)\cdots(\theta^*_i - \theta^*_{n-1}) \ldots$$
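Conditions PA1 to PA5 are concrete enough to test over the rationals. The sketch below is a hypothetical checker (`is_parameter_array` is not a name from the paper), where the lists `varphi` and `phi` hold the two sequences $\varphi_1, \dots, \varphi_d$ and $\phi_1, \dots, \phi_d$ from the abstract. The sample data is an assumption made only for illustration: $d = 4$, $\theta_i = i$, $\theta^*_i = 2i$, $\varphi_i = 3i(d - i + 1)$, $\phi_i = 5i(d - i + 1)$, which was checked by hand against PA3 and PA4 (for arithmetic sequences both ratios in PA5 equal 3).

```python
from fractions import Fraction

def is_parameter_array(theta, theta_s, varphi, phi):
    """Check PA1-PA5 for p = (theta_i, theta*_i, i = 0..d; varphi_j, phi_j, j = 1..d).

    theta and theta_s have length d + 1 (indices 0..d); varphi and phi have
    length d and are stored 0-based, so varphi[j - 1] holds varphi_j.
    Entries must be ints or Fractions so that all comparisons are exact.
    """
    d = len(theta) - 1
    # PA1: the theta_i are mutually distinct, and so are the theta*_i
    if len(set(theta)) != d + 1 or len(set(theta_s)) != d + 1:
        return False
    # PA2: every varphi_i and phi_i is nonzero
    if any(x == 0 for x in varphi) or any(x == 0 for x in phi):
        return False
    for i in range(1, d + 1):
        # Shared sum over h = 0..i-1 of (theta_h - theta_{d-h}) / (theta_0 - theta_d)
        s = sum(Fraction(theta[h] - theta[d - h], theta[0] - theta[d]) for h in range(i))
        # PA3
        if varphi[i - 1] != phi[0] * s + (theta_s[i] - theta_s[0]) * (theta[i - 1] - theta[d]):
            return False
        # PA4
        if phi[i - 1] != varphi[0] * s + (theta_s[i] - theta_s[0]) * (theta[d - i + 1] - theta[0]):
            return False
    # PA5: both ratios agree and do not depend on i, for 2 <= i <= d - 1
    ratios = set()
    for i in range(2, d):
        ratios.add(Fraction(theta[i - 2] - theta[i + 1], theta[i - 1] - theta[i]))
        ratios.add(Fraction(theta_s[i - 2] - theta_s[i + 1], theta_s[i - 1] - theta_s[i]))
    return len(ratios) <= 1

# Illustrative data (not taken from the paper), d = 4
d = 4
theta = [i for i in range(d + 1)]                         # theta_i = i
theta_s = [2 * i for i in range(d + 1)]                   # theta*_i = 2i
varphi = [3 * i * (d - i + 1) for i in range(1, d + 1)]  # [12, 18, 18, 12]
phi = [5 * i * (d - i + 1) for i in range(1, d + 1)]     # [20, 30, 30, 20]
print(is_parameter_array(theta, theta_s, varphi, phi))   # True
```

Perturbing any single $\varphi_i$ breaks PA3 or PA4, and repeating a $\theta^*_i$ breaks PA1.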

Chapter 8: Methods Related to the Normal Equations

There are a number of techniques for converting a nonsymmetric linear system into a symmetric one. One such technique solves the equivalent linear system $A^TAx = A^Tb$, called the normal equations. Often, this approach is avoided in practice because the coefficient matrix $A^TA$ is much worse conditioned than $A$. However, the normal equations approach may be adequate in some situations. Indeed, there are even applications in which it is preferred to the usual Krylov subspace techniques. This chapter covers iterative methods which are either directly or implicitly related to the normal equations.

In order to solve the linear system $Ax = b$ when $A$ is nonsymmetric, we can solve the equivalent system
$$A^TAx = A^Tb \qquad (8.1)$$
which is symmetric positive definite (provided $A$ is nonsingular or, more generally, has full column rank). This system is known as the system of the normal equations associated with the least-squares problem,
$$\text{minimize } \|b - Ax\|_2. \qquad (8.2)$$
Note that (8.1) is typically used to solve the least-squares problem (8.2) for overdetermined systems, i.e., when $A$ is a rectangular matrix of size $n \times m$, $m < n$.
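As a hedged illustration of (8.1) and (8.2), the NumPy sketch below solves an overdetermined least-squares problem through the normal equations and compares the result with `numpy.linalg.lstsq`. Factoring $A^TA$ by Cholesky is a choice made here, not something the text prescribes, and `solve_normal_equations` is a hypothetical name. The last line prints the 2-norm condition numbers, since $\kappa_2(A^TA) = \kappa_2(A)^2$ when $A$ has full column rank, which is why the approach is often avoided.

```python
import numpy as np

def solve_normal_equations(A, b):
    """Least-squares solution of min ||b - A x||_2 via A^T A x = A^T b, as in (8.1)."""
    AtA = A.T @ A
    Atb = A.T @ b
    # Cholesky needs A^T A symmetric positive definite, i.e. A of full column rank
    L = np.linalg.cholesky(AtA)      # AtA = L @ L.T
    y = np.linalg.solve(L, Atb)      # solve L y = A^T b
    return np.linalg.solve(L.T, y)   # solve L^T x = y

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 5))     # overdetermined: n = 20 rows, m = 5 columns
b = rng.standard_normal(20)

x_normal = solve_normal_equations(A, b)
x_lstsq, *_ = np.linalg.lstsq(A, b, rcond=None)
print(np.allclose(x_normal, x_lstsq))

# The normal equations square the 2-norm condition number
print(np.linalg.cond(A), np.linalg.cond(A.T @ A))
```

For a well-conditioned random $A$ the two solutions agree; the squared condition number is what makes the normal equations risky when $A$ itself is ill conditioned.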

Self-Interlacing Polynomials II: Matrices with Self-Interlacing Spectrum

SELF-INTERLACING POLYNOMIALS II: MATRICES WITH SELF-INTERLACING SPECTRUM. MIKHAIL TYAGLOV.

Abstract. An $n \times n$ matrix is said to have a self-interlacing spectrum if its eigenvalues $\lambda_k$, $k = 1, \dots, n$, are distributed as follows:
$$\lambda_1 > -\lambda_2 > \lambda_3 > \cdots > (-1)^{n-1}\lambda_n > 0.$$
A method for constructing sign definite matrices with self-interlacing spectra from totally nonnegative ones is presented. We apply this method to bidiagonal and tridiagonal matrices. In particular, we generalize a result by O. Holtz on the spectrum of real symmetric anti-bidiagonal matrices with positive nonzero entries.

1. Introduction. The so-called self-interlacing polynomials were introduced in [5]. A polynomial $p(z)$ is called self-interlacing if all its roots are real, simple, and interlace the roots of the polynomial $p(-z)$. It is easy to see that if $\lambda_k$, $k = 1, \dots, n$, are the roots of a self-interlacing polynomial, then they are distributed either as
$$\lambda_1 > -\lambda_2 > \lambda_3 > \cdots > (-1)^{n-1}\lambda_n > 0 \qquad (1.1)$$
or as
$$-\lambda_1 > \lambda_2 > -\lambda_3 > \cdots > (-1)^{n}\lambda_n > 0. \qquad (1.2)$$
The polynomials whose roots are distributed as in (1.1) (resp. in (1.2)) are called self-interlacing of kind I (resp. of kind II). It is clear that a polynomial $p(z)$ is self-interlacing of kind I if, and only if, the polynomial $p(-z)$ is self-interlacing of kind II. Thus, it is enough to study self-interlacing polynomials of kind I, since all the results for kind II follow automatically.

Definition 1.1. An $n \times n$ matrix is said to possess a self-interlacing spectrum if its eigenvalues $\lambda_k$, $k = 1, \dots, n$, are real, simple, and distributed as in (1.1).
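Condition (1.1) says exactly that $\mu_k = (-1)^{k-1}\lambda_k$ is positive and strictly decreasing, so the only possible labelling is by decreasing absolute value. A small pure-Python sketch of that test follows; the function names are hypothetical, and kind II is reduced to kind I through $\lambda \mapsto -\lambda$, mirroring $p(z) \leftrightarrow p(-z)$.

```python
def is_self_interlacing_kind1(eigenvalues):
    """True iff the values can be labelled so that
    lambda_1 > -lambda_2 > lambda_3 > ... > (-1)^(n-1) lambda_n > 0, as in (1.1).

    Equivalently mu_k = (-1)^(k-1) lambda_k is positive and strictly decreasing,
    so the only candidate labelling is by decreasing absolute value.
    """
    vals = sorted(eigenvalues, key=abs, reverse=True)
    mus = [v if k % 2 == 0 else -v for k, v in enumerate(vals)]
    return all(m > 0 for m in mus) and all(p > q for p, q in zip(mus, mus[1:]))

def is_self_interlacing_kind2(eigenvalues):
    """Kind II, as in (1.2): kind I after lambda -> -lambda."""
    return is_self_interlacing_kind1([-v for v in eigenvalues])

print(is_self_interlacing_kind1([3.0, -2.0, 1.0]))   # True: 3 > 2 > 1 > 0
print(is_self_interlacing_kind1([3.0, 2.0, 1.0]))    # False: signs do not alternate
print(is_self_interlacing_kind1([1.0, -1.0]))        # False: |lambda_1| = |lambda_2|
print(is_self_interlacing_kind2([-3.0, 2.0, -1.0]))  # True
```

For instance, the matrix $\begin{bmatrix} 0 & 1 \\ 2 & 1 \end{bmatrix}$ has characteristic polynomial $\lambda^2 - \lambda - 2 = (\lambda - 2)(\lambda + 1)$, and `is_self_interlacing_kind1([2, -1])` returns `True`.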

Accurate Singular Values of Bidiagonal Matrices

Accurate Singular Values of Bidiagonal Matrices (Appeared in the SIAM J. Sci. Stat. Comput., v. 11, n. 5, pp. 873-912, 1990). James Demmel, Courant Institute, 251 Mercer Str., New York, NY 10012; W. Kahan, Computer Science Division, University of California, Berkeley, CA 94720.

Abstract. Computing the singular values of a bidiagonal matrix is the final phase of the standard algorithm for the singular value decomposition of a general matrix. We present a new algorithm which computes all the singular values of a bidiagonal matrix to high relative accuracy independent of their magnitudes. In contrast, the standard algorithm for bidiagonal matrices may compute small singular values with no relative accuracy at all. Numerical experiments show that the new algorithm is comparable in speed to the standard algorithm, and frequently faster.

Keywords: singular value decomposition, bidiagonal matrix, QR iteration. AMS(MOS) subject classifications: 65F20, 65G05, 65F35.

1. Introduction. The standard algorithm for computing the singular value decomposition (SVD) of a general real matrix $A$ has two phases [7]:
1) Compute orthogonal matrices $P_1$ and $Q_1$ such that $B = P_1^T A Q_1$ is in bidiagonal form, i.e. has nonzero entries only on its diagonal and first superdiagonal.
2) Compute orthogonal matrices $P_2$ and $Q_2$ such that $\Sigma = P_2^T B Q_2$ is diagonal and nonnegative. The diagonal entries $\sigma_i$ of $\Sigma$ are the singular values of $A$. We will take them to be sorted in decreasing order: $\sigma_i \ge \sigma_{i+1}$. The columns of $Q = Q_1 Q_2$ are the right singular vectors, and the columns of $P = P_1 P_2$ are the left singular vectors.
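Phase 1 can be sketched with Householder reflections (Golub-Kahan bidiagonalization). This is a minimal NumPy illustration assuming $A$ is real with at least as many rows as columns; the helper names are hypothetical. It is not the Demmel-Kahan algorithm for phase 2: the singular values below are simply taken from `numpy.linalg.svd` to confirm that $B$ has the same singular values as $A$.

```python
import numpy as np

def householder(x):
    """Return (v, beta) with (I - beta v v^T) x = -sign(x_0) ||x|| e_1."""
    v = np.array(x, dtype=float)
    norm_x = np.linalg.norm(v)
    if norm_x == 0.0:
        return v, 0.0                   # nothing to annihilate: use the identity
    v[0] += np.copysign(norm_x, v[0])
    return v, 2.0 / (v @ v)

def bidiagonalize(A):
    """Phase 1: orthogonal P1, Q1 with B = P1^T A Q1 upper bidiagonal (m >= n)."""
    B = np.array(A, dtype=float)
    m, n = B.shape
    P1, Q1 = np.eye(m), np.eye(n)
    for k in range(n):
        # Left reflector: zero out B[k+1:, k] below the diagonal
        v, beta = householder(B[k:, k])
        B[k:, :] -= beta * np.outer(v, v @ B[k:, :])
        P1[:, k:] -= beta * np.outer(P1[:, k:] @ v, v)
        if k < n - 2:
            # Right reflector: zero out B[k, k+2:] beyond the superdiagonal
            v, beta = householder(B[k, k + 1:])
            B[:, k + 1:] -= beta * np.outer(B[:, k + 1:] @ v, v)
            Q1[:, k + 1:] -= beta * np.outer(Q1[:, k + 1:] @ v, v)
    return P1, B, Q1

rng = np.random.default_rng(0)
A = rng.standard_normal((6, 4))
P1, B, Q1 = bidiagonalize(A)
# Orthogonal transformations preserve singular values, so these rows agree
print(np.linalg.svd(B, compute_uv=False))
print(np.linalg.svd(A, compute_uv=False))
```

Phase 2 would then act only on the $n$ diagonal and $n - 1$ superdiagonal entries of `B`, which is where the relative-accuracy question studied in the paper arises.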

Some Inequalities About Trace of Matrix

Available online www.jsaer.com. Journal of Scientific and Engineering Research, 2019, 6(7):89-93. ISSN: 2394-2630. Research Article. CODEN(USA): JSERBR.

Some Inequalities about Trace of Matrix. TU Yuanyuan*¹, SU Runqing². ¹Department of Mathematics, Taizhou College, Nanjing Normal University, Taizhou 225300, China. ²College of Mathematics and Statistics, Nanjing University of Information Science and Technology, Nanjing, 210044, China.

Abstract. In this paper, we study trace inequalities using the properties of block matrices and of the singular values and eigenvalues of a matrix. As a result, some new inequalities about the trace of a matrix under certain conditions are given; at the same time, we extend the corresponding results.

Keywords: eigenvalue, singular value, trace, inequality.

1. Introduction. For a complex number $x = a + ib$, where $a, b$ are real, we write $\operatorname{Re} x = a$, $\operatorname{Im} x = b$. As usual, let $I_n$ be the $n \times n$ identity matrix, $M_{m \times n}$ the set of $m \times n$ complex matrices, and $\operatorname{diag}(d_1, \dots, d_n)$ the diagonal matrix with diagonal elements $d_1, \dots, d_n$. For a matrix $A$, denote the eigenvalues of $A$ by $\lambda_i(A)$, the singular values of $A$ by $\sigma_i(A)$, the trace of $A$ by $\operatorname{tr} A$, and the conjugate transpose of $A$ by $A^H$; $A$ is called positive semidefinite if $A$ is Hermitian and $\lambda_i(A) \ge 0$. In 1937, von Neumann gave the famous inequality [1]
$$|\operatorname{tr}(AB)| \le \sum_{i=1}^{n} \sigma_i(A)\,\sigma_i(B), \qquad (1.1)$$
where $A, B$ are $n \times n$ complex matrices and the singular values are arranged in decreasing order. This inequality has been studied extensively, and many results have been obtained.
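Inequality (1.1) is easy to probe numerically. The NumPy sketch below (the helper `trace_and_bound` is a hypothetical name) evaluates both sides for random complex matrices; it relies on `numpy.linalg.svd` returning singular values in decreasing order, which is exactly the pairing (1.1) requires. The case $B = A^H$ attains equality, because $\operatorname{tr}(AA^H) = \sum_i \sigma_i(A)^2$.

```python
import numpy as np

def trace_and_bound(A, B):
    """Return (|tr(AB)|, sum_i sigma_i(A) sigma_i(B)), the two sides of (1.1)."""
    lhs = abs(np.trace(A @ B))
    # np.linalg.svd returns singular values in decreasing order,
    # so the elementwise product pairs sigma_i(A) with sigma_i(B)
    s_a = np.linalg.svd(A, compute_uv=False)
    s_b = np.linalg.svd(B, compute_uv=False)
    return lhs, float(np.sum(s_a * s_b))

rng = np.random.default_rng(1)
n = 5
for _ in range(3):
    A = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    B = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    lhs, rhs = trace_and_bound(A, B)
    print(f"|tr(AB)| = {lhs:.4f} <= {rhs:.4f}")

# Equality case: B = A^H gives tr(A A^H) = sum_i sigma_i(A)^2
lhs, rhs = trace_and_bound(A, A.conj().T)
print(np.isclose(lhs, rhs))  # True
```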

The Column and Row Hilbert Operator Spaces

The Column and Row Hilbert Operator Spaces. Roy M. Araiza, Department of Mathematics, Purdue University.

Abstract. Given a Hilbert space $\mathcal{H}$ we present the construction and some properties of the column and row Hilbert operator spaces $\mathcal{H}_c$ and $\mathcal{H}_r$, respectively. The main proof of the survey shows that $CB(\mathcal{H}_c, \mathcal{K}_c)$ is completely isometric to $B(\mathcal{H}, \mathcal{K})$; in other words, the space of completely bounded maps between the corresponding column Hilbert operator spaces is completely isometric to the space of bounded operators between the Hilbert spaces. A similar theorem for the row Hilbert operator spaces follows via duality of the operator spaces. In particular, there exists a natural duality between the column and row Hilbert operator spaces, which we also show. The presentation and proofs are due to Effros and Ruan [1].

1 The Column Hilbert Operator Space. Given a Hilbert space $\mathcal{H}$, we define the column isometry
$$C : \mathcal{H} \to B(\mathbb{C}, \mathcal{H}), \qquad \xi \mapsto C(\xi), \quad C(\xi)\alpha := \alpha\xi, \quad \alpha \in \mathbb{C}.$$
Then, given any $n \in \mathbb{N}$, we define the $n$th matrix norm on $M_n(\mathcal{H})$ by
$$\|\xi\|_{c,n} = \left\|C^{(n)}(\xi)\right\|, \qquad \xi \in M_n(\mathcal{H}).$$
These are indeed operator space matrix norms by Ruan's representation theorem, and thus we define the column Hilbert operator space as
$$\mathcal{H}_c = \left(\mathcal{H}, \{\|\cdot\|_{c,n}\}_{n \in \mathbb{N}}\right).$$
It follows that, given $\xi \in \mathcal{H}$, the adjoint operator of $C(\xi)$ is given by
$$C(\xi)^* : \mathcal{H} \to \mathbb{C}, \qquad \zeta \mapsto (\zeta \mid \xi).$$
Indeed, suppose $C(\xi)^*(\zeta) = d$ and let $c \in \mathbb{C}$. Then
$$c\bar{d} = \left(c \mid C(\xi)^*(\zeta)\right) = (c\xi \mid \zeta) = c\,\overline{(\zeta \mid \xi)} = \left(c \mid (\zeta \mid \xi)\right).$$
We are also able to compute the matrix norms on rectangular matrices.
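In finite dimensions the construction can be made concrete. The sketch below assumes $\mathcal{H} = \mathbb{C}^k$ with the inner product $(x \mid y) = \sum_i x_i \overline{y_i}$ (linear in the first slot, consistent with the computation above), stores $\xi \in M_n(\mathcal{H})$ as an array of shape `(n, n, k)`, and realizes $C^{(n)}(\xi) : \mathbb{C}^n \to \mathcal{H}^n$ as the block matrix whose $(i, j)$ block is the column $C(\xi_{ij})$. All function names are hypothetical.

```python
import numpy as np

def column_map(xi):
    """C(xi) in B(C, H) for H = C^k, as a k x 1 matrix: C(xi) alpha = alpha * xi."""
    return np.asarray(xi, dtype=complex).reshape(-1, 1)

def inner(x, y):
    """(x | y) = sum_i x_i conj(y_i), linear in the first argument."""
    return np.sum(np.asarray(x) * np.conj(y))

def column_matrix_norm(xi):
    """||xi||_{c,n} = ||C^{(n)}(xi)|| for xi in M_n(H), stored with shape (n, n, k).

    C^{(n)}(xi) maps C^n to H^n = C^{nk}; its (i, j) block is the column C(xi[i, j]).
    """
    n, _, k = xi.shape
    big = np.zeros((n * k, n), dtype=complex)
    for i in range(n):
        for j in range(n):
            big[i * k:(i + 1) * k, j] = xi[i, j]
    return np.linalg.norm(big, 2)  # operator norm = largest singular value

rng = np.random.default_rng(4)
xi = rng.standard_normal(3) + 1j * rng.standard_normal(3)
zeta = rng.standard_normal(3) + 1j * rng.standard_normal(3)
# Adjoint: C(xi)^* zeta = (zeta | xi)
print(np.isclose((column_map(xi).conj().T @ zeta)[0], inner(zeta, xi)))  # True
# For n = 1 the column norm is just the Hilbert-space norm of xi
print(np.isclose(column_matrix_norm(xi.reshape(1, 1, 3)), np.linalg.norm(xi)))  # True
```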


arXiv:1904.05239v2 [math.FA]

ON MATRIX REARRANGEMENT INEQUALITIES. RIMA ALAIFARI, XIUYUAN CHENG, LILLIAN B. PIERCE AND STEFAN STEINERBERGER.

Abstract. Given two symmetric and positive semidefinite square matrices $A, B$, is it true that any matrix given as the product of $m$ copies of $A$ and $n$ copies of $B$ in a particular sequence must be dominated in the spectral norm by the ordered matrix product $A^mB^n$? For example, is $\|AABAABABB\| \le \|AAAAABBBB\|$? Drury [10] has characterized precisely which disordered words have the property that an inequality of this type holds for all matrices $A, B$. However, the 1-parameter family of counterexamples Drury constructs for these characterizations consists of $3 \times 3$ matrices, and thus as stated the characterization applies only for $N \times N$ matrices with $N \ge 3$. In contrast, we prove that for $2 \times 2$ matrices, the general rearrangement inequality holds for all disordered words. We also show that for larger $N \times N$ matrices, the general rearrangement inequality holds for all disordered words, for most $A, B$ (in a sense of full measure) that are sufficiently small perturbations of the identity.

1. Introduction. 1.1. Introduction. Rearrangement inequalities for functions have a long history; we refer to Lieb and Loss [20] for an introduction and an example of their ubiquity in Analysis, Mathematical Physics, and Partial Differential Equations. A natural question is whether there is an operator-theoretic variant of such rearrangement inequalities. For example, given two operators $A : X \to X$ and $B : X \to X$, is there an inequality
$$\|ABABA\| \le \|AAABB\|,$$
where $\|\cdot\|$ is a norm on operators? In this paper, we will study the question for $A, B$ being symmetric and positive semidefinite square matrices and $\|\cdot\|$ denoting the classical operator norm
$$\|M\| = \sup_{\|x\|_2 = 1} \|Mx\|_2.$$
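A quick numerical experiment in the $2 \times 2$ setting of the abstract can be sketched in NumPy; `word_norm` and `random_psd` are hypothetical helpers, and random trials are of course no substitute for the proof. The code spells out a word letter by letter, multiplies the corresponding matrices, and takes the spectral norm (`ord=2`, the largest singular value).

```python
import numpy as np
from functools import reduce

def word_norm(word, A, B):
    """Spectral norm of the product spelled by `word`, e.g. "AABAABABB"."""
    mats = {"A": A, "B": B}
    return np.linalg.norm(reduce(np.matmul, (mats[ch] for ch in word)), 2)

def random_psd(rng, n=2):
    """Random symmetric positive semidefinite n x n matrix M M^T."""
    M = rng.standard_normal((n, n))
    return M @ M.T

rng = np.random.default_rng(2)
A, B = random_psd(rng), random_psd(rng)
# Disordered word versus the ordered product A^5 B^4 with the same letter counts
print(word_norm("AABAABABB", A, B), word_norm("AAAAABBBB", A, B))
```

For larger $N$ the same helpers can be reused, but Drury's $3 \times 3$ counterexamples mean the comparison can fail there.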

Design and Evaluation of Tridiagonal Solvers for Vector and Parallel Computers Universitat Politècnica De Catalunya

Design and Evaluation of Tridiagonal Solvers for Vector and Parallel Computers. Author: Josep Lluis Larriba Pey. Advisor: Juan José Navarro Guerrero. Barcelona, January 1995. UPC, Universitat Politècnica de Catalunya, Departament d'Arquitectura de Computadors.

Doctoral thesis presented by Josep Lluis Larriba Pey to obtain the degree of Doctor in Computer Science from the Universitat Politècnica de Catalunya.

To my wife Marta. To my parents Elvira and José Luis.

"A journey of a thousand miles must begin with a single step" (Lao-Tse)

Acknowledgements. I would like to thank my parents, José Luis and Elvira, for their unconditional help and love. I will always be indebted to you. I also want to thank my wife, Marta, for being the sparkle in my life, for her support and for understanding my (good) moods. I thank Juanjo, my advisor, for his friendship, patience and good advice. I also want to thank Àngel Jorba for his unconditional collaboration, work and support in some of the contributions of this work. I thank the members of the "Comissió de Doctorat del DAG" for their comments, and especially Miguel Valero for his suggestions on the topics of chapter 4. I thank Mateo Valero for his friendship and always good advice.

Spectral Inequalities for Schrödinger Operators with Surface Potentials

SPECTRAL INEQUALITIES FOR SCHRÖDINGER OPERATORS WITH SURFACE POTENTIALS. RUPERT L. FRANK AND ARI LAPTEV. Dedicated to M. Sh. Birman on the occasion of his 80th birthday.

Abstract. We prove sharp Lieb-Thirring inequalities for Schrödinger operators with potentials supported on a hyperplane, and we show how these estimates are related to Lieb-Thirring inequalities for relativistic Schrödinger operators.

1. Introduction. The Cwikel-Lieb-Rozenblum and the Lieb-Thirring inequalities estimate the number and moments of eigenvalues of Schrödinger operators $-\Delta - V$ in $L^2(\mathbb{R}^N)$ in terms of an integral of the potential $V$. They state that the bound
$$\operatorname{tr}(-\Delta - V)_-^{\gamma} \le L_{\gamma,N} \int_{\mathbb{R}^N} V(x)_+^{\gamma + N/2}\, dx \qquad (1.1)$$
holds with a constant $L_{\gamma,N}$ independent of $V$ if and only if $\gamma \ge 1/2$ for $N = 1$, $\gamma > 0$ for $N = 2$, and $\gamma \ge 0$ for $N \ge 3$. Here and below $t_\pm := \max\{0, \pm t\}$ denotes the positive and negative part of a real number, a real-valued function or a self-adjoint operator $t$. The problem of finding the optimal value of the constant $L_{\gamma,N}$ has attracted a lot of attention recently. We refer to the review articles [H2, LW2] for background information, references and applications of (1.1).

The purpose of the present paper is twofold. First, we would like to find an analog of inequality (1.1) for Schrödinger operators with singular potentials $V(x) = v(x_1, \dots, x_d)\,\delta(x_N)$, $d := N - 1$, supported on a hyperplane. It turns out that such an inequality is indeed valid, provided the integral on the right-hand side of (1.1) is replaced by
$$\int_{\mathbb{R}^d} v(x_1, \dots, x_d)_+^{2\gamma + d}\, dx_1 \cdots dx_d.$$
We determine the complete range of $\gamma$'s for which the resulting inequality holds.
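For a self-adjoint matrix, $t_- = \max\{0, -t\}$ acts on the spectrum, so $\operatorname{tr}(H)_-^{\gamma}$ is the sum of $|E|^{\gamma}$ over the negative eigenvalues $E$ of $H$, and $\gamma = 0$ simply counts them. The NumPy sketch below computes these quantities; the finite-difference model `schrodinger_1d` (grid length, resolution, and the Gaussian well are all assumptions) only shows how the two sides of (1.1) could be evaluated for $N = 1$. It does not compute the constant $L_{\gamma,N}$ or verify the inequality.

```python
import numpy as np

def negative_part(H):
    """H_- = max{0, -H} for a Hermitian matrix H, via its eigendecomposition."""
    w, U = np.linalg.eigh(H)
    return (U * np.maximum(0.0, -w)) @ U.conj().T

def trace_negative_power(H, gamma):
    """tr (H)_-^gamma: sum of |E|^gamma over the negative eigenvalues E of H.

    gamma = 0 counts the negative eigenvalues (the Cwikel-Lieb-Rozenblum case).
    """
    w = np.linalg.eigvalsh(H)
    return float(np.sum((-w[w < 0]) ** gamma))

def schrodinger_1d(V, length=20.0, n=400):
    """Hypothetical finite-difference model of -d^2/dx^2 - V(x) on [-L/2, L/2]
    with Dirichlet boundary conditions; returns the grid and the matrix."""
    x = np.linspace(-length / 2, length / 2, n)
    h = x[1] - x[0]
    lap = (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    return x, lap - np.diag(V(x))

V = lambda x: 3.0 * np.exp(-x**2)   # sample potential well (an assumption)
x, H = schrodinger_1d(V)
h = x[1] - x[0]
gamma, N = 1.5, 1
lhs = trace_negative_power(H, gamma)
rhs_integral = float(np.sum(np.maximum(0.0, V(x)) ** (gamma + N / 2)) * h)
print(lhs, rhs_integral)  # the two sides of (1.1), without the constant L_{gamma,N}
```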

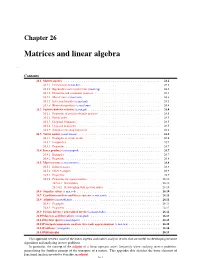

Matrices and Linear Algebra

Chapter 26 Matrices and linear algebra

Contents
26.1 Matrix algebra
26.1.1 Determinant
26.1.2 Eigenvalues and eigenvectors
26.1.3 Hermitian and symmetric matrices
26.1.4 Matrix trace
26.1.5 Inversion formulas
26.1.6 Kronecker products
26.2 Positive-definite matrices
26.2.1 Properties of positive-definite matrices
26.2.2 Partial order
26.2.3 Diagonal dominance
26.2.4 Diagonal majorizers
26.2.5 Simultaneous diagonalization
26.3 Vector norms
26.3.1 Examples of vector norms
26.3.2 Inequalities
26.3.3 Properties
26.4 Inner products
26.4.1 Examples
26.4.2 Properties
26.5 Matrix norms
26.5.1 …

Lecture 5 Ch. 5, Norms for Vectors and Matrices

KTH ROYAL INSTITUTE OF TECHNOLOGY
Lecture 5, Ch. 5: Norms for vectors and matrices
Emil Björnson/Magnus Jansson/Mats Bengtsson, April 27, 2016

Norms for vectors and matrices: Why?
Problem: Measure size of vector or matrix. What is "small" and what is "large"?
Problem: Measure distance between vectors or matrices. When are they "close together" or "far apart"?
Answers are given by norms. Also: Tool to analyze convergence and stability of algorithms.

Vector norm: axiomatic definition
Definition: Let $V$ be a vector space over a field $\mathbb{F}$ ($\mathbb{R}$ or $\mathbb{C}$). A function $\|\cdot\| : V \to \mathbb{R}$ is a vector norm if for all $x, y \in V$:
(1) $\|x\| \ge 0$ (nonnegative)
(1a) $\|x\| = 0$ iff $x = 0$ (positive)
(2) $\|cx\| = |c|\,\|x\|$ for all $c \in \mathbb{F}$ (homogeneous)
(3) $\|x + y\| \le \|x\| + \|y\|$ (triangle inequality)
A function not satisfying (1a) is called a vector seminorm.
Interpretation: Size/length of vector.

Inner product: axiomatic definition
Definition: Let $V$ be a vector space over a field $\mathbb{F}$ ($\mathbb{R}$ or $\mathbb{C}$). A function $\langle \cdot, \cdot \rangle : V \times V \to \mathbb{F}$ is an inner product if for all $x, y, z \in V$:
(1) $\langle x, x \rangle \ge 0$ (nonnegative)
(1a) $\langle x, x \rangle = 0$ iff $x = 0$ (positive)
(2) $\langle x + y, z \rangle = \langle x, z \rangle + \langle y, z \rangle$ (additive)
(3) $\langle cx, y \rangle = c\langle x, y \rangle$ for all $c \in \mathbb{F}$ (homogeneous)
(4) $\langle x, y \rangle = \overline{\langle y, x \rangle}$ (Hermitian property)
Interpretation: "Angle" (distance) between vectors.

Connections between norm and inner products
Corollary: If $\langle \cdot, \cdot \rangle$ is an inner product, then $\|x\| = \langle x, x \rangle^{1/2}$ is a vector norm. Called: Vector norm derived from an inner product.
Examples: The Euclidean norm ($\ell_2$) on $\mathbb{C}^n$: $\|x\|_2 = (|x_1|^2 + |x_2|^2 + \cdots + |x_n|^2)^{1/2}$.
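The corollary and the axioms can be spot-checked on random vectors. In this NumPy sketch, `inner`, `derived_norm` and `first_coordinate` are hypothetical names; the inner product is taken linear in its first argument, matching axiom (3), and `first_coordinate` is an illustrative seminorm on $\mathbb{C}^2$ that satisfies (1), (2), (3) but fails (1a).

```python
import numpy as np

def inner(x, y):
    """<x, y> = sum_i x_i conj(y_i): additive and homogeneous in x, per (2)-(3)."""
    return np.sum(x * np.conj(y))

def derived_norm(x):
    """Vector norm derived from the inner product: ||x|| = <x, x>^(1/2)."""
    return float(np.sqrt(inner(x, x).real))

def first_coordinate(x):
    """Illustrative seminorm on C^2: satisfies (1)-(3) but not (1a)."""
    return abs(x[0])

rng = np.random.default_rng(3)
x = rng.standard_normal(4) + 1j * rng.standard_normal(4)
y = rng.standard_normal(4) + 1j * rng.standard_normal(4)
c = 2.0 - 1.5j

print(np.isclose(derived_norm(x), np.linalg.norm(x)))             # Euclidean l2 norm
print(np.isclose(derived_norm(c * x), abs(c) * derived_norm(x)))  # norm axiom (2)
print(derived_norm(x + y) <= derived_norm(x) + derived_norm(y))   # norm axiom (3)
print(np.isclose(inner(x, y), np.conj(inner(y, x))))              # inner product axiom (4)
print(first_coordinate(np.array([0.0, 1.0])))                     # 0.0 although x != 0
```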