S. Karademir, N

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Hexaflexagons, Probability Paradoxes, and the Tower of Hanoi

HEXAFLEXAGONS, PROBABILITY PARADOXES, AND THE TOWER OF HANOI For 25 of his 90 years, Martin Gard- ner wrote “Mathematical Games and Recreations,” a monthly column for Scientific American magazine. These columns have inspired hundreds of thousands of readers to delve more deeply into the large world of math- ematics. He has also made signifi- cant contributions to magic, philos- ophy, debunking pseudoscience, and children’s literature. He has produced more than 60 books, including many best sellers, most of which are still in print. His Annotated Alice has sold more than a million copies. He continues to write a regular column for the Skeptical Inquirer magazine. (The photograph is of the author at the time of the first edition.) THE NEW MARTIN GARDNER MATHEMATICAL LIBRARY Editorial Board Donald J. Albers, Menlo College Gerald L. Alexanderson, Santa Clara University John H. Conway, F.R. S., Princeton University Richard K. Guy, University of Calgary Harold R. Jacobs Donald E. Knuth, Stanford University Peter L. Renz From 1957 through 1986 Martin Gardner wrote the “Mathematical Games” columns for Scientific American that are the basis for these books. Scientific American editor Dennis Flanagan noted that this column contributed substantially to the success of the magazine. The exchanges between Martin Gardner and his readers gave life to these columns and books. These exchanges have continued and the impact of the columns and books has grown. These new editions give Martin Gardner the chance to bring readers up to date on newer twists on old puzzles and games, on new explanations and proofs, and on links to recent developments and discoveries. -

Embedded System Security: Prevention Against Physical Access Threats Using Pufs

International Journal of Innovative Research in Science, Engineering and Technology (IJIRSET) | e-ISSN: 2319-8753, p-ISSN: 2320-6710| www.ijirset.com | Impact Factor: 7.512| || Volume 9, Issue 8, August 2020 || Embedded System Security: Prevention Against Physical Access Threats Using PUFs 1 2 3 4 AP Deepak , A Krishna Murthy , Ansari Mohammad Abdul Umair , Krathi Todalbagi , Dr.Supriya VG5 B.E. Students, Department of Electronics and Communication Engineering, Sir M. Visvesvaraya Institute of Technology, Bengaluru, India1,2,3,4 Professor, Department of Electronics and Communication Engineering, Sir M. Visvesvaraya Institute of Technology, Bengaluru, India5 ABSTRACT: Embedded systems are the driving force in the development of technology and innovation. It is used in plethora of fields ranging from healthcare to industrial sectors. As more and more devices come into picture, security becomes an important issue for critical application with sensitive data being used. In this paper we discuss about various vulnerabilities and threats identified in embedded system. Major part of this paper pertains to physical attacks carried out where the attacker has physical access to the device. A Physical Unclonable Function (PUF) is an integrated circuit that is elementary to any hardware security due to its complexity and unpredictability. This paper presents a new low-cost CMOS image sensor and nano PUF based on PUF targeting security and privacy. PUFs holds the future to safer and reliable method of deploying embedded systems in the coming years. KEYWORDS:Physically Unclonable Function (PUF), Challenge Response Pair (CRP), Linear Feedback Shift Register (LFSR), CMOS Image Sensor, Polyomino Partition Strategy, Memristor. I. INTRODUCTION The need for security is universal and has become a major concern in about 1976, when the first initial public key primitives and protocols were established. -

Some Open Problems in Polyomino Tilings

Some Open Problems in Polyomino Tilings Andrew Winslow1 University of Texas Rio Grande Valley, Edinburg, TX, USA [email protected] Abstract. The author surveys 15 open problems regarding the algorith- mic, structural, and existential properties of polyomino tilings. 1 Introduction In this work, we consider a variety of open problems related to polyomino tilings. For further reference on polyominoes and tilings, the original book on the topic by Golomb [15] (on polyominoes) and more recent book of Gr¨unbaum and Shep- hard [23] (on tilings) are essential. Also see [2,26] and [19,21] for open problems in polyominoes and tiling more broadly, respectively. We begin by introducing the definitions used throughout; other definitions are introduced as necessary in later sections. Definitions. A polyomino is a subset of R2 formed by a strongly connected union of axis-aligned unit squares centered at locations on the square lattice Z2. Let T = fT1;T2;::: g be an infinite set of finite simply connected closed sets of R2. Provided the elements of T have pairwise disjoint interiors and cover the Euclidean plane, then T is a tiling and the elements of T are called tiles. Provided every Ti 2 T is congruent to a common shape T , then T is mono- hedral, T is the prototile of T , and the elements of T are called copies of T . In this case, T is said to have a tiling. 2 Isohedral Tilings We begin with monohedral tilings of the plane where the prototile is a polyomino. If a tiling T has the property that for all Ti;Tj 2 T , there exists a symmetry of T mapping Ti to Tj, then T is isohedral; otherwise the tiling is non-isohedral (see Figure 1). -

SOMA CUBE Thorleif's SOMA Page

SOMA CUBE Thorleif's SOMA page : http://fam-bundgaard.dk/SOMA/SOMA.HTM Standard SOMA figures Stran 1 Stran 2 Stran 3 Stran 4 Stran 5 Stran 6 Stran 7 Stran 8 Stran 9 Stran 10 Stran 11 Stran 12 Stran 13 Stran 14 Stran 15 Stran 16 Stran 17 Stran 18 Stran 19 Stran 20 Stran 21 Stran 22 Stran 23 Stran 24 Stran 25 Stran 26 Stran 27 Stran 28 Stran 29 Stran 30 Special SOMA collection Stran 31 . Pairs of figures Pairs Stran 32 Figures NOT using using 7 pieces. Figures NOT Stran 33 Double set Double figures. Stran 34 Double set Double figures. Stran 35 Double set Double figures. Stran 36 Special Double/Tripple etc. set figures. Double/Tripple Special Stran 37 Paulo Brina 'Quad' collection Stran 38 Quad set figures. Quad set Stran 39 SOMA NEWS A 4 set SOMA figure is large. March 25 2010 Just as I thought SOMA was fading, I heard from Paulo Brina of Belo Horizonte, Brasil. The story he told was BIG SOMA Joining the rest of us, SOMA maniacs, Paulo was tired of the old 7 piece soma, so he began to play Tetra-SOMA, using 4 sets = 28 pieces = 108 cubes. The tetra set, home made, stainless steel (it's polished, so, it's hard to take photos) Notice the extreme beauty this polished set exhibits. :o) As Paulo wrote "It's funny. More possibilities, more complexity." Stran 40 Lets look at the pictures. 001 bloc 2x7x9 = 126, so we have 18 holes/gaps 002 A 5x5x5 cube would make 125, . -

Folding Small Polyominoes Into a Unit Cube

CCCG 2020, Saskatoon, Canada, August 5{7, 2020 Folding Small Polyominoes into a Unit Cube Kingston Yao Czajkowski∗ Erik D. Demaine† Martin L. Demaine† Kim Eppling‡ Robby Kraft§ Klara Mundilova† Levi Smith¶ Abstract tackle the analogous problem for smaller polyominoes, with at most nine squares.2 We demonstrate that a 3 × 3 square can fold into a unit Any polyomino folding into a unit cube has at least cube using horizontal, vertical, and diagonal creases on six squares (because the cube has surface area 6). In- the 6 × 6 half-grid. Together with previous results, this deed, for hexominoes, the half-grid and diagonal fea- result implies that all tree-shaped polyominoes with at tures of the model are not useful, because the folding least nine squares fold into a unit cube. We also make cannot have any overlap (again by an area argument). partial progress on the analogous problem for septomi- Therefore, the hexominoes that fold into a unit cube are noes and octominoes by showing a half-grid folding of exactly the eleven hexomino nets of the cube; see e.g. the U septomino and 2 × 4 rectangle into a unit cube. Gardner [Gar89] for the list. Aichholzer et al. [ABD+18, Fig. 16] verified by exhaustive search that this claim re- 1 Introduction mains true even if we allow cutting the polyomino with slits until the dual graph (with a vertex for each square Which polyominoes fold into a unit cube? Aichholzer et and edges for uncut edge adjacency) is a tree; we call al. [ABD+18] introduced this problem at CCCG 2015, these tree-shaped polyominoes. -

Area and Perimeter

2.2 Area and Perimeter Perimeter is easy to define: it’s the distance all the way round the edge of a shape (land sometimes has a “perimeter fence”). (The perimeter of a circle is called its circumference.) Some pupils will want to mark a dot where they start measuring/counting the perimeter so that they know where to stop. Some may count dots rather than edges and get 1 unit too much. Area is a harder concept. “Space” means 3-d to most people, so it may be worth trying to avoid that word: you could say that area is the amount of surface a shape covers. (Surface area also applies to 3-d solids.) (Loosely, perimeter is how much ink you’d need to draw round the edge of the shape; area is how much ink you’d need to colour it in.) It’s good to get pupils measuring accurately-drawn drawings or objects to get a feel for how small an area of 20 cm2, for example, actually is. For comparisons between volume and surface area of solids, see section 2:10. 2.2.1 Draw two rectangles (e.g., 6 × 4 and 8 × 3) on a They’re both rectangles, both contain the same squared whiteboard (or squared acetate). number of squares, both have same area. “Here are two shapes. What’s the same about them One is long and thin, different side lengths. and what’s different?” Work out how many squares they cover. (Imagine Infinitely many; e.g., 2.4 cm by 10 cm. -

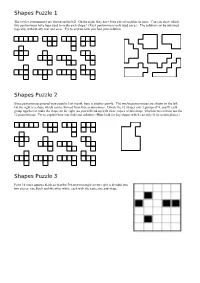

Shapes Puzzle 1

Shapes Puzzle 1 The twelve pentominoes are shown on the left. On the right, they have been placed together in pairs. Can you show which two pentominoes have been used to make each shape? (Each pentomino is only used once.) The solution can be obtained logically, without any trial and error. Try to explain how you find your solution. Shapes Puzzle 2 Since pentominoes proved very popular last month, here is another puzzle. The twelve pentominoes are shown on the left. On the right is a shape which can be formed from four pentominoes. Divide the 12 shapes into 3 groups of 4, and fit each group together to make the shape on the right (so you will end up with three copies of this shape, which between them use the 12 pentominoes. Try to explain how you find your solution. (Hint: look for key shapes which can only fit in certain places.) Shapes Puzzle 3 Paint 10 more squares black so that the 5x6 unit rectangle on the right is divided into two pieces: one black and the other white, each with the same size and shape. Shapes Puzzle 4 I have a piece of carpet 10m square. I want to use it to carpet my lounge, which is 12m by 9m, but has a fixed aquarium 1m by 8m in the centre, as shown. Show how I can cut the carpet into just two pieces which I can then use to carpet the room exactly. [Hint: The cut is entirely along the 1m gridlines shown.] Shapes Puzzle 5 Can you draw a continuous line which passes through every square in the grid on the right, except the squares which are shaded in? (The grid on the left has been done for you as an example.) Try to explain the main steps towards your solution, even if you can't explain every detail. -

Topological, Geometric and Combinatorial Properties of Random Polyominoes

CENTRO DE INVESTIGACIÓN EN MATEMÁTICAS DOCTORAL THESIS Topological, geometric and combinatorial properties of random polyominoes Author: Supervisors: Érika B. ROLDÁN ROA Dr. Matthew KAHLE (OSU) Dr. Víctor PÉREZ-ABREU C. (CIMAT) TESIS para obtener el grado académico de Doctor en Ciencias en Probabilidad y Estadística May 2018 iii Declaration of Authorship I, Érika B. ROLDÁN ROA, declare that this thesis titled, “Topological, geometric and combinatorial properties of random polyominoes” and the work presented in it are my own. I confirm that: • This work was done wholly while in candidature for a research degree at this Institute. • Where I have consulted the published work of others, this is always clearly attributed. • In particular, I indicate in any result that is not mine, or in joint work with my advisors and collaborators, the names of the authors and where it has been originally published (if I know it). The results that are not mine, or in joint work with my advisors and collaborators, are numerated with letters. • All the images and figures contained in this work have been generated by me, except for Figure 1 and the content of Table 2.2.2. • The algorithms and the code written for implementing them that were used for the figure constructions, computational experiments, and random simulations are my own work. • Just in case God(s) exists, my parents and grandparents have always told me that I should acknowledge that She is the real author of everything created in this Universe. Signed: Date: v “Toys are not really as innocent as they look. Toys and games are precursors to serious ideas.” Charles Eames FIGURE 1: Our story begins in 1907 with the 74th Canterbury puz- zle [8]. -

By Kate Jones, RFSPE

ACES: Articles, Columns, & Essays A Periodic Table of Polyform Puzzles by Kate Jones, RFSPE What are polyform puzzles? A branch of mathematics known as Combinatorics deals with systems that encompass permutations and combinations. A subcategory covers tilings, or tessellations, that are formed of mosaic-like pieces of all different shapes made of the same distinct building block. A simple example is starting with a single square as the building block. Two squares joined are a domino. Three squares joined are a tromino. Compare that to one proton/electron pair forming hydrogen, and two of them becoming helium, etc. The puzzles consist of combining all the members of a set into coherent shapes, in as many different variations as possible. Here is a table of geometric families of polyform sets that provide combinatorial puzzle challenges (“recreational mathematics”) developed and produced by my company, Kadon Enterprises, Inc., over the last 40 years (1979-2019). See www.gamepuzzles.com. The POLYOMINO Series Figure 1: The polyominoes orders 1 through 5. 36 TELICOM 31, No. 3 — Third Quarter 2019 Figure 2: Polyominoes orders 1-5 (Poly-5); order 6 (hexominoes, Sextillions); order 7 (heptominoes); and order 8 (octominoes)—21, 35, 108 and 369 pieces, respectively. Polyominoes named by Solomon Golomb in 1953. Product names by Kate Jones. TELICOM 31, No. 3 — Third Quarter 2019 37 Figure 3: Left—Polycubes orders 1 through 4. Right—Polycubes order 5 (the planar pentacubes, Kadon’s Quintillions set). Figure 4: Above—the 18 non-planar pentacubes (Super Quintillions). Below—the 166 hexacubes. 38 TELICOM 31, No. 3 — Third Quarter 2019 The POLYIAMOND Series Figure 5: The polyiamonds orders 1 through 7. -

Tiling with Polyominoes, Polycubes, and Rectangles

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2015 Tiling with Polyominoes, Polycubes, and Rectangles Michael Saxton University of Central Florida Part of the Mathematics Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Masters Thesis (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Saxton, Michael, "Tiling with Polyominoes, Polycubes, and Rectangles" (2015). Electronic Theses and Dissertations, 2004-2019. 1438. https://stars.library.ucf.edu/etd/1438 TILING WITH POLYOMINOES, POLYCUBES, AND RECTANGLES by Michael A. Saxton Jr. B.S. University of Central Florida, 2013 A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in the Department of Mathematics in the College of Sciences at the University of Central Florida Orlando, Florida Fall Term 2015 Major Professor: Michael Reid ABSTRACT In this paper we study the hierarchical structure of the 2-d polyominoes. We introduce a new infinite family of polyominoes which we prove tiles a strip. We discuss applications of algebra to tiling. We discuss the algorithmic decidability of tiling the infinite plane Z × Z given a finite set of polyominoes. We will then discuss tiling with rectangles. We will then get some new, and some analogous results concerning the possible hierarchical structure for the 3-d polycubes. ii ACKNOWLEDGMENTS I would like to express my deepest gratitude to my advisor, Professor Michael Reid, who spent countless hours mentoring me over my time at the University of Central Florida. -

Pentomino -- from Wolfram Mathworld 08/14/2007 01:42 AM

Pentomino -- from Wolfram MathWorld 08/14/2007 01:42 AM Search Site Discrete Mathematics > Combinatorics > Lattice Paths and Polygons > Polyominoes Other Wolfram Sites: Algebra Wolfram Research Applied Mathematics Demonstrations Site Calculus and Analysis Pentomino Discrete Mathematics Integrator Foundations of Mathematics Tones Geometry Functions Site History and Terminology Wolfram Science Number Theory more… Probability and Statistics Recreational Mathematics Download Mathematica Player >> Topology Complete Mathematica Documentation >> Alphabetical Index Interactive Entries Show off your math savvy with a Random Entry MathWorld T-shirt. New in MathWorld MathWorld Classroom About MathWorld Send a Message to the Team Order book from Amazon 12,698 entries Fri Aug 10 2007 A pentomino is a 5-polyomino. There are 12 free pentominoes, 18 one-sided pentominoes, and 63 fixed pentominoes. The twelve free pentominoes are known by the letters of the alphabet they most closely resemble: , , , , , , , , , , , and (Gardner 1960, Golomb 1995). Another common naming convention replaces , , , and with , , , and so that all letters from to are used (Berlekamp et al. 1982). In particular, in the life cellular automaton, the -pentomino is always known as the -pentomino. The , , and pentominoes can also be called the straight pentomino, L-pentomino, and T-pentomino, respectively. SEE ALSO: Domino, Hexomino, Heptomino, Octomino, Polyomino, Tetromino, Triomino. [Pages Linking Here] REFERENCES: Ball, W. W. R. and Coxeter, H. S. M. Mathematical Recreations and Essays, 13th ed. New York: Dover, pp. 110-111, 1987. Berlekamp, E. R.; Conway, J. H; and Guy, R. K. Winning Ways for Your Mathematical Plays, Vol. 1: Adding Games, 2nd ed. Wellesley, MA: A K Peters, 2001. Berlekamp, E. -

Packing a Rectangle with Congruent N-Ominoes

JOURNAL OF COMBINATORIAL THEORY 7, 107--115 (1969) Packing a Rectangle with Congruent N-ominoes DAVID A. KLARNER McMas'ter University, Hamilton, Ontario, Canada Communicated b)' Gian-Carlo Rota Received April 1968 ABSTRACT Golomb defines a polyomino (a generalized domino) to be a finite set of rook-wise connected cells in an infinite chessboard. In this note we discuss problems concerning the packing of rectangles with congruent polyominoes. Many of these problems are unsolved. The square lattice consists of lattice points, edges, and cells. A lattice point is a point with integer coordinates in the Cartesian plane, edge is a unit line segment that connects two lattice points, and a cell is a point set consisting of the boundary and interior points of a unit square having its vertices at lattice points. An n-omino is a union of n cells that is connected and has no finite cut set. An n-omino X packs an a ?z b rectangle R if R can be expressed as the union of n-ominoes X1, X 2 ,... such that Xi is congruent to X(i = 1,2,...) and 3Li, X~ (i 5./) have no common interior points. If X packs a rectangle with area kn, we say k is a packing number.for X and write P(X) for the set of all packing numbers for X. If X does not pack a rectangle we write P(X) == 0; also, if k ~ P(X), jk c P(X) (j = 1,2,...), so that P(X) 7~ f) implies P(X) is infinite.