330343-002 High Performance ZLIB Compression on Intel® Architecture Processors White Paper

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Hardware Based Compression in Ceph OSD with BTRFS

Weigang Li and Tushar Gohad, Data Center Group, Intel Corporation. 2016 Storage Developer Conference. © Intel Corp. All Rights Reserved.

Credits: This work wouldn't have been possible without contributions from Reddy Chagam, Brian Will, Praveen Mosur, and Edward Pullin.

Agenda
• Ceph: A Quick Primer
• Storage Efficiency and Security Features
• Offload Mechanisms: Software and Hardware
• Compression in Ceph OSD with BTRFS
• Compression in BTRFS and Ceph
• Hardware Acceleration with QAT
• PoC implementation
• Performance Results
• Key Takeaways

Ceph
• Open-source, object-based scale-out storage system
• Software-defined, hardware-agnostic: runs on commodity hardware
• Object, Block and File support in a unified storage cluster
• Highly durable, available: replication, erasure coding
• Replicates and re-balances dynamically

Image source: http://ceph.com/ceph-storage

Ceph
• Scalability: CRUSH data placement, no single point of failure (POF)
• Enterprise features: snapshots, cloning, mirroring
• Most popular block storage for OpenStack use cases
• 10 years of hardening, vibrant community

Source: http://www.openstack.org/assets/survey/April-2016-User-Survey-Report.pdf

Ceph: Architecture (diagram: OSDs running on btrfs, xfs, and ext4 POSIX backends or on Bluestore with a KV store, each backed by a disk on commodity servers, alongside nodes labeled M) …

GNUPG Open Source Encryption on HP NonStop

Damian Ward, NonStop Solutions Architect / BITUG Vice Chairman. Confidential.

Introduction: What am I going to talk about today
• About Me
• About VocaLink
• History of encryption
• PGP to GNUPG
• PGP encryption walkthrough
• Installing GNUPG
• Use Case
• Questions..?

Please feel free to ask as we go through the presentation.

Introduction: About your presenter
• Damian Ward
• 20+ years HP NonStop and Payments experience
• Career spanning: Operations, Application Programming, System Management, Programme Management, Technical Specialist, Solutions Architect, Enterprise Architect, Infrastructure Architect
• Specialities: HP NonStop systems and architecture, Enterprise Architecture, Encryption, Availability Management, ATM Systems, Payments Processing, Capacity Planning, System modelling, Fraud, Mobile and Internet technologies, Programming, Emerging Technologies and Robotics
• BITUG Vice Chairman 2011
• BITUG Chairman 2012

Introduction: VocaLink cards processing landscape (diagram). Recoverable labels: direct connection to in-house processing system; indirect ATM acquirer and card issuer connection (via VocaLinkCSB); FIS Connex Advantage; connections to mobile operators; switch with resilient telecommunication connections to each customer; indirect ATM connection (via third party processor); direct connection to Post Office via TNS; connections to overseas schemes and international banks; ATM and POS acquiring and issuing connections via gateway connections to international schemes. …

Intel® Quick Sync Video and FFmpeg Installation and Validation Guide

White paper

Introduction

Intel® Quick Sync Video technology on Intel® Iris™ Pro Graphics and Intel® HD graphics provides transcode acceleration on Linux* systems in FFmpeg* 2.8 and later editions. This paper is a detailed step-by-step guide to enabling h264_qsv, mpeg2_qsv, and hevc_qsv hardware accelerated codecs in the FFmpeg framework. For a quicker overview, please see this article.

Performance note: The *_qsv implementations are intended to provide easy access to Intel hardware capabilities for FFmpeg users, but are less efficient than custom applications optimized for Intel® Media Server Studio.

Document note: Monospace type = command line inputs/outputs. Pink = highlights to call special attention to important command line I/O details.

Getting Started

1. Install Intel Media Server Studio for Linux. Download from software.intel.com/intel-media-server-studio. This is a prerequisite for the *_qsv codecs as it provides the foundation for encode acceleration. See the next chapter for more info on edition choices. Note: Professional edition install is required for hevc_qsv.
2. Get the latest FFmpeg source from https://www.FFmpeg.org/download.html. Intel Quick Sync Video support is available in FFmpeg 2.8 and later editions. The install steps outlined below were verified with ffmpeg release 3.2.2.
3. Configure FFmpeg with “--enable-libmfx --enable-nonfree”, build, and install. This requires copying include files to /opt/intel/mediasdk/include/mfx and adding a libmfx.pc file. More details below.
4. Test transcode with an accelerated codec such as “-vcodec h264_qsv” on the FFmpeg command line. …

FPGA-Based ZLIB/GZIP Compression as an NVMe Namespace

Saeed Fouladi Fard, Eideticom. 2018 Storage Developer Conference. © Eidetic Communications Inc. All Rights Reserved.

Why NVMe
❒ NVMe: A standard specification for accessing non-volatile media over PCIe
❒ High-speed and CPU efficient
❒ In-box drivers available for major OSes
❒ Allows peer-to-peer data transfers
❒ Reduces system memory access
❒ Frees CPU time

Why NVMe, Cont'd
❒ NVMe can be used as a high speed platform for using and sharing accelerators with low overhead
❒ Easy to use

(Diagram: host CPU and memory connected over PCIe to NVMe SSDs and NVMe accelerators.)

Hardware Platform
❒ Called NoLoad™ = NVMe Offload
❒ NoLoad™ can present FPGA accelerators as NVMe namespaces to the host computer or peers
❒ Accelerator integration, verification, and discovery is mostly automated
❒ Host software can be added to use the accelerator

(Diagram: Streamlined Accelerator Integration.)

NoLoad™ Software
❒ Userspace: both kernel and userspace frameworks supported
❒ OS: use inbox NVMe driver (no changes)
❒ Hardware: NoLoad™ Hardware Eval Kits

(Diagram labels: Management, Applications, nvme-cli, nvme-of, etc, libnoload, SPDK.)

Accelerators as NVMe devices
NVMe NSs: 3 Optane SSDs, 3 Compression Accels, 1 RAM-Drive

Peer-to-Peer Access
❒ P2P transfers bypass CPU memory and other PCIe subsystems
❒ P2P uses PCIe EP's memory (e.g., CMB, BAR)
❒ A P2P capable Root Complex or PCIe switch is needed

(Diagram: Legacy Datapath versus Peer-2-Peer Datapath between SSD A, SSD B, and NoLoad with Compression.) …

Scripting the OpenSSH, SFTP, and SCP Utilities on i (Scott Klement)

Presented by Scott Klement, http://www.scottklement.com. © 2010-2015, Scott Klement.

Why do programmers get Halloween and Christmas mixed-up? 31 OCT = 25 DEC

Objectives Of This Session
• Setting up OpenSSH on i
• The OpenSSH tools: SSH, SFTP and SCP
• How do you use them?
• How do you automate them so they can be run from native programs (CL programs)

What is SSH

SSH is short for "Secure Shell." Created by:
• Tatu Ylönen (SSH Communications Corp)
• Björn Grönvall (OSSH, short lived)
• OpenBSD team (led by Theo de Raadt)

The term "SSH" can refer to a secured network protocol. It also can refer to the tools that run over that protocol.
• Secure replacement for "telnet"
• Secure replacement for "rcp" (copying files over a network)
• Secure replacement for "ftp"
• Secure replacement for "rexec" (RUNRMTCMD)

What is OpenSSH

OpenSSH is an open source (free) implementation of SSH.
• Developed by the OpenBSD team, but it's available for all major OSes
• Included with many operating systems: BSD, Linux, AIX, HP-UX, MacOS X, Novell NetWare, Solaris, Irix… and yes, IBM i.
• Integrated into appliances (routers, switches, etc): HP, Nokia, Cisco, Digi, Dell, Juniper Networks

("Puffy": OpenBSD's mascot.)

The #1 SSH implementation in the world.
• More than 85% of all SSH installations.
• Measured by ScanSSH software.
• You can be sure your business partners who use SSH will support OpenSSH

Included with IBM i

These must be installed (all are free and shipped with IBM i **)
• 57xx-SS1, option 33 = PASE
• 5733-SC1, *BASE = Portable Utilities
• 5733-SC1, option 1 = OpenSSH, OpenSSL, zlib
• 57xx-SS1, option 30 = QShell (useful, not required)

** In v5r3, 5733-SC1 had to be ordered separately (no charge). In v5r4 or later, it's shipped automatically. …

zlib Home Site

http://zlib.net/

A Massively Spiffy Yet Delicately Unobtrusive Compression Library (Also Free, Not to Mention Unencumbered by Patents) (Not Related to the Linux zlibc Compressing File-I/O Library)

Welcome to the zlib home page, web pages originally created by Greg Roelofs and maintained by Mark Adler. If this page seems suspiciously similar to the PNG Home Page, rest assured that the similarity is completely coincidental. No, really.

zlib was written by Jean-loup Gailly (compression) and Mark Adler (decompression).

Current release: zlib 1.2.6, January 29, 2012

Version 1.2.6 has many changes over 1.2.5, including these improvements:
• gzread() can now read a file that is being written concurrently
• gzgetc() is now a macro for increased speed
• Added a 'T' option to gzopen() for transparent writing (no compression)
• Added deflatePending() to return the amount of pending output
• Allow deflateSetDictionary() and inflateSetDictionary() at any time in raw mode
• deflatePrime() can now insert bits in the middle of the stream
• ./configure now creates a configure.log file with all of the results
• Added a ./configure --solo option to compile zlib with no dependency on any libraries
• Fixed a problem with large file support macros
• Fixed a bug in contrib/puff
• Many portability improvements

You can also look at the complete Change Log.

Version 1.2.5 fixes bugs in gzseek() and gzeof() that were present in version 1.2.4 (March 2010). All users are encouraged to upgrade immediately.

Version 1.2.4 has many changes over 1.2.3, including these improvements: …
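The release notes above mention preset dictionaries (deflateSetDictionary() / inflateSetDictionary()) and urge users to upgrade. As a rough illustration only, the sketch below uses Python's standard `zlib` bindings rather than the C API listed on the page: it reports which zlib version the interpreter was built against and is running with, and round-trips data using a preset dictionary through the `zdict` argument. The helper names and the sample data are hypothetical, and the sketch uses the default zlib container, not the raw mode the 1.2.6 note refers to.

```python
import zlib


def report_versions():
    """Return (compile-time, run-time) zlib version strings as seen by Python."""
    return zlib.ZLIB_VERSION, zlib.ZLIB_RUNTIME_VERSION


def compress_with_dictionary(data: bytes, dictionary: bytes) -> bytes:
    """Deflate `data` with a preset dictionary (zlib container format)."""
    comp = zlib.compressobj(zdict=dictionary)
    return comp.compress(data) + comp.flush()


def decompress_with_dictionary(blob: bytes, dictionary: bytes) -> bytes:
    """Inflate `blob`; the same dictionary must be supplied by the reader."""
    decomp = zlib.decompressobj(zdict=dictionary)
    return decomp.decompress(blob) + decomp.flush()


if __name__ == "__main__":
    # Hypothetical sample: a dictionary of strings likely to recur in the data.
    dictionary = b"GET /index.html HTTP/1.1\r\nHost: "
    message = b"GET /index.html HTTP/1.1\r\nHost: example.org\r\n\r\n"
    blob = compress_with_dictionary(message, dictionary)
    assert decompress_with_dictionary(blob, dictionary) == message
    print("zlib versions (build, runtime):", report_versions())
```

A preset dictionary mainly helps with short inputs that share content with the dictionary; both sides have to agree on the dictionary bytes out of band.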

z/OS OpenSSH User's Guide

z/OS Version 2 Release 4. z/OS OpenSSH User's Guide. IBM. SC27-6806-40.

Note: Before using this information and the product it supports, read the information in “Notices” on page 503.

This edition applies to Version 2 Release 4 of z/OS (5650-ZOS) and to all subsequent releases and modifications until otherwise indicated in new editions. Last updated: 2020-11-16.

© Copyright International Business Machines Corporation 2015, 2019. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents
• Figures
• Tables
• About this document
• Who should use this document?
• z/OS information
• Discussion list
• How to send your comments to IBM
• If you have a technical problem
…

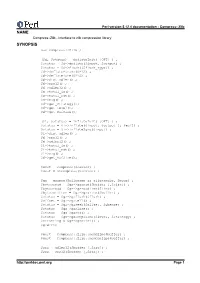

Name Synopsis

Perl version 5.12.4 documentation - Compress::Zlib (http://perldoc.perl.org)

NAME

Compress::Zlib - Interface to zlib compression library

SYNOPSIS

use Compress::Zlib ;

($d, $status) = deflateInit( [OPT] ) ;
$status = $d->deflate($input, $output) ;
$status = $d->flush([$flush_type]) ;
$d->deflateParams(OPTS) ;
$d->deflateTune(OPTS) ;
$d->dict_adler() ;
$d->crc32() ;
$d->adler32() ;
$d->total_in() ;
$d->total_out() ;
$d->msg() ;
$d->get_Strategy();
$d->get_Level();
$d->get_BufSize();

($i, $status) = inflateInit( [OPT] ) ;
$status = $i->inflate($input, $output [, $eof]) ;
$status = $i->inflateSync($input) ;
$i->dict_adler() ;
$d->crc32() ;
$d->adler32() ;
$i->total_in() ;
$i->total_out() ;
$i->msg() ;
$d->get_BufSize();

$dest = compress($source) ;
$dest = uncompress($source) ;

$gz = gzopen($filename or filehandle, $mode) ;
$bytesread = $gz->gzread($buffer [,$size]) ;
$bytesread = $gz->gzreadline($line) ;
$byteswritten = $gz->gzwrite($buffer) ;
$status = $gz->gzflush($flush) ;
$offset = $gz->gztell() ;
$status = $gz->gzseek($offset, $whence) ;
$status = $gz->gzclose() ;
$status = $gz->gzeof() ;
$status = $gz->gzsetparams($level, $strategy) ;
$errstring = $gz->gzerror() ;
$gzerrno

$dest = Compress::Zlib::memGzip($buffer) ;
$dest = Compress::Zlib::memGunzip($buffer) ;

$crc = adler32($buffer [,$crc]) ;
$crc = crc32($buffer [,$crc]) ;
$crc = adler32_combine($crc1, $crc2, $len2);
$crc = crc32_combine($adler1, $adler2, $len2)

my $version = Compress::Raw::Zlib::zlib_version();

DESCRIPTION

The …
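The synopsis above follows zlib's streaming pattern: create a deflate or inflate object, feed it input in pieces, then flush. For readers who want something runnable, here is a minimal sketch of the same pattern using Python's standard `zlib` module instead of Perl's Compress::Zlib. The function names and the chunk size are illustrative assumptions, not part of either library's API.

```python
import zlib


def deflate_stream(chunks, level=6):
    """Compress an iterable of byte chunks, like deflateInit/deflate/flush."""
    d = zlib.compressobj(level)
    out = [d.compress(chunk) for chunk in chunks]
    out.append(d.flush())  # emit whatever is still buffered and end the stream
    return b"".join(out)


def inflate_stream(blob, chunk_size=64):
    """Decompress `blob` in small pieces, like inflateInit/inflate."""
    i = zlib.decompressobj()
    out = []
    for offset in range(0, len(blob), chunk_size):
        out.append(i.decompress(blob[offset:offset + chunk_size]))
    out.append(i.flush())
    return b"".join(out)


def running_checksums(chunks):
    """Update CRC-32 and Adler-32 chunk by chunk, as crc32($buffer, $crc) does."""
    crc = 0     # initial CRC-32 value
    adler = 1   # initial Adler-32 value
    for chunk in chunks:
        crc = zlib.crc32(chunk, crc)
        adler = zlib.adler32(chunk, adler)
    return crc, adler


if __name__ == "__main__":
    parts = [b"hello, ", b"streaming ", b"zlib " * 20]
    whole = b"".join(parts)
    assert inflate_stream(deflate_stream(parts)) == whole
    assert running_checksums(parts) == (zlib.crc32(whole), zlib.adler32(whole))
```

Feeding the checksum functions their previous result is what lets a checksum be computed over data that never sits in memory all at once.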

Data Compression in Solid State Storage

John Fryar. Santa Clara, CA, August 2013.

Acknowledgements

This presentation would not have been possible without the counsel, hard work and graciousness of the following individuals and/or organizations: Raymond Savarda, Sandgate Technologies.

Disclaimers

The opinions expressed herein are those of the author and do not necessarily represent those of any other organization or individual unless specifically cited. A thorough attempt to acknowledge all sources has been made. That said, we're all human…

Learning Objectives

At the conclusion of this tutorial the audience will have been exposed to:
• The different types of Data Compression
• Common Data Compression Algorithms
• The Deflate/Inflate (GZIP/GUNZIP) algorithms in detail
• Implementation Options (Software/Hardware)
• Impacts of design parameters in Performance
• SSD benefits and challenges
• Resources for Further Study

Agenda
• Background, Definitions, & Context
• Data Compression Overview
• Data Compression Algorithm Survey
• Deflate/Inflate (GZIP/GUNZIP) in depth
• Software Implementations
• HW Implementations
• Tradeoffs & Advanced Topics
• SSD Benefits and Challenges
• Conclusions

Definitions

| Item | Description | Comments |
| --- | --- | --- |
| Open System | A system which will compress data for use by other entities, i.e. the compressed data will exit the system | Must strictly adhere to standards on compress / decompress algorithms. Interoperability among vendors mandated for Open Systems. |
| Closed System | A system which utilizes compressed data internally but does not expose compressed data to the outside world | Can support a limited, optimized subset of standard. Also allows custom algorithms. No interoperability req'd. |

…

94 Ztidbits (Zenterprise Data Compression)

* The zEDC Express is an optional feature, exclusive to the zEC12 and zBC12, designed to provide hardware-based acceleration for data compression and decompression for the enterprise, helping to improve cross platform data exchange, reduce CPU consumption, and save disk space.
* A minimum of one feature can be ordered and a maximum of eight can be installed on the system, on the PCIe I/O drawer.
  - Up to two zEDC Express features per I/O domain can be installed.
  - A zEDC Express feature can be shared by up to 15 LPARs.
* Using the new z/OS zEnterprise Data Compression (zEDC) capability with a new I/O feature on the zEC12 or zBC12, zEDC Express enables customers to do hardware based data compression.

Operating system requirements: Requires z/OS 2.1 and new zEDC for z/OS feature
  - z/OS V1.13 and V1.12 offer software decompression support only
  - Easy to set up and use: transparent to application software; use policy (DATACLASS) to set up compression.
  - No changes to access method

Server requirements: Exclusive to zEC12 (with Driver 15E) and zBC12
  - New zEDC Express feature for PCIe I/O drawer (FC#0420)
  - One compression coprocessor per zEDC Express feature
  - Each feature can be shared across up to 15 LPARs
  - Recommended minimum configuration per server is two features
  - Up to 8 features available on zEC12 or zBC12
  - For best performance, feature is needed on all systems accessing the compressed data

SOD: In a future z/VM deliverable IBM plans to offer z/VM support for guest exploitation of the zEDC Express feature on zEC12 & zBC12. …

APEx: Automated Inference of Error Specifications for C APIs

Yuan Kang (Columbia University, USA), Baishakhi Ray (University of Virginia, USA), Suman Jana (Columbia University, USA)

ABSTRACT

Although correct error handling is crucial to software robustness and security, developers often inadvertently introduce bugs in error handling code. Moreover, such bugs are hard to detect using existing bug-finding tools without correct error specifications. Creating error specifications manually is tedious and error-prone. In this paper, we present a new technique that automatically infers error specifications of API functions based on their usage patterns in C programs. Our key insight is that error-handling code tends to have fewer branching points and program statements than the code implementing regular functionality. Our scheme leverages this property to automatically identify error handling code at API call sites …

There are several existing static and dynamic analysis techniques to automatically detect incorrect handling of API function failures. For example, several prior projects used dynamic fault injection to simulate function failures to check whether the test program handles such failures correctly [11, 35, 23]. Another popular approach for detecting error handling bugs is to use static analysis to check whether failures are properly detected and handled [33, 17, 20, 19]. For example, EPEx, a system designed by Jana et al. [19], uses under-constrained symbolic execution to explore error paths and leverages a program-independent error oracle to detect error handling bugs. However, all such tools need the error specifications of the API functions as input. …

Compression Device Drivers Release 19.11.10

Release 19.11.10, Sep 06, 2021

CONTENTS

1 Compression Device Supported Functionality Matrices
  1.1 Supported Feature Flags
2 ISA-L Compression Poll Mode Driver
  2.1 Features
  2.2 Limitations
  2.3 Installation
  2.4 Initialization
3 OCTEON TX ZIP Compression Poll Mode Driver
  3.1 Features
  3.2 Limitations
  3.3 Supported OCTEON TX SoCs
  3.4 Steps To Setup Platform
  3.5 Installation
  3.6 Initialization
4 Intel(R) QuickAssist (QAT) Compression Poll Mode Driver
  4.1 Features
  4.2 Limitations
  4.3 Installation
5 ZLIB Compression Poll Mode Driver
  5.1 Features
  5.2 Limitations
  5.3 Installation
  5.4 Initialization

CHAPTER ONE: COMPRESSION DEVICE SUPPORTED FUNCTIONALITY MATRICES

1.1 Supported Feature Flags

Table 1.1: Features availability in compression drivers. Driver columns: isal, octeontx, qat, zlib. The Y marks are listed in source order; which driver column each mark belongs to is not recoverable from this excerpt, except where all four drivers are marked.

Feature: marks
HW Accelerated: Y Y
CPU SSE: Y
CPU AVX: Y
CPU AVX2: Y
CPU AVX512: Y
CPU NEON: (none)
Stateful Compression: (none)
Stateful Decompression: Y
Pass-through: Y
OOP SGL In SGL Out: Y Y
OOP SGL In LB Out: Y Y
OOP LB In SGL Out: Y Y
Deflate: Y Y Y Y
LZS: (none)
Adler32: Y Y
Crc32: Y Y
Adler32&Crc32: Y
Fixed: Y Y Y Y
Dynamic: Y Y Y Y

Note:
• “Pass-through” feature flag refers to the ability of the PMD to let input buffers pass-through it, copying the input to the output, without making any modifications to it (no compression done).
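Several of the feature flags listed above (Deflate, Fixed, Dynamic, Adler32, Crc32) name properties of the DEFLATE stream and its container rather than anything specific to one driver. The sketch below shows what they correspond to at the byte level. It uses Python's standard `zlib` module, not the compressdev API, and the helper names are hypothetical. It assumes compressible input, so that the encoder does not fall back to stored blocks.

```python
import zlib

# Z_FIXED forces fixed Huffman codes; fall back to its numeric value (4)
# in case an older Python build does not export the constant.
Z_FIXED = getattr(zlib, "Z_FIXED", 4)


def deflate(data, strategy=zlib.Z_DEFAULT_STRATEGY, wbits=zlib.MAX_WBITS):
    """Compress `data`; wbits picks the container (15 zlib, 31 gzip, -15 raw)."""
    c = zlib.compressobj(zlib.Z_DEFAULT_COMPRESSION, zlib.DEFLATED,
                         wbits, zlib.DEF_MEM_LEVEL, strategy)
    return c.compress(data) + c.flush()


def first_block_type(raw_deflate):
    """Return BTYPE of the first block: 0 stored, 1 fixed, 2 dynamic Huffman."""
    # Bit 0 of the first byte is BFINAL; bits 1-2 are BTYPE (RFC 1951).
    return (raw_deflate[0] >> 1) & 0b11


def trailer_adler32(zlib_blob):
    """A zlib container (RFC 1950) ends with a big-endian Adler-32."""
    return int.from_bytes(zlib_blob[-4:], "big")


def trailer_crc32(gzip_blob):
    """A gzip container (RFC 1952) ends with little-endian CRC-32, then ISIZE."""
    return int.from_bytes(gzip_blob[-8:-4], "little")


if __name__ == "__main__":
    data = b"compress me, compress me, compress me! " * 50
    raw_fixed = deflate(data, strategy=Z_FIXED, wbits=-15)
    print("first block type with Z_FIXED:", first_block_type(raw_fixed))
    print("first block type by default:", first_block_type(deflate(data, wbits=-15)))
    assert trailer_adler32(deflate(data)) == zlib.adler32(data)
    assert trailer_crc32(deflate(data, wbits=31)) == zlib.crc32(data)
```

The checksum is a property of the container wrapped around the DEFLATE data, which is why a driver can support one, both, or neither independently of the Huffman modes.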