Book of Abstracts

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Application of Statistics in Clinical Research with Reference to the Use of Computer Programs

UNIVERSITY OF BELGRADE, FACULTY OF MATHEMATICS. Dušica V. Gavrilović. Application of Statistics in Clinical Research with Reference to the Use of Computer Programs. Master's thesis. Mentor: Prof. Dr. Vesna Jevremović. Belgrade, 2013. Acknowledgments. This thesis would have been very difficult to write without expert support, high-quality suggestions and revision, the help of friends, the understanding of colleagues, and the endless support of my family. For these reasons I wish to thank: My mentor, Prof. Dr. Vesna Jevremović of the Faculty of Mathematics, University of Belgrade, who not only originated the idea for this thesis but also supported its realization over many years. Her incredible persistence, many suggestions, inexhaustible optimism, professionalism, and understanding were my constant source of strength on this master's journey. Committee member Asst. Prof. Dr. Zorica Stanimirović of the Faculty of Mathematics, University of Belgrade, for her exceptional promptness, expert review, understanding, patience, and much useful advice. Committee member Marko Obradović, MSc, of the Faculty of Mathematics, University of Belgrade, for his professional and friendly support and his readiness to cooperate. Radojka Pavlović, graduate mathematician, head of the Student Office of the Faculty of Mathematics, University of Belgrade, for her persistence, resourcefulness, and creativity in finding ideas, proposals, and solutions on the way to completing this master's thesis. I have always valued our long friendship immensely, and it has always meant a great deal to me. Zorana Bizetić, graduate mathematician, head of the Data Center of the Institute for Oncology and Radiology of Serbia, for her persistence, ideas, detailed revision, useful suggestions, and support of every kind. Even when she is incredibly, or boringly, or cleverly persistent, I have loved her dearly for a long time, almost my whole life. Jelica Novaković, MSc in Biology, for her patience, revision, countless corrections, and technical support of every kind.
Thank you for the smile, the watchful eye in the small hours, the excellent food that brought me back to life, the Nescafé with foam, and the transfusion of energy when I was running on empty.

SEASONAL ADJUSTMENT USING the X12 PROCEDURE Tammy Jackson and Michael Leonard SAS Institute, Inc

SEASONAL ADJUSTMENT USING THE X12 PROCEDURE Tammy Jackson and Michael Leonard SAS Institute, Inc. Introduction The U.S. Census Bureau has developed a new seasonal adjustment/decomposition algorithm called X-12-ARIMA that greatly enhances the old X-11 algorithm. The X-12-ARIMA method modifies the X-11 variant of Census Method II by J. Shiskin, A.H. Young, and J.C. Musgrave of February 1967 and the X-11-ARIMA program based on the methodological research developed by Estela Bee Dagum, Chief of the Seasonal Adjustment and Time Series Staff of Statistics Canada, September 1979. The X12 procedure is a new addition to SAS/ETS software that implements the X-12-ARIMA algorithm developed by the U.S. Census Bureau (Census X12). With the help of employees of the Census Bureau, SAS employees have incorporated the Census X12 algorithm into the SAS System. [...] program are regARIMA modeling, model diagnostics, seasonal adjustment using enhanced X-11 methodology, and post-adjustment diagnostics. Statistics Canada's X-11 method fits an ARIMA model to the original series, then uses the model forecast and extends the original series. This extended series is then seasonally adjusted by the standard X-11 seasonal adjustment method. The extension of the series improves the estimation of the seasonal factors and reduces revisions to the seasonally adjusted series as new data become available. Seasonal adjustment of a series is based on the assumption that seasonal fluctuations can be measured in the original series (O_t, t = 1, ..., n) and separated from the trend cycle, trading-day, and irregular fluctuations. The seasonal component of
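The "extend, then adjust" idea in this excerpt can be illustrated with a minimal Python sketch. It is not the X-11, X-11-ARIMA, or X-12-ARIMA algorithm, and it does not use PROC X12. As loudly labeled assumptions, a seasonal-naive forecast with drift stands in for the ARIMA forecast, a centered 2x12 moving average stands in for the X-11 trend filters, and the decomposition is additive. The function names and the synthetic series are hypothetical.

```python
import numpy as np

def extend_series(y, period=12, horizon=12):
    """Append a forecast to y. ASSUMPTION: a seasonal-naive forecast with
    drift stands in for the ARIMA forecast used by X-11-ARIMA."""
    y = np.asarray(y, dtype=float)
    # Average year-over-year change, estimated from the last two seasons.
    drift = np.mean(y[-period:] - y[-2 * period:-period])
    steps = np.arange(horizon)
    forecast = y[len(y) - period + steps % period] + drift * (steps // period + 1)
    return np.concatenate([y, forecast])

def centered_ma(y, period=12):
    """Centered 2 x period moving average (trend estimate); NaN at both ends."""
    weights = np.r_[0.5, np.ones(period - 1), 0.5] / period
    trend = np.full(len(y), np.nan)
    half = period // 2
    trend[half:len(y) - half] = np.convolve(y, weights, mode="valid")
    return trend

def seasonal_adjust(y, period=12, horizon=12):
    """Additive adjustment: estimate seasonal factors on the extended series,
    then remove them from the original observations only."""
    y = np.asarray(y, dtype=float)
    extended = extend_series(y, period, horizon)
    detrended = extended - centered_ma(extended, period)
    # Average the detrended values separately for each position in the season.
    seasonal = np.array([np.nanmean(detrended[k::period]) for k in range(period)])
    seasonal -= seasonal.mean()  # factors sum to zero over one season
    return y - seasonal[np.arange(len(y)) % period], seasonal

# Synthetic monthly series (hypothetical data): linear trend plus a fixed seasonal pattern.
t = np.arange(60)
y = 100 + 0.5 * t + 10 * np.sin(2 * np.pi * t / 12)
adjusted, seasonal = seasonal_adjust(y)
```

Because the extension supplies observations beyond the end of the sample, the centered moving average can be evaluated closer to the last real data point, which is the mechanism the excerpt credits with better seasonal-factor estimates and smaller revisions.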

Discipline: Statistics

Discipline: Statistics Offered through the Department of Mathematical Sciences, SSB 154, 786-1744/786-4824 STAT A252 Elementary Statistics 3 credits/(3+0) Prerequisites: Math 105 with minimum grade of C. Registration Restrictions: If prerequisite is not satisfied, appropriate SAT, ACT or AP scores or approved UAA Placement Test required. Course Attributes: GER Tier I Quantitative Skills. Fees. Special Note: A student may apply no more than 3 credits from STAT 252 or BA 273 toward the graduation requirements for a baccalaureate degree. Course Description: Introduction to statistical reasoning. Emphasis on concepts rather than in-depth coverage of traditional statistical methods. Topics include sampling and experimentation, descriptive statistics, probability, binomial and normal distributions, estimation, single-sample and two-sample hypothesis tests. Additional topics will be selected from descriptive methods in regression and correlation, or contingency table analysis. STAT A253 Applied Statistics for the Sciences 4 credits/(4+0) Prerequisite: Math A107 or Math A109. Registration Restrictions: If prerequisite is not satisfied, appropriate SAT, ACT or AP scores or approved UAA Placement Test required. Course Attributes: GER Tier I Quantitative Skills. Fees. Course Description: Intensive survey course with applications for the sciences. Topics include descriptive statistics, probability, random variables, binomial, Poisson and normal distributions, estimation and hypothesis testing of common parameters, analysis of variance for single factor and two factors, correlation, and simple linear regression. A major statistical software package will be utilized. STAT A307 Probability 3 credits/(3+0) Prerequisites: Math A200 with minimum grade of C or Math A272 with minimum grade of C. Course Attributes: GER Tier I Quantitative Skills.

Topics in Compositional, Seasonal and Spatial-Temporal Time Series

TOPICS IN COMPOSITIONAL, SEASONAL AND SPATIAL-TEMPORAL TIME SERIES BY KUN CHANG A dissertation submitted to the Graduate School-New Brunswick, Rutgers, The State University of New Jersey, in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Graduate Program in Statistics and Biostatistics. Written under the direction of Professor Rong Chen and approved by New Brunswick, New Jersey, October, 2015 ABSTRACT OF THE DISSERTATION Topics in compositional, seasonal and spatial-temporal time series by Kun Chang Dissertation Director: Professor Rong Chen This dissertation studies several topics in time series modeling. The discussion on seasonal time series, compositional time series and spatial-temporal time series brings new insight to the existing methods. Innovative methodologies are developed for modeling and forecasting purposes. These topics are not isolated but naturally support each other under rigorous discussions. A variety of real examples are presented from economics, social science and geoscience areas. The second chapter introduces a new class of seasonal time series models, treating the seasonality as a stable composition through time. With the objective of forecasting the sum of the next ℓ observations, the concept of rolling season is adopted and a structure of rolling conditional distribution is formulated under the compositional time series framework. The probabilistic properties, the estimation and prediction, and the forecasting performance of the model are studied and demonstrated with simulation and real examples. The third chapter focuses on the discussion of compositional time series theories in multivariate models. It provides an idea for the modeling procedure of multivariate time series that have sum constraints at each time.

Do Seasonal Adjustments Induce Noncausal Dynamics in Inflation

econometrics Article Do Seasonal Adjustments Induce Noncausal Dynamics in Inflation Rates? Alain Hecq 1, Sean Telg 1,* and Lenard Lieb 2 1 Department of Quantitative Economics, Maastricht University, School of Business and Economics, P.O. Box 616, 6200 MD Maastricht, The Netherlands 2 Department of General Economics (Macro), Maastricht University, School of Business and Economics, P.O. Box 616, 6200 MD Maastricht, The Netherlands * Correspondence: Tel.: +31-43-38-83578 Academic Editors: Gilles Dufrénot, Fredj Jawadi, Alexander Mihailov and Marc S. Paolella Received: 12 June 2017; Accepted: 17 October 2017; Published: 31 October 2017 Abstract: This paper investigates the effect of seasonal adjustment filters on the identification of mixed causal-noncausal autoregressive models. By means of Monte Carlo simulations, we find that standard seasonal filters induce spurious autoregressive dynamics on white noise series, a phenomenon already documented in the literature. Using a symmetric argument, we show that those filters also generate a spurious noncausal component in the seasonally adjusted series, but preserve (although amplify) the existence of causal and noncausal relationships. This result has important implications for modelling economic time series driven by expectation relationships. We consider inflation data on the G7 countries to illustrate these results. Keywords: inflation; seasonal adjustment filters; mixed causal-noncausal models JEL Classification: C22; E37 1. Introduction Most empirical macroeconomic studies are based on seasonally adjusted data. Various methods have been proposed in the literature aiming at removing unobserved seasonal patterns without affecting other properties of the time series.
Just as the misspecification of a trend may cause spurious cycles in detrended data (e.g., Nelson and Kang 1981), a wrongly specified pattern at the seasonal frequency might have very undesirable effects (see, e.g., Ghysels and Perron 1993; Maravall 1993).
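The claim that seasonal filters induce spurious dynamics on white noise can be checked with a small simulation. The sketch below is not the authors' Monte Carlo design and does not use X-11 or any official filter. As a labeled assumption, it uses a toy two-sided filter that subtracts a 3-term seasonal moving average (the same month in the previous, current, and next year). The function names, the sample size, and the seed are hypothetical.

```python
import numpy as np

def seasonal_filter(x, period=12):
    """Toy two-sided seasonal adjustment (ASSUMPTION, not X-11):
    subtract the average of the same month in years t-1, t, and t+1."""
    x = np.asarray(x, dtype=float)
    core = x[period:-period]
    seasonal = (x[:-2 * period] + core + x[2 * period:]) / 3.0
    return core - seasonal

def autocorr(x, lag):
    """Sample autocorrelation of x at a positive lag."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))

rng = np.random.default_rng(0)
noise = rng.standard_normal(6000)   # white noise: no dynamics at any lag
filtered = seasonal_filter(noise)

raw_r12 = autocorr(noise, 12)       # close to 0
adj_r12 = autocorr(filtered, 12)    # strongly negative; theory gives -2/3 for this filter
```

For this toy filter the adjusted value is (2/3)x_t - (1/3)x_{t-12} - (1/3)x_{t+12}, so its variance is 2/3 and its lag-12 autocovariance is -4/9 when the input has unit variance, which gives a lag-12 autocorrelation of -2/3 even though the input has none. The filter uses both a lag and a lead of the series, which is the symmetry the abstract appeals to when it argues that the induced dynamics have a noncausal as well as a causal part.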

Seasonal Adjustment

EUROPEAN CENTRAL BANK Seasonal Adjustment Editors: Michele Manna and Romana Peronaci Published by: © European Central Bank, November 2003 Address: Kaiserstrasse 29, 60311 Frankfurt am Main, Germany Postal address: Postfach 16 03 19, 60066 Frankfurt am Main, Germany Telephone: +49 69 1344 0 Internet: http://www.ecb.int Fax: +49 69 1344 6000 Telex: 411 144 ecb d This publication is also available as an e-book to be downloaded from the ECB’s website. The views expressed in this publication do not necessarily reflect those of the European Central Bank. No responsibility for them should be attributed to the ECB or to any of the other institutions with which the authors are affiliated. All rights reserved by the authors. Typeset and printed by: Kern & Birner GmbH + Co. As at January 2003. ISBN 92-9181-412-1 (print) ISBN 92-9181-413-X (online)
Contents
Foreword by Eugenio Domingo Solans
Notes from the Chairman Michele Manna
1 Comparing direct and indirect seasonal adjustments of aggregate series, by Catherine C. Hood and David F. Findley
2 A class of diagnostics in the ARIMA-model-based decomposition of a time series, by Agustín Maravall
3 Seasonal adjustment of European aggregates: direct versus indirect approach, by Dominique Ladiray and Gian Luigi Mazzi
4 Criteria to determine the optimal revision policy: a case study based on euro zone monetary aggregates data, by Laurent Maurin
5 Direct versus indirect seasonal adjustment of UK monetary statistics: preliminary discussion, by David Willoughby

R-Based Strategies for DH in English Linguistics: a Case Study

R-based strategies for DH in English Linguistics: a case study Nicolas Ballier and Paula Lissón, Université Paris Diderot, UFR Études Anglophones, CLILLAC-ARP (EA 3967) nicolas.ballier@univ-paris-diderot.fr Abstract This paper is a position statement advocating the implementation of the programming language R in a curriculum of English Linguistics. This is an illustration of a possible strategy for the requirements of Natural Language Processing (NLP) for Digital Humanities (DH) studies in an established curriculum. R plays the role of a Trojan Horse for NLP and statistics, while promoting the acquisition of a programming language. We report an overview of existing practices implemented in an MA and PhD programme at the University of Paris Diderot in the recent years. We emphasize completed aspects of the curriculum and detail existing teaching strategies rather than [...] language for research in linguistics, both in a quantitative and qualitative approach. Developing a culture based on the programming language R (R Core Team 2016) for NLP among MA and PhD students doing English Linguistics is no easy task. Broadly speaking, most of the students have little background in mathematics, statistics, or programming, and usually feel reluctant to study any of these disciplines. While most PhD students in English linguistics are former students with a Baccalauréat in Sciences, some MA students pride themselves on having radically opted out of Maths. However, we believe that students should be made aware of the growing use of statistical and NLP methods in linguistics and be able to interpret and implement these techniques.

How to Handle Seasonality

National Criminal Justice Reference Service (NCJRS) This microfiche was produced from documents received for inclusion in the NCJRS data base. Since NCJRS cannot exercise control over the physical condition of the documents submitted, the individual frame quality will vary. The resolution chart on this frame may be used to evaluate the document quality. Microfilming procedures used to create this fiche comply with the standards set forth in 41CFR 101-11.504. Points of view or opinions stated in this document are those of the author(s) and do not represent the official position or policies of the U.S. Department of Justice. National Institute of Justice, United States Department of Justice, Washington, D.C. 20531 3/30/84 HOW TO HANDLE SEASONALITY Introduction to the Detection and Analysis of Seasonal Fluctuation in Criminal Justice Time Series May 1983 by Carolyn Rebecca Block, Statistical Analysis Center, ILLINOIS CRIMINAL JUSTICE INFORMATION AUTHORITY William Gould, Chairman J. David Coldren, Executive Director U.S. Department of Justice, National Institute of Justice This document has been reproduced exactly as received from the person or organization originating it.

A Diagnostic for Seasonality Based Upon Autoregressive Roots

A Diagnostic for Seasonality Based Upon Autoregressive Roots Tucker McElroy U.S. Census Bureau NTTS, March 13, 2019 Disclaimer These slides are released to inform interested parties of research and to encourage discussion. The views expressed on statistical issues are those of the author and not necessarily those of the U.S. Census Bureau. Outline 1. Background on Seasonality 2. Criteria for a Diagnostic of Seasonality 3. Persistent Oscillations 4. Seasonality Hypothesis Testing 5. Simulation Evidence 6. Data Application Background on Seasonality Seasonality in Official Time Series. Many official time series, such as gross domestic product (GDP) and unemployment rate data, have an enormous impact on public policy, and the seasonal patterns often obscure the long-run and mid-range dynamics. What is Seasonality? Persistency in a time series over seasonal periods that is not explainable by intervening time periods. • Requires persistence year to year • Non-seasonal trending series have persistence, which comes through intervening seasons; we must screen out such cases Background on Seasonality The Seasonal Adjustment Task. Given a raw time series: 1. Does it have seasonality? If so, seasonally adjust. 2. Does the seasonal adjustment have seasonality? If not, publish. Both these tasks require a seasonality diagnostic, although the properties of a time series before and after seasonal adjustment can be quite different. Background on Seasonality Pre-Testing. Testing for seasonality in a raw series, where the seasonality could be deterministic (stable), moving and stationary (dynamic), or moving and non-stationary (unit root). These categories are not mutually exclusive, e.g., we could have both unit root and deterministic seasonality.

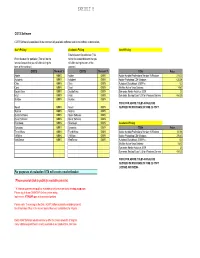

Dell VITA RFP- Revised COTS Pricing 12-17-08

COTS Software COTS Software is considered to be commercially available software ready to run without customization. Enter discount for publisher (this will be the lowest discount that you will offer during the term of the contract).
COTS Discount % by publisher (Gov't Pricing and Academic Pricing): 0.00% in both columns for Adobe, Autodesk, Citrix, Corel, DoubleTake, Intuit, McAfee, Novell, Nuance, Quark Software, Quest Software, Riverdeep, Symantec, Trend Micro, VMWare, and WebSense.
Title prices (Gov't Pricing / Academic Pricing):
Adobe Acrobat Professional Version 9 Windows: 218.33 / 131.03
Adobe Photoshop CS4 Windows: 635.24 / 276.43
Autodesk Sketchbook 2009 Pro: 120 / 120
McAfee Active Virus Defense: 14.87 / 14.87
Symantec Norton Antivirus 2009: 25 / 25
Symantec Backup Exec 12.5 for Windows Servers: 454.25 / 454.25
PRICE FOR ABOVE TITLES SHOULD BE QUOTED FOR PURCHASE OF ONE (1) COPY LICENSE AND MEDIA. For purposes of evaluation VITA will create a market basket.

R for Statistics: First Steps

R for statistics: first steps CAR meeting, Raleigh, Feb 26 2011 Peter Aldhous, San Francisco Bureau Chief A note of caution before using any stats package: Beware running with scissors! You can bamboozle yourself, and your readers, by misusing statistics. Make sure you understand the methods you are using, in particular the assumptions that must be met for them to be valid (e.g. normal distribution for many common tests). So before rushing in, consult: • IRE tipsheets (e.g. Donald & LaFleur, #2752; Donald & Hacker, #2731) • Statistical textbooks (e.g. http://www.statsoft.com/textbook/ is free online) • Experts who can provide a reality check on your analysis! Getting started: Download R, instructions at: http://www.r-project.org/ Start the program: Prepare the data: 1) Save as a csv file 2) Point R at the data First steps in the R command line: Load and examine the data: View a basic summary: What is the mean age and salary for CEOs in each sector? An alternative, computing mean and standard deviation in one go: Is there a correlation between age and salary? Draw a graph to explore the correlation analysis: Does mean CEO age differ significantly across sectors? Does mean CEO salary differ significantly across sectors? Draw a graph to explore the distribution of salary by sector: Moving beyond the basic functions: R packages The R community has written 2800+ extensions to R’s basic statistical and graphical functions, available from the CRAN repository: http://cran.r-project.org/web/packages/ Follow the links to find a PDF Reference Manual for each package.

R in a Nutshell

R IN A NUTSHELL Second Edition Joseph Adler Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo R in a Nutshell, Second Edition by Joseph Adler Copyright © 2012 Joseph Adler. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938. Editors: Mike Loukides and Meghan Blanchette Indexer: Fred Brown Production Editor: Holly Bauer Cover Designer: Karen Montgomery Proofreader: Julie Van Keuren Interior Designer: David Futato Illustrators: Robert Romano and Rebecca Demarest September 2009: First Edition. October 2012: Second Edition. Revision History for the Second Edition: 2012-09-25 First release See http://oreilly.com/catalog/errata.csp?isbn=9781449312084 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. R in a Nutshell, the image of a harpy eagle, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.