Improving Filtering of Email Phishing Attacks by Using Three-Way Text Classifiers

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Technical and Legal Approaches to Unsolicited Electronic Mail, 35 USFL Rev

UIC School of Law UIC Law Open Access Repository UIC Law Open Access Faculty Scholarship 1-1-2001 Technical and Legal Approaches to Unsolicited Electronic Mail, 35 U.S.F. L. Rev. 325 (2001) David E. Sorkin John Marshall Law School, [email protected] Follow this and additional works at: https://repository.law.uic.edu/facpubs Part of the Computer Law Commons, Internet Law Commons, Marketing Law Commons, and the Privacy Law Commons Recommended Citation David E. Sorkin, Technical and Legal Approaches to Unsolicited Electronic Mail, 35 U.S.F. L. Rev. 325 (2001). https://repository.law.uic.edu/facpubs/160 This Article is brought to you for free and open access by UIC Law Open Access Repository. It has been accepted for inclusion in UIC Law Open Access Faculty Scholarship by an authorized administrator of UIC Law Open Access Repository. For more information, please contact [email protected]. Technical and Legal Approaches to Unsolicited Electronic Mail† By DAVID E. SORKIN* "Spamming" is truly the scourge of the Information Age. This problem has become so widespread that it has begun to burden our information infrastructure. Entire new networks have had to be constructed to deal with it, when resources would be far better spent on educational or commercial needs. United States Senator Conrad Burns (R-MT)1 UNSOLICITED ELECTRONIC MAIL, also called "spam,"2 causes or contributes to a wide variety of problems for network administrators, † Copyright © 2000 David E. Sorkin. * Assistant Professor of Law, Center for Information Technology and Privacy Law, The John Marshall Law School; Visiting Scholar (1999-2000), Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University. -

Learning Apache Mahout Classification Table of Contents

Learning Apache Mahout Classification Table of Contents Learning Apache Mahout Classification Credits About the Author About the Reviewers www.PacktPub.com Support files, eBooks, discount offers, and more Why subscribe? Free access for Packt account holders Preface What this book covers What you need for this book Who this book is for Conventions Reader feedback Customer support Downloading the example code Downloading the color images of this book Errata Piracy Questions 1. Classification in Data Analysis Introducing the classification Application of the classification system Working of the classification system Classification algorithms Model evaluation techniques The confusion matrix The Receiver Operating Characteristics (ROC) graph Area under the ROC curve The entropy matrix Summary 2. Apache Mahout Introducing Apache Mahout Algorithms supported in Mahout Reasons for Mahout being a good choice for classification Installing Mahout Building Mahout from source using Maven Installing Maven Building Mahout code Setting up a development environment using Eclipse Setting up Mahout for a Windows user Summary 3. Learning Logistic Regression / SGD Using Mahout Introducing regression Understanding linear regression Cost function Gradient descent Logistic regression Stochastic Gradient Descent Using Mahout for logistic regression Summary 4. Learning the Naïve Bayes Classification Using Mahout Introducing conditional probability and the Bayes rule Understanding the Naïve Bayes algorithm Understanding the terms used in text classification Using the Naïve Bayes algorithm in Apache Mahout Summary 5. Learning the Hidden Markov Model Using Mahout Deterministic and nondeterministic patterns The Markov process Introducing the Hidden Markov Model Using Mahout for the Hidden Markov Model Summary 6. Learning Random Forest Using Mahout Decision tree Random forest Using Mahout for Random forest Steps to use the Random forest algorithm in Mahout Summary 7. -

Linux VDS Release Notes

Linux VDS Release Notes Linux VDS Release Notes Introduction Important: This document is based on the most recent available information regarding the release of Linux VDS. Future revisions of this document will include updates not only to the status of features, but also an overview of updates to customer documentation. The Linux VDS platform provides a set of features designed and implemented to suit the value-added reseller (VAR), small hosting service provider (HSP), application service provider (ASP), independent software vendor (ISV), and any small- and medium-sized business (SMB). This document provides administrators who possess minimal to moderate technical skills with a guide to the latest documentation and other sources of information available at the time of the release. This document also includes any cautions and warnings specific to this release. For more information, refer to the following documents: • Linux VDS User’s Guide • Linux VDS Getting Started Guide In addition, you can also refer to the following Web content: • Frequently-asked questions (FAQ) Status of Features Linux VDS introduces new features and enhancements. What’s New? The following table describes the status of features included with this release: Feature Status Accrisoft This release introduces support for Accrisoft e-commerce software (http://www.accrisoft.com/ ). You can use the software to build and manage profitable Web sites. Accrisoft is a fee-based, optional application. This release also provides an installation script to assure the software operates fully and correctly on your account. An uninstall script assures that you can remove the software completely and effectively if you need to do so. -

A Rule Based Approach for Spam Detection

A RULE BASED APPROACH FOR SPAM DETECTION Thesis submitted in partial fulfillment of the requirements for the award of degree of Master of Engineering in Computer Science & Engineering By: Ravinder Kamboj (Roll No. 800832030) Under the supervision of: Dr. V.P Singh (Assistant Professor, Computer Science & Engineering) and Mrs. Sanmeet Bhatia (Assistant Professor, Department of SMCA) COMPUTER SCIENCE AND ENGINEERING DEPARTMENT THAPAR UNIVERSITY PATIALA – 147004 JULY 2010 Abstract Spam is defined as junk or unsolicited e-mail. Spam has increased tremendously in the last few years. Today more than 85% of the e-mails received by e-mail users are spam. The cost of spam can be measured in lost human time, lost server time and loss of valuable mail. Spammers use various techniques such as spam via botnets, localization of spam and image spam. Following the mail delivery process, anti-spam measures can be divided into two parts: those based on the e-mail envelope and those based on the e-mail data. Blacklisting, greylisting and whitelisting techniques can be applied to the e-mail envelope to detect spam. Techniques based on the data part of the e-mail, such as heuristic and statistical techniques, can be used to combat spam. Bayesian filters, a statistical technique, divide the incoming message into words called tokens and check their probability of occurrence in spam e-mails and ham e-mails. Two types of approaches can be followed for the detection of spam e-mails: one is the learning approach, the other is the rule-based approach. The learning approach requires a large dataset of spam and ham e-mails for training the spam filter; this approach has good time characteristics, since the filter can be retrained quickly for new spam. -
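The token-based Bayesian filtering mentioned in the abstract above can be illustrated with a minimal sketch. This is not the thesis author's implementation: the class name `TokenBayesFilter`, the tokenizer, the add-one smoothing, and the toy training messages are all assumptions chosen for illustration, and only tokens present in a message are scored.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Split a message into lowercase word tokens (an assumed, simplistic tokenizer)."""
    return re.findall(r"[a-z0-9']+", text.lower())


class TokenBayesFilter:
    """Hypothetical naive Bayes filter over token presence in spam and ham mail."""

    def __init__(self):
        # Number of training messages per class that contain each token.
        self.token_counts = {"spam": Counter(), "ham": Counter()}
        # Number of training messages seen per class.
        self.message_counts = {"spam": 0, "ham": 0}

    def train(self, text, label):
        """Record one labeled message; label must be 'spam' or 'ham'."""
        self.token_counts[label].update(set(tokenize(text)))
        self.message_counts[label] += 1

    def _log_likelihood(self, token, label):
        # Add-one smoothing so unseen tokens never yield a zero probability.
        count = self.token_counts[label][token]
        return math.log((count + 1) / (self.message_counts[label] + 2))

    def spam_probability(self, text):
        """Return the estimated probability that the message is spam."""
        total = sum(self.message_counts.values())
        # Smoothed class priors, so an untrained class does not cause log(0).
        log_spam = math.log((self.message_counts["spam"] + 1) / (total + 2))
        log_ham = math.log((self.message_counts["ham"] + 1) / (total + 2))
        for token in set(tokenize(text)):
            log_spam += self._log_likelihood(token, "spam")
            log_ham += self._log_likelihood(token, "ham")
        # Turn the two log scores into a normalized probability.
        return 1.0 / (1.0 + math.exp(log_ham - log_spam))


spam_filter = TokenBayesFilter()
spam_filter.train("win free money now", "spam")
spam_filter.train("free prize claim now", "spam")
spam_filter.train("meeting agenda for monday", "ham")
spam_filter.train("lunch on monday", "ham")

print(spam_filter.spam_probability("free money"))
print(spam_filter.spam_probability("monday meeting"))
```

With this toy data the first message scores above 0.5 and the second below it. A filter of this kind needs a large labeled corpus to be useful, which is the training requirement the abstract attributes to the learning approach.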

Successful Non-Governmental Threat Attribution

Successful Non-Governmental Threat Attribution, Containment and Deterrence: A Case Study Joe St Sauver, Ph.D. [email protected] or [email protected] Internet2 Nationwide Security Programs Manager November 2nd, 2010, 1:15-2:30 PM, Chancellor I http://pages.uoregon.edu/joe/attribute-contain-deter/ Disclaimer: The opinions expressed are those of the author and do not necessarily represent the opinion of any other party. I. Introduction Cyberspace: Anonymous and Undeterred? • General Keith Alexander, Director of the National Security Agency (DIRNSA), recently commented [1] that in cyberspace: “It is difficult to deliver an effective response if the attacker's identity isn't known,” and “It is unclear if the government's response to cyber threats and attacks have deterred criminals, terrorists, or nations.” • That's a provocatively framed (if equivocal) assessment, and one worthy of careful consideration given its source. Is The Concept of Deterrence Even Relevant to Attacks on Private Critical Cyber Infrastructure? • In pondering that quote, I also note the National Research Council's (NRC's) “Cyber Deterrence Research and Scholarship” question number 39, [2] which asked: How and to what extent, if at all, is deterrence applicable to cyber attacks on private companies (especially those that manage U.S. critical infrastructure)? • Since the Office of the Director of National Intelligence (ODNI) requested the NRC's inquiry into cyber deterrence, and since General Alexander is now leading the new United States Cyber Command as well as the National Security Agency, it is appropriate to consider these two questions jointly. Can We Identify An Example of Successful Attribution and Cyber Deterrence?
• If we are to prove that cyber deterrence is both relevant and possible, and that the difficulties associated with attribution can be overcome, we must be able to point to at least one example of successful attribution and cyber deterrence. -

Return of Organization Exempt from Income

OMB No. 1545-0047 Return of Organization Exempt From Income Tax Form 990 Under section 501(c), 527, or 4947(a)(1) of the Internal Revenue Code (except black lung benefit trust or private foundation) Open to Public Department of the Treasury Internal Revenue Service The organization may have to use a copy of this return to satisfy state reporting requirements. Inspection A For the 2011 calendar year, or tax year beginning 5/1/2011 , and ending 4/30/2012 B Check if applicable: C Name of organization The Apache Software Foundation D Employer identification number Address change Doing Business As 47-0825376 Name change Number and street (or P.O. box if mail is not delivered to street address) Room/suite E Telephone number Initial return 1901 Munsey Drive (909) 374-9776 Terminated City or town, state or country, and ZIP + 4 Amended return Forest Hill MD 21050-2747 G Gross receipts $ 554,439 Application pending F Name and address of principal officer: H(a) Is this a group return for affiliates? Yes X No Jim Jagielski 1901 Munsey Drive, Forest Hill, MD 21050-2747 H(b) Are all affiliates included? Yes No I Tax-exempt status: X 501(c)(3) 501(c) ( ) (insert no.) 4947(a)(1) or 527 If "No," attach a list. (see instructions) J Website: http://www.apache.org/ H(c) Group exemption number K Form of organization: X Corporation Trust Association Other L Year of formation: 1999 M State of legal domicile: MD Part I Summary 1 Briefly describe the organization's mission or most significant activities: to provide open source software to the public that we sponsor free of charge 2 Check this box if the organization discontinued its operations or disposed of more than 25% of its net assets. -

Technical and Legal Approaches to Unsolicited Electronic Mail†

35 U.S.F. L. REV. 325 (2001) Technical and Legal Approaches to Unsolicited Electronic Mail† By DAVID E. SORKIN* “Spamming” is truly the scourge of the Information Age. This problem has become so widespread that it has begun to burden our information infrastructure. Entire new networks have had to be constructed to deal with it, when resources would be far better spent on educational or commercial needs. United States Senator Conrad Burns (R-MT)1 UNSOLICITED ELECTRONIC MAIL, also called “spam,”2 causes or contributes to a wide variety of problems for network administrators, † Copyright © 2000 David E. Sorkin. * Assistant Professor of Law, Center for Information Technology and Privacy Law, The John Marshall Law School; Visiting Scholar (1999–2000), Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University. The author is grateful for research support furnished by The John Marshall Law School and by sponsors of the Center for Education and Research in Information Assurance and Security. Paul Hoffman, Director of the Internet Mail Consortium, provided helpful comments on technical matters based upon an early draft of this Article. Additional information related to the subject of this Article is available at the author’s web site Spam Laws, at http://www.spamlaws.com/. 1. Spamming: Hearing Before the Subcomm. on Communications of the Senate Comm. on Commerce, Sci. & Transp., 105th Cong. 2 (1998) (prepared statement of Sen. Burns), available at 1998 WL 12761267 [hereinafter 1998 Senate Hearing]. 2. The term “spam” reportedly came to be used in connection with online activities following a mid-1980s episode in which a participant in a MUSH created and used a macro that repeatedly typed the word “SPAM,” interfering with others’ ability to participate. -

Glossary of Spam Terms

White Paper: Glossary of Spam Terms. The Jargon of the Spam Industry. Table of Contents: A: Acceptable Use Policy (AUP), Alias, Autoresponder. B: Ban on Spam, Bayesian Filtering. C: CAN-SPAM, Catch Rate, CAUSE, Challenge Response Authentication, Checksum Database, Click-through, Content Filtering, Crawler. D: Denial of Service (DoS), Dictionary Attack, DNSBL. E: EC Directive, E-mail Bomb, Exploits Block List (XBL) (from Spamhaus.org). F: False Negative, False Positive, Filter Scripting, Fingerprinting, Flood. H: Hacker, Header, Heuristic Filtering, Honeypot, Horizontal Spam. I: Internet Death Penalty, Internet Service Provider (ISP). J: Joe Job. K: Keyword Filtering. L: Landing Page, LDAP, Listwashing. M: Machine-learning, Mailing List, Mainsleaze, Malware, Mung. N: Nigerian 419 Scam, Nuke. O: Open Proxy, Open Relay, Opt-in, Opt-out. P: Pagejacking, Phishing, POP3, Pump and Dump. Q: Quarantine. R: RBLs, Reverse DNS, ROKSO. S: SBL, Scam, Segmentation, SMTP, Spam, Spambot, Spamhaus, Spamming, Spamware, SPEWS, Spider, Spim, Spoof, Spyware. T: Training Set, Trojan Horse, Trusted Senders List. U: UCE. W: Whack-A-Mole, Worm. V: Vertical Spam. Z: Zombie. Glossary of Spam Terms A Acceptable Use Policy (AUP): A policy statement, made by an ISP, whereby the company outlines its rules and guidelines for use of the account. -

Image Spam Detection: Problem and Existing Solution

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056 Volume: 06 Issue: 02 | Feb 2019 www.irjet.net p-ISSN: 2395-0072 Image Spam Detection: Problem and Existing Solution Anis Ismail1, Shadi Khawandi2, Firas Abdallah3 1,2,3Faculty of Technology, Lebanese University, Lebanon Abstract - Today a very important means of communication is e-mail, which allows people all over the world to communicate, share data, and perform business. Yet there is nothing worse than an inbox full of spam; i.e., information crafted to be delivered to a large number of recipients against their wishes. In this paper, we present numerous anti-spam methods and solutions that have been proposed and deployed, but they are not effective because most mail servers rely on blacklists and rules engines, leaving a big part on the user to identify the spam, while others rely on filters that might carry a high false positive rate. Key Words: E-mail, Spam, anti-spam, mail server, filter. … messaging spam, Internet forum spam, junk fax transmissions, and file sharing network spam [1]. People who create electronic spam are called spammers [2]. The generally accepted version for the source of spam is that it comes from the Monty Python song, "Spam spam spam spam, spam spam spam spam, lovely spam, wonderful spam…" Like the song, spam is an endless repetition of worthless text. Another thought maintains that it comes from the computer group lab at the University of Southern California who gave it the name because it has many of the same characteristics as the lunchmeat Spam, that is, nobody wants it or ever asks -

Mastering Spam a Multifaceted Approach with the Spamato Spam Filter System

DISS. ETH NO. 16839 Mastering Spam A Multifaceted Approach with the Spamato Spam Filter System A dissertation submitted to the SWISS FEDERAL INSTITUTE OF TECHNOLOGY ZURICH for the degree of Doctor of Sciences presented by KENO ALBRECHT Dipl. Inf. born June 4, 1977 citizen of Germany accepted on the recommendation of Prof. Dr. Roger Wattenhofer, examiner Prof. Dr. Gordon V. Cormack, co-examiner Prof. Dr. Christof Fetzer, co-examiner 2006 Abstract Email is undoubtedly one of the most important applications used to communicate over the Internet. Unfortunately, the email service lacks a crucial security mechanism: It is possible to send emails to arbitrary people without revealing one’s own identity. Additionally, sending millions of emails costs virtually nothing. Hence over the past years, these characteristics have facilitated and even boosted the formation of a new business branch that advertises products and services via unsolicited bulk emails, better known as spam. Nowadays, spam makes up more than 50% of all emails and thus has become a major vexation of the Internet experience. Although this problem has been dealt with for a long time, only little success (measured on a global scale) has been achieved so far. Fighting spam is a cat and mouse game where spammers and anti-spammers regularly beat each other with sophisticated techniques of increasing complexity. While spammers try to bypass existing spam filters, anti-spammers seek to detect and block new spamming tricks as soon as they emerge. In this dissertation, we describe the Spamato spam filter system as a multifaceted approach to help regain a spam-free inbox. -

Suse Linux Enterprise Server 10 Mail Scanning Gateway Build Guide

SuSE Linux Enterprise Server 10 Mail Scanning Gateway Build Guide Written By: Stephen Carter [email protected] Last Modified 9. May. 2007 Table of Contents: Due credit; Overview; System Requirements; SLES10 DVD; An existing e-mail server; A Pentium class PC; Internet Access; Internet Firewall Modifications; Installation Summary; How -

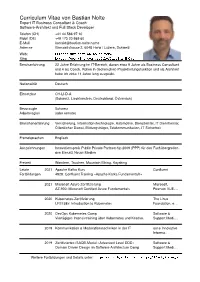

Curriculum Vitae of Bastian Nolte (Short Version)

Curriculum Vitae of Bastian Nolte Expert IT Business Consultant & Coach, Software Architect and Full Stack Developer Phone (CH) +41 44 586 97 10 Mobile (DE) +49 173 20 989 60 E-mail [email protected] Address Grosswilstrasse 2, 6048 Horw / Luzern, Switzerland Web: https://www.bastian-nolte.name Xing https://www.xing.com/profile/Bastian_Nolte Professional experience: 22 years of experience in IT, of which about 9 years as a business consultant and 4 as a coach. I have held (technical) project management and architect roles for roughly 11 years. Nationality: German Available in: CH-LI-D-A (Switzerland, Liechtenstein, Germany, Austria) Preferred work region: Switzerland (or remote) Industry experience: insurance, information technology, automotive, service providers, IT service providers, public sector, educational institutions, telecommunications, IT security Foreign languages: English Awards: Innovationspreis Public Private Partnership 2009 (PPP) for the interdisciplinary use of new media Leisure: hiking, diving, mountain biking, kayaking Recent training: 2021 Apache Kafka course, Confluent 4929: Confluent Training «Apache Kafka Fundamentals» 2021 Microsoft Azure certification, Microsoft, AZ-900: Microsoft Certified Azure Fundamentals Pearson VUE. 2020 Kubernetes certification, The Linux Foundation, LFS158x: Introduction to Kubernetes e. 2020 DevOps Kubernetes Camp, Software & Support Medi. Four-day intensive training on Kubernetes and Knative. 2019 Communication & moderation techniques in IT, oose Innovative Informa.