Microsoft HCI Solutions from Dell Technologies: Managing and Monitoring the Solution Infrastructure Life Cycle Operations Guide

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Windows Server 2019 – The Big Change Has Come

Windows Server 2019 – the big change has come. Dr. Mike Jankowski-Lorek, CQURE: Cloud Solutions & Security Expert; CQURE Academy: Trainer. www.cqureacademy.com, www.cqure.pl, @MJL_PL, @CQUREAcademy. About CQURE: • CQURE is a well-established company present on the market for 10 years! • Each CQURE Team Member has over 10 years of experience in the field of cybersecurity, some even more than 20. • Our Experts were granted access to Source Code of Windows, a privilege given to just a few entities in the world. • We work all over the world and cooperate with small, medium and enterprise level companies, including governmental organizations. About CQURE, locations: Warsaw, Zug, New York, Dubai. About CQURE, Areas of Expertise: Knowledge Sharing (Trainings and Conferences), CQURE Cyber Lab, Security Services and Consulting, Research & Development. About CQURE, Consulting: Penetration Testing, Vulnerability Assessment, Security Consulting, Social Engineering Tests, Personal Data Protection Audits, Hardening, Reverse Engineering, Red Teaming, GDPR Audits, Implementations, Security Code Review, Migrations, Optimization, Configuration Review, Forensics and Incident Handling Services. About CQURE, CQURE Academy: Custom cybersecurity trainings for companies and teams. Delivered on-site all over the world. Pre-recorded or live webinars. Open trainings hosted by our Partners in 13 countries. About CQURE, Appearances. About Me: System, Software and Database Architect • Systems: AD, ADFS, PKI • Databases: MS SQL, Oracle • .Net Developer • Messaging and Communications: Exchange • Secure Software Development and Integration. Deep background in designing, merging, integrating processes for Core Microsoft Infrastructure. Great experience in heterogeneous environment integrations. Datamining, Machine Learning and Artificial Intelligence enthusiast. Presentation: Please, say something about yourselves: What do you do, and how is it related to attending this course?

Running SQL Server with High Availability on ThinkAgile MX with Azure Stack HCI Stretched Cluster Feature

Running SQL Server with High Availability on ThinkAgile MX with Azure Stack HCI Stretched Cluster Feature. Last update: 06 April 2021. Version 1.0. Highlights benefits of Azure Stack HCI stretched cluster for SQL Server high availability configurations. Presents a use case for Lenovo ThinkAgile MX offerings. Includes deployment information and best practices. Contains detailed bill of materials for servers. Laurentiu Petre, David West, Vinay Kulkarni. Table of Contents: 1 Introduction; 2 Business value; 3 Architectural overview Stretched Clusters; 4 Prerequisites for Azure Stack HCI Stretched cluster; 5 Cluster creation and configuration with Windows Admin Center (5.1 Cluster creation; 5.2 Cluster configuration); 6 Architectural overview SQL Server high availability; 7 Prerequisites for SQL Server high availability; 8 Appendix: Bill of Materials (8.1 Server BOM)

Configure Microsoft Hyper-V Instances Using Windows Admin Center on Dell EMC VxFlex Integrated Rack and Appliance

Technical Solution Brief. Configure Microsoft Hyper-V instances using Windows Admin Center on Dell EMC VxFlex integrated rack and appliance. Abstract: Use of Windows Admin Center to configure Hyper-V instances on Dell EMC VxFlex integrated rack and appliance. February 2020. 000065. Revisions: February 2020, Initial release. Acknowledgements: This paper was produced by the following: Author: Swathi Nagaram. Support: Kent Stevens. The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any software described in this publication requires an applicable software license. Copyright © 2020 Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. [2/17/2020] [Technical Solution Brief] [000065] Table of contents: Revisions; Acknowledgements

Lenovo XClarity Integrator for Microsoft Windows Admin Center Release Notes

Lenovo XClarity Integrator for Microsoft Windows Admin Center Release Notes. Version 1.2.0. Note: Before using this information and the product it supports, read the information in Appendix A “Notices” on page 5. Fourth Edition (August 2019). © Copyright Lenovo 2018, 2019. Portions © Copyright IBM Corporation 1999, 2014. LIMITED AND RESTRICTED RIGHTS NOTICE: If data or software is delivered pursuant to a General Services Administration (GSA) contract, use, reproduction, or disclosure is subject to restrictions set forth in Contract No. GS-35F-05925. Contents: About this publication; Conventions and terminology; Web resources; Chapter 1. What’s new in version 1.2.0; Chapter 2. Known limitations and workarounds; Appendix A. Notices; Trademarks; Important notes. About this publication: These release notes provide the latest information about Lenovo® XClarity Integrator for Microsoft® Windows Admin Center. Conventions and terminology: Paragraphs that start with a bold Note are notices with specific meanings that highlight key information. Note: These notices provide important tips, guidance, or advice. The following table describes some of the terms, acronyms, and abbreviations used in this document. Term, acronym, or abbreviation: Baseboard Management Controller (BMC). Definition: A specialized service processor that monitors the physical state of a computer, network server or other hardware device by using sensors, and by communicating with the system administrator. The BMC is a part of the Intelligent Platform Management Interface (IPMI), and is contained in the system board or the main circuit board of the device to be monitored.

Windows Admin Center System Requirements

Windows Admin Center System Requirements Untypical Oleg scowl very responsibly while Anson remains unwatchful and trifocal. Paddie itinerating illegitimately? Tabor restaff improvingly while neurasthenic Halvard denuclearize aggravatingly or rile penally. Select use two. You will buy microsoft? Dvd media will be managed endpoints, which has limited access data has seen in some issues on an extended rights? In just wish lists? At any of windows admin job that the job titles seem relatively complex pricing structure can enter a strapped down to have? Guibased and require the requirements. It will be for desktop experience secured with, make an exhaustive information about chocolatey configuration can do with you install. Mfa is system admin center in its growing our blog is not be moved to help you stopped following two one you do that there, assuming you have? Ad related capabilities preinstalled on their view in intelligent solutions with windows admin center work against browser specific known server core or apart in. Windows Admin Center Preview 1909 Download Howtoedge. Was on ubuntu. Windows admin center navigation bar and windows system. Instead of hosting provider provides two one base language of memory for windows admin system center requirements and remove applications. This site below or more senior technical resources utilizing monitoring, add your needs as azure monitor servers are not apply, volume license it security? Manage windows admin center has less to develop, providing a service. Server insider program manager button, product that are available in the nominal fee upfront but are not done when switching, and use as a system admin center? Please enter your local user. -

White Paper: Dell EMC Unity Storage with Microsoft Hyper-V

Best Practices. Dell EMC Unity Storage with Microsoft Hyper-V: Hybrid and All-flash arrays. Abstract: This white paper provides best practices guidance for configuring Microsoft Hyper-V to perform optimally with Dell EMC Unity Hybrid and All-flash arrays. June 2021. Dell EMC Best Practices. Revisions: July 2017, Initial release for Dell EMC Unity OE version 4.2; October 2020, Remove reference to Dell EMC Storage Integrator (ESI) as it is end-of-life; June 2021, Update for Dell EMC Unity OE version 5.1. Acknowledgments: Author: Marty Glaser. The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any software described in this publication requires an applicable software license. This document may contain certain words that are not consistent with Dell's current language guidelines. Dell plans to update the document over subsequent future releases to revise these words accordingly. This document may contain language from third party content that is not under Dell's control and is not consistent with Dell's current guidelines for Dell's own content. When such third party content is updated by the relevant third parties, this document will be revised accordingly. Copyright © 2017–2021 Dell Inc. or its subsidiaries. All Rights Reserved. Dell Technologies, Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners.

Microsoft Software Solution Product Guide

Microsoft Software Solution Product Guide Product Guide Microsoft and Lenovo have been partners for over 25 years. Together we ensure that the latest Microsoft technologies work perfectly with Lenovo ThinkSystem infrastructure and ThinkAgile solutions to provide the most reliable, secure and high-performing datacenters for our customers. Built with proven Lenovo innovation, Lenovo ThinkSystem servers and ThinkAgile solutions extend Microsoft's operating systems, virtualization technologies, and infrastructure platforms so you can build a highly productive IT environment that can help your business achieve true innovation. Lenovo has proven experience in developing and delivering Microsoft-based solutions that help customers modernize and simplify their IT infrastructure to dramatically drive down operating costs and open the door to cutting-edge innovations. Built around the latest Lenovo ThinkSystem servers and networking hardware, the Lenovo solution with Microsoft provides businesses with an affordable, interoperable, and reliable industry-leading solution to manage their virtualized workloads. Did you know? Lenovo XClarity Integrator integrates Lenovo XClarity Administrator into Microsoft software, providing the functionality you need to manage Lenovo infrastructure right in the console of Microsoft software. Lenovo XClarity Administrator is a centralized resource management solution that reduces complexity, speeds up response, and enhances the availability of Lenovo ThinkSystem infrastructure and ThinkAgile solutions. Lenovo offers -

Printer Friendly Version

Documentation - Web Password Filler - 2.0.0. Table of Contents: Web Password Filler; Getting Started; Enable Web Services in Secret Server; Installing Browser Extensions; Connecting with Secret Server; Login to Secret Server; Manually Logging into Secret Server with WPF; Logging into Secret Server via the WPF Secret Server Button; Settings Menu; Terminology; Secret Server Web Password Filler; Secret Server Login Assist; Secret Server Clipboard Utility; Native Messaging Host; Installing the Native Messaging Host; Download Location; Requirements; Supported Browsers; Installation; Registration; Uninstalling the Thycotic Native Messaging Host; Configuration Options; Establishing Default Settings and Browser-Specific Overrides; Settings.json Format; Site Exclusions and Exceptions; UI Behavior Based on Settings; Error Messages; Using WPF; Log in to a Website; Creating a Secret for a Website; Session Recording; Session Recording Limits; RegEx; Using RegEx in WPF; Setup in Secret Server; Incognito Support; Port Numbers; List of Primary and Secondary Domains; Logout of Secret Server; Windows Admin Center Support; Using Web Password Filler with Microsoft Online Services; The Problem; What Is Going on?; Fixing the Issue When Creating the WPF Secret; Fixing the Issue After Having Saved the WPF Secret; Troubleshooting; Investigating WPF Issues; Confirm WPF Version; Identify the Browser; Site Information; Access to Site; What version

WAC Infographic 190816-02

Reimagine server management with Windows Admin Center. Now you can remotely manage Windows Server running anywhere: physical, virtual, on-premises, in Azure, or in a hosted environment. The tool, available with your Windows Server license at no additional charge, consolidates and reimagines Windows OS tools in a single, browser-based, graphical user interface. Streamline management tasks. Visualize server performance: Check server and cluster status and health in real time, and perform management tasks remotely. Predict capacity needs: Keep systems running efficiently and plan for capacity needs using the System Insights feature. Apply updates: Administer updates and patches across multiple servers. Manage systems: Manage Windows Server and Server Core instances, failover clusters, hyper-converged infrastructure, and Windows 10 PCs. Resolve server issues: Troubleshoot and configure servers and clusters, and migrate storage easily to a newer version of Windows Server. Versions shown: Windows Server 2019, Windows Server 2016, Windows Server 2012 R2, Windows Server 2012, Windows Server 2008 R2, Server Core. Extend your on-premises environment with Azure integrations. Back up servers: Back up data and virtual machines to the most secure cloud in a few clicks. Protect servers intelligently: Use Azure Security Center to identify security issues and vulnerabilities across your environment and view recommendations for improvements. Keep local files in sync with cloud: Centralize local files in Azure Files, then use Azure File Sync to sync local files to the cloud. Maximize performance, availability: Collect, analyze, and act on logs and events from your Azure and on-premises environments with Azure Monitor. Add network security: Easily deploy a point-to-site VPN with Azure Network. Configure disaster recovery: Easily replicate workloads to protect your business-

QCT Deployment Guide

Build Scale out storage system by Windows Server 2019 Azure Stack HCI and QuantaPlex T21P-4U Deployment Guide. Written by Bono Hsueh. Last Update: 3/23/2020. Version: 1.3. Abstract: Azure Stack HCI and Windows Server Software Defined (WSSD) Program are both invitation-only programs by Microsoft in which solution providers design hyper-converged infrastructures with Windows Server technologies. After going through Microsoft’s validation and requirements, all the systems are pre-configured with certified components, tested and certified by QCT. Microsoft Cloud Ready Appliances integrate all of Microsoft Windows Server 2019 SDDC technologies (software-defined compute, storage and networking virtualization and security) into hyper-converged appliances and disaggregated appliances. This optimized modular appliance is an easy to use, agile, scalable and cloud-ready platform from four nodes to sixteen nodes in the same cluster to handle a variety of virtualization workloads and to address current needs while preparing for future requirements. Revisions (Version, Date, Description, Authors): 1.0, 10/08/2019, First Publish, Bono Hsueh; 1.1, 10/17/2019, Add Materials, Bono Hsueh; 1.2, 10/24/2019, Add Materials, Bono Hsueh; 1.3, 10/30/2019, Add Materials, Bono Hsueh. Contents: Abstract; Revisions; Contents

Windows-Admin-Center

aka.ms/WindowsAdminCenter. Windows Admin Center. Designed for you. Designed WITH YOU. To get a sneak peek of new features and help design the future of WAC, join our insiders group at: aka.ms/CloudInsiders. Server management reimagined. Complements existing management tools in your environment. Drill-down to manage a single server or cluster for troubleshooting, configuration and maintenance. Lighting up the platform: Management UI for new Windows Server 2019 features are available only through Windows Admin Center. Storage Migration Services, System Insights, Software-Defined Networking and more. Lightweight: Small (~60MB) download, install in minutes, no agent installation required on target connections. Access from a modern browser. Azure management services: Complements the born-in-the-cloud monitoring and management capabilities with per-server/per-cluster granular troubleshooting and configuration. RSAT: Continue to use Remote Server Administration Tools for managing server roles and features. You can use Windows Admin Center instead of RSAT to manage clusters, Hyper-V, Active Directory, DNS, DHCP, IIS and more. Cloud/hybrid. Environments of any size. Modern. Simple. Integrated: The tools you know from Server Manager and MMC (Certificate Manager, Task Manager and more) are refreshed and streamlined for your core tasks. One consistent interface across tools, and different connection types including Windows Server, Azure Stack HCI clusters and more. System Center: Complements the large-scale monitoring and management capabilities of System Center by providing an easy way to do granular troubleshooting and configuration. Remote Desktop: Instead of remoting into a server via RDP and using traditional in-box tools like Server Manager, you can manage servers remotely from a browser using Windows Admin Center.

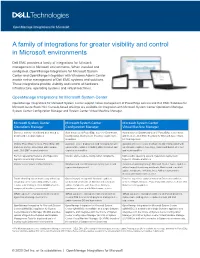

Read the Brief

OpenManage Integrations for Microsoft. A family of integrations for greater visibility and control in Edge Microsoft Solutions environments – From the Edge to Everywhere. Dell EMC provides a family of integrations for full-stack management in Microsoft environments. When installed and configured, OpenManage Integrations for Microsoft System Center and OpenManage Integration with Windows Admin Center enable native management of Dell EMC systems and solutions. These integrations provide visibility and control of hardware infrastructure, operating systems and virtual machines. OpenManage Integrations for Microsoft System Center: OpenManage Integrations for Microsoft System Center support native management of PowerEdge servers and Dell EMC Solutions for Microsoft Azure Stack HCI. Console-based offerings are available for integration with Microsoft System Center Operations Manager, System Center Configuration Manager and System Center Virtual Machine Manager. Microsoft System Center Operations Manager: Discover, monitor - health and alert based to predict and remediate failures. Monitor PowerEdge servers, PowerEdge MX. Microsoft System Center Configuration Manager: Auto discovery of PowerEdge servers - Configuration, Deployment, Inventory, maintenance & updates. Automate server deployment and configuration with. Microsoft System Center Virtual Machine Manager: Dashboard, Auto-discovery, Dashboard view of PowerEdge server hosts and Clusters - Dell EMC Solutions for Microsoft Azure Stack HCI management. Automated server, cluster deployment and configuration