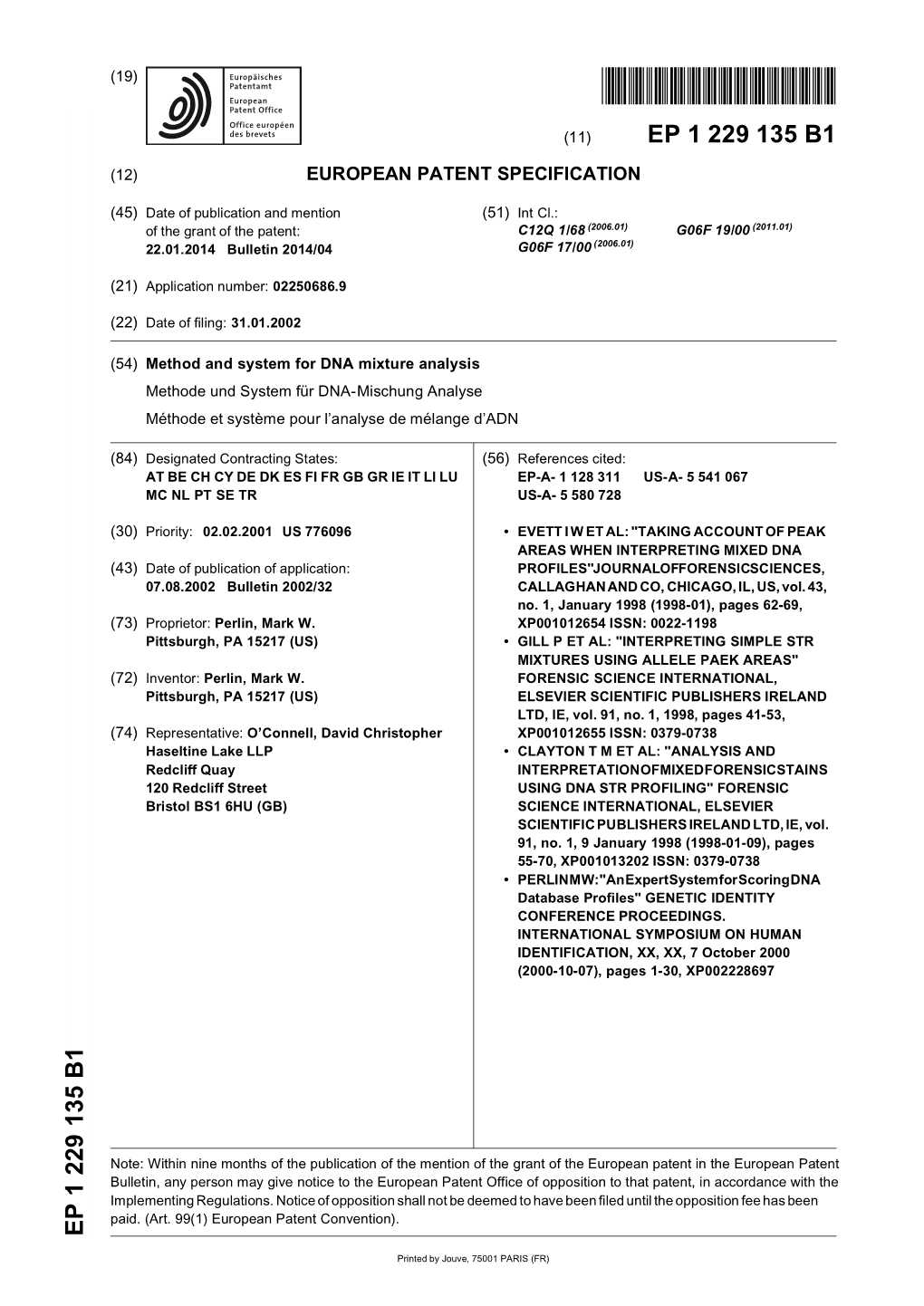

Method and System for DNA Mixture Analysis

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Contributions to DNA Cryptography : Applications to Text and Image Secure Transmission Olga Tornea

To cite this version: Olga Tornea. Contributions to DNA cryptography: applications to text and image secure transmission. Other. Université Nice Sophia Antipolis; Universitatea tehnică (Cluj-Napoca, Roumanie), 2013. English. NNT: 2013NICE4092. HAL Id: tel-00942608, https://tel.archives-ouvertes.fr/tel-00942608. Submitted on 6 Feb 2014. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Université de Nice-Sophia Antipolis, École Doctorale STIC (Sciences et Technologies de l'Information et de la Communication). Thesis for the degree of Docteur en Sciences de l'Université de Nice-Sophia Antipolis, specialization: Automatic Control, Signal and Image Processing (Automatique, Traitement du Signal et des Images), presented and defended by Olga Tornea: Contributions to DNA Cryptography: Applications to Text and Image Secure Transmission. Thesis supervised by Monica Borda and Marc Antonini, defended on 13 November 2013. Jury: Mircea Giurgiu (Université Technique de Cluj-Napoca), President; William Puech (Université …

Genomics-Based Security Protocols: from Plaintext to Cipherprotein

Harry Shaw (Microwave and Communications Systems Branch, NASA/Goddard Space Flight Center, Greenbelt, MD, USA); Sayed Hussein and Hermann Helgert (Department of Electrical and Computer Engineering, George Washington University, Washington, DC, USA). Abstract: The evolving nature of the internet will require continual advances in authentication and confidentiality protocols. Nature provides some clues as to how this can be accomplished in a distributed manner through molecular biology. Cryptography and molecular biology share certain aspects and operations that allow for a set of unified principles to be applied to problems in either venue. A concept for developing security protocols that can be instantiated at the genomics level is presented. A DNA (deoxyribonucleic acid) inspired hash code system is presented that utilizes concepts from molecular biology. It is a keyed-Hash Message Authentication Code (HMAC) capable of being used in secure mobile ad hoc networks. It is targeted for applications without an available public key infrastructure. Mechanics of creating the HMAC are presented as well as a prototype HMAC protocol architecture. … RSA and nonce exchanges, etc. It also provides for a biological authentication capability. This paper is organized as follows: a description of the elements of the prototype genomic HMAC architecture; a description of the DNA code encryption process, genome selection and properties; the elements of the prototype protocol architecture and its concept of operations; a short plaintext to ciphertext encryption example; a description of the principles of gene expression and transcriptional control to develop protocols for information security. These protocols would operate in both the electronic and genomic …

WO 2011/043817 A1 14 April 2011 (14.04.2011) PCT

(12) International Application Published under the Patent Cooperation Treaty (PCT). (19) World Intellectual Property Organization, International Bureau. (10) International Publication Number: WO 2011/043817 A1. (43) International Publication Date: 14 April 2011 (14.04.2011). (51) International Patent Classification: A61F 2/00 (2006.01). (21) International Application Number: PCT/US2010/002708. (22) International Filing Date: 7 October 2010 (07.10.2010). (25) Filing Language: English. (26) Publication Language: English. (30) Priority Data: 61/278,523, 7 October 2009 (07.10.2009), US. (71) Applicants (for all designated States except US): CORNELL UNIVERSITY [US/US]; Cornell Center for Technology Enterprise & Commercialization, 395 Pine Tree Road, Suite 310, Ithaca, NY 14850 (US). … 10010 (US). ARNOLD, Lee, D. [US/US]; 55 Chestnut Street, Mt. Sinai, NY 11766 (US). (74) Agents: GOLDMAN, Michael, L. et al.; Nixon Peabody LLP, Patent Group, 1100 Clinton Square, Rochester, NY 14604-1792 (US). (81) Designated States (unless otherwise indicated, for every kind of national protection available): AE, AG, AL, AM, AO, AT, AU, AZ, BA, BB, BG, BH, BR, BW, BY, BZ, CA, CH, CL, CN, CO, CR, CU, CZ, DE, DK, DM, DO, DZ, EC, EE, EG, ES, FI, GB, GD, GE, GH, GM, GT, HN, HR, HU, ID, IL, IN, IS, JP, KE, KG, KM, KN, KP, KR, KZ, LA, LC, LK, LR, LS, LT, LU, LY, MA, MD, ME, MG, MK, MN, MW, MX, MY, MZ, NA, NG, NI, NO, NZ, OM, PE, PG, PH, PL, PT, RO, RS, RU, SC, SD, SE, SG, SK, SL, SM, ST, SV, SY, TH, TJ, TM, TN, TR, …

Patterns and Signals of Biology: an Emphasis on the Role of Post

University of Nebraska at Omaha, DigitalCommons@UNO, Student Work, 5-2016. Patterns and Signals of Biology: An Emphasis on the Role of Post Translational Modifications in Proteomes for Function and Evolutionary Progression, by Oliver Bonham-Carter. Follow this and additional works at: https://digitalcommons.unomaha.edu/studentwork. Part of the Computer Sciences Commons. A dissertation presented to the Faculty of The Graduate College at the University of Nebraska in partial fulfillment of requirements for the degree of Doctor of Philosophy. Major: Information Technology. Under the supervision of Dhundy Bastola, PhD and Hesham Ali, PhD. Omaha, Nebraska, May 2016. Supervisory Committee: Dhundy (Kiran) Bastola, PhD (Chair); Hesham Ali, PhD (Co-Chair); Lotfollah Najjar, PhD; Steven From, PhD. ProQuest Number: 10124836. All rights reserved. Information to all users: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. Published by ProQuest LLC (2016). Copyright of the Dissertation is held by the Author.

ABSTRACT Title of Dissertation: BIOENGINEERED

Abstract. Title of Dissertation: Bioengineered Conduits for Directing Digitized Molecular-Based Information. Jessica L. Terrell, Doctor of Philosophy, 2015. Directed by: Professor William E. Bentley, Fischell Department of Bioengineering. Molecular recognition is a prevalent quality in natural biological environments: molecules, small as well as macro, enable dynamic response by instilling functionality and communicating information about the system. The accession and interpretation of this rich molecular information leads to context about the system. Moreover, molecular complexity, both in terms of chemical structure and diversity, permits information to be represented with high capacity. Thus, an opportunity exists to assign molecules as chemical portrayals of natural, non-natural, and even non-biological data. Further, their associated upstream, downstream, and regulatory pathways could be commandeered for the purpose of data processing and transmission. This thesis emphasizes molecules that serve as units of information, the processing of which elucidates context. The project first strategizes biocompatible assembly processes that integrate biological componentry in organized configurations for molecular transfer (e.g. from a cell to a receptor). Next, we have explored the use of DNA for its potential to store data in richer, digital forms. Binary data is embedded within a gene for storage inside a cell carrier and is selectively conveyed. Successively, a catalytic relay is developed to transduce similar data from sequence-based DNA storage to a delineated chemical cue that programs cellular phenotype. Finally, these cell populations are used as mobile information processing units that independently seek and collectively categorize the information, which is fed back as 'binned' output. Every development demonstrates a transduction process of molecular data that involves input acquisition, refinement, and output interpretation.

Genomics and Proteomics Based Security Protocols for Secure Network Architectures

Harry Cornel Shaw. B.S. in Computer Science, August 1993, University of Maryland University College; M.S. in Electrical Engineering, January 2006, The George Washington University. A dissertation submitted to the Faculty of The School of Engineering and Applied Science of The George Washington University in partial satisfaction of the requirements for the degree of Doctor of Philosophy, May 19, 2013. Dissertation directed by Hermann J. Helgert, Professor of Engineering and Applied Science. The School of Engineering and Applied Science of The George Washington University certifies that Harry Cornel Shaw has passed the Final Examination for the degree of Doctor of Philosophy as of March 20, 2013. This is the final and approved form of the dissertation. Dissertation Research Committee: Hermann J. Helgert, Professor of Engineering and Applied Science, Dissertation Director; Murray Loew, Professor of Engineering and Applied Science, Committee Member; John J. Hudiburg, NASA/Goddard Space Flight Center, Exploration and Space Communications Division Mission Systems Engineer, Committee Member; Tian Lan, Assistant Professor of Engineering and Applied Science, Committee Member; Sayed Hussein, Professional Lecturer, Department of Electrical and Computer Engineering, The George Washington University, Committee Member. Copyright 2013 by Harry C. Shaw. All rights reserved. Dedication: I dedicate this research to my mother Mary Alice, my wife, Debbie, my sister, Renee and all my friends and colleagues that have supported me through this long ordeal. The GSFC Space Network Project, which has supported me and without their support, this achievement would not have been possible.

UP Research Outputs | 2016

Contents. Faculty of Engineering, Built Environment and Information Technology (EBIT): Dean's Office; School for the Built Environment (Architecture; Construction Economics; Town and Regional Planning); School of Engineering (Chemical Engineering; Civil Engineering; Electrical, Electronic and Computer Engineering; Engineering and Technology Management; Industrial and Systems Engineering; Institute for Technological Innovation; Materials Science and Metallurgical Engineering; Mechanical and Aeronautical Engineering; Mining Engineering); School of Information Technology (Computer Science; Informatics; Information Science). Faculty of Economic and Management Sciences (EMS): Dean's Office; Accounting; Auditing; Business Management; Business Management: Division of Communication Management; Economics; Financial …; Human Resource Management; Marketing Management; Marketing Management: Division of Tourism Management; School of Public Management and Administration; Taxation. Faculty of Education: Dean's Office; Early Childhood Education; Education Management and Policy Studies; Educational Psychology; Humanities Education; Science, Mathematics and Technology Education; Unit for Distance Learning. Gordon Institute of Business Science (GIBS): Gordon Institute of Business Science. Faculty of Health Sciences: Dean's Office; School of Dentistry (Community Dentistry; Dental Management Sciences; Maxillo-Facial and Oral Surgery; Odontology; Oral Pathology and Oral Biology; Periodontics and Oral Medicine; Prosthodontics); School of Health Care Sciences (Human Nutrition; Nursing Science; Occupational Therapy; …

Patent No.: US 8898021 B2

(12) United States Patent: Perlin. (10) Patent No.: US 8,898,021 B2. (45) Date of Patent: Nov. 25, 2014. (54) METHOD AND SYSTEM FOR DNA MIXTURE ANALYSIS. (76) Inventor: Mark W. Perlin, Pittsburgh, PA (US). (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 09/776,096. (22) Filed: Feb. 2, 2001. (65) Prior Publication Data: US 2002/0152035 A1, Oct. 17, 2002. (51) Int. Cl.: G01N 33/48 (2006.01); G01N 33/50 (2006.01); G01N 31/00 (2006.01); G06F 7/5 (2006.01); G06F 7/16 (2006.01); G06F 7/11 (2006.01); G06F 9/22 (2011.01); … (2011.01). (52) U.S. Cl.: … References cited, U.S. patent documents: 6,274,317 B1 * 8/2001 Hiller et al., 435/6; 6,750,011 B1 * 6/2004 Perlin, 435/6.11; 6,807,490 B1 * 10/2004 Perlin, 702/20. Foreign patent documents: EP 0812621 A1 * 12/1997. Other publications: Gill et al. (Forensic Science International (1998) 91: 41-53).* Clayton et al. (Forensic Science International (1998) 91: 55-70).* Merriam-Webster's Online Dictionary definition of "probability", p. 1.* Abdullah et al., International Journal of Bio-Medical Computing, 1988, vol. 22, pp. 65-72 (abstract only).* www.dictionary.com definition of genotype, Stedman's Medical Dictionary, 2002.* (Continued). Primary Examiner: Russell S Negin. (74) Attorney, Agent, or Firm: Ansel M. Schwartz. (57) ABSTRACT: The present invention pertains to a process for automatically analyzing mixed DNA samples.

JEAS V7 I2 (Corrected).Pdf

Journal of Engineering and Applied Sciences (JEAS). A Refereed Academic Journal Published by the Publishing and Translation Center at Majmaah University. Vol. 7, Issue (2) (November 2020). ISSN: 1658-6638. In this issue: "Network Intrusion Detection Approach using Machine Learning Based on Decision Tree Algorithm" (Elmadena M. Hassan, Mohammed A. Saleh, Awadallah M. Ahmed); "The Use of SNP Genotyping for QTL/Candidate Gene Discovery in Plants" (Salman F. Alamery); "Eye Fixation Operational Definition: Effect on Fixation Duration when Using I-DT" (Amin G Alhashim, Abdulrahman Khamaj); "Evaluating and Measuring the Impact of E-Learning System Adopted in Saudi Electronic University" (Thamer Alhussain). In the name of Allah, the Most Gracious, the Most Merciful. About the Journal. Vision: Pioneer journal in the publication of advanced research in engineering and applied sciences. Mission: A peer-review process which is transparent and rigorous. Objectives: a) Support research that addresses current problems facing humanity. b) Provide an avenue for exchange of research interests and facilitate the communication among researchers. Scope: JEAS accepts articles in the field of engineering and applied sciences.
Areas covered by JEAS include the following. Engineering areas: Architectural Engineering, Chemical Engineering, Civil Engineering, Computer Engineering, Electrical Engineering, Environmental Engineering, Industrial Engineering, Mechanical Engineering. Applied Sciences areas: Applied Mathematics, Applied Physics, Biological Science, Biomathematics, Biotechnology, Computer Sciences, Earth Science, Environmental Science. Computer Sciences areas: Computer Sciences, Information Technology, Information Sciences, Computer Engineering. Correspondence and Subscription: Majmaah University, Post Box 66, Al-Majmaah 11952, KSA. © Copyrights 2018 (1439 H) Majmaah University. All rights reserved.