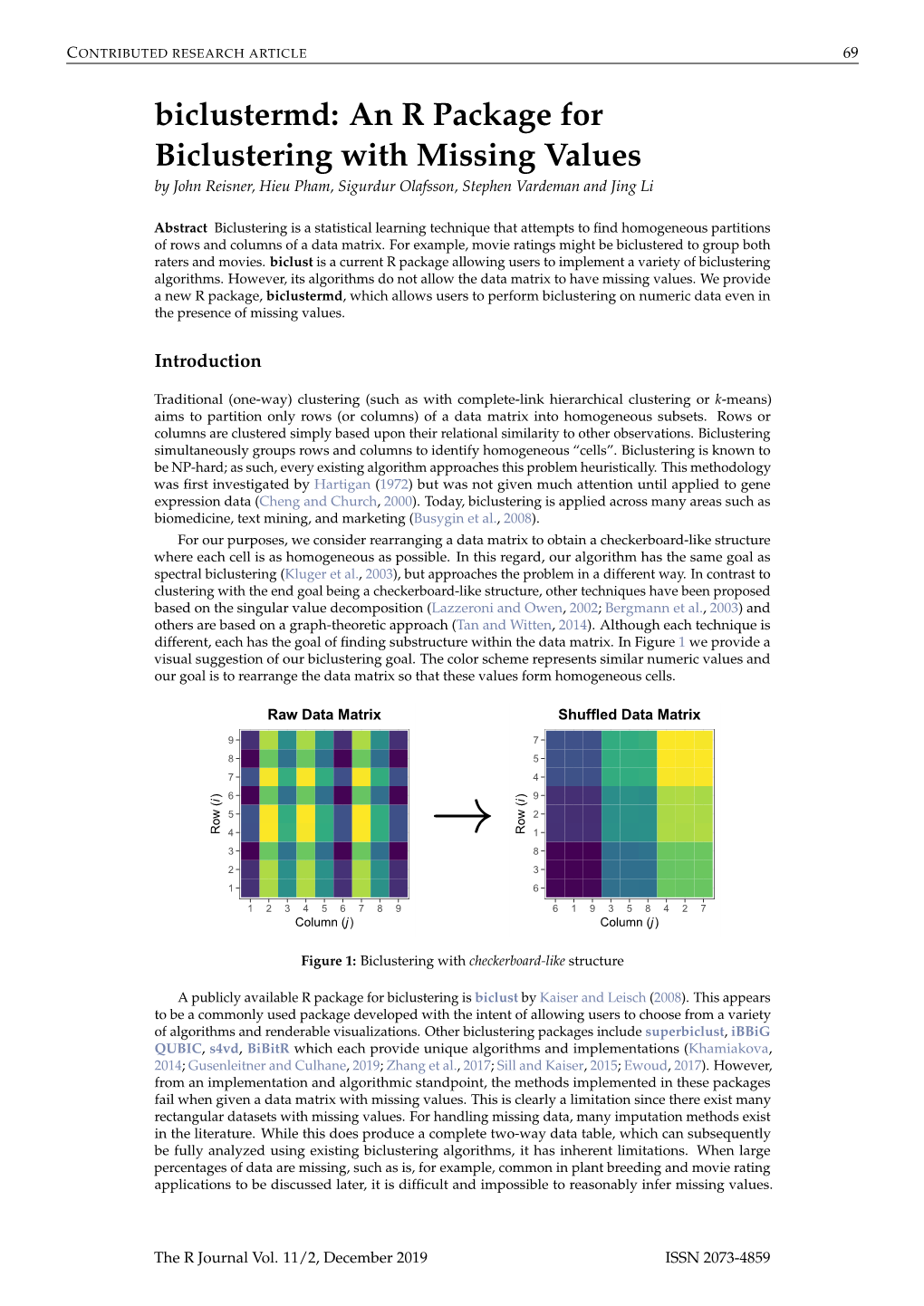

Biclustermd: an R Package for Biclustering with Missing Values by John Reisner, Hieu Pham, Sigurdur Olafsson, Stephen Vardeman and Jing Li

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Quantum Arithmetics and the Relationship Between Real and P-Adic Physics

Quantum Arithmetics and the Relationship between Real and p-Adic Physics. M. Pitkänen. http://tgd.wippiespace.com/public_html/. November 7, 2011.

Abstract. This chapter suggests answers to the basic questions of the p-adicization program, which are the following.

1. Is there a duality between real and p-adic physics? What is its precise mathematical formulation? In particular, what is the concrete map from p-adic physics in long scales (in the real sense) to real physics in short scales? Can one find a rigorous mathematical formulation of the canonical identification induced by the map p → 1/p in the pinary expansion of a p-adic number such that it is both continuous and respects symmetries?
2. What is the origin of the p-adic length scale hypothesis suggesting that primes near powers of two are physically preferred? Why are Mersenne primes especially important?

The answer to these questions proposed in this chapter relies on the following ideas inspired by the model of the Shnoll effect. The first piece of the puzzle is the notion of quantum arithmetics, formulated in a non-rigorous manner already in the model of the Shnoll effect.

1. Quantum arithmetics is induced by the map of primes to quantum primes by the standard formula. A quantum integer is obtained by mapping the primes in the prime decomposition of an integer to quantum primes. The quantum sum is induced by the ordinary sum by requiring that the sum also commutes with the quantization.
2. The construction is especially interesting if the integer defining the quantum phase is prime. One can introduce the notion of a quantum rational defined as a series in powers of the preferred prime defining the quantum phase.

Quantum Arithmetics and the Relationship Between Real and P-Adic Physics

Quantum Arithmetics and the Relationship between Real and p-Adic Physics. M. Pitkänen, June 19, 2019. http://tgdtheory.com/public_html/. Recent postal address: Rinnekatu 2-4 A 8, 03620, Karkkila, Finland.

Contents

- 1 Introduction
  - 1.1 Overall View About Variants Of Quantum Integers
  - 1.2 Motivations For Quantum Arithmetics
    - 1.2.1 Model for Shnoll effect
    - 1.2.2 What could be the deeper mathematics behind dualities?
    - 1.2.3 Could quantum arithmetics allow a variant of canonical identification respecting both symmetries and continuity?
    - 1.2.4 Quantum integers and preferred extremals of Kähler action
  - 1.3 Correspondence Along Common Rationals And Canonical Identification: Two Manners To Relate Real And P-Adic Physics
    - 1.3.1 Identification along common rationals
    - 1.3.2 Canonical identification and its variants
    - 1.3.3 Can one fuse the two views about real-p-adic correspondence
  - 1.4 Brief Summary Of The General Vision
    - 1.4.1 Two options for quantum integers
    - 1.4.2 Quantum counterparts of classical groups
- 2 Various options for Quantum Arithmetics
  - 2.1 Comparing options I and II
  - 2.2 About the choice of the quantum parameter q
  - 2.3 Canonical identification for quantum rationals and symmetries
  - 2.4 More about the non-uniqueness of the correspondence between p-adic integers and their quantum counterparts
  - 2.5 The three basic options for Quantum Arithmetics
- 3 Could Lie groups possess quantum counterparts with commutative elements exist?
  - 3.1 Quantum counterparts of special linear groups

Minsquare Factors and Maxfix Covers of Graphs

Minsquare Factors and Maxfix Covers of Graphs. Nicola Apollonio (Department of Statistics, Probability, and Applied Statistics, University of Rome "La Sapienza", Rome, Italy) and András Sebő (CNRS, Leibniz-IMAG, Grenoble, France).

Abstract. We provide a polynomial algorithm that determines for any given undirected graph, positive integer k and various objective functions on the edges or on the degree sequences, as input, k edges that minimize the given objective function. The tractable objective functions include linear, sum of squares, etc. The source of our motivation and at the same time our main application is a subset of k vertices in a line graph, that cover as many edges as possible (maxfix cover). Besides the general algorithm and connections to other problems, the extension of the usual improving paths for graph factors could be interesting in itself: the objects that take the role of the improving walks for b-matchings or other general factorization problems turn out to be edge-disjoint unions of pairs of alternating walks. The algorithm we suggest also works if for any subset of vertices upper, lower bound constraints or parity constraints are given. In particular maximum (or minimum) weight b-matchings of given size can be determined in polynomial time, combinatorially, in more than one way.

1 Introduction. Let G = (V, E) be a graph that may contain loops and parallel edges, and let k > 0 be an integer. The main result of this work is to provide a polynomial algorithm for finding a subgraph of cardinality k that minimizes some pregiven objective function on the edges or the degree sequences of the graph.

Exact Formulas for the Generalized Sum-Of-Divisors Functions

Exact Formulas for the Generalized Sum-of-Divisors Functions. Maxie D. Schmidt, School of Mathematics, Georgia Institute of Technology, Atlanta, GA 30332, USA. Revised: April 23, 2019. arXiv:1705.03488v5 [math.NT] 19 Apr 2019.

Abstract. We prove new exact formulas for the generalized sum-of-divisors functions, $\sigma_\alpha(x) := \sum_{d \mid x} d^\alpha$. The formulas for $\sigma_\alpha(x)$ when $\alpha \in \mathbb{C}$ is fixed and $x \geq 1$ involve a finite sum over all of the prime factors $n \leq x$ and terms involving the r-order harmonic number sequences and the Ramanujan sums $c_d(x)$. The generalized harmonic number sequences correspond to the partial sums of the Riemann zeta function when $r > 1$ and are related to the generalized Bernoulli numbers when $r \leq 0$ is integer-valued. A key part of our new expansions of the Lambert series generating functions for the generalized divisor functions is formed by taking logarithmic derivatives of the cyclotomic polynomials, $\Phi_n(q)$, which completely factorize the Lambert series terms $(1 - q^n)^{-1}$ into irreducible polynomials in q. We focus on the computational aspects of these exact expressions, including their interplay with experimental mathematics, and comparisons of the new formulas for $\sigma_\alpha(n)$ and the summatory functions $\sum_{n \leq x} \sigma_\alpha(n)$.

2010 Mathematics Subject Classification: Primary 30B50; Secondary 11N64, 11B83. Keywords: Divisor function; sum-of-divisors function; Lambert series; cyclotomic polynomial.

1 Introduction. 1.1 Lambert series generating functions. We begin our search for interesting formulas for the generalized sum-of-divisors functions, $\sigma_\alpha(n)$ for $\alpha \in \mathbb{C}$, by expanding the partial sums of the Lambert series which generate these functions in the form of [5, §17.10] [13, §27.7]

$$L_\alpha(q) := \sum_{n \geq 1} \frac{n^\alpha q^n}{1 - q^n} = \sum_{m \geq 1} \sigma_\alpha(m) q^m, \quad |q| < 1.$$
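The Lambert series identity quoted in this excerpt can be checked numerically for small cases. The following is a minimal sketch, not taken from the paper: it assumes a non-negative integer exponent `alpha`, and the function names `sigma` and `lambert_coefficients` are illustrative. It expands each term $q^n/(1-q^n)$ as a geometric series and compares the resulting coefficients with the divisor sums.

```python
def sigma(alpha, m):
    """Generalized sum-of-divisors: sum of d**alpha over the divisors d of m."""
    return sum(d ** alpha for d in range(1, m + 1) if m % d == 0)


def lambert_coefficients(alpha, terms):
    """Coefficients of q^1 .. q^terms in sum_{n>=1} n^alpha * q^n / (1 - q^n).

    Uses q^n / (1 - q^n) = q^n + q^(2n) + q^(3n) + ..., so the term with
    index n contributes n**alpha to every coefficient whose index is a
    multiple of n.
    """
    coeffs = [0] * (terms + 1)
    for n in range(1, terms + 1):
        for m in range(n, terms + 1, n):
            coeffs[m] += n ** alpha
    return coeffs[1:]


if __name__ == "__main__":
    for alpha in (0, 1, 2):
        lhs = lambert_coefficients(alpha, 12)
        rhs = [sigma(alpha, m) for m in range(1, 13)]
        print(alpha, lhs == rhs)
```

Truncating at `terms` is safe here because the term with index n only affects coefficients of index at least n.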

Happiness Is Integral

Happiness Is Integral But Not Rational. Andre Bland, Zoe Cramer, Philip de Castro, Desiree Domini, Tom Edgar, Devon Johnson, Steven Klee, Joseph Koblitz, and Ranjani Sundaresan.

A classic problem in recreational mathematics involves adding the squares of the digits of a given number to obtain a new number. For example, from the number 49, we obtain $4^2 + 9^2 = 97$. Although this action may seem a bit arbitrary, something interesting happens when we do it repeatedly. The sum of the squares of the digits in 97 is $9^2 + 7^2 = 130$. Continuing in this way, we obtain the sequence 49, 97, 130, 10, 1, 1, …. At this point, the sequence produces 1s forever. The optimistic mathematician might hope that this will always happen, but alas, it doesn't. For example, if we begin with 2, we obtain 2, 4, 16, 37, 58, 89, 145, 42, 20, 4, …. Once we reach 4, we enter a cycle that returns to 4 every eight steps. […] 1970, pages 83–84) for a proof of this fact.

A number is called happy if it eventually reaches 1 and unhappy otherwise. In this article we explore happiness in several different numeral systems. We include exercises along the way that we hope will enhance your experience. The proofs-based exercises are meant to extend the ideas we present and are completely optional. We also include a few example-driven exercises that we strongly encourage you to complete, as they will be important later in the article.

Everyone Can Find Happiness. As we have seen, only some numbers are happy. Maybe this seems unfair. However, the definition of happiness depends upon the method we use to represent positive integers: We rely heavily on the base-10 notation
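The digit-squaring process described in this excerpt is easy to experiment with. The sketch below is illustrative only and not from the article: the names `digit_square_sum`, `trajectory` and `is_happy` are hypothetical, and the `base` parameter is an assumption added to allow numeral systems other than base 10.

```python
def digit_square_sum(n, base=10):
    """Sum of the squares of the digits of n written in the given base."""
    total = 0
    while n > 0:
        n, digit = divmod(n, base)
        total += digit * digit
    return total


def trajectory(n, base=10):
    """Iterate digit_square_sum from n until reaching 1 or a repeated value.

    The returned list ends with 1, or with the first value seen twice.
    """
    seen = set()
    path = [n]
    while n != 1 and n not in seen:
        seen.add(n)
        n = digit_square_sum(n, base)
        path.append(n)
    return path


def is_happy(n, base=10):
    """True if the iteration starting at n eventually reaches 1."""
    return trajectory(n, base)[-1] == 1


if __name__ == "__main__":
    print(trajectory(49))  # ends at 1
    print(trajectory(2))   # ends when 4 repeats
    print([n for n in range(1, 50) if is_happy(n)])
```

Tracking visited values in a set guarantees termination, since each step maps a number to a bounded range once it is small enough and any non-terminating run must eventually repeat.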


New Factor Pairs for Factorizations of Lambert Series Generating Functions

New Factor Pairs for Factorizations of Lambert Series Generating Functions. Mircea Merca, Academy of Romanian Scientists, Splaiul Independentei 54, Bucharest, 050094 Romania. Maxie D. Schmidt, School of Mathematics, Georgia Institute of Technology, Atlanta, GA 30332, USA. arXiv:1706.02359v1 [math.CO] 7 Jun 2017.

Abstract. We prove several new variants of the Lambert series factorization theorem established in the first article "Generating special arithmetic functions by Lambert series factorizations" by Merca and Schmidt (2017). Several characteristic examples of our new results are presented in the article to motivate the formulations of the generalized factorization theorems. Applications of these new factorization results include new identities involving the Euler partition function and the generalized sum-of-divisors functions, the Möbius function, Euler's totient function, the Liouville lambda function, von Mangoldt's lambda function, and the Jordan totient function.

1. Introduction. 1.1. Lambert series factorization theorems. We consider recurrence relations and matrix equations related to Lambert series expansions of the form [5, §27.7] [1, §17.10]

$$\sum_{n \geq 1} \frac{a_n q^n}{1 - q^n} = \sum_{m \geq 1} b_m q^m, \quad |q| < 1, \tag{1}$$

for prescribed arithmetic functions $a : \mathbb{Z}^+ \to \mathbb{C}$ and $b : \mathbb{Z}^+ \to \mathbb{C}$ where $b_m = \sum_{d \mid m} a_d$. As in [3], we are interested in so-termed Lambert series factorizations of the form

$$\sum_{n \geq 1} \frac{a_n q^n}{1 - q^n} = \frac{1}{C(q)} \sum_{n \geq 1} \left( \sum_{k=1}^{n} s_{n,k} a_k \right) q^n, \tag{2}$$

for arbitrary $\{a_n\}_{n \geq 1}$ and where specifying one of the sequences, $c_n := [q^n] 1/C(q)$ or $s_{n,k}$ with $C(0) := 1$, uniquely determines the form of the other.

Unconditional Class Group Tabulation to 2⁴⁰

University of Calgary. PRISM: University of Calgary's Digital Repository. Graduate Studies, The Vault: Electronic Theses and Dissertations. 2014-07-21. Unconditional Class Group Tabulation to 2⁴⁰. Mosunov, Anton. Mosunov, A. (2014). Unconditional Class Group Tabulation to 2⁴⁰ (Unpublished master's thesis). University of Calgary, Calgary, AB. doi:10.11575/PRISM/28551 http://hdl.handle.net/11023/1651 Master's thesis. University of Calgary graduate students retain copyright ownership and moral rights for their thesis. You may use this material in any way that is permitted by the Copyright Act or through licensing that has been assigned to the document. For uses that are not allowable under copyright legislation or licensing, you are required to seek permission. Downloaded from PRISM: https://prism.ucalgary.ca

UNIVERSITY OF CALGARY. Unconditional Class Group Tabulation to 2⁴⁰, by Anton S. Mosunov. A thesis submitted to the Faculty of Graduate Studies in partial fulfillment of the requirements for the degree of Master of Science, Department of Computer Science, Calgary, Alberta, July, 2014. © Anton S. Mosunov 2014.

Abstract. In this thesis, we aim to tabulate the class groups of binary quadratic forms for all fundamental discriminants $\Delta < 0$ satisfying $|\Delta| < 2^{40}$. Our computations are performed in several stages. We first multiply large polynomials in order to produce class numbers $h(\Delta)$ for $\Delta \not\equiv 1 \pmod{8}$. This stage is followed by the resolution of class groups $Cl_\Delta$ with the Buchmann-Jacobson-Teske algorithm, which can be significantly accelerated when the class numbers are known. In order to determine $Cl_\Delta$ for $\Delta \equiv 1 \pmod{8}$, we use this algorithm in conjunction with Bach's conditional averaging method and the Eichler-Selberg trace formula, required for unconditional verification.

Geometric Langlands Program As Equivalent Unified Approach Will

Submitted exclusively to the London Mathematical Society. doi:10.1112/0000/000000. Geometric Langlands program as equivalent unified approach will succeed in providing proof for Riemann hypothesis that comply with Principle of Maximum Density for Integer Number Solutions. J. Ting. Preprint submitted to viXra on August 12, 2021.

Abstract. The 1859 Riemann hypothesis conjectured all nontrivial zeros in Riemann zeta function are uniquely located on sigma = 1/2 critical line. Derived from Dirichlet eta function [proxy for Riemann zeta function] are, in chronological order, simplified Dirichlet eta function and Dirichlet Sigma-Power Law. Computed Zeroes from the former uniquely occur at sigma = 1/2 resulting in total summation of fractional exponent (–sigma) that is twice present in this function to be integer –1. Computed Pseudo-zeroes from the latter uniquely occur at sigma = 1/2 resulting in total summation of fractional exponent (1 – sigma) that is twice present in this law to be integer 1. All nontrivial zeros are, respectively, obtained directly and indirectly as one specific type of Zeroes and Pseudo-zeroes only when sigma = 1/2. Thus, it is proved that Riemann hypothesis is true whereby this function and law must rigidly comply with Principle of Maximum Density for Integer Number Solutions. The unified geometrical-mathematical approach used in our proof is equivalent to the unified algebra-geometry approach of geometric Langlands program.

Contents

- 1. Introduction
  - 1.1 General notations and Figures 1, 2, 3 and 4
  - 1.2 Equivalence of our unified geometrical-mathematical approach and the approach of geometric Langlands program including p-adic Riemann zeta function ζ_p(s)

Rigorous Proof for Riemann Hypothesis That Rigidly Comply with Principle of Maximum Density for Integer Number Solutions

viXra manuscript. Rigorous proof for Riemann hypothesis that rigidly comply with Principle of Maximum Density for Integer Number Solutions. John Ting. June 17, 2021.

Abstract. The 1859 Riemann hypothesis conjectured all nontrivial zeros in Riemann zeta function are uniquely located on sigma = 1/2 critical line. Derived from Dirichlet eta function [proxy for Riemann zeta function] are, in chronological order, simplified Dirichlet eta function and Dirichlet Sigma-Power Law. Computed Zeroes from the former uniquely occur at sigma = 1/2 resulting in total summation of fractional exponent (–sigma) that is twice present in this function to be integer –1. Computed Pseudo-zeroes from the latter uniquely occur at sigma = 1/2 resulting in total summation of fractional exponent (1 – sigma) that is twice present in this law to be integer 1. All nontrivial zeros are, respectively, obtained directly and indirectly as one specific type of Zeroes and Pseudo-zeroes only when sigma = 1/2. Thus, it is proved that Riemann hypothesis is true whereby this function and law must rigidly comply with Principle of Maximum Density for Integer Number Solutions.

Keywords: Dirichlet Sigma-Power Law · Pseudo-zeroes · Riemann hypothesis · Zeroes. Mathematics Subject Classification (2010): 11M26 · 11A41.

Contents

- 1 Introduction
- 2 Notation and sketch of the proof
- 3 The Completely Predictable and Incompletely Predictable entities
- 4 The exact and inexact Dimensional analysis homogeneity for Equations
- 5 Gauss Areas of Varying Loops and Principle of Maximum Density for Integer Number Solutions
- 6 Simple observation on Shift of Varying Loops in ζ(σ + ıt) Polar Graph and Principle of Equidistant for Multiplicative Inverse

2017/1 Edition

Number Theory: In Context and Interactive. Karl-Dieter Crisman, Gordon College. January 24, 2017.

About the Author. Karl-Dieter Crisman has degrees in mathematics from Northwestern University and the University of Chicago. He has taught at a number of institutions, and has been a professor of mathematics at Gordon College in Massachusetts since 2005. His research is in the mathematics of voting and choice, and one of his teaching interests is (naturally) combining programming and mathematics using SageMath. He has given invited talks on both topics in various venues on three continents. Other (mathematical) interests include fruitful connections between mathematics and music theory, the use of service-learning in courses at all levels, connections between faith and math, and editing. Non-mathematical interests he wishes he had more time for include playing keyboard instruments and exploring new (human and computer) languages. But playing strategy games and hiking with his family is most interesting of all.

Edition: 2017/1 Edition. Website: math.gordon.edu/ntic. © 2011–2017 Karl-Dieter Crisman. This work is (currently) licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

To my students and the Sage community; let's keep exploring together.

Acknowledgements. This text evolved over the course of teaching MAT 338 Number Theory for many years at Gordon College, and immense thanks are due to the students through five offerings of this course for bearing with using a text-in-progress. The Sage Math team and especially the Sage cell server have made an interactive book of this nature possible online, and the Mathbook XML and MathJax projects have contributed immensely to its final form, as should be clear.

Averaging Average Orders

Averaging Average Orders. Thomas A. Hulse, Colby College. Joint work with Chan Ieong Kuan, David Lowry-Duda and Alexander Walker. 2015 Maine-Québec Number Theory Conference, The University of Maine, Orono, 4 October 2015. Sections: Classical Problems, Automorphic Forms, Average Order, Dirichlet Series.

Classical Problems. For $m \in \mathbb{Z}_{\geq 0}$, let

$$r(m) = \#\{m = x^2 + y^2 \mid x, y \in \mathbb{Z}\}$$

be the sum of squares function, which counts the number of integer points on the circle of radius $\sqrt{m}$ centered at the origin. Gauss's Circle Problem is concerned with estimating $P(x)$, for $x > 1$, where $P(x)$ is defined as an error term of the sum,

$$\sum_{m \leq x} r(m) = \pi x + P(x),$$

which counts the number of lattice points inside the circle of radius $\sqrt{x}$. Specifically, it is concerned with the smallest $\theta$ such that $P(x) = O(x^{\theta + \varepsilon})$ for all $\varepsilon > 0$. Gauss was able to prove that $\theta \leq \frac{1}{2}$.
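The quantities in the circle problem can be computed directly for small x. The sketch below is a brute-force illustration, not a method from the talk: the function names `r`, `lattice_points` and `circle_error` are hypothetical, and the approach is only practical for modest values of x.

```python
import math


def r(m):
    """Number of integer pairs (x, y) with x*x + y*y == m."""
    count = 0
    limit = math.isqrt(m)
    for x in range(-limit, limit + 1):
        rest = m - x * x
        y = math.isqrt(rest)
        if y * y == rest:
            # y == 0 gives one point on this column, otherwise +y and -y.
            count += 1 if y == 0 else 2
    return count


def lattice_points(x):
    """Sum of r(m) for integers 0 <= m <= x: lattice points with x^2 + y^2 <= x."""
    return sum(r(m) for m in range(0, int(x) + 1))


def circle_error(x):
    """The error term P(x) in: sum_{m <= x} r(m) = pi * x + P(x)."""
    return lattice_points(x) - math.pi * x


if __name__ == "__main__":
    for x in (10, 100, 1000):
        print(x, lattice_points(x), circle_error(x), math.sqrt(x))
```

Printing the square root of x next to P(x) gives a rough feel for the bound attributed to Gauss in the excerpt, although a handful of sample values proves nothing about the true exponent.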

Minconvex Factors of Prescribed Size in Graphs∗

Minconvex Factors of Prescribed Size in Graphs∗. Nicola Apollonio†, András Sebő‡. January 24, 2006.

Abstract. We provide a polynomial algorithm that determines for any given undirected graph, positive integer k and a separable convex function on the degree sequences, k edges that minimize the function. The motivation and at the same time the main application of the results is the problem of finding a subset of k vertices in a line graph, that cover as many edges as possible (we called it maxfix cover even though k is part of the input), generalizing the vertex cover problem for line graphs, equivalent to the maximum matching problem in graphs. The usual improving paths or walks for factorization problems turn into edge-disjoint unions of pairs of such paths for this problem. We also show several alternatives for reducing the problem to b-matching problems, leading to generalizations and variations. The algorithms we suggest also work if for any subset of vertices, upper, lower bound constraints or parity constraints are given. In particular maximum (or minimum) weight b-matchings of given size can be determined in polynomial time, combinatorially, in more than one way, for arbitrary b. Furthermore, we provide an alternative "gadget reduction" method to solve the problems which leads to further generalizations, provides ideas to alter the usual optimization problems themselves, and solves the arising problems in polynomial time.

∗ A preliminary shortened version with part of the results (and a small part of the proofs) has appeared in the IPCO 2004 volume: LNCS 3064 (D. Bienstock and G. Nemhauser, eds.).

† University "La Sapienza" di Roma, supported by the European project ADONET while the author visited Laboratoire Leibniz (IMAG), Grenoble.

‡ CNRS, Leibniz-IMAG, Grenoble, France.