Reaching Definitions: Which Assignments Reach a Program Statement • Available Expressions • Live Variables • Dead Code • …

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Equality Saturation: a New Approach to Optimization

Logical Methods in Computer Science, Vol. 7 (1:10) 2011, pp. 1–37. Submitted Oct. 12, 2009; published Mar. 28, 2011. www.lmcs-online.org. EQUALITY SATURATION: A NEW APPROACH TO OPTIMIZATION. ROSS TATE, MICHAEL STEPP, ZACHARY TATLOCK, AND SORIN LERNER, Department of Computer Science and Engineering, University of California, San Diego. E-mail address: {rtate,mstepp,ztatlock,lerner}@cs.ucsd.edu. Abstract. Optimizations in a traditional compiler are applied sequentially, with each optimization destructively modifying the program to produce a transformed program that is then passed to the next optimization. We present a new approach for structuring the optimization phase of a compiler. In our approach, optimizations take the form of equality analyses that add equality information to a common intermediate representation. The optimizer works by repeatedly applying these analyses to infer equivalences between program fragments, thus saturating the intermediate representation with equalities. Once saturated, the intermediate representation encodes multiple optimized versions of the input program. At this point, a profitability heuristic picks the final optimized program from the various programs represented in the saturated representation. Our proposed way of structuring optimizers has a variety of benefits over previous approaches: our approach obviates the need to worry about optimization ordering, enables the use of a global optimization heuristic that selects among fully optimized programs, and can be used to perform translation validation, even on compilers other than our own. We present our approach, formalize it, and describe our choice of intermediate representation. We also present experimental results showing that our approach is practical in terms of time and space overhead, is effective at discovering intricate optimization opportunities, and is effective at performing translation validation for a realistic optimizer.

Scalable Conditional Induction Variables (CIV) Analysis

Scalable Conditional Induction Variables (CIV) Analysis. Cosmin E. Oancea, Department of Computer Science, University of Copenhagen, [email protected]. Lawrence Rauchwerger, Department of Computer Science and Engineering, Texas A & M University, [email protected]. Abstract: Subscripts using induction variables that cannot be expressed as a formula in terms of the enclosing-loop indices appear in the low-level implementation of common programming abstractions such as filter, or stack operations and pose significant challenges to automatic parallelization. Because the complexity of such induction variables is often due to their conditional evaluation across the iteration space of loops we name them Conditional Induction Variables (CIV). This paper presents a flow-sensitive technique that summarizes both such CIV-based and affine subscripts to program level, using the same representation.
[Figure 1. Loops with affine and CIV array accesses: (a) a loop that increments k by 2 and writes a(k); (b) the same loop after induction variable substitution, writing a(k0+2*i), with k=k0+MAX(2N,0) after the loop; (c) a loop that increments civ and writes a(civ) only when cond(b(i)) holds.]
Introduction excerpt: […] its closed-form equivalent k0+2*i, which enables its independent evaluation by all iterations. More importantly, the resulted code, shown in Figure 1(b), allows the compiler to verify that the set of points written by any dis[…]
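The contrast this excerpt draws between an affine induction variable and a conditional one can be illustrated with a short Python sketch. It is not the paper's analysis: the function names, the Python rendering of the Fortran loops, and the sample predicate are all assumptions made for illustration. The first two functions show that the incrementing loop and its closed form `k0 + 2*i` write the same indices. The third shows why a conditionally incremented variable has no such per-iteration formula.

```python
def affine_loop(k0, n):
    """Loop with a basic induction variable: k grows by 2 every iteration."""
    k = k0
    written = []
    for i in range(1, n + 1):
        k = k + 2
        written.append(k)        # index used by a(k) = ...
    return written, k


def closed_form_loop(k0, n):
    """Same loop after induction variable substitution: index is k0 + 2*i."""
    written = [k0 + 2 * i for i in range(1, n + 1)]
    final_k = k0 + max(2 * n, 0)  # value of k after the loop
    return written, final_k


def civ_loop(civ0, b, cond):
    """Conditional induction variable: the increment depends on the data in b,
    so the index written in iteration i is not a function of i alone."""
    civ = civ0
    written = []
    for x in b:
        if cond(x):
            civ = civ + 1
            written.append(civ)  # index used by a(civ) = ...
    return written, civ


if __name__ == "__main__":
    print(affine_loop(5, 4))
    print(closed_form_loop(5, 4))
    print(civ_loop(0, [3, -1, 4, -1, 5], lambda x: x > 0))
```

Because every iteration of the closed-form version computes its index independently, the iterations can in principle run in any order. The CIV version needs the running count of earlier successful conditions, which is the difficulty the abstract describes.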

Induction Variable Analysis with Delayed Abstractions

Induction Variable Analysis with Delayed Abstractions¹. SEBASTIAN POP and GEORGES-ANDRÉ SILBER, CRI, Mines Paris, France, and ALBERT COHEN, ALCHEMY group, INRIA Futurs, Orsay, France. We present the design of an induction variable analyzer suitable for the analysis of typed, low-level, three address representations in SSA form. At the heart of our analyzer stands a new algorithm that recognizes scalar evolutions. We define a representation called trees of recurrences that is able to capture different levels of abstractions: from the finer level that is a subset of the SSA representation restricted to arithmetic operations on scalar variables, to the coarser levels such as the evolution envelopes that abstract sets of possible evolutions in loops. Unlike previous work, our algorithm tracks induction variables without prior classification of a few evolution patterns: different levels of abstraction can be obtained on demand. The low complexity of the algorithm fits the constraints of a production compiler as illustrated by the evaluation of our implementation on standard benchmark programs. Categories and Subject Descriptors: D.3.4 [Programming Languages]: Processors: compilers, interpreters, optimization, retargetable compilers; F.3.2 [Logics and Meanings of Programs]: Semantics of Programming Languages: partial evaluation, program analysis. General Terms: Compilers. Additional Key Words and Phrases: Scalar evolutions, static analysis, static single assignment representation, assessing compilers heuristics regressions. ¹ Extension of Conference Paper:

Control Flow Analysis in Scheme

Control Flow Analysis in Scheme. Olin Shivers, Carnegie Mellon University, [email protected]. Abstract: Traditional flow analysis techniques, such as the ones typically employed by optimising Fortran compilers, do not work for Scheme-like languages. This paper presents a flow analysis technique, control flow analysis, which is applicable to Scheme-like languages. As a demonstration application, the information gathered by control flow analysis is used to perform a traditional flow analysis problem, induction variable elimination. Extensions and limitations are discussed. The techniques presented in this paper are backed up by working code. They are applicable not only to Scheme, but also to related languages, such as Common Lisp and ML.
1 The Task. Flow analysis is a traditional optimising compiler technique for determining useful information about a program at compile […] Fortran). Representative texts describing these techniques are [Dragon], and in more detail, [Hecht]. Flow analysis is perhaps the chief tool in the optimising compiler writer's bag of tricks; an incomplete list of the problems that can be addressed with flow analysis includes global constant subexpression elimination, loop invariant detection, redundant assignment detection, dead code elimination, constant propagation, range analysis, code hoisting, induction variable elimination, copy propagation, live variable analysis, loop unrolling, and loop jamming. However, these traditional flow analysis techniques have never successfully been applied to the Lisp family of computer languages. This is a curious omission. The Lisp community has had sufficient time to consider the problem. Flow analysis dates back at least to 1960, ([Dragon], pp. 516), and Lisp is one of the oldest computer programming languages currently in use, rivalled only by Fortran and COBOL. Indeed, the Lisp community has long been concerned with the execution speed of their programs.

Language and Compiler Support for Dynamic Code Generation by Massimiliano A

Language and Compiler Support for Dynamic Code Generation by Massimiliano A. Poletto. S.B., Massachusetts Institute of Technology (1995); M.Eng., Massachusetts Institute of Technology (1995). Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, September 1999. © Massachusetts Institute of Technology 1999. All rights reserved. Author: Department of Electrical Engineering and Computer Science, June 23, 1999. Certified by: M. Frans Kaashoek, Associate Professor of Electrical Engineering and Computer Science, Thesis Supervisor. Accepted by: Arthur C. Smith, Chairman, Departmental Committee on Graduate Students. Submitted to the Department of Electrical Engineering and Computer Science on June 23, 1999, in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Abstract: Dynamic code generation, also called run-time code generation or dynamic compilation, is the creation of executable code for an application while that application is running. Dynamic compilation can significantly improve the performance of software by giving the compiler access to run-time information that is not available to a traditional static compiler. A well-designed programming interface to dynamic compilation can also simplify the creation of important classes of computer programs. Until recently, however, no system combined efficient dynamic generation of high-performance code with a powerful and portable language interface. This thesis describes a system that meets these requirements, and discusses several applications of dynamic compilation.

Strength Reduction of Induction Variables and Pointer Analysis – Induction Variable Elimination

CS 412/413 Spring 2008, Introduction to Compilers: Loop Optimizations and Pointer Analysis
Loop optimizations
• Optimize loops
  – Loop invariant code motion [last time]
  – Strength reduction of induction variables
  – Induction variable elimination
Strength Reduction
• Basic idea: replace expensive operations (multiplications) with cheaper ones (additions) in definitions of induction variables
• Before: while (i<10) { j = 3*i+1; /* <i,3,1> */ a[j] = a[j] - 2; i = i+2; }
• After: s = 3*i+1; while (i<10) { j = s; a[j] = a[j] - 2; i = i+2; s = s+6; }
• Benefit: cheaper to compute s = s+6 than j = 3*i
  – s = s+6 requires an addition
  – j = 3*i requires a multiplication
Induction Variables
• An induction variable is a variable in a loop whose value is a function of the loop iteration number: v = f(i)
• In compilers, this is a linear function: f(i) = c*i + d
• Observation: linear combinations of linear functions are linear functions
  – Consequence: linear combinations of induction variables are induction variables
Families of Induction Variables
• Basic induction variable: a variable whose only definition in the loop body is of the form i = i + c, where c is a loop-invariant value
• Derived induction variables: Each basic induction variable i defines
Representation
• Representation of induction variables in family i by triples:
  – Denote basic induction variable i by <i, 1, 0>
  – Denote induction variable k=i*a+b by triple <i, a, b>
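The strength-reduction example in the slide excerpt above can be checked with a small Python sketch. It is only an illustration under stated assumptions: the slide does not give the initial value of `i` or the array size, so `i = 0` and a 30-element list are assumed here, and the function names are hypothetical. The helper `derived_step` shows where the increment 6 comes from: for a derived variable with triple `<i, a, b>` and a basic step `c`, the derived variable advances by `a*c` per iteration.

```python
def original_loop(a, i=0):
    """Loop from the slide: j = 3*i + 1 is recomputed with a multiplication."""
    a = list(a)                  # work on a copy so callers can compare results
    while i < 10:
        j = 3 * i + 1            # derived induction variable <i, 3, 1>
        a[j] = a[j] - 2
        i = i + 2                # basic induction variable, step c = 2
    return a


def strength_reduced_loop(a, i=0):
    """Same loop after strength reduction: s tracks 3*i + 1 using additions."""
    a = list(a)
    s = 3 * i + 1                # initialised once, before the loop
    while i < 10:
        j = s
        a[j] = a[j] - 2
        i = i + 2
        s = s + 6                # 6 = 3 * 2, see derived_step below
    return a


def derived_step(a_coeff, basic_step):
    """Per-iteration increment of a derived variable <i, a, b> when i steps by c."""
    return a_coeff * basic_step


if __name__ == "__main__":
    data = list(range(30))       # assumed array contents, large enough for j <= 25
    assert original_loop(data) == strength_reduced_loop(data)
    print(derived_step(3, 2))
```

Both versions touch the same elements, so the transformation preserves behaviour while removing the multiplication from the loop body.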

Generalizing Loop-Invariant Code Motion in a Real-World Compiler

Imperial College London, Department of Computing. Generalizing loop-invariant code motion in a real-world compiler. Author: Paul Colea. Supervisor: Fabio Luporini. Co-supervisor: Prof. Paul H. J. Kelly. MEng Computing Individual Project, June 2015. Abstract: Motivated by the perpetual goal of automatically generating efficient code from high-level programming abstractions, compiler optimization has developed into an area of intense research. Apart from general-purpose transformations which are applicable to all or most programs, many highly domain-specific optimizations have also been developed. In this project, we extend such a domain-specific compiler optimization, initially described and implemented in the context of finite element analysis, to one that is suitable for arbitrary applications. Our optimization is a generalization of loop-invariant code motion, a technique which moves invariant statements out of program loops. The novelty of the transformation is due to its ability to avoid more redundant recomputation than normal code motion, at the cost of additional storage space. This project provides a theoretical description of the above technique which is fit for general programs, together with an implementation in LLVM, one of the most successful open-source compiler frameworks. We introduce a simple heuristic-driven profitability model which manages to successfully safeguard against potential performance regressions, at the cost of missing some speedup opportunities. We evaluate the functional correctness of our implementation using the comprehensive LLVM test suite, passing all of its 497 whole program tests. The results of our performance evaluation using the same set of tests reveal that generalized code motion is applicable to many programs, but that consistent performance gains depend on an accurate cost model.

Efficient Symbolic Analysis for Optimizing Compilers*

Efficient Symbolic Analysis for Optimizing Compilers*. Robert A. van Engelen, Dept. of Computer Science, Florida State University, Tallahassee, FL 32306-4530, [email protected]. Abstract. Because most of the execution time of a program is typically spent in loops, loop optimization is the main target of optimizing and restructuring compilers. An accurate determination of induction variables and dependencies in loops is of paramount importance to many loop optimization and parallelization techniques, such as generalized loop strength reduction, loop parallelization by induction variable substitution, and loop-invariant expression elimination. In this paper we present a new method for induction variable recognition. Existing methods are either ad-hoc and not powerful enough to recognize some types of induction variables, or existing methods are powerful but not safe. The most powerful method known is the symbolic differencing method as demonstrated by the Parafrase-2 compiler on parallelizing the Perfect Benchmarks(R). However, symbolic differencing is inherently unsafe and a compiler that uses this method may produce incorrectly transformed programs without issuing a warning. In contrast, our method is safe, simpler to implement in a compiler, better adaptable for controlling loop transformations, and recognizes a larger class of induction variables. 1 Introduction. It is well known that the optimization and parallelization of scientific applications by restructuring compilers requires extensive analysis of induction variables and dependencies

CSE 401/M501 – Compilers

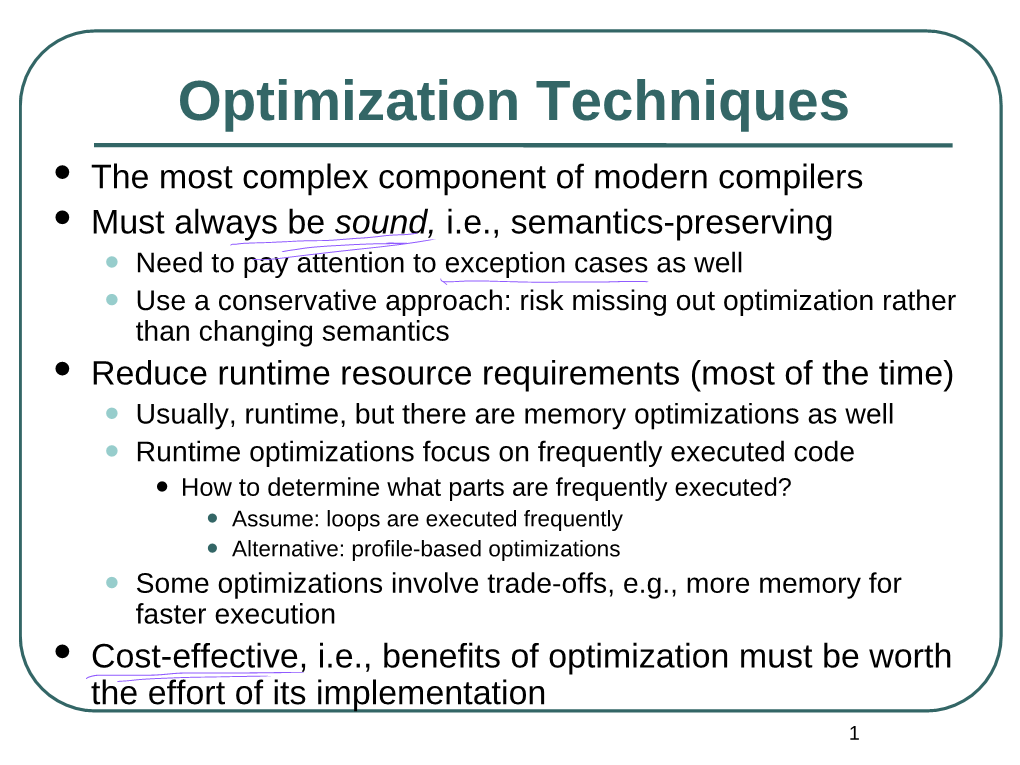

CSE 401/M501 – Compilers: Dataflow Analysis. Hal Perkins, Autumn 2018. UW CSE 401/M501 Autumn 2018
Agenda
• Dataflow analysis: a framework and algorithm for many common compiler analyses
• Initial example: dataflow analysis for common subexpression elimination
• Other analysis problems that work in the same framework
• Some of these are the same optimizations we’ve seen, but more formally and with details
Common Subexpression Elimination
• Goal: use dataflow analysis to find common subexpressions
• Idea: calculate available expressions at beginning of each basic block
• Avoid re-evaluation of an available expression – use a copy operation
  – Simple inside a single block; more complex dataflow analysis used across blocks
[Figure: control-flow graph with blocks A: m = a + b, n = a + b; B: p = c + d, r = c + d; C: q = a + b, r = c + d; D: e = b + 18, s = a + b, u = e + f; E: e = a + 17, t = c + d, u = e + f; F: v = a + b, w = c + d, x = e + f; G: y = a + b, z = c + d]
“Available” and Other Terms
• An expression e is defined at point p in the CFG if its value is computed at p
  – Sometimes called definition site
• An expression e is killed at point p if one of its operands is defined at p
  – Sometimes called kill site
• An expression e is available at point p if every path leading to p contains a prior definition of e and e is not killed between that definition and p
[Figure: a+b defined at t1 = a + b; a+b available at t10 = a + b; a+b killed at b = 7]
Available Expression Sets
• To compute available expressions, for each block
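The definitions of "defined", "killed", and "available" in the slide excerpt above can be turned into a small iterative solver. The following Python sketch is an illustration, not the course's code. The block contents follow the slide's example, but the control-flow edges in `PREDS` are an assumption, since the extracted text does not preserve them. Expressions are modelled as operand pairs, and all function and variable names are hypothetical.

```python
# Statements are (target, (operand1, operand2)) pairs, taken from the slide's blocks.
BLOCKS = {
    "A": [("m", ("a", "b")), ("n", ("a", "b"))],
    "B": [("p", ("c", "d")), ("r", ("c", "d"))],
    "C": [("q", ("a", "b")), ("r", ("c", "d"))],
    "D": [("e", ("b", "18")), ("s", ("a", "b")), ("u", ("e", "f"))],
    "E": [("e", ("a", "17")), ("t", ("c", "d")), ("u", ("e", "f"))],
    "F": [("v", ("a", "b")), ("w", ("c", "d")), ("x", ("e", "f"))],
    "G": [("y", ("a", "b")), ("z", ("c", "d"))],
}
# ASSUMED edges (not recoverable from the excerpt): predecessors of each block.
PREDS = {"A": [], "B": ["A"], "C": ["A"], "D": ["C"], "E": ["C"],
         "F": ["D", "E"], "G": ["B", "F"]}


def local_sets(stmts, all_exprs):
    """Return (gen, kill): expressions computed and still valid at block end,
    and expressions invalidated because one of their operands is assigned."""
    gen, kill = set(), set()
    for target, expr in stmts:
        gen.add(expr)
        killed = {e for e in all_exprs if target in e}
        gen -= killed            # an assignment to an operand invalidates the value
        kill |= killed
    return gen, kill


def available_expressions(blocks, preds, entry):
    """Iterate AVAIL_IN(b) = intersection over predecessors p of
    GEN(p) | (AVAIL_IN(p) - KILL(p)) until nothing changes."""
    all_exprs = {expr for stmts in blocks.values() for _, expr in stmts}
    local = {b: local_sets(stmts, all_exprs) for b, stmts in blocks.items()}
    avail_in = {b: set(all_exprs) for b in blocks}   # optimistic start
    avail_in[entry] = set()                          # nothing available on entry
    changed = True
    while changed:
        changed = False
        for b in blocks:
            if b == entry:
                continue
            outs = [local[p][0] | (avail_in[p] - local[p][1]) for p in preds[b]]
            new_in = set.intersection(*outs) if outs else set()
            if new_in != avail_in[b]:
                avail_in[b] = new_in
                changed = True
    return avail_in


if __name__ == "__main__":
    for block, exprs in sorted(available_expressions(BLOCKS, PREDS, "A").items()):
        print(block, sorted(exprs))
```

Under the assumed edges, `a + b` is available at the start of every block after A, which is what lets a later pass replace those recomputations with copies. The intersection at join points is what makes the analysis conservative: an expression counts as available only if it arrives along every incoming path.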

Compiler-Based Code-Improvement Techniques

Compiler-Based Code-Improvement Techniques. KEITH D. COOPER, KATHRYN S. MCKINLEY, and LINDA TORCZON. Since the earliest days of compilation, code quality has been recognized as an important problem [18]. A rich literature has developed around the issue of improving code quality. This paper surveys one part of that literature: code transformations intended to improve the running time of programs on uniprocessor machines. This paper emphasizes transformations intended to improve code quality rather than analysis methods. We describe analytical techniques and specific data-flow problems to the extent that they are necessary to understand the transformations. Other papers provide excellent summaries of the various sub-fields of program analysis. The paper is structured around a simple taxonomy that classifies transformations based on how they change the code. The taxonomy is populated with example transformations drawn from the literature. Each transformation is described at a depth that facilitates broad understanding; detailed references are provided for deeper study of individual transformations. The taxonomy provides the reader with a framework for thinking about code-improving transformations. It also serves as an organizing principle for the paper. Copyright 1998, all rights reserved. You may copy this article for your personal use in Comp 512. Further reproduction or distribution requires written permission from the authors. 1 INTRODUCTION. This paper presents an overview of compiler-based methods for improving the run-time behavior of programs, often mislabeled code optimization. These techniques have a long history in the literature. For example, Backus makes it quite clear that code quality was a major concern to the implementors of the first Fortran compilers [18].

Lecture 1 Introduction

Lecture 1: Introduction. Carnegie Mellon, 15-745: Introduction, Todd C. Mowry
• What would you get out of this course?
• Structure of a Compiler
• Optimization Example
What Do Compilers Do?
1. Translate one language into another
  – e.g., convert C++ into x86 object code
  – difficult for “natural” languages, but feasible for computer languages
2. Improve (i.e. “optimize”) the code
  – e.g., make the code run 3 times faster
  – driving force behind modern processor design
What Do We Mean By “Optimization”?
• Informal Definition:
  – transform a computation to an equivalent but “better” form
    • in what way is it equivalent?
    • in what way is it better?
• “Optimize” is a bit of a misnomer
  – the result is not actually optimal
How Can the Compiler Improve Performance?
Execution time = Operation count * Machine cycles per operation
• Minimize the number of operations
  – arithmetic operations, memory accesses
• Replace expensive operations with simpler ones
  – e.g., replace 4-cycle multiplication with 1-cycle shift
• Minimize cache misses
  – both data and instruction accesses
• Perform work in parallel
  – instruction scheduling within a thread
  – parallel execution across multiple threads
• Related issue: minimize object code size
  – more important on embedded systems
[Figure: processor, cache, memory]
Other Optimization Goals Besides Performance
• Minimizing power and energy consumption
• Finding (and minimizing the
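The "replace expensive operations with simpler ones" bullet and the execution-time formula in the lecture excerpt above can be made concrete with a short Python sketch. The 4-cycle and 1-cycle costs are the slide's own example figures. The function names and the choice of multiplying by 8 are assumptions for illustration, and Python itself will not show the speed difference. The point is only that the two forms compute the same value while the cost model charges them differently.

```python
def times_eight_multiply(x):
    """Multiply by a power of two using a multiplication."""
    return x * 8


def times_eight_shift(x):
    """Equivalent form using a left shift by 3, since 8 == 2**3."""
    return x << 3


def execution_time(operation_count, cycles_per_operation):
    """Slide's model: execution time = operation count * cycles per operation."""
    return operation_count * cycles_per_operation


if __name__ == "__main__":
    # The two forms agree on every integer tried, including negatives.
    assert all(times_eight_multiply(x) == times_eight_shift(x)
               for x in range(-100, 101))
    # Cost of 1000 operations under the slide's example cycle counts.
    print(execution_time(1000, 4), execution_time(1000, 1))
```

The equivalence holds for Python's arbitrary-precision integers. A compiler for fixed-width machine integers has to confirm it under that arithmetic before making the substitution.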

Simplification of Array Access Patterns for Compiler Optimizations *

Simplification of Array Access Patterns for Compiler Optimizations*. Yunheung Paek‡, Jay Hoeflinger†, David Padua†. ‡ New Jersey Institute of Technology, paek@cis.njit.edu. † University of Illinois at Urbana-Champaign, {hoefling,padua}@uiuc.edu. Abstract: Existing array region representation techniques are sensitive to the complexity of array subscripts. In general, these techniques are very accurate and efficient for simple subscript expressions, but lose accuracy or require potentially expensive algorithms for complex subscripts. We found that in scientific applications, many access patterns are simple even when the subscript expressions are complex. In this work, we present a new, general array access representation and define operations for it. This allows us to aggregate and simplify the representation enough that precise region operations may be applied to enable compiler optimizations. Our experiments show that these techniques hold promise for speeding up applications. 1 Introduction. The array is one of the most important data structures in imperative programs. Particularly in numerical applications, almost all computation is performed on arrays.
[Figure 1: Access to array X with indices $i_k$, $1 \le k \le d$, in the program section P such that $l_k \le i_k \le u_k$.]
[…] be determined. For this purpose, when doing array access analysis for the references to an array, a compiler would first calculate a Reference Descriptor (RD) for each, which extends the array reference with range constraints. For instance, given that $i_k$ ranges from $l_k$ to $u_k$ in P (for $k = 1, 2, \ldots, d$), the RD for the access in Figure 1 is “$X(s_1(i), s_2(i), \ldots, s_m(i))$ subject to $l_k \le i_k \le u_k$, for $k = 1, 2, \ldots, d$.”