Dynamic Difficulty Adjustment Via Ranking of Available Actions

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Evaluating Dynamic Difficulty Adaptivity in Shoot'em up Games

SBC - Proceedings of SBGames 2013, Computing Track – Full Papers. Evaluating dynamic difficulty adaptivity in shoot’em up games. Bruno Baère Pederassi Lomba de Araujo and Bruno Feijó, VisionLab/ICAD, Dept. of Informatics, PUC-Rio, Rio de Janeiro, RJ, Brazil. Abstract—In shoot’em up games, the player engages in a solitary assault against a large number of enemies, which calls for a very fast adaptation of the player to a continuous evolution of the enemies’ attack patterns. This genre of video game is quite appropriate for studying and evaluating dynamic difficulty adaptivity in games that can adapt themselves to the player’s skill level while keeping him/her constantly motivated. In this paper, we evaluate the use of dynamic adaptivity for casual and hardcore players, from the perspectives of the flow theory and the model of core elements of the game experience. Also we present an adaptive model for shoot’em up games based on player modeling and online learning, which is both simple and effective. [...] up game called Zanac [12], [13]. More recent games that implement some kind of difficulty adaptivity are Mario Kart 64 [40], Max Payne [20], the Left 4 Dead series [53], [54], and the GundeadliGne series [2]. Charles et al. [9], [10] proposed a framework for creating dynamic adaptive systems for video games including the use of player modeling for the assessment of the system’s response. Hunicke et al. [27], [28] proposed and tested an adaptive system for FPS games where the deployment of resources, [...]

View Portfolio Document

games assets portfolio FULL GAME CREDITS ACTIVISION InXILE Starbreeze Call of Duty: Ghosts Heist The walking dead Call of Duty: Advanced Warfare Call of Duty: Black Ops 3 IO INTERACTIVE SQUARE ENIX Call of Duty: Infinity Warfare Hitman: Absolution Bravely Default BIOWARE KABAM THQ Dragon Age: Inquisition Spirit Lords Darksiders Saints Row 2 CRYSTAL DYNAMICS KONAMI Tomb Raider 2013 Silent Hill: Shattered Memories TORUS Rise of the Tomb Raider Barbie: Life in the Dreamhouse MIDWAY Falling Skies: Planetary Warfare ELECTRONIC ARTS NFL Blitz 2 How to Train Your Dragon 2 DarkSpore Penguins of Madagascar FIFA 09/10/11/12/13/14/15/16/17/18/19 PANDEMIC STUDIOS Fight Night 4 The Sabateur VICIOUS CYCLE Harry Potter – Deathly Hallows Part 1 & 2 Ben 10: Alien Force NBA Live 09/10/12/13 ROCKSTAR GAMES Dead Head Fred NCAA Football 09/10/11/12/13/14 LA Noire NFL Madden 11/12/13/14/15 / 18 Max Payne 2 2K NHL 09/10/11/12/13/16/17/18 Max Payne 3 NBA 2K14/15 Rory Mcilroy PGA Tour Red Dead Redemption Tiger Woods 11/12/13 Grand Theft Auto V 505 GAMES Warhammer Online: Age of Reckoning Takedown (Trailer) UFC 1/ 2 /3 SONY COMPUTER ENTERTAINMENT NFS – Payback God of War 2 EPIC GAMES Battlefield 1 In the name of Tsar Sorcery Gears of War 2 Killzone: Shadow Fall UBISOFT Assassin’s Creed GAMELOFT Starlink Asphalt 9 Steep Rainbow 6 KEYFRAME ANIMATION ASSET CREATION MOCAP CLEANUP LIGHTING FX UBISOFT Assassin Creed Odyssey UBISOFT UBISOFT Assassin Creed Odyssey UBISOFT Assassin Creed Odyssey UBISOFT Assassin Creed Odyssey UBISOFT Assassin Creed Odyssey Electronic Arts -

THESIS: Grand Theft Auto IV. Impact and Context in Video Games as

UNIVERSIDAD NACIONAL AUTÓNOMA DE MÉXICO, FACULTAD DE ESTUDIOS SUPERIORES ACATLÁN. Grand Theft Auto IV. Impact and context in video games as part of mass culture. Thesis submitted to obtain the degree of Licenciado en Comunicación (bachelor's degree in Communication). Presented by David Mendieta Velázquez. Thesis advisor: Mtro. José C. Botello Hernández. UNAM – Dirección General de Bibliotecas, Tesis Digitales. Restrictions on use. ALL RIGHTS RESERVED © TOTAL OR PARTIAL REPRODUCTION PROHIBITED. All material contained in this thesis is protected by the Federal Copyright Law (Ley Federal del Derecho de Autor, LFDA) of the United Mexican States (Mexico). The use of images, video fragments, and other material subject to copyright protection shall be exclusively for educational and informational purposes and must cite the source from which it was obtained, naming the author or authors. Any other use, such as for profit, reproduction, editing, or modification, will be pursued and sanctioned by the respective copyright holder. Grand Theft Auto IV. Impact and context in video games as part of mass culture. Acknowledgements. To my parents. Thank you, Dad, for teaching me values and for trying to teach me everything you knew so that I could become someone important. I know that from heaven you are proud of your family. Mom, thank you for all your support over all these years; I know your effort is enormous, and this work reflects only a little of your sleepless nights and worries. Thank you for all your support in completing this work. To Ariadna Pruneda Alcántara. Thank you, my love, for all your help and understanding, for the guidance, opinions, and interest you have given me in carrying out every project I have set myself, and for being the motivation to always keep going.

Rockstar Games Announces Max Payne 3 Release Date

Rockstar Games Announces Max Payne 3 Release Date January 17, 2012 8:30 AM ET NEW YORK--(BUSINESS WIRE)--Jan. 17, 2012-- Rockstar Games, a publishing label of Take-Two Interactive Software, Inc. (NASDAQ: TTWO), is proud to announce that Max Payne 3 is expected to launch on the Xbox 360® video game and entertainment system from Microsoft and PlayStation®3 computer entertainment system on May 15, 2012 in North America and May 18, 2012 internationally; and for the PC on May 29, 2012 in North America and June 1, 2012 internationally. “Max Payne 3 brings powerful storytelling back to the action-shooter genre,” said Sam Houser, Founder of Rockstar Games. “Rockstar Studios are delivering a game that’s both incredibly cinematic and very, very intense to play.” Based on incredibly precise and fluid gunplay and maintaining the series’ famed dark and cinematic approach, Max Payne 3 follows the famed former New York detective onto the streets of São Paulo, Brazil. Max Payne now works in executive protection for the wealthy Rodrigo Branco in the hopes of escaping the memories of his troubled past. When a street gang kidnaps Rodrigo’s wife, Max is pulled into a conspiracy of shadowy, warring factions threading every aspect of São Paulo society in a deadly web that threatens to engulf everyone and everything around him. In another first for the series, Max Payne 3’s multiplayer offering brings the game’s cinematic feel, fluid gunplay and kinetic sense of movement into the realm of online multiplayer. Building on the fiction and signature gameplay elements of the Max Payne universe, Max Payne 3 features a wide range of new and traditional multiplayer modes that play on the themes of paranoia, betrayal and heroism, all delivered with the same epic visual style of the single-player game. -

3.2 Bullet Time and the Mediation of Post-Cinematic Temporality

3.2 Bullet Time and the Mediation of Post-Cinematic Temporality BY ANDREAS SUDMANN I’ve watched you, Neo. You do not use a computer like a tool. You use it like it was part of yourself. —Morpheus in The Matrix Digital computers, these universal machines, are everywhere; virtually ubiquitous, they surround us, and they do so all the time. They are even inside our bodies. They have become so familiar and so deeply connected to us that we no longer seem to be aware of their presence (apart from moments of interruption, dysfunction—or, in short, events). Without a doubt, computers have become crucial actants in determining our situation. But even when we pay conscious attention to them, we necessarily overlook the procedural (and temporal) operations most central to computation, as these take place at speeds we cannot cognitively capture. How, then, can we describe the affective and temporal experience of digital media, whose algorithmic processes elude conscious thought and yet form the (im)material conditions of much of our life today? In order to address this question, this chapter examines several examples of digital media works (films, games) that can serve as central mediators of the shift to a properly post-cinematic regime, focusing particularly on the aesthetic dimensions of the popular and transmedial “bullet time” effect. Looking primarily at the first Matrix film (1999), as well as digital games like the Max Payne series (2001; 2003; 2012), I seek to explore how the use of bullet time serves to highlight the medial transformation of temporality and affect that takes place with the advent of the digital—how it establishes an alternative configuration of perception and agency, perhaps unprecedented in the cinematic age that was dominated by what Deleuze has called the “movement-image.”[1]

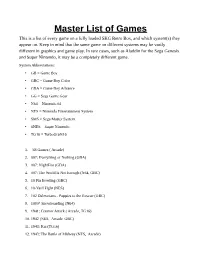

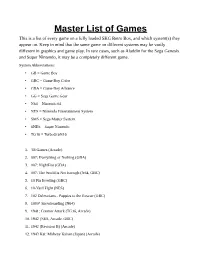

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800)


[Japan] SALA GIOCHI ARCADE 1000 Miglia

NEW PLATINUM PI4 EDITION BOARD. The following list covers most of the titles emulated by the NEW PLATINUM Pi4 board (20,000).

- Computer games (Amiga, Commodore, PC, etc.) require a computer keyboard and sometimes a USB mouse connected to the console, since those systems were operated with mouse and keyboard.
- Games that require spinners (e.g. Arkanoid), steering wheels (racing games), or light guns (e.g. Duck Hunt) may not be controllable with a joystick; they require dedicated peripherals, which cannot currently be configured.
- Games that require analog controllers (PlayStation, Nintendo 64, etc.) may not be controllable with single-stick control panels; they require a joypad with analog sticks (sold separately).
- This list refers to the NEW PLATINUM EDITION board based on the Raspberry Pi4.
- Emulators for 3D systems (PlayStation, Nintendo 64, Dreamcast) and computers (Amiga, Commodore) are included ONLY in the NEW PLATINUM Pi4, not in the Pi3 Plus and Gold versions.
- The Atomiswave and Sega Naomi emulators (Virtua Tennis, Virtua Striker, etc.) are included ONLY on the Pi4 boards.
- The PLUS Pi3B+ version emulates only 550 ARCADE titles, randomly generated at the time of purchase and not modifiable.

Last updated 2 September 2020.

| GAME NAME | EMULATOR |
| --- | --- |
| 005 | SALA GIOCHI ARCADE |
| 1 On 1 Government [Japan] | SALA GIOCHI ARCADE |
| 1000 Miglia: Great 1000 Miles Rally | SALA GIOCHI ARCADE |
| 10-Yard Fight | SALA GIOCHI ARCADE |
| 18 Holes Pro Golf | SALA GIOCHI ARCADE |
| 1941: Counter Attack | SALA GIOCHI ARCADE |
| 1942 | SALA GIOCHI ARCADE |
| 1943 Kai: Midway Kaisen | SALA GIOCHI ARCADE |
| 1943: The Battle of Midway [Europe] | SALA GIOCHI ARCADE |
| 1944 : The Loop Master [USA] | SALA GIOCHI ARCADE |
| 1945k III | SALA GIOCHI ARCADE |
| 19XX : The War Against Destiny [USA] | SALA GIOCHI ARCADE |
| 2 On 2 Open Ice Challenge | SALA GIOCHI ARCADE |
| 4-D Warriors | SALA GIOCHI ARCADE |
| 64th. | |

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

On Videogames: Representing Narrative in an Interactive Medium

September, 2015 On Videogames: Representing Narrative in an Interactive Medium. 'This thesis is submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy' Dawn Catherine Hazel Stobbart, BA (Hons) MA Plagiarism Statement This project was written by me and in my own words, except for quotations from published and unpublished sources which are clearly indicated and acknowledged as such. I am conscious that the incorporation of material from other works or a paraphrase of such material without acknowledgement will be treated as plagiarism, subject to the custom and usage of the subject, according to the University Regulations on Conduct of Examinations. (Name) Dawn Catherine Stobbart (Signature) Dawn Stobbart This thesis is formatted using the Chicago referencing system. Where possible I have collected screenshots from videogames as part of my primary playing experience, and all images should be attributed to the game designers and publishers. Acknowledgements There are a number of people who have been instrumental in the production of this thesis, and without whom I would not have made it to the end. Firstly, I would like to thank my supervisor, Professor Kamilla Elliott, for her continuous and unwavering support of my Ph.D study and related research, for her patience, motivation, and commitment. Her guidance helped me throughout all the time I have been researching and writing of this thesis. When I have faltered, she has been steadfast in my ability. I could not have imagined a better advisor and mentor. I would not be working in English if it were not for the support of my Secondary school teacher Mrs Lishman, who gave me a love of the written word.

Rockstar Games Announces Max Payne 3 Painful Memories Pack Now Available

Rockstar Games Announces Max Payne 3 Painful Memories Pack Now Available December 4, 2012 7:30 AM ET NEW YORK--(BUSINESS WIRE)--Dec. 4, 2012-- Rockstar Games, a publishing label of Take-Two Interactive Software, Inc. (NASDAQ: TTWO), is happy to announce that the Painful Memories Pack, the latest downloadable content pack for Max Payne 3, is now available for download on the Xbox LIVE® online entertainment network, PlayStation® Network for the PlayStation® 3 computer entertainment system, and PC. The Painful Memories Pack includes four new maps for Max Payne 3 multiplayer, from the iconic Roscoe Street Subway of Max's past to the dingy Hoboken watering hole, Marty's Bar. The pack also includes the IMG 5.56 and UAR-21 assault rifles, new avatars, items, and the Hangover Burst, which temporarily causes enemies to respawn with blurred vision and reduced stamina and health. The Painful Memories Pack is now available for 800 Microsoft Points on Xbox LIVE and $9.99 on PlayStation Network and PC. Players who purchased the Max Payne 3 Rockstar Pass can download the pack free of charge. The Max Payne 3 Rockstar Pass is currently available for 2400 Microsoft Points (Xbox LIVE) or $29.99 on PSN. Featuring cutting edge shooting mechanics for precision gunplay, advanced new Bullet Time® and Shootdodge™ effects, full integration of Natural Motion's Euphoria Character Behavior system for lifelike movement and a dark and twisted story, Max Payne 3 is a seamless, highly detailed, cinematic experience from Rockstar Games.

Bullet Time and the Mediation of Post-Cinematic Temporality 2016

Repositorium für die Medienwissenschaft. Andreas Sudmann: Bullet Time and the Mediation of Post-Cinematic Temporality (2016). https://doi.org/10.25969/mediarep/13461. Published version, collection article. Suggested citation: Sudmann, Andreas: Bullet Time and the Mediation of Post-Cinematic Temporality. In: Shane Denson, Julia Leyda (eds.): Post-Cinema. Theorizing 21st-Century Film. Falmer: REFRAME Books 2016, pp. 297–326. DOI: https://doi.org/10.25969/mediarep/13461. Terms of use: This document is made available under a Creative Commons Attribution - Non Commercial - No Derivatives 4.0 License. For more information see: https://creativecommons.org/licenses/by-nc-nd/4.0/ 3.2 Bullet Time and the Mediation of Post-Cinematic Temporality BY ANDREAS SUDMANN I’ve watched you, Neo. You do not use a computer like a tool. You use it like it was part of yourself. —Morpheus in The Matrix Digital computers, these universal machines, are everywhere; virtually ubiquitous, they surround us, and they do so all the time. They are even inside our bodies. They have become so familiar and so deeply connected to us that we no longer seem to be aware of their presence (apart from moments of interruption, dysfunction—or, in short, events). Without a doubt, computers have become crucial actants in determining our situation. But even when we pay conscious attention to them, we necessarily overlook the procedural (and temporal) operations most central to computation, as these take place at speeds we cannot cognitively capture.

Master Thesis Project Artificial Intelligence Adaptation in Video

Master Thesis Project Artificial Intelligence Adaptation in Video Games Author: Anastasiia Zhadan Supervisor: Aris Alissandrakis Examiner: Welf Löwe Reader: Narges Khakpour Semester: VT 2018 Course Code: 4DV50E Subject: Computer Science Abstract One of the most important features of a (computer) game that makes it memorable is an ability to bring a sense of engagement. This can be achieved in numerous ways, but the most major part is a challenge, often provided by in-game enemies and their ability to adapt towards the human player. However, adaptability is not very common in games. Throughout this thesis work, aspects of the game control systems that can be improved in order to be adaptable were studied. Based on the results gained from the study of the literature related to artificial intelligence in games, a classification of games was developed for grouping the games by the complexity of the control systems and their ability to adapt different aspects of enemies' behavior including individual and group behavior. It appeared that only 33% of the games can not be considered adaptable. This classification was then used to analyze the popularity of games regarding their challenge complexity. Analysis revealed that simple, familiar behavior is more welcomed by players. However, highly adaptable games have got competitively high scores and excellent reviews from game critics and reviewers, proving that adaptability in games deserves further research. Keywords: artificial intelligence in games, adaptability in games, non-player character adaptation, challenge Preface Computer games have become an interest for me not so long ago, but since then they have turned almost into a true passion.