The Ontic Inference Language

Recommended publications

Program #6: Word Count

CSc 227, Program Design and Development, Spring 2014 (McCann) http://www.cs.arizona.edu/classes/cs227/spring14/ Program #6: Word Count Due Date: March 11th, 2014, at 9:00 p.m. MST Overview: The UNIX operating system (and its variants, of which Linux is one) includes quite a few useful utility programs. One of those is wc, which is short for Word Count. The purpose of wc is to give users an easy way to determine the size of a text file in terms of the number of lines, words, and bytes it contains. (It can do a bit more, but that’s all of the functionality that we are concerned with for this assignment.) Counting lines is done by looking for “end of line” characters (\n (ASCII 10) for UNIX text files, or the pair \r\n (ASCII 13 and 10) for Windows/DOS text files). Counting words is also straightforward: Any sequence of characters not interrupted by “whitespace” (spaces, tabs, end-of-line characters) is a word. Of course, whitespace characters are characters, and need to be counted as such. A problem with wc is that it generates a very minimal output format. Here’s an example of what wc produces on a Linux system when asked to count the content of a pair of files; we can do better! The command "$ wc prog6a.dat prog6b.dat" prints three rows of line, word, and byte counts: "2 6 38 prog6a.dat", "32 321 1883 prog6b.dat", and "34 327 1921 total". Assignment: Write a Java program (completely documented according to the class documentation guidelines, of course) that counts lines, words, and bytes (characters) of text files. -
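The counting rules in this excerpt can be sketched in a few lines. The assignment itself asks for Java; the Python below is only an illustration of the rules, and the function names `count_text` and `count_file` are hypothetical. It assumes lines are counted by newline characters, as the excerpt describes, so a Windows-style `\r\n` pair still counts as one line.

```python
def count_text(data: bytes) -> tuple[int, int, int]:
    """Return (lines, words, bytes) for the given file contents."""
    # Every "\n" ends a line; a "\r\n" pair also ends in "\n", so it counts once.
    lines = data.count(b"\n")
    # bytes.split() with no argument splits on runs of ASCII whitespace,
    # so each remaining piece is one "word" in the sense described above.
    words = len(data.split())
    # Whitespace bytes are still bytes, so the byte count is the raw length.
    return lines, words, len(data)


def count_file(path: str) -> tuple[int, int, int]:
    """Count lines, words, and bytes of the file at path (read in binary mode)."""
    with open(path, "rb") as handle:
        return count_text(handle.read())
```

Reading in binary mode keeps the byte count faithful to what is on disk, which a text-mode read with newline translation would not guarantee.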

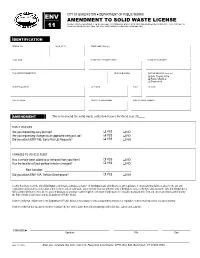

ENV-11 License Amendment

CITY OF BURLINGTON ● DEPARTMENT OF PUBLIC WORKS ENV AMENDMENT TO SOLID WASTE LICENSE TO BE COMPLETED WHEN A VEHICLE FLEET CHANGES OR EARLY PICK UPS ARE REQUESTED PURSUANT TO CHAPTER 14, CODE OF ORDINANCES. (SEE INSTRUCTIONS ON BACK BEFORE COMPLETING) 11 IDENTIFICATION FEDERAL EIN ACCOUNT ID TRADE NAME (DBA, etc.) LEGAL NAME BUSINESS TELEPHONE NUMBER BUSINESS FAX NUMBER TAXPAYER REPRESENTATIVE YEARS IN BUSINESS FORM OF BUSINESS (check one) Sole Proprietorship Partnership/LLC Corporation BUSINESS ADDRESS CITY/TOWN STATE ZIP CODE CONTACT NAME CONTACT PHONE NUMBER CONTACT EMAIL ADDRESS AMENDMENT This is to amend the solid waste collection license for fiscal year 20____. EARLY PICK UPS Are you requesting early pick up? YES NO Are you requesting changes to an approved early pick up? YES NO Did you attach ENV-10B, Early Pick Up Requests? YES N/A CHANGES TO VEHICLE FLEET Has a vehicle been added to or removed from your fleet? YES NO Has the location of your parked vehicles changed? YES NO New Location: Did you attach ENV-10A, Vehicle Descriptions? YES N/A I certify that I have read the City of Burlington’s solid waste ordinance (Chapter 14, Burlington Code of Ordinances) and regulations. I understand that failure to observe the law and regulations could result in a revocation of my license to collect solid waste and recyclable material within the City of Burlington. I also certify that I understand the City of Burlington has a Noise Control Ordinance (Sec. 21-13, Code of Ordinances) and that I cannot begin the collection of solid waste or recyclable material before 7:00 a.m. -

Modern Programming Languages CS508 Virtual University of Pakistan

Modern Programming Languages (CS508), Virtual University of Pakistan. Leaders in Education Technology. © Copyright Virtual University of Pakistan. Table of Contents: Course Objectives; Introduction and Historical Background (Lecture 1-8); Language Evaluation Criterion; Language Evaluation Criterion; An Introduction to SNOBOL (Lecture 9-12); Ada Programming Language: An Introduction (Lecture 13-17); LISP Programming Language: An Introduction (Lecture 18-21); PROLOG - Programming in Logic (Lecture 22-26); Java Programming Language (Lecture 27-30); C# Programming Language (Lecture 31-34); PHP – Personal Home Page PHP: Hypertext Preprocessor (Lecture 35-37); Modern Programming Languages-JavaScript -

Praat Scripting Tutorial

Praat Scripting Tutorial Eleanor Chodroff Newcastle University July 2019 Praat Acoustic analysis program Best known for its ability to: Visualize, label, and segment audio files Perform spectral and temporal analyses Synthesize and manipulate speech Praat Scripting Praat: not only a program, but also a language Why do I want to know Praat the language? AUTOMATE ALL THE THINGS Praat Scripting Why can’t I just modify others’ scripts? Honestly: power, flexibility, control Insert: all the gifs of ‘you can do it’ and ‘you got this’ and thumbs up Praat Scripting Goals ~*~Script first for yourself, then for others~*~ • Write Praat scripts quickly, effectively, and “from scratch” • Learn syntax and structure of the language • Handle various input/output combinations Tutorial Overview 1) Praat: Big Picture 2) Getting started 3) Basic syntax 4) Script types + Practice • Wav files • Measurements • TextGrids • Other? Praat: Big Picture 1) Similar to other languages you may (or may not) have used before • String and numeric variables • For-loops, if else statements, while loops • Regular expression matching • Interpreted language (not compiled) Praat: Big Picture 2) Almost everything is a mouse click! i.e., Praat is a GUI scripting language GUI = Graphical User Interface, i.e., the Objects window If you ever get lost while writing a Praat script, click through the steps using the GUI Getting Started Open a Praat script From the toolbar, select Praat → New Praat script Save immediately! Save frequently! Script Goals and Input/Output • Consider what -

Unique Identifier for Staff (UID Staff) Overview

Unique Identifier for Staff (UID Staff) Overview The intent of this document is to provide an overview of the Unique Identifier for Staff (UID Staff) system prior to working in the UID Staff System. After reviewing this information, please review the training materials that are available on the UID Staff Training website (http://www.ncpublicschools.org/cedars/uniqueid/staff/training/) for instructions on how to complete the processes of the Staff UID System. Staff UID Overview • The Unique Identifier for Staff System (UID Staff) will assign a unique identifier to Staff who participate in the North Carolina public school system. • Unique IDs follow staff between school districts and remain valid even if they move out of state and then return to a NC public school. • Assigning unique identifiers is the first step in DPI’s multi-stage effort to create the NC Common Education Data Analysis and Reporting System (CEDARS). • UID Staff is built on the eScholar Uniq-ID® for Staff product. Overview of UID Staff Process The UID Staff process includes the following 8 steps: 1. Generate CEDARS Staff file from LEA/Charter School Payroll System • Instructions for generating this file can be found at: http://www.ncpublicschools.org/docs/cedars/uniqueid/staff/training/creating-extracts.pdf 2. Upload CEDARS Staff file into UID Staff and Initiates Data Validation • This file can be loaded into the Training Environment (https://cedarstrain.schools.nc.gov/staffid/) so that you can check for errors before loading into production. 3. Download Fix Errors Report (if data errors are found by the UID Staff System) 4. -

Program That Runs Other Programs Unix/Linux Process Hierarchy Shell

Building a Shell. shell: program that runs other programs on behalf of the user. sh: original Unix shell (Stephen Bourne, AT&T Bell Labs, 1977). bash: “Bourne-Again” Shell, widely used default on most Unix/Linux/Mac OS X systems. Others... Shell main loop: while (true) { Print command prompt. Read command line from user. Parse command line. If command is built-in, do it. Else fork process to execute command. In child: execute requested command with execv (never returns). In parent: wait for child to complete. } Unix/Linux Process Hierarchy (diagram labels): [0]; init [1]; Daemon (e.g. httpd); Login shell; Child, Child, Child; Grandchild, Grandchild. terminal ≠ shell: the terminal is the user interface to shell and other programs. Graphical (GUI) vs. command-line (CLI). Command-line terminal (emulator): input (keyboard), output (screen). Background vs. Foreground: Users generally run one command at a time: type command, read output, type another command. Some programs run “for a long time”: $ emacs fizz.txt # shell stuck until emacs exits. A “background” job is a process we don't want to wait for: $ emacs boom.txt & # emacs runs in background; [1] 9073 # while shell is... $ gdb ./umbrella # immediately ready for next command. Don't do this with emacs unless using X windows version. Managing Background Jobs: Shell waits for and reaps foreground jobs. Signals: Signal: small message notifying a process of event in system. -
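The read, parse, fork, exec, wait loop described in this excerpt can be sketched as follows. This is a minimal illustration, not the slides' own code: it is written in Python rather than C, it assumes a Unix-like system (where `os.fork` is available), it uses `os.execvp` (which searches `PATH`) where the slides name `execv`, and the function names `run_command` and `shell_loop` plus the two built-ins chosen (`exit`, `cd`) are assumptions.

```python
import os
import shlex


def run_command(argv: list[str]) -> int:
    """Fork, exec argv in the child, wait in the parent; return the exit status."""
    pid = os.fork()
    if pid == 0:
        # Child: replace this process image with the requested program.
        try:
            os.execvp(argv[0], argv)  # returns only by raising on failure
        except OSError:
            os._exit(127)  # conventional "command not found" status
    # Parent: wait for the child to complete (this reaps the foreground job).
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return 128 + os.WTERMSIG(status)  # child was terminated by a signal


def shell_loop() -> None:
    """Prompt, read, parse, then run a built-in or fork a child."""
    while True:
        try:
            line = input("$ ")  # print command prompt, read command line
        except EOFError:
            break
        argv = shlex.split(line)  # parse command line
        if not argv:
            continue
        if argv[0] == "exit":  # built-in: handled by the shell itself
            break
        if argv[0] == "cd":  # built-in: must change the shell's own directory
            os.chdir(argv[1] if len(argv) > 1 else os.path.expanduser("~"))
            continue
        run_command(argv)  # everything else: fork and exec
```

Calling `shell_loop()` from an interactive session starts the prompt. `cd` has to be a built-in because a forked child changing its own directory would not affect the parent shell.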

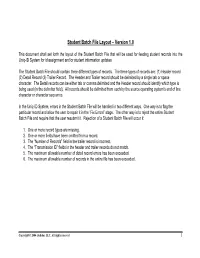

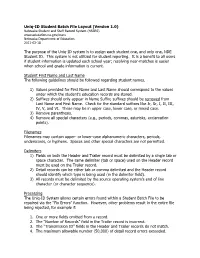

Student Batch File Layout – Version 1.0

Student Batch File Layout – Version 1.0 This document sets forth the layout of the Student Batch File that will be used for feeding student records into the Uniq-ID System for ID assignment and/or student information updates. The Student Batch File should contain three different types of records: (1) Header record (2) Detail record (3) Trailer record. Fields in the Header and Trailer records should be delimited by a single tab or space character. The Detail records can be either tab or comma delimited, and the Header record should identify which type is being used (in the delimiter field). All records should be delimited from each other by the source operating system’s end-of-line character or character sequence. In the Uniq-ID System, errors in the Student Batch File will be handled in two different ways. One way is to flag the particular record and allow the user to repair it in the “Fix Errors” stage. The other way is to reject the entire Student Batch File and require that the user resubmit it. Rejection of a Student Batch File will occur if: 1. One or more record types are missing. 2. One or more fields have been omitted from a record. 3. The “Number of Records” field in the trailer record is incorrect. 4. The “Transmission ID” fields in the header and trailer records do not match. 5. The maximum allowable number of detail record errors has been exceeded. 6. The maximum allowable number of records in the entire file has been exceeded. -
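Some of the file-level rejection rules listed above can be sketched as a simple check. This is a hedged illustration only: the excerpt does not give the field layout, so the `Header` and `Trailer` classes, their field names, and the function `rejection_reasons` are hypothetical. It also assumes that “Number of Records” counts detail records and that the file-size limit counts header, details, and trailer together; rules 2 and 5 are not covered because they depend on the per-record field layout.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Header:
    transmission_id: str
    delimiter: str  # hypothetical encoding, e.g. "tab" or "comma"


@dataclass
class Trailer:
    transmission_id: str
    number_of_records: int  # assumed to count detail records only


def rejection_reasons(
    header: Optional[Header],
    details: list[str],
    trailer: Optional[Trailer],
    max_records: int,
) -> list[str]:
    """Return the file-level reasons to reject a batch (empty list = accept)."""
    # Rule 1: all three record types must be present.
    if header is None or trailer is None or not details:
        return ["one or more record types are missing"]
    reasons = []
    # Rule 3: the trailer's record count must match what was actually sent.
    if trailer.number_of_records != len(details):
        reasons.append("trailer 'Number of Records' is incorrect")
    # Rule 4: header and trailer must carry the same Transmission ID.
    if header.transmission_id != trailer.transmission_id:
        reasons.append("header and trailer 'Transmission ID' do not match")
    # Rule 6: total file size limit (header + details + trailer, by assumption).
    if len(details) + 2 > max_records:
        reasons.append("maximum allowable number of records exceeded")
    return reasons
```

Returning a list of reasons rather than a single boolean lets a caller report every file-level problem in one pass.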

REGISTRATION AS UNLAWFUL DETAINER ASSISTANT CORPORATION Or PARTNERSHIP COUNTY of SHASTA

REGISTRATION AS UNLAWFUL DETAINER ASSISTANT CORPORATION or PARTNERSHIP COUNTY OF SHASTA Filing Fees This space reserved for County Clerk File Stamp use only Filing registration: $175.00 Filing bond: $ 7.00 Recording bond: (1st page) $ 14.00 Total fee payable to Shasta County Clerk $196.00 Each additional ID card $ 10.00 Payable to Shasta County Clerk Each additional page of bond $ 3.00 Additional Fee payable by separate check to Shasta County Recorder Primary Registration Secondary Registration - List County of Primary Registration: Bond: The number of UNLAWFUL DETAINER ASSISTANTS employed by the corporation or partnership will determine the required bond amount. Mark the appropriate box. 1-4 employees ($25,000) 5-9 employees ($50,000) 10 or more employees ($100,000) Business Information: Completely fill in all information requested. Name of Corporation or Partnership: Business Name: Business Address: Phone Number: Corporate Officers or General Partners: Provide the name and age of each Corporate Officer or General Partner. 1) Name: Age: 2) Name: Age: 3) Name: Age: Official Use Only Registration #: Start Date: Expiration Date: (For Renewals - A new # must be assigned if (2 yrs from date of filing/renewal of registration there has been a lapse in registration over 3 years.) or expiration of the bond.) Approved/Denied (Circle one) Clerk’s Initials: Date: Enter in Excel Log Clerk’s Initials: Date: Create Card/Copy Card & Bond Clerk’s Initials: Date: Enter in Riims Clerk’s Initials: Date: Create Label for File Folder Clerk’s Initials: Date: Picked Up/Mailed (circle one) Clerk’s Initials: Date: \\admin\elshare\County Clerk\Unlawful Detainer\Forms and Procedures\Application UDA Corp-Partner 020618.docx Page 1 of 6 Education and Experience This information must be provided for at least one corporate officer or general partner. -

CST 8129 − Ian Allen − Fall 2002 − 150 Minutes − Evaluation: 28 Questions + 1 Script

CST 8129 − Ian Allen − Fall 2002 − 150 minutes. Evaluation: 28 Questions + 1 Script. Name: _________________________ Important Instructions: 1. Read all instructions and both sides of both pages. 2. Manage your time when answering questions on this test. Answer the questions you know, first. 3. The Multiple Choice section is worth 8% of the 25%. There are no penalties for wrong answers. 4. The Script Writing section is worth 17% of the 25%. Multiple Choice Section - 28 Questions - 8% of 25% (Office use only: 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28) 1. If foo is a script containing the line TERM=vt100 ; export TERM, what is the output of the following sequence of bash commands: TERM=linux ; ./foo ; echo $TERM 5. Which bash command sequence correctly searches for the string foobar and then prints YES if it is found inside the group file? †a. if grep foobar /etc/group ; then echo YES ; fi b. if [ grep foobar /etc/group ] ; then echo YES ; fi c. if test foobar /etc/group ; then echo YES ; fi d. if test foobar = /etc/group ; then echo YES ; fi e. if [ test foobar /etc/group ] ; then echo YES ; fi 6. What is the value of variable foobar at the end of the loop that starts: for foobar in 5 5 $# $? 6 1 ; do †a. 1 b. 5 c. 6 d. 0 e. the value is undefined 7. -

Do You Have a Disability – Yes Or No? Or Is There a Better Way of Asking? Guidance on Disability Disclosure and Respecting Confidentiality

Guidance for colleges and other post-16 education providers on implementing the Disability Discrimination Act. Do you have a disability – yes or no? or is there a better way of asking? Guidance on disability disclosure and respecting confidentiality. Christine Rose. This publication results from the Learning and Skills Development Agency’s strategic programme of research and development funded by the Learning and Skills Council, the organisation that exists to make England better skilled and more competitive. For further information about the Learning and Skills Council visit www.LSC.gov.uk. ISBN 1 84572 349 X. Guidance for colleges and adult education providers on encouraging disclosure. Interactive TV. Published by the Learning and Skills Development Agency www.LSDA.org.uk. LSDA is committed to providing publications that are accessible to all. To request additional copies of this publication or a different format, please contact: Information Services, Learning and Skills Development Agency, Regent Arcade House, 19–25 Argyll Street, London W1F 7LS. Tel 020 7297 9144 Fax 020 7297 9242. Registered with the Charity Commissioners. Copyeditor: Nick Sweeney. Designer: Joel Quartey. Printer: Blackmore Ltd, Shaftesbury, Dorset. 052243SP/01/06/3000. © Learning and Skills Development Agency 2006. You are welcome to copy this publication for internal use within your organisation. Otherwise, no part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, electrical, chemical, optical, photocopying, recording or otherwise, without prior written permission of the copyright owner. -

Shell Script: the Shell Is the Part of the UNIX That Is Most Visible to the User. It Receives and Interprets the Commands Entered by the User

Shell Script: The shell is the part of UNIX that is most visible to the user. It receives and interprets the commands entered by the user. In many respects, this makes it the most important component of the UNIX structure. To do anything in the system, we must give the shell a command. If the command requires a utility, the shell requests that the kernel execute the utility. If the command requires an application program, the shell requests that it be run. There are two major parts to a shell. The first is the interpreter. The interpreter reads your commands and works with the kernel to execute them. The second part of the shell is a programming capability that allows you to write a shell (command) script. A shell script is a file that contains shell commands that perform a useful function. It is also known as a shell program. Three standard shells are used in UNIX today. The Bourne shell, developed by Steve Bourne at the AT&T labs, is the oldest. Because it is the oldest and most primitive, it is not used on many systems today. An enhanced version of the Bourne shell, called Bash (Bourne again shell), is used in Linux. The C shell, developed at Berkeley by Bill Joy, received its name from the fact that its commands were supposed to look like C statements. A compatible version of the C shell, called tcsh, is used in Linux. The Korn shell, developed by David Korn, also of the AT&T labs, is the newest and most powerful. -

Uniq-ID Student Batch File Layout

Uniq-ID Student Batch File Layout (Version 1.0) Nebraska Student and Staff Record System (NSSRS) www.education.ne.gov/nssrs Nebraska Department of Education 2011-02-18 The purpose of the Uniq-ID system is to assign each student one, and only one, NDE Student ID. This system is not utilized for student reporting. It is a benefit to all users if student information is updated each school year; resolving near-matches is easier when school and grade information is current. Student First Name and Last Name The following guidelines should be followed regarding student names. 1) Values provided for First Name and Last Name should correspond to the values under which the student’s education records are stored. 2) Suffixes should only appear in Name Suffix; suffixes should be removed from Last Name and First Name. Check for the standard suffixes like Jr, Sr, I, II, III, IV, V, and VI. These may be in upper case, lower case, or mixed case. 3) Remove parentheses. 4) Remove all special characters (e.g., periods, commas, asterisks, exclamation points). Filenames Filenames may contain upper- or lower-case alphanumeric characters, periods, underscores, or hyphens. Spaces and other special characters are not permitted. Delimiters 1) Fields on both the Header and Trailer record must be delimited by a single tab or space character. The same delimiter (tab or space) used on the Header record must be used on the Trailer record. 2) Detail records can be either tab or comma delimited and the Header record should identify which type is being used (in the delimiter field).
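The name and filename guidelines above lend themselves to a short sketch. The function names `split_suffix` and `is_valid_filename` are hypothetical. The code also assumes that “special characters” means only the ones the excerpt lists, plus parentheses, so that apostrophes and hyphens inside names are left alone; the real system may be stricter.

```python
import re

# Standard suffixes named in the guidelines, compared case-insensitively.
SUFFIXES = {"jr", "sr", "i", "ii", "iii", "iv", "v", "vi"}

# Characters stripped from names: the listed special characters plus parentheses.
_STRIP = str.maketrans("", "", ".,*!()")


def split_suffix(name: str) -> tuple[str, str]:
    """Return (cleaned name, suffix); suffix is "" when none is found."""
    tokens = name.translate(_STRIP).split()
    # Only treat the final token as a suffix when something remains before it.
    if len(tokens) > 1 and tokens[-1].lower() in SUFFIXES:
        return " ".join(tokens[:-1]), tokens[-1]
    return " ".join(tokens), ""


def is_valid_filename(filename: str) -> bool:
    """Allow only alphanumerics, periods, underscores, and hyphens."""
    return re.fullmatch(r"[A-Za-z0-9._-]+", filename) is not None
```

For example, `split_suffix("Smith Jr.")` separates the name from the suffix so the suffix can go in the Name Suffix field, and `is_valid_filename("my file.txt")` is rejected because of the space.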