Notes on Theoretical Limitations and Practical Vulnerabilities of Internet Surveillance Capture

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Implementing Cisco Cyber Security Operations

Implementing Cisco Cyber Security Operations (LTRCRT-2222), 2019 CLUS
Paul Ostrowski / Patrick Lao / James Risler, Cisco Security Content Development Engineers
© 2019 Cisco and/or its affiliates. All rights reserved. Cisco Public

Agenda
• Goals and Objectives
• Prerequisite Knowledge & Skills (PKS)
• Introduction to Security Onion
• SECOPS Labs and Topologies
• Access SECFND / SECOPS eLearning Lab Training Environment
• Lab Evaluation
• Cisco Cybersecurity Certification and Education Offerings

Goals and Objectives:
• Today's organizations are challenged with rapidly detecting cybersecurity breaches in order to effectively respond to security incidents. Cybersecurity provides the critical foundation organizations require to protect themselves, enable trust, move faster, add greater value and grow.
• Teams of cybersecurity analysts within Security Operations Centers (SOC) keep a vigilant eye on network security monitoring systems designed to protect their organizations by detecting and responding to cybersecurity threats.
• The goal of Cisco's CCNA Cyber OPS (SECFND / SECOPS) courses is to teach the fundamental skills required to begin a career working as an associate/entry-level cybersecurity analyst within a threat-centric security operations center.
• This session will provide the student with an understanding of Security Onion as an open source network security monitoring (NSM) tool.

Maximum Internet Security: a Hackers Guide - Networking - Intrusion Detection

Maximum Internet Security: A Hackers Guide
Author: Publishing Sams
Publisher: Sams
ISBN: 1575212684
Publication Date: 6/25/97
Pages: 928
Web Price: $49.99 US

Now more than ever, it is imperative that users be able to protect their system from hackers trashing their Web sites or stealing information. Written by a reformed hacker, this comprehensive resource identifies security holes in common computer and network systems, allowing system administrators to discover faults inherent within their network and work toward a solution to those problems.

Table of Contents
I Setting the Stage
  1 Why Did I Write This Book?
  2 How This Book Will Help You
  3 Hackers and Crackers
  4 Just Who Can Be Hacked, Anyway?
II Understanding the Terrain
  5 Is Security a Futile Endeavor?
  6 A Brief Primer on TCP/IP
  7 Birth of a Network: The Internet
  8 Internet Warfare
III Tools
  9 Scanners
  10 Password Crackers
  11 Trojans
  12 Sniffers
  13 Techniques to Hide One's Identity
  14 Destructive Devices
IV Platforms

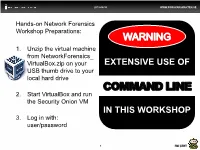

Hands-On Network Forensics, FIRST 2015

Hands-on Network Forensics
Erik Hjelmvik, Swedish Armed Forces CERT (FM CERT)
FIRST 2015, Berlin
2015-04-30, WWW.FORSVARSMAKTEN.SE

Workshop Preparations (extensive use of command line in this workshop):
1. Unzip the virtual machine from NetworkForensics_VirtualBox.zip on your USB thumb drive to your local hard drive
2. Start VirtualBox and run the Security Onion VM
3. Log in with: user/password

"Password" Ned
SysAdmin: Homer
PR/Marketing: Krusty the Clown
Password Ned AB = pwned.se

pwned.se Network
• [INTERNET], Default Gateway: 192.168.0.1
• PASSWORD-NED-XP: 192.168.0.53
• www.pwned.se: 192.168.0.2
• Homer-xubuntu: 192.168.0.51
• Krustys-PC: 192.168.0.54
• [TAP] ---> Security Onion

Security Onion
Paths (also on Cheat Sheet)
• PCAP files: /nsm/sensor_data/securityonion_eth1/dailylogs/
• Argus files:

CIT 485: Network Forensics

CIT 485/585 Network Forensics

The primary objective of this assignment is to learn a process for investigating security incidents and to give students practice analyzing such an incident using captured network data.

1 STUDENT LEARNING OUTCOMES
1. Describe digital evidence and how the type of legal dispute affects evidence used to resolve it.
2. Describe the steps of the OSCAR network forensics methodology.
3. Identify and decode protocols used on non-standard ports.
4. Investigate suspicious network data for malicious activity.

2 DIGITAL EVIDENCE
Digital evidence refers to any data collected in digital form from any computer, whether that computer is a desktop, mobile device, game console, printer, or IoT device. A primary goal of digital forensics is ensuring evidence integrity, the preservation of evidence in its original form. Evidence integrity is supported by a chain of custody, a set of documentation that describes the acquisition, copying, and analysis of digital evidence. As analysis of digital data often changes that data (reading a file will not modify the file itself but will change the last accessed time on the file), cryptographic checksums such as SHA-256 are often used to ensure that copies of digital evidence match the original evidence. Details of digital evidence handling are discussed in CIT 430: Computer Forensics.

Digital evidence in a criminal case is returned through an inventory of items taken through a search warrant. Any device that may contain an embedded computer can contain digital evidence. Defense attorneys can request an inventory of items and obtain forensic copies of the data from those devices.
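The checksum comparison described in this excerpt can be sketched in a few lines of Python. This is a minimal illustration that uses only the standard `hashlib` module; the function names `sha256_of_file` and `copy_matches_original` are hypothetical and do not come from the course material.

```python
import hashlib


def sha256_of_file(path, chunk_size=65536):
    """Return the hex SHA-256 digest of a file, read in fixed-size chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Chunked reads keep memory use flat for large images or captures.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_matches_original(original_path, copy_path):
    """True when both files produce the same SHA-256 digest."""
    # Hypothetical helper: compares a working copy against the original.
    return sha256_of_file(original_path) == sha256_of_file(copy_path)
```

In practice the digest of the original would typically be recorded once in the chain-of-custody documentation, and each working copy would be checked against that recorded value rather than against the original itself.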

To Provide Adequate Commercial Security

MINIMAL KEY LENGTHS FOR SYMMETRIC CIPHERS TO PROVIDE ADEQUATE COMMERCIAL SECURITY

A Report by an Ad Hoc Group of Cryptographers and Computer Scientists

Matt Blaze, Whitfield Diffie, Ronald L. Rivest, Bruce Schneier, Tsutomu Shimomura, Eric Thompson, Michael Wiener

JANUARY 1996

ABSTRACT

Encryption plays an essential role in protecting the privacy of electronic information against threats from a variety of potential attackers. In so doing, modern cryptography employs a combination of conventional or symmetric cryptographic systems for encrypting data and public key or asymmetric systems for managing the keys used by the symmetric systems. Assessing the strength required of the symmetric cryptographic systems is therefore an essential step in employing cryptography for computer and communication security.

Technology readily available today (late 1995) makes brute-force attacks against cryptographic systems considered adequate for the past several years both fast and cheap. General purpose computers can be used, but a much more efficient approach is to employ commercially available Field Programmable Gate Array (FPGA) technology. For attackers prepared to make a higher initial investment, custom-made, special-purpose chips make such calculations much faster and significantly lower the amortized cost per solution. As a result, cryptosystems with 40-bit keys offer virtually no protection at this point against brute-force attacks. Even the U.S. Data Encryption Standard with 56-bit keys is increasingly inadequate. As cryptosystems often succumb to "smarter" attacks than brute-force key search, it is also important to remember that the key lengths discussed here are the minimum needed for security against the computational threats considered. Fortunately, the cost of very strong encryption is not significantly greater than that of weak encryption.
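The arithmetic behind the abstract's comparison of 40-bit and 56-bit keys can be sketched as follows. This is an illustration only: the search rate `ASSUMED_KEYS_PER_SECOND` is a hypothetical placeholder rather than a figure from the report, and the function names are invented for this example.

```python
def keyspace_size(key_bits):
    """Number of distinct keys for a key of the given length in bits."""
    return 2 ** key_bits


def expected_search_seconds(key_bits, keys_per_second):
    """Average brute-force time: on average half the keyspace is searched."""
    return keyspace_size(key_bits) / 2 / keys_per_second


# Hypothetical rate, chosen only to make the comparison concrete.
ASSUMED_KEYS_PER_SECOND = 1_000_000

for bits in (40, 56):
    days = expected_search_seconds(bits, ASSUMED_KEYS_PER_SECOND) / 86400
    print(f"{bits}-bit key: {keyspace_size(bits):,} keys, "
          f"about {days:,.1f} days on average at the assumed rate")
```

Each additional key bit doubles the search space, so whatever rate an attacker achieves, a 56-bit key takes 2^16 = 65,536 times as long to search as a 40-bit key.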

Network Forensics

Network Forensics
Michael Sonntag, Institute of Networks and Security

What is it?
Evidence taken from the "network"
  In practice this means today the Internet (or LAN)
  In special cases: Telecommunication networks (as long as they are not yet changed to VoIP!)
Typically not available "after the fact"
  Requires suspicions and preparation in advance
Copying the communication content
  At the source (= within the suspect's computer): "Online search"
    This could also be a webserver, e.g. if it contains illegal content
    "Source" does NOT mean that this is the client/initiator of communication/…
  At the destination: See some part of the traffic
    Only if unavoidable or the only interesting part
  Somewhere on the way of the (all?) traffic: ISP, physically tapping the wires, home routers etc.

Problems of network forensics
"So you have copied some Internet traffic – but how is it linked to the suspect?"
  The IP addresses involved must be tied to individual persons
  This might be easy (location of copying) or very hard
"When did it take place?"
  Packet captures typically have only relative timestamps
  But there may be lots of timestamps in the actual traffic!
  As supporting evidence to some external documentation
"Is it unchanged?"
  These are merely packets; their content can be changed
  Although it is possible to check e.g. checksums, this is a lot of work and normally not done
  Treat as any other digital evidence
  Hash value + Chain of Custody; work on copies only

Scenario
Suspect: Mallory Malison; released

Guide to Computer Forensics and Investigations Fourth Edition

Guide to Computer Forensics and Investigations, Fourth Edition
Chapter 11: Virtual Machines, Network Forensics, and Live Acquisitions

Objectives
• Describe primary concerns in conducting forensic examinations of virtual machines
• Describe the importance of network forensics
• Explain standard procedures for performing a live acquisition
• Explain standard procedures for network forensics
• Describe the use of network tools

Virtual Machines Overview
• Virtual machines are important in today's networks.
• Investigators must know how to detect a virtual machine installed on a host, acquire an image of a virtual machine, and use virtual machines to examine malware.

Virtual Machines Overview (cont.)
• Check whether virtual machines are loaded on a host computer.
• Check Registry for clues that virtual machines have been installed or uninstalled.

Network Forensics Overview
• Network forensics
  – Systematic tracking of incoming and outgoing traffic
    • To ascertain how an attack was carried out or how an event occurred on a network
• Intruders leave trail behind
• Determine the cause of the abnormal traffic
  – Internal bug
  – Attackers

Securing a Network
• Layered network defense strategy
  – Sets up layers of protection to hide the most valuable data at the innermost part of the network
• Defense in depth (DiD)
  – Similar approach developed by the NSA
  – Modes of protection
    • People
    • Technology
    • Operations

Contents in Detail

CONTENTS IN DETAIL

ACKNOWLEDGMENTS

INTRODUCTION
  Why This Book?
  Concepts and Approach
  How to Use This Book
  About the Sample Capture Files
  The Rural Technology Fund
  Contacting Me

1 PACKET ANALYSIS AND NETWORK BASICS
  Packet Analysis and Packet Sniffers
    Evaluating a Packet Sniffer
    How Packet Sniffers Work
  How Computers Communicate
    Protocols
    The Seven-Layer OSI Model
    Data Encapsulation

Linux Networking Cookbook

Linux Networking Cookbook ™ Carla Schroder Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Linux Networking Cookbook™ by Carla Schroder Copyright © 2008 O’Reilly Media, Inc. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (safari.oreilly.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Mike Loukides Indexer: John Bickelhaupt Production Editor: Sumita Mukherji Cover Designer: Karen Montgomery Copyeditor: Derek Di Matteo Interior Designer: David Futato Proofreader: Sumita Mukherji Illustrator: Jessamyn Read Printing History: November 2007: First Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. The Cookbook series designations, Linux Networking Cookbook, the image of a female blacksmith, and related trade dress are trademarks of O’Reilly Media, Inc. Java™ is a trademark of Sun Microsystems, Inc. .NET is a registered trademark of Microsoft Corporation. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. -

Ten Strategies of a World-Class Cybersecurity Operations Center

Ten Strategies of a World-Class Cybersecurity Operations Center conveys MITRE's accumulated expertise on enterprise-grade computer network defense. It covers ten key qualities of leading Cybersecurity Operations Centers (CSOCs), ranging from their structure and organization, to processes that best enable effective and efficient operations, to approaches that extract maximum value from CSOC technology investments. This book offers perspective and context for key decision points in structuring a CSOC and shows how to:
• Find the right size and structure for the CSOC team
• Achieve effective placement within a larger organization that enables CSOC operations
• Attract, retain, and grow the right staff and skills
• Prepare the CSOC team, technologies, and processes for agile, threat-based response
• Architect for large-scale data collection and analysis with a limited budget
• Prioritize sensor placement and data feed choices across enterprise systems, enclaves, networks, and perimeters

If you manage, work in, or are standing up a CSOC, this book is for you. It is also available on MITRE's website, www.mitre.org.

The MITRE Corporation is a not-for-profit organization that operates federally funded research and development centers (FFRDCs). FFRDCs are unique organizations that assist the U.S. government with scientific research and analysis, development and acquisition, and systems engineering and integration. We're proud to have served the public interest for more than 50 years.

Teaching Adversarial Thinking for Cybersecurity

Journal of The Colloquium for Information System Security Education (CISSE), September 2016

Teaching Adversarial Thinking for Cybersecurity

Seth T. Hamman †, ‡
Kenneth M. Hopkinson †
† Air Force Institute of Technology, Wright-Patterson AFB, OH 45433
‡ Cedarville University, Cedarville, OH 45314

Abstract - The academic discipline of cybersecurity is still in its formative years. One area in need of improvement is teaching cybersecurity students adversarial thinking, an important academic objective that is typically defined as "the ability to think like a hacker." Working from this simplistic definition makes framing student learning outcomes difficult, and without proper learning outcomes, it is not possible to create appropriate instructional materials. A better understanding of the concept of adversarial thinking is needed in order to improve this aspect of cybersecurity education. This paper sheds new light on adversarial thinking by exploring it through the lens of Sternberg's triarchic theory of intelligence. The triarchic theory's division of the intellect into the analytical, creative, and practical components provides a comprehensive framework for examining the characteristic thought processes of hackers. This exploration produces a novel, multidimensional definition of adversarial thinking that leads immediately to three clearly defined learning outcomes and to some new ideas for teaching adversarial thinking to cybersecurity students.

Categories and Subject Descriptors
K.3.2 [Computers and Education]: Computer and Information Science Education

General Terms
Computer science education, Curriculum

Keywords
Adversarial Thinking Definition, Cybersecurity Education, Triarchic Theory of Intelligence

1. INTRODUCTION
It is widely acknowledged that teaching adversarial thinking to cybersecurity students is important.

CIT 485: Exploit Kits

CIT 485/585 Exploit Kits

The primary objective of this assignment is to learn how exploit kits act to compromise victims and how such malware attempts to hide itself. This lesson comes with a set of files containing captured packets in either the PCAP or PCAPNG formats. Sections of the lesson will refer to the appropriate packet capture files to use by name. Use the references in the References section at the bottom of the lesson when needed to answer questions about the protocols in the lesson.

STUDENT LEARNING OUTCOMES
1. Identify malware activity in captured network traffic.
2. Extract files transferred over HTTP.
3. Determine if files are malicious using local and cloud anti-malware tools.

INTRODUCTION
The objective of this assignment is to learn how to use network data to detect and respond to malware attacks. We will use PCAP tools like Wireshark, the Snort IDS, and two new tools: ClamAV and justniffer. To install ClamAV, run the following commands on your Kali VM.

    # apt-get update
    # apt-get install clamav
    # freshclam

ClamAV is a free anti-virus program, which we can use to determine whether executables found in network traffic are malware or not. In addition to ClamAV, we can upload extracted files (or entire PCAP files) to VirusTotal to evaluate files using dozens of anti-virus programs. Note that VirusTotal archives uploaded files, so the site should only be used with files that do not contain confidential or sensitive information. Uploading malware executables or example PCAPs from this assignment is fine. Packet captures from corporate networks typically need to have data removed or altered to avoid giving away confidential data and non-confidential but still sensitive data like IP addresses.
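Once ClamAV is installed, checking a file extracted from a capture could be scripted along these lines. This is a rough sketch rather than part of the assignment: it assumes the `clamscan` command-line scanner is on the PATH, relies on its documented exit codes (0 for no virus found, 1 for virus found, 2 for an error), and uses hypothetical helper names `interpret_clamscan_exit` and `scan_file`.

```python
import subprocess

# Exit codes as documented in the clamscan manual page.
CLAMSCAN_MEANINGS = {0: "clean", 1: "infected", 2: "error"}


def interpret_clamscan_exit(code):
    """Map a clamscan exit code to a short verdict string."""
    return CLAMSCAN_MEANINGS.get(code, "unknown")


def scan_file(path):
    """Run clamscan on one file and return (verdict, scanner output).

    Assumes clamscan is installed; raises FileNotFoundError otherwise.
    """
    result = subprocess.run(
        ["clamscan", "--no-summary", path],
        capture_output=True,
        text=True,
        check=False,  # a non-zero exit is a verdict here, not a failure
    )
    return interpret_clamscan_exit(result.returncode), result.stdout.strip()
```

A call such as `scan_file("extracted.exe")` (a hypothetical file name) would return the verdict together with the scanner's per-file output line. Unlike a VirusTotal upload, a local scan does not send the file to a third party.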