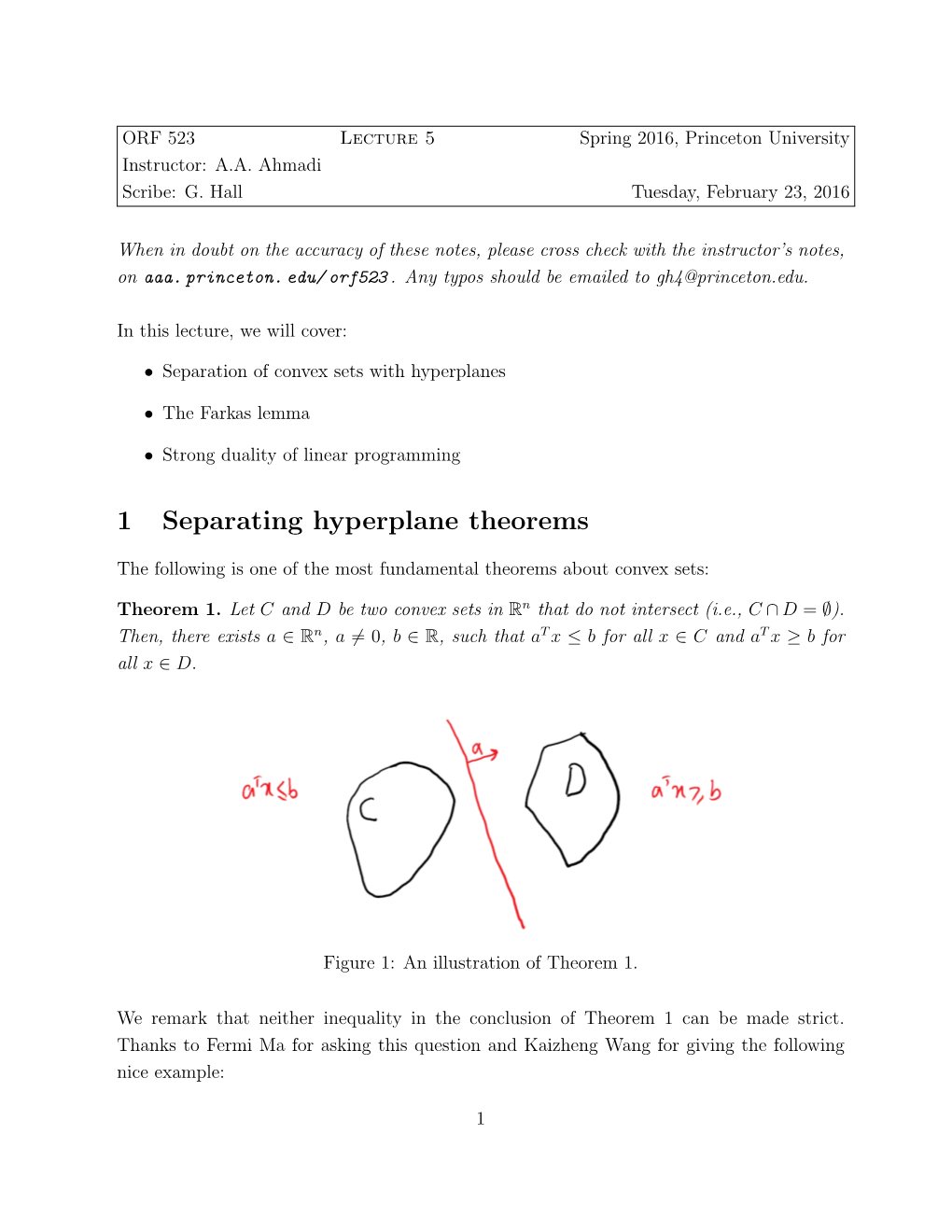

1 Separating Hyperplane Theorems

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

On Quasi Norm Attaining Operators Between Banach Spaces

ON QUASI NORM ATTAINING OPERATORS BETWEEN BANACH SPACES GEUNSU CHOI, YUN SUNG CHOI, MINGU JUNG, AND MIGUEL MARTÍN Abstract. We provide a characterization of the Radon-Nikodým property in terms of the denseness of bounded linear operators which attain their norm in a weak sense, which complements the one given by Bourgain and Huff in the 1970's. To this end, we introduce the following notion: an operator $T : X \to Y$ between the Banach spaces $X$ and $Y$ is quasi norm attaining if there is a sequence $(x_n)$ of norm one elements in $X$ such that $(T x_n)$ converges to some $u \in Y$ with $\|u\| = \|T\|$. Norm attaining operators in the usual (or strong) sense (i.e. operators for which there is a point in the unit ball where the norm of its image equals the norm of the operator) and also compact operators satisfy this definition. We prove that strong Radon-Nikodým operators can be approximated by quasi norm attaining operators, a result which does not hold for norm attaining operators in the strong sense. This shows that this new notion of quasi norm attainment allows one to characterize the Radon-Nikodým property in terms of denseness of quasi norm attaining operators for both domain and range spaces, thus completing a characterization by Bourgain and Huff in terms of norm attaining operators which is only valid for domain spaces and is actually false for range spaces (due to a celebrated example by Gowers of 1990). A number of other related results are also included in the paper: we give some positive results on the denseness of norm attaining Lipschitz maps, norm attaining multilinear maps and norm attaining polynomials, characterize both finite dimensionality and reflexivity in terms of quasi norm attaining operators, discuss conditions to obtain that quasi norm attaining operators are actually norm attaining, study the relationship with the norm attainment of the adjoint operator and, finally, present some stability results.

POLARS and DUAL CONES 1. Convex Sets

POLARS AND DUAL CONES VERA ROSHCHINA Abstract. The goal of this note is to recall the basic definitions of convex sets and their polars. For more details see the classic references [1, 2] and [3] for polytopes. 1. Convex sets and convex hulls A convex set has no indentations, i.e. it contains all line segments connecting points of this set. Definition 1 (Convex set). A set $C \subseteq \mathbb{R}^n$ is called convex if for any $x, y \in C$ the point $\lambda x + (1-\lambda) y$ also belongs to $C$ for any $\lambda \in [0, 1]$. The sets shown in Fig. 1 are convex: the first set has a smooth boundary, the second set is a convex polygon. [Figure 1. Convex sets] Singletons and lines are also convex sets, and linear subspaces are convex. Some nonconvex sets are shown in Fig. 2. [Figure 2. Nonconvex sets] A line segment connecting two points $x, y \in \mathbb{R}^n$ is denoted by $[x, y]$, so we have $[x, y] = \{(1-\alpha)x + \alpha y,\ \alpha \in [0, 1]\}$. Likewise, we can consider open line segments $(x, y) = \{(1-\alpha)x + \alpha y,\ \alpha \in (0, 1)\}$ and half open segments such as $[x, y)$ and $(x, y]$. Clearly any line segment is a convex set. The notion of a line segment connecting two points can be generalised to an arbitrary finite set by means of a convex combination. Given a finite set $\{x_1, x_2, \dots, x_p\} \subset \mathbb{R}^n$, a point $x \in \mathbb{R}^n$ is a convex combination of $x_1, x_2, \dots, x_p$ if it can be represented as $x = \sum_{i=1}^{p} \alpha_i x_i$, with $\alpha_i \ge 0$ for all $i = 1, \dots, p$ and $\sum_{i=1}^{p} \alpha_i = 1$. Given an arbitrary set $S \subseteq \mathbb{R}^n$, we can consider all possible finite convex combinations of points from this set.
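The definition of a convex combination quoted above can be checked numerically. The following is a minimal sketch, not part of the cited note: the function name `convex_combination` and the triangle example are illustrative assumptions, and exact rational arithmetic is used so that the condition "weights sum to 1" can be tested without rounding error.

```python
from fractions import Fraction


def convex_combination(points, weights):
    """Return sum_i weights[i] * points[i] after validating the weights.

    The weights must be nonnegative and sum to exactly 1, as in the
    definition of a convex combination. (Hypothetical helper.)
    """
    if not points or len(points) != len(weights):
        raise ValueError("need one weight per point and at least one point")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be nonnegative")
    if sum(weights) != 1:
        raise ValueError("weights must sum to 1")
    dim = len(points[0])
    return tuple(
        sum(w * p[k] for w, p in zip(weights, points)) for k in range(dim)
    )


# Three vertices of a triangle in R^2, combined with equal weights 1/3.
triangle = [
    (Fraction(0), Fraction(0)),
    (Fraction(3), Fraction(0)),
    (Fraction(0), Fraction(3)),
]
third = Fraction(1, 3)
centroid = convex_combination(triangle, [third, third, third])
```

With two points and weights $(1-\alpha, \alpha)$ the same function returns a point of the segment $[x, y]$, which is the special case the passage starts from.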

Slices, Slabs, and Sections of the Unit Hypercube

Slices, Slabs, and Sections of the Unit Hypercube Jean-Luc Marichal (Institute of Mathematics, University of Luxembourg, 162A, avenue de la Faïencerie, L-1511 Luxembourg, Luxembourg) and Michael J. Mossinghoff (Department of Mathematics, Davidson College, Davidson, NC 28035-6996, USA) Submitted: July 27, 2006; Revised: August 6, 2007; Accepted: January 21, 2008; Published: January 29, 2008 Abstract Using combinatorial methods, we derive several formulas for the volume of convex bodies obtained by intersecting a unit hypercube with a halfspace, or with a hyperplane of codimension 1, or with a flat defined by two parallel hyperplanes. We also describe some of the history of these problems, dating to Pólya's Ph.D. thesis, and we discuss several applications of these formulas. Subject Class: Primary: 52A38, 52B11; Secondary: 05A19, 60D05. Keywords: Cube slicing, hyperplane section, signed decomposition, volume, Eulerian numbers. 1 Introduction In this note we study the volumes of portions of n-dimensional cubes determined by hyperplanes. More precisely, we study slices created by intersecting a hypercube with a halfspace, slabs formed as the portion of a hypercube lying between two parallel hyperplanes, and sections obtained by intersecting a hypercube with a hyperplane. These objects occur naturally in several fields, including probability, number theory, geometry, physics, and analysis. In this paper we describe an elementary combinatorial method for calculating volumes of arbitrary slices, slabs, and sections of a unit cube. We also describe some applications that tie these geometric results to problems in analysis and combinatorics. Some of the results we obtain here have in fact appeared earlier in other contexts.

On the Ekeland Variational Principle with Applications and Detours

Lectures on The Ekeland Variational Principle with Applications and Detours By D. G. De Figueiredo Tata Institute of Fundamental Research, Bombay 1989 Author D. G. De Figueiredo Departmento de Mathematica Universidade de Brasilia 70.910 – Brasilia-DF BRAZIL © Tata Institute of Fundamental Research, 1989 ISBN 3-540-51179-2, Springer-Verlag, Berlin, Heidelberg, New York, Tokyo ISBN 0-387-51179-2, Springer-Verlag, New York, Heidelberg, Berlin, Tokyo No part of this book may be reproduced in any form by print, microfilm or any other means without written permission from the Tata Institute of Fundamental Research, Colaba, Bombay 400 005 Printed by INSDOC Regional Centre, Indian Institute of Science Campus, Bangalore 560012 and published by H. Goetze, Springer-Verlag, Heidelberg, West Germany PRINTED IN INDIA Preface Since its appearance in 1972 the variational principle of Ekeland has found many applications in different fields in Analysis. The best references for those are by Ekeland himself: his survey article [23] and his book with J.-P. Aubin [2]. Not all material presented here appears in those places. Some are scattered around and there lies my motivation in writing these notes. Since they are intended for students I included a lot of related material. Those are the detours. A chapter on Nemytskii mappings may sound strange. However I believe it is useful, since their properties so often used are seldom proved. We always say to the students: go and look in Krasnoselskii or Vainberg! I think some of the proofs presented here are more straightforward. There are two chapters on applications to PDE.

Linear Algebra Handout

Artificial Intelligence: 6.034 Massachusetts Institute of Technology April 20, 2012 Spring 2012 Recitation 10 Linear Algebra Review • A vector is an ordered list of values. It is often denoted using angle brackets: $\langle a, b \rangle$, and its variable name is often written in bold ($\mathbf{z}$) or with an arrow ($\vec{z}$). We can refer to an individual element of a vector using its index: for example, the first element of $\mathbf{z}$ would be $z_1$ (or $z_0$, depending on how we're indexing). Each element of a vector generally corresponds to a particular dimension or feature, which could be discrete or continuous; often you can think of a vector as a point in Euclidean space. • The magnitude (also called norm) of a vector $x = \langle x_1, x_2, \dots, x_n \rangle$ is $\sqrt{x_1^2 + x_2^2 + \dots + x_n^2}$, and is denoted $|x|$ or $\|x\|$. • The sum of a set of vectors is their elementwise sum: for example, $\langle a, b \rangle + \langle c, d \rangle = \langle a + c, b + d \rangle$ (so vectors can only be added if they are the same length). The dot product (also called scalar product) of two vectors is the sum of their elementwise products: for example, $\langle a, b \rangle \cdot \langle c, d \rangle = ac + bd$. The dot product $x \cdot y$ is also equal to $\|x\| \|y\| \cos\theta$, where $\theta$ is the angle between $x$ and $y$. • A matrix is a generalization of a vector: instead of having just one row or one column, it can have m rows and n columns. A square matrix is one that has the same number of rows as columns. A matrix's variable name is generally a capital letter, often written in bold.
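The norm, dot product, and angle formulas in the handout extract above translate directly into a few lines of code. This is a small sketch using only the standard library; the helper names `dot`, `norm`, and `angle` and the sample vectors are illustrative assumptions, not taken from the handout.

```python
import math


def dot(x, y):
    """Dot product: the sum of elementwise products of two equal-length vectors."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    """Euclidean magnitude: the square root of the sum of squared entries."""
    return math.sqrt(dot(x, x))


def angle(x, y):
    """Angle theta between x and y, from x . y = |x| |y| cos(theta)."""
    cos_theta = dot(x, y) / (norm(x) * norm(y))
    # Clamp to [-1, 1] to guard against floating-point overshoot.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


x = (3.0, 4.0)
y = (4.0, -3.0)
length_x = norm(x)       # magnitude of <3, 4>
x_dot_y = dot(x, y)      # 3*4 + 4*(-3)
theta = angle(x, y)      # the two vectors are perpendicular
```

A zero dot product between nonzero vectors corresponds to $\cos\theta = 0$, which is why the angle here comes out as a right angle.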

Cutting Hyperplane Arrangements*

Discrete Comput Geom 6:385-406 (1991) Discrete & Computational Geometry © 1991 Springer-Verlag New York Inc. Cutting Hyperplane Arrangements* Jiří Matoušek Department of Applied Mathematics, Charles University, Malostranské nám. 25, 118 00 Praha 1, Czechoslovakia Abstract. We consider a collection $H$ of $n$ hyperplanes in $E^d$ (where the dimension $d$ is fixed). An $\varepsilon$-cutting for $H$ is a collection of (possibly unbounded) $d$-dimensional simplices with disjoint interiors, which cover all $E^d$ and such that the interior of any simplex is intersected by at most $\varepsilon n$ hyperplanes of $H$. We give a deterministic algorithm for finding a $(1/r)$-cutting with $O(r^d)$ simplices (which is asymptotically optimal). For $r \le n^{1-\delta}$, where $\delta > 0$ is arbitrary but fixed, the running time of this algorithm is $O(n (\log n)^{O(1)} r^{d-1})$. In the plane we achieve a time bound $O(nr)$ for $r \le n^{1-\delta}$, which is optimal if we also want to compute the collection of lines intersecting each simplex of the cutting. This improves a result of Agarwal, and gives a conceptually simpler algorithm. For an $n$ point set $X \subseteq E^d$ and a parameter $r$, we can deterministically compute a $(1/r)$-net of size $O(r \log r)$ for the range space $(X, \{X \cap R;\ R \text{ is a simplex}\})$, in time $O(n (\log n)^{O(1)} r^{d-1} + r^{O(1)})$. The size of the $(1/r)$-net matches the best known existence result. By a simple transformation, this allows us to find $\varepsilon$-nets for other range spaces usually encountered in computational geometry.

The Ekeland Variational Principle, the Bishop-Phelps Theorem, and The

The Ekeland Variational Principle, the Bishop-Phelps Theorem, and the Brøndsted-Rockafellar Theorem Our aim is to prove the Ekeland Variational Principle which is an abstract result that found numerous applications in various fields of Mathematics. As its application to Convex Analysis, we provide a proof of the famous Bishop-Phelps Theorem and some related results. Let us recall that the epigraph and the hypograph of a function $f : X \to [-\infty, +\infty]$ (where $X$ is a set) are the following subsets of $X \times \mathbb{R}$: $\operatorname{epi} f = \{(x, t) \in X \times \mathbb{R} : t \ge f(x)\}$, $\operatorname{hyp} f = \{(x, t) \in X \times \mathbb{R} : t \le f(x)\}$. Notice that, if $f > -\infty$ on $X$ then $\operatorname{epi} f \ne \emptyset$ if and only if $f$ is proper, that is, $f$ is finite at at least one point. 0.1. The Ekeland Variational Principle. In what follows, $(X, d)$ is a metric space. Given $\lambda > 0$ and $(x, t) \in X \times \mathbb{R}$, we define the set $K_\lambda(x, t) = \{(y, s) : s \le t - \lambda d(x, y)\} = \{(y, s) : \lambda d(x, y) \le t - s\} \subset X \times \mathbb{R}$. Notice that $K_\lambda(x, t) = \operatorname{hyp}[t - \lambda d(x, \cdot)]$. Let us state some useful properties of these sets. Lemma 0.1. (a) $K_\lambda(x, t)$ is closed and contains $(x, t)$. (b) If $(\bar{x}, \bar{t}) \in K_\lambda(x, t)$ then $K_\lambda(\bar{x}, \bar{t}) \subset K_\lambda(x, t)$. (c) If $(x_n, t_n) \to (x, t)$ and $(x_{n+1}, t_{n+1}) \in K_\lambda(x_n, t_n)$ ($n \in \mathbb{N}$), then $K_\lambda(x, t) = \bigcap_{n \in \mathbb{N}} K_\lambda(x_n, t_n)$. Proof. (a) is quite easy.

15 BASIC PROPERTIES of CONVEX POLYTOPES Martin Henk, Jürgen Richter-Gebert, and Günter M.

15 BASIC PROPERTIES OF CONVEX POLYTOPES Martin Henk, Jürgen Richter-Gebert, and Günter M. Ziegler INTRODUCTION Convex polytopes are fundamental geometric objects that have been investigated since antiquity. The beauty of their theory is nowadays complemented by their importance for many other mathematical subjects, ranging from integration theory, algebraic topology, and algebraic geometry to linear and combinatorial optimization. In this chapter we try to give a short introduction, provide a sketch of "what polytopes look like" and "how they behave," with many explicit examples, and briefly state some main results (where further details are given in subsequent chapters of this Handbook). We concentrate on two main topics: • Combinatorial properties: faces (vertices, edges, ..., facets) of polytopes and their relations, with special treatments of the classes of low-dimensional polytopes and of polytopes "with few vertices;" • Geometric properties: volume and surface area, mixed volumes, and quermassintegrals, including explicit formulas for the cases of the regular simplices, cubes, and cross-polytopes. We refer to Grünbaum [Grü67] for a comprehensive view of polytope theory, and to Ziegler [Zie95] respectively to Gruber [Gru07] and Schneider [Sch14] for detailed treatments of the combinatorial and of the convex geometric aspects of polytope theory. 15.1 COMBINATORIAL STRUCTURE GLOSSARY V-polytope: The convex hull of a finite set $X = \{x^1, \dots, x^n\}$ of points in $\mathbb{R}^d$, $P = \operatorname{conv}(X) := \left\{ \sum_{i=1}^{n} \lambda_i x^i \;\middle|\; \lambda_1, \dots, \lambda_n \ge 0,\ \sum_{i=1}^{n} \lambda_i = 1 \right\}$. H-polytope: The solution set of a finite system of linear inequalities, $P = P(A, b) := \{ x \in \mathbb{R}^d \mid a_i^T x \le b_i \text{ for } 1 \le i \le m \}$, with the extra condition that the set of solutions is bounded, that is, such that there is a constant $N$ such that $\|x\| \le N$ holds for all $x \in P$.
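The two glossary entries above describe the same kind of object in two ways: as a convex hull of points (V-polytope) and as a bounded solution set of inequalities (H-polytope). As a rough illustration, the sketch below tests membership in an H-polytope and confirms it on the unit square, whose vertex list and inequality system are written out by hand. The function name `in_h_polytope`, the tolerance parameter, and the example data are assumptions made for this sketch; it does not convert between the two descriptions.

```python
def in_h_polytope(A, b, x, tol=1e-12):
    """Return True if a_i^T x <= b_i holds for every row a_i of A.

    A is a list of rows, b the list of right-hand sides, x the point to test.
    A small tolerance absorbs floating-point error. (Hypothetical helper.)
    """
    return all(
        sum(a_k * x_k for a_k, x_k in zip(row, x)) <= b_i + tol
        for row, b_i in zip(A, b)
    )


# Unit square [0, 1]^2 as an H-polytope: x1 <= 1, -x1 <= 0, x2 <= 1, -x2 <= 0.
A = [(1, 0), (-1, 0), (0, 1), (0, -1)]
b = [1, 0, 1, 0]

# The same square as a V-polytope: the convex hull of its four vertices.
vertices = [(0, 0), (1, 0), (0, 1), (1, 1)]

vertices_inside = all(in_h_polytope(A, b, v) for v in vertices)
centre_inside = in_h_polytope(A, b, (0.5, 0.5))
outside_point_inside = in_h_polytope(A, b, (1.5, 0.5))
```

Because the inequality system is convex, every convex combination of the four vertices also satisfies it, which is one direction of the equivalence between the two descriptions for this example.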

Exact Hyperplane Covers for Subsets of the Hypercube 1

EXACT HYPERPLANE COVERS FOR SUBSETS OF THE HYPERCUBE JAMES AARONSON, CARLA GROENLAND, ANDRZEJ GRZESIK, TOM JOHNSTON, AND BARTŁOMIEJ KIELAK Abstract. Alon and Füredi (1993) showed that the number of hyperplanes required to cover $\{0,1\}^n \setminus \{0\}$ without covering $0$ is $n$. We initiate the study of such exact hyperplane covers of the hypercube for other subsets of the hypercube. In particular, we provide exact solutions for covering $\{0,1\}^n$ while missing up to four points and give asymptotic bounds in the general case. Several interesting questions are left open. 1. Introduction A vector $v \in \mathbb{R}^n$ and a scalar $\alpha \in \mathbb{R}$ determine the hyperplane $\{x \in \mathbb{R}^n : \langle v, x \rangle := v_1 x_1 + \dots + v_n x_n = \alpha\}$ in $\mathbb{R}^n$. How many hyperplanes are needed to cover $\{0,1\}^n$? Only two are required; for instance, $\{x : x_1 = 0\}$ and $\{x : x_1 = 1\}$ will do. What happens however if $0 \in \mathbb{R}^n$ is not allowed on any of the hyperplanes? We can 'exactly' cover $\{0,1\}^n \setminus \{0\}$ with $n$ hyperplanes: for example, the collections $\{\{x : x_i = 1\} : i \in [n]\}$ or $\{\{x : \sum_{i=1}^{n} x_i = j\} : j \in [n]\}$ can be used, where $[n] := \{1, 2, \dots, n\}$. Alon and Füredi [2] showed that in fact $n$ hyperplanes are always necessary. Recently, a variation was studied by Clifton and Huang [5], in which they require that each point from $\{0,1\}^n \setminus \{0\}$ is covered at least $k$ times for some $k \in \mathbb{N}$ (while $0$ is never covered). Another natural generalisation is to put more than just $0$ to the set of points we wish to avoid in the cover.
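The two example covers mentioned in the extract above are easy to verify by brute force for small $n$. The sketch below enumerates $\{0,1\}^n$ and checks that every nonzero point lies on some hyperplane while the origin lies on none. The names `covers_exactly`, `coordinate_cover`, and `sum_cover` are hypothetical, and a hyperplane is represented as a pair `(v, alpha)` meaning $\langle v, x \rangle = \alpha$.

```python
from itertools import product


def covers_exactly(hyperplanes, n):
    """Check that the hyperplanes cover {0,1}^n minus the origin, and miss the origin.

    Each hyperplane is a pair (v, alpha) representing <v, x> = alpha.
    """
    def on_plane(x, v, alpha):
        return sum(v_i * x_i for v_i, x_i in zip(v, x)) == alpha

    origin = (0,) * n
    if any(on_plane(origin, v, alpha) for v, alpha in hyperplanes):
        return False
    return all(
        any(on_plane(x, v, alpha) for v, alpha in hyperplanes)
        for x in product((0, 1), repeat=n)
        if x != origin
    )


def coordinate_cover(n):
    """The collection {x : x_i = 1} for i in [n]."""
    return [(tuple(1 if k == i else 0 for k in range(n)), 1) for i in range(n)]


def sum_cover(n):
    """The collection {x : x_1 + ... + x_n = j} for j in [n]."""
    return [((1,) * n, j) for j in range(1, n + 1)]


n = 4
coordinate_ok = covers_exactly(coordinate_cover(n), n)
sum_ok = covers_exactly(sum_cover(n), n)
# Dropping one hyperplane from either collection leaves some point uncovered.
truncated_ok = covers_exactly(sum_cover(n)[:-1], n)
```

This only confirms that these particular collections work and that removing a member breaks them; it says nothing about the lower bound of $n$ hyperplanes, which is the Alon and Füredi result cited in the extract.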

Chapter 5 Convex Optimization in Function Space 5.1 Foundations of Convex Analysis

Chapter 5 Convex Optimization in Function Space 5.1 Foundations of Convex Analysis Let $V$ be a vector space over $\mathbb{R}$ and $\|\cdot\| : V \to \mathbb{R}$ be a norm on $V$. We recall that $(V, \|\cdot\|)$ is called a Banach space, if it is complete, i.e., if any Cauchy sequence $\{v_k\}_{\mathbb{N}}$ of elements $v_k \in V$, $k \in \mathbb{N}$, converges to an element $v \in V$ ($\|v_k - v\| \to 0$ as $k \to \infty$). Examples: Let $\Omega$ be a domain in $\mathbb{R}^d$, $d \in \mathbb{N}$. Then, the space $C(\Omega)$ of continuous functions on $\Omega$ is a Banach space with the norm $\|u\|_{C(\Omega)} := \sup_{x \in \Omega} |u(x)|$. The spaces $L^p(\Omega)$, $1 \le p < \infty$, of (in the Lebesgue sense) $p$-integrable functions are Banach spaces with the norms $\|u\|_{L^p(\Omega)} := \left( \int_\Omega |u(x)|^p \, dx \right)^{1/p}$. The space $L^\infty(\Omega)$ of essentially bounded functions on $\Omega$ is a Banach space with the norm $\|u\|_{L^\infty(\Omega)} := \operatorname{ess\,sup}_{x \in \Omega} |u(x)|$. The (topologically and algebraically) dual space $V^*$ is the space of all bounded linear functionals $\mu : V \to \mathbb{R}$. Given $\mu \in V^*$, for $\mu(v)$ we often write $\langle \mu, v \rangle$ with $\langle \cdot, \cdot \rangle$ denoting the dual product between $V^*$ and $V$. We note that $V^*$ is a Banach space equipped with the norm $\|\mu\| := \sup_{v \in V \setminus \{0\}} \frac{|\langle \mu, v \rangle|}{\|v\|}$. Examples: The dual of $C(\Omega)$ is the space $M(\Omega)$ of Radon measures $\mu$ with $\langle \mu, v \rangle := \int_\Omega v \, d\mu$, $v \in C(\Omega)$. The dual of $L^1(\Omega)$ is the space $L^\infty(\Omega)$. The dual of $L^p(\Omega)$, $1 < p < \infty$, is the space $L^q(\Omega)$ with $q$ being conjugate to $p$, i.e., $1/p + 1/q = 1$.

Non-Linear Inner Structure of Topological Vector Spaces

mathematics Article Non-Linear Inner Structure of Topological Vector Spaces Francisco Javier García-Pacheco 1,*,†, Soledad Moreno-Pulido 1,†, Enrique Naranjo-Guerra 1,† and Alberto Sánchez-Alzola 2,† 1 Department of Mathematics, College of Engineering, University of Cadiz, 11519 Puerto Real, CA, Spain 2 Department of Statistics and Operation Research, College of Engineering, University of Cadiz, 11519 Puerto Real (CA), Spain † These authors contributed equally to this work. Abstract: Inner structure appeared in the literature of topological vector spaces as a tool to characterize the extremal structure of convex sets. For instance, in recent years, inner structure has been used to provide a solution to The Faceless Problem and to characterize the finest locally convex vector topology on a real vector space. This manuscript goes one step further by settling the bases for studying the inner structure of non-convex sets. In first place, we observe that the well behaviour of the extremal structure of convex sets with respect to the inner structure does not transport to non-convex sets in the following sense: it has been already proved that if a face of a convex set intersects the inner points, then the face is the whole convex set; however, in the non-convex setting, we find an example of a non-convex set with a proper extremal subset that intersects the inner points. On the opposite, we prove that if a extremal subset of a non-necessarily convex set intersects the affine internal points, then the extremal subset coincides with the whole set.

Convex Sets and Convex Functions 1 Convex Sets

Convex Sets and Convex Functions 1 Convex Sets In this section, we introduce one of the most important ideas in economic modelling, in the theory of optimization and, indeed, in much of modern analysis and computational mathematics: that of a convex set. Almost every situation we will meet will depend on this geometric idea. As an independent idea, the notion of convexity appeared at the end of the 19th century, particularly in the works of Minkowski who is supposed to have said: "Everything that is convex interests me." We discuss other ideas which stem from the basic definition, and in particular, the notion of convex and concave functions which are so prevalent in economic models. The geometric definition, as we will see, makes sense in any vector space. Since, for most of our work, we deal only with $\mathbb{R}^n$, the definitions will be stated in that context. The interested student may, however, reformulate the definitions either in an abstract setting or in some concrete vector space as, for example, $C([0, 1], \mathbb{R})$. Intuitively if we think of $\mathbb{R}^2$ or $\mathbb{R}^3$, a convex set of vectors is a set that contains all the points of any line segment joining two points of the set (see the next figure). Here is the definition. Definition 1.1 Let $u, v \in V$. Then the set of all convex combinations of $u$ and $v$ is the set of points $\{w_\lambda \in V : w_\lambda = (1 - \lambda)u + \lambda v,\ 0 \le \lambda \le 1\}$. (1.1) In, say, $\mathbb{R}^2$ or $\mathbb{R}^3$, this set is exactly the line segment joining the two points $u$ and $v$.