Relation Extraction Using TBL with Distant Supervision

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Christopher Plummer

Christopher Plummer "An actor should be a mystery," Christopher Plummer. Introduction; Biography; Christopher Plummer and Elaine Taylor; Christopher Plummer quotes; Filmography; Theatre; Christopher Plummer playing Shakespeare; Awards and Honors. Christopher Plummer Introduction Christopher Plummer, CC (born December 13, 1929) is a Canadian theatre, film and television actor and the author of the memoir "In Spite of Myself" (2008). In a career that spans over five decades and includes substantial roles in film, television, and theatre, Plummer is perhaps best known for the role of Captain Georg von Trapp in The Sound of Music. His most recent film roles include the Disney–Pixar 2009 film Up as Charles Muntz, -

BCPE Press Kit Aug.Text

Sales Contact (US & Canada) Sales Contact (International) Submarine Entertainment Films Transit Josh Braun Diana Holtzberg Dan Braun [email protected] Amada Lebow 917-757-1444 [email protected] Press Contact [email protected] [email protected] Other Noises [email protected] 212-501-9507 www.centralparkbirdfilm.com LOG LINE A diverse group of full-of-attitude New Yorkers reveals how a hidden world of beautiful wild birds in the middle of Manhattan has upended and magically transformed their lives. SYNOPSIS The Central Park Effect reveals the extraordinary array of wild birds who grace Manhattan’s celebrated patch of green and the equally colorful, full-of-attitude New Yorkers who schedule their lives around the rhythms of migration. The film focuses on seven main characters who regularly visit the Park and have found a profound connection with this hidden natural world. Movie-star handsome Chris Cooper dodges the morning rush hour traffic on bustling Central Park West, his binoculars knocking against his leather bomber jacket as he ducks into the Park. “My friends mock me for what I do in the spring because – they know from experience. From April 15 until Memorial Day, they won’t see me. Because I’m birding!” Anya Auerbach, a radiant fashion-averse teenager, admits that “I take my binoculars pretty much everywhere – except school.” Acclaimed author Jonathan Franzen recalls that he walked through Central Park almost daily for seven years, noticing only the pigeons and sparrows. Then one day, friends invited him to come along with them on a tour of the Park’s famous Ramble section and handed him a pair of binoculars. -

Milloi' KCTONYC

MU ALUMNI SHOW TALENT AND GUTS AS THEY MAKE IT IN SHOW BIZ. Chris Cooper was no stranger to the stage when he made his first live performance in a Kansas City community theater production of A Streetcar Named Desire. He was, however, a stranger to acting. Cooper, then a set builder, stepped into the spotlight only after Tom Berenger forgot about a matinee. "By curtain time, it was clear that Tom probably wasn't going to show up," says Cooper, BGS '76, "so I quickly memorized his lines and stood in for him. It went off without a hitch." Well, more or […] the actresses turned her back to the audience to feed him his lines. Cooper, 48, has come a long way since delivering the 11 lines of the paperboy in Streetcar. Best known for his portrayals of broody lawmen -- Sheriff July Johnson in the TV miniseries Lonesome Dove and Sheriff Sam Deeds in Lone Star -- the man whom director Joel Schumacher hails "a consummate artist" received the "Show Me" Award last October at the fifth annual FilmFest in Kansas City. He joins the likes of Berenger, AD '71, Kate […] Journ '86, in MU's constellation of movie stars. KC TO NYC Cooper grew up in the Kansas City suburbs, but the self-described introvert felt more at home on the family's 1,280-acre Kansas cattle ranch, where he spent his summers. As he grew older, he and his buddies ventured on the wrong side of the law -- let's just say petty theft, he says -- but Cooper didn't like keeping time with trouble. -

MELISSA GIROTTI Cell: 416-268-0425 E-Mail: [email protected]

MELISSA GIROTTI Cell: 416-268-0425 E-mail: [email protected] Production “Downsizing” Feature Film Paramount Pictures Coordinator Producers: Mark Johnson 2016 Director: Alexander Payne Line Producer: Diana Pokorny UPM: Regina Robb Cast: Matt Damon, Kristen Wiig, Jason Sudeikis, Christoph Waltz Production “11.22.63” Mini Series Bad Robot / Warner Bros. Coordinator Producers: JJ Abrams, Kathy Lingg, Bridget Carpenter 2015 Director: Various Line Producer: Joseph Boccia UPM: Anna Beben Cast: James Franco, Sarah Gadon, Chris Cooper, Josh Duhamel, George MacKay Production “MARILYN” Mini Series Asylum / Lifetime Supervisor Producers: Don Carmody, David Cormican 2014 - 2015 Director: Laurie Collyer Line Producer / UPM: Joseph Boccia Cast: Susan Sarandon, Emily Watson, Kelli Garner, Jeffrey Dean Morgan Production “POLTERGEIST” (Reshoots Only) Feature Film MGM Coordinator Producers: Sam Raimi, Nathan Kahane, Roy Lee 2014 Director: Gil Kenan (Re-shoots) Line Producer: Becki Trujillo UPM: Joseph Boccia Cast: Sam Rockwell, Rosemarie DeWitt, Jared Harris Production “AGATHA” TV Pilot ABC Studios Coordinator Exec. Producers: Mark Gordon, Nick Pepper, Michael McDonald 2014 Director: Jace Alexander Line Producer: Todd Arnow UPM: Joseph Boccia Cast: Bojana Novakovic, Clancy Brown, Meta Golding Production “MAPS TO THE STARS” Feature Film Independent Supervisor Producers: Martin Katz, Saïd Ben Saïd, Michel Merkt 2013 France / Canada Director: David Cronenberg Co-Production Line Producer / UPM: Joseph Boccia Cast: Julianne Moore, John Cusack, Mia Wasikowska, -

Hoffman Shines in Powerful Capote

February 10, 2006. Hoffman shines in powerful Capote. Seth Motel, Editor in Chief. You know a movie is good when the only major complaint about it is its misleading title. Capote is not a biography of legendary author Truman Capote, but rather a mixture of character study and the process of writing a book. And that’s where the criticism ends. Unlike 2004’s Ray, Capote only follows the author for the few years of his life in which he’s gathering research for his groundbreaking “nonfiction novel,” In Cold Blood. The six years he spends researching and writing it wipe him out, as his investigation of the murder leads to an emotional conflict. Capote is portrayed by Philip Seymour Hoffman (Almost Famous) in what may be the best performance of the year. The only un-Capote-like thing about Hoffman is his six-and-a-half-inch height advantage over the real one. Outside of that facet, Hoffman is hauntingly spectacular. He goes on a trek to Kansas to interview the suspected murderers in a particularly brutal slaying. He takes along lifelong friend and To Kill a Mockingbird scribe Harper Lee (Catherine Keener) to help him get inside the heads of the supposed cold-blooded killers. What Truman expects to be a piece for The New Yorker becomes a project he works on for six years. The prisoners keep getting stays of execution, eliminating any possibility of him completing the book. More of a dilemma for Truman is his friendship (some real-life critics argue that it was a love affair) with one of the prisoners (Clifton Collins, Jr. -

ADRUITHA LEE Hair Stylist IATSE 706 and 798

ADRUITHA LEE Hair Stylist IATSE 706 and 798 FILM CANTERBURY GLASS Co-Department Head New Regency Productions Director: David O. Russell RED NOTICE Department Head Netflix Director: Rawson Marshall THE ETERNALS Personal Hairstylist to Angelina Jolie Marvel Studios Director: Ms. Chloé Zhao THE CONJURING 3 Department Head Warner Bros. Director: Mr. Michael Chaves IRRESISTIBLE Department Head Hair Harlan Films, LLC. Director: Jon Stewart Cast: Steve Carell, Mackenzie Davis, Chris Cooper BIRDS OF PREY Department Head Warner Bros. Director: Cathy Yan Cast: Margot Robbie, Ewan McGregor, Chris Messina, Jurnee Smollett-Bell BOMBSHELL Personal Hair-Stylist to Charlize Theron Bron/Lionsgate Director: Jay Roach JUNGLE CRUISE Department Head Walt Disney Pictures Director: Jaume Collet-Serra Cast: Jesse Plemons, Paul Giamatti, Edgar Ramirez, Veronica Falcón GOOSEBUMPS: HORRORLAND Department Head Columbia Pictures Director: Ari Sandel Cast: Jeremy Ray Taylor, Madison Iseman, Ken Jeong VENOM Department Head Sony Pictures Director: Ruben Fleischer Cast: Michelle Williams, Tom Hardy, Woody Harrelson THE SPY WHO DUMPED ME Personal Hair Stylist to Mila Kunis Imagine Entertainment Director: Susanna Fogel RAMPAGE Department Head New Line Cinema Director: Brad Peyton Cast: Malin Akerman, Jeffrey Dean Morgan, Marley Shelton, Joe Manganiello THE MILTON AGENCY Adruitha Lee 6715 Hollywood Blvd #206, Los Angeles, CA 90028 Hairstylist Telephone: 323.466.4441 Facsimile: 323.460.4442 IATSE 706, 798 inquiries@miltonagency.com www.miltonagency.com I, TONYA -

2015 Emmy for His Guest Role in the Series

Outdoor Films. Mrs. Doubtfire. Director: Chris Columbus. Friday, 7:30 p.m., Taylor Street Outdoor Theatre. Iconic comedian Robin Williams plays Daniel Hillard, an out-of-work actor whose recent divorce left him without custody of his three beloved children. When he learns that his ex-wife needs a housekeeper, he applies for the job and disguises himself as a devoted British nanny in order to see his kids every day. USA/1993/125 min. Best Make-up at the 1994 Academy Awards; Best Performance by an Actor in a Motion Picture at the 1994 Golden Globes. Ratatouille. Director: Brad Bird. Saturday, 7:30 p.m., Taylor Street Outdoor Theatre. Remy is a determined young rat, gifted with highly developed senses of taste and smell. Inspired by his idol, the recently deceased chef Auguste Gusteau, Remy dreams of becoming a chef himself. When fate places Remy by Gusteau’s restaurant in Paris, he strikes an alliance with Linguini, the garbage boy. Torn between his family’s wishes and his true calling, Remy and his pal Linguini set in motion a hilarious chain of events that turns the City of Lights upside down. USA/2007/111 min. Cinema Paradiso (Nuovo Cinema Paradiso). Director: Giuseppe Tornatore. Sunday, 7:30 p.m., Taylor Street Outdoor Theatre. In a post-war Sicilian village, Salvatore is a cheeky young boy enchanted by the flickering images at the local Cinema Paradiso. Eager to learn the secret of cinema’s magic, he frequently visits Alfredo, the projectionist, and forms a deep bond with him. -

Engineering (Mail Stop No

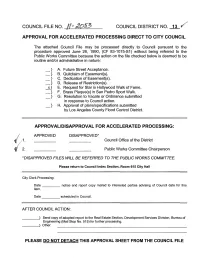

COUNCIL FILE NO. /f- t20S3 COUNCIL DISTRICT NO. 13 APPROVAL FOR ACCELERATED PROCESSING DIRECT TO CITY COUNCIL The attached Council File may be processed directly to Council pursuant to the procedure approved June 26, 1990, (CF 83-1075-S1) without being referred to the Public Works Committee because the action on the file checked below is deemed to be routine and/or administrative in nature: [ ] A. Future Street Acceptance. [ ] B. Quitclaim of Easement(s). [ ] C. Dedication of Easement(s). [ ] D. Release of Restriction(s). [X] E. Request for Star in Hollywood Walk of Fame. [ ] F. Brass Plaque(s) in San Pedro Sport Walk. [ ] G. Resolution to Vacate or Ordinance submitted in response to Council action. [ ] H. Approval of plans/specifications submitted by Los Angeles County Flood Control District. APPROVAL/DISAPPROVAL FOR ACCELERATED PROCESSING: APPROVED DISAPPROVED* 1. Council Office of the District 2. Public Works Committee Chairperson *DISAPPROVED FILES WILL BE REFERRED TO THE PUBLIC WORKS COMMITTEE. Please return to Council Index Section, Room 615 City Hall. City Clerk Processing: Date notice and report copy mailed to interested parties advising of Council date for this item. Date scheduled in Council. AFTER COUNCIL ACTION: ____ Send copy of adopted report to the Real Estate Section, Development Services Division, Bureau of Engineering (Mail Stop No. 515) for further processing. ____ Other: PLEASE DO NOT DETACH THIS APPROVAL SHEET FROM THE COUNCIL FILE. ACCELERATED REVIEW PROCESS - E. Office of the City Engineer, Los Angeles, California. DEC 07 2011. To the Honorable Council of the City of Los Angeles. Honorable Members: C. -

Movie Data Analysis.Pdf

FinalProject 25/08/2018, 9:30 PM COGS108 Final Project Group Members: Yanyi Wang, Ziwen Zeng, Lingfei Lu, Yuhan Wang, Yuqing Deng Introduction and Background Movie revenue is one of the most important measures of whether a movie is good or bad. Revenue is also the most important and intuitive feedback to producers, directors and actors. It is therefore worth analyzing which factors correlate with revenue, so that producers, directors and actors know how to earn higher revenue on their next movie by focusing on the most correlated factors. Our project focuses on analyzing the factors that correlate with revenue, for example genres, elements in the movie, popularity, release month, runtime, vote average, vote count on the website, and cast. After the analysis, we can clearly see which factors are crucial to a movie's revenue, and our analysis can be used as a guide for filmmakers who want to earn higher revenue on their next movie. They can focus on the most correlated factors, for example by shooting a specific genre and hiring actors who have higher average revenue. Research Question: Various factors blend together to create high revenue for a movie, but which aspects contribute most to higher revenue? Which aspects should people put more effort into, and which factors should they focus on when trying to raise a movie's revenue? Hypothesis: We predict that the following factors contribute the most to movie revenue. -

Philip Seymour Hoffman

PHILIP SEYMOUR HOFFMAN, CATHERINE KEENER, CLIFTON COLLINS JR., CHRIS COOPER, BRUCE GREENWOOD, BOB BALABAN, MARK PELLEGRINO, AMY RYAN in CAPOTE. Directed by Bennett Miller. Written by Dan Futterman, based on the book by Gerald Clarke. A Sony Pictures Classics Release. EAST COAST: Donna Daniels Public Relations; Donna Daniels, Rona Geller; Ph: (212) 869-7233; Fx: (212) 869-7114; 1375 Broadway, 21st Floor, New York, NY 10018. WEST COAST: Block-Korenbrot; Melody Korenbrot, Lee Ginsberg; Ph: (323) 634-7001; Fx: (323) 634-7030; 110 S. Fairfax Ave, Ste 310, Los Angeles, CA 90036. DISTRIBUTOR: Sony Pictures Classics; Carmelo Pirrone, Angela Gresham; Ph: (212) 833-8833; Fx: (212) 833-8844; 550 Madison Ave., 8th Fl., New York, NY 10022. Visit the Sony Pictures Classics Internet site at: http://www.sonyclassics.com CAPOTE The Cast: Truman Capote: PHILIP SEYMOUR HOFFMAN; Nelle Harper Lee: CATHERINE KEENER; Perry Smith: CLIFTON COLLINS Jr.; Alvin Dewey: CHRIS COOPER; Jack Dunphy: BRUCE GREENWOOD; William Shawn: BOB BALABAN; Marie Dewey: AMY RYAN; Dick Hickock: MARK PELLEGRINO; Laura Kinney: ALLIE MICKELSON; Warden Marshall Krutch: MARSHALL BELL; Dorothy Sanderson: ARABY LOCKHART; New York Reporter: ROBERT HUCULAK; Roy Church: R.D. REID; Harold Nye: ROBERT McLAUGHLIN; Sheriff Walter Sanderson: HARRY NELKEN; Danny Burke: KERR HEWITT; Judge Roland Tate: JOHN MACLAREN; Jury Foreman: JEREMY DANGERFIELD; Porter: KWESI AMEYAW; Chaplain: JIM SHEPARD; Pete Holt: JOHN DESTRY; Lowell Lee Andrews: C. ERNST HARTH; Richard Avedon: ADAM KIMMEL. CAPOTE The Filmmakers: Director: BENNETT MILLER; Screenplay: DAN FUTTERMAN; Based on the book “Capote” -

The Company Men Written & Directed by John Wells Principal Actors: Ben Affleck with Chris Cooper & Tommy Lee Jones

The Company Men Written & Directed by John Wells Principal Actors: Ben Affleck with Chris Cooper & Tommy Lee Jones By Guest Critic Patrick McDonald SPECIAL FOR FILMS FOR TWO© Synopsis: Ripped from recent headlines, The Company Men portrays the effect of “the Great Recession” of 2008 on three men (played by Ben Affleck, Chris Cooper and Tommy Lee Jones) and their families. John Wells, known primarily as the executive producer of groundbreaking television series like ER and The West Wing, makes his feature film debut. Pat’s Review: In dealing with the overall economic downturn of 2008 (the one the world is still experiencing), The Company Men presents three individuals facing long droughts of joblessness. The frustration and insecurity that develops through this is poignantly rendered in writer/director John Wells’ The Company Men. Wells frames his narrative through these characters, all at different levels of one company, plagued with the backlash of a falling stock price, lost business and too many “human units” for the company to “support.” It’s time to downsize. Ben Affleck is “Bobby Walker,” a happy-go-lucky director of sales for a Boston shipping company. On a day when the news is ominous (the chirpy morning shows having to mournfully report on the potential economic collapse in America 2007), Bobby is simply going to work. But when he arrives, he is called on the carpet; his position has been eliminated. Behind Bobby’s firing is the power base of the company: founders “Gene McClary” (Tommy Lee Jones) and “Jim Salinger” (Craig T. Nelson). Gene, a plain speaker who tells it like it is, tries to be pragmatic regarding the circumstances. -

Sarah Mays Makeup Department Head Atlanta Iatse 798

SARAH MAYS MAKEUP DEPARTMENT HEAD ATLANTA IATSE 798 FEATURES/DEPARTMENT HEAD VACATION FRIENDS • Broken Road Pictures • Exec. Producer: Timothy M. Bourne • 2020 Cast: John Cena, Meredith Hagner, Lil Rel Howery, Yvonne Orji GREENLAND • Thunder Road Pictures • Exec. Producer: Harold van Lier, Nik Bower, Deepak Nayar • 2019 Cast: Gerard Butler, Morena Baccarin IRRESISTIBLE • Focus Features • Exec. Producer: Lila Yacoub • Director: Jon Stewart • 2019 Cast: Steve Carell, Rose Byrne, Chris Cooper, Mackenzie Davis, Topher Grace, Natasha Lyonne ZOMBIELAND 2: DOUBLE TAP • Sony Pictures • Exec. Producer: Paul Wernick, Rhett Reese • Director: Ruben Fleischer • 2019 Cast: Emma Stone, Woody Harrelson, Jesse Eisenberg, Abigail Breslin, Zoey Deutch, Avan Jogia, Bill Murray, Rosario Dawson FATHER FIGURES • Warner Bros/Alcon Entertainment • Exec. Prod: Tim Bourne • Director: Larry Sher • 2015 Cast: Owen Wilson, Ed Helms, Glenn Close, J.K. Simmons, Katt Williams, Terry Bradshaw, Ving Rhames KEEPING UP WITH THE JONESES • 20th Century Fox • Exec. Prod: Tim Bourne • Director: Greg Mottola • 2015 Cast: Zach Galifianakis, Jon Hamm, Isla Fisher, Gal Gadot THE ACCOUNTANT • Warner Bros. • Producer: Marty Ewing • Director: Gavin O'Connor • 2015 Cast: Ben Affleck, Anna Kendrick, J.K. Simmons, Jon Bernthal, Cynthia Addai-Robinson, Jeffrey Tambor, John Lithgow TERM LIFE • Redeemed Prod., LLC • Prod: Tim Bourne, Victoria, Vince Vaughn • Director: P. Billingsley • 2014 Cast: Vince Vaughn, Hailee Steinfeld, Bill Paxton, Jonathan Banks, Terrence Howard, Shea Whigham SINGLE MOM'S CLUB • Tyler Perry Studios • Producer: Jonathan McKoy • Director: Tyler Perry • 2013 Cast: Nia Long, Wendi McLendon-Covey, Amy Smart, Zulay Henao, Cocoa Brown, Ryan Eggold THE INTERNSHIP • Twentieth Century Fox • Exec. Prod: Mary McLaglen • Director: Shawn Levy • 2013 Cast: Vince Vaughn, Owen Wilson, Rose Byrne, Max Minghella, Josh Brener, Dylan O’Brien AMERICAN REUNION • Universal • Producers: Chris Moore, Craig Perry • Director: J.