A Beam Tracing Approach to Acoustic Modeling for Interactive Virtual Environments

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Anti-Aliased and Accelerated Ray Tracing

Anti-aliased and accelerated ray tracing. University of Texas at Austin, CS384G - Computer Graphics, Fall 2010, Don Fussell.

Reading. Required: Watt, sections 12.5.3 – 12.5.4, 14.7. Further reading: A. Glassner, An Introduction to Ray Tracing, Academic Press, 1989. [In the lab.]

Aliasing in rendering. One of the most common rendering artifacts is the “jaggies”. Consider rendering a white polygon against a black background; we would instead like to get a smoother transition.

Anti-aliasing. Q: How do we avoid aliasing artifacts? 1. Sampling. 2. Pre-filtering. 3. Combination. Example: polygon anti-aliasing.

Antialiasing in a ray tracer. We would like to compute the average intensity in the neighborhood of each pixel. When casting one ray per pixel, we are likely to have aliasing artifacts. To improve matters, we can cast more than one ray per pixel and average the result (a.k.a. super-sampling and averaging down).

Speeding it up. Vanilla ray tracing is really slow! Consider: m x m pixels, k x k supersampling, and n primitives, average ray path length of d, with 2 rays cast recursively per intersection. Complexity = [formula missing]. For m = 1,000,000, k = 5, n = 100,000, d = 8: very expensive! In practice, some acceleration technique is almost always used.
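The supersampling idea in the lecture excerpt above (cast more than one ray per pixel and average the result) can be illustrated with a minimal Python sketch. The names `supersample_pixel` and `half_plane` are hypothetical and not taken from the slides; `half_plane` stands in for tracing a ray against a white polygon on a black background, and the sub-samples are placed on a regular k x k grid, which is only one possible sampling pattern.

```python
from typing import Callable


def supersample_pixel(shade: Callable[[float, float], float],
                      px: int, py: int, k: int) -> float:
    """Average k*k regularly spaced samples inside pixel (px, py).

    `shade(x, y)` is an assumed stand-in for casting one ray through
    image-plane position (x, y) and returning its intensity.
    """
    total = 0.0
    for j in range(k):
        for i in range(k):
            # Sample at the centre of each of the k*k sub-pixel cells.
            x = px + (i + 0.5) / k
            y = py + (j + 0.5) / k
            total += shade(x, y)
    return total / (k * k)


def half_plane(x: float, y: float) -> float:
    """Toy scene: white (1.0) left of x = 0.5, black (0.0) elsewhere."""
    return 1.0 if x < 0.5 else 0.0


if __name__ == "__main__":
    # One ray per pixel sees only black; 4 x 4 supersampling sees the edge.
    print(supersample_pixel(half_plane, 0, 0, 1))
    print(supersample_pixel(half_plane, 0, 0, 4))
```

With one sample per pixel the edge pixel is rendered fully black, while the averaged 4 x 4 result is a grey value proportional to the covered area, which is the smoother transition the slides ask for. The cost is k x k times as many primary rays per pixel.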

Interactive Image-Space Techniques for Approximating Caustics

Interactive Image-Space Techniques for Approximating Caustics. Chris Wyman and Scott Davis, University of Iowa.

Figure 1: Our approach to interactive caustics builds off work for interactive reflections and refractions. The first pass renders (a) the view from the light, (b) a depth map for shadow mapping, and (c) final photon locations into buffers. The second pass gathers the photons and (e) renders a result from the eye’s point of view. The result significantly improves on (d) existing interactive renderings, and compares favorably with (f) photon mapping.

Abstract. Interactive applications require simplifications to lighting, geometry, and material properties that preclude many effects encountered in the physical world. Until recently only the most simplistic reflections and refractions could be performed interactively, but state-of-the-art research has lifted some restrictions on such materials. This paper builds upon this work, but examines reflection and refraction from the light’s viewpoint to achieve interactive caustics from point sources. Our technique emits photons from the light and stores the results in image-space, similar to a shadow map.

[...] significant simplifications to the underlying physical models. These simplifications typically restrict the global interactions that can occur between objects, and dynamic specular interactions are frequently eliminated first. While perfect specular interactions are easily modeled using a ray tracing paradigm, these techniques do not apply well to the GPU pipeline. Brute-force ray tracing for mainstream applications seems distant (either via parallel implementations [Wald et al. 2002], ray tracing accelerators [Woop et al. 2005], or GPU ray tracing [Purcell et al. 2002]), but techniques for applying rasterization to interactively approx-

Topological Space Partition for Fast Ray Tracing in Architectural Models

Topological Space Partition for Fast Ray Tracing in Architectural Models. Maxime Maria, Sébastien Horna and Lilian Aveneau, University of Poitiers, XLIM-SIC, UMR7252 CNRS, Futuroscope Chasseneuil Cedex, Poitiers, France.

Keywords: Interactive Ray Tracing, Beam Tracing, Acceleration Structure, Architecture, Topology, Cells-and-Portals.

Abstract: Fast ray-tracing requires an efficient acceleration structure. For architectural environment, the most famous is the cells-and-portals one. Many previous works attempt to automatically construct a good cells-and-portals. We propose a new acceleration structure which extends the classical cells-and-portals. It is automatically extracted from the topological model of a given building. It contains a low number of large volumes, all of them linked into a graph model. The scan of our structure is particularly simple and rapid, using all the topological information available from the topological model. The scan can be done for a single ray, or a wide ray packet. We show in this paper that our structure allows an interactive rendering even for large building models, with direct lighting from some thousands of point lights.

1 INTRODUCTION. Ray-tracing is a rendering technique allowing to compute high-quality realistic images. It allows to render all kinds of visual phenomena such as reflection, refraction, direct or global illumination. Its main disadvantage lies in its high computational cost.

[...] Meneveaux et al., 1998; Fradin et al., 2005), the efficiency of cells-and-portals have been demonstrated using only neighbourhood information between volumes. In this article, we propose a new acceleration structure for ray tracing in architectural environments.

A Beam Tracing with Precise Antialiasing for Polyhedral Scenes Djamchid Ghazanfarpour, Jean-Marc Hasenfratz

To cite this version: Djamchid Ghazanfarpour, Jean-Marc Hasenfratz. A Beam Tracing with Precise Antialiasing for Polyhedral Scenes. Computers and Graphics, Elsevier, 1998, 22 (1), pp.103–115. inria-00509981. HAL Id: inria-00509981, https://hal.inria.fr/inria-00509981. Submitted on 17 Aug 2010.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

A Beam Tracing Method with Precise Antialiasing for Polyhedral Scenes. Djamchid Ghazanfarpour and Jean-Marc Hasenfratz, Laboratoire MSI - Université de Limoges, E.N.S.I.L. Technopole, F87068 Limoges Cedex, France.

1. Introduction. Ray tracing is one of the most interesting algorithms in computer graphics to generate realistic images of 3D scenes. It is able to simulate various optic phenomena such as shadows, reflections and refractions. However, ray tracing is in essence a point sampling approach (the scene is sampled at the centre of each screen pixel) and thus is prone to the well-known aliasing problems (image 1). This is also the case of many other visualization algorithms such as the popular z-buffer [1].

Real-Time Global Illumination Using OpenGL and Voxel Cone Tracing

Freie Universität Berlin, Bachelor’s Thesis. Real-Time Global Illumination Using OpenGL And Voxel Cone Tracing. Author: Benjamin Kahl. Supervisor: Prof. Wolfgang Mulzer. A revised and corrected version of a thesis submitted in fulfillment of the requirements for the degree of Bachelor of Science in the Department of Mathematics and Computer Science on February 19, 2019. April 2, 2021. arXiv:2104.00618v1 [cs.GR] 1 Apr 2021.

Abstract. Building systems capable of replicating global illumination models with interactive frame-rates has long been one of the toughest conundrums facing computer graphics researchers. Voxel Cone Tracing, as proposed by Cyril Crassin et al. in 2011, makes use of mipmapped 3D textures containing a voxelized representation of an environment’s direct light component to trace diffuse, specular and occlusion cones in linear time to extrapolate a surface fragment’s indirect light emitted towards a given photoreceptor. Seemingly providing a well-disposed balance between performance and physical fidelity, this thesis examines the algorithm’s theoretical side on the basis of the rendering equation as well as its practical side in the context of a self-implemented, OpenGL-based variant. Whether it can compete with long-standing alternatives such as radiosity and raytracing will be determined in the subsequent evaluation.

Contents: Abstract. 1 Preface: 1.1 Introduction; 1.2 Problem and Objective; 1.3 Thesis Structure. 2 Introduction: 2.1 Basic Optics.

A Comprehensive Theory of Volumetric Radiance Estimation Using Photon Points and Beams

A Comprehensive Theory of Volumetric Radiance Estimation using Photon Points and Beams. Wojciech Jarosz (Disney Research Zürich and UC San Diego), Derek Nowrouzezahrai (Disney Research Zürich and University of Toronto), Iman Sadeghi and Henrik Wann Jensen (UC San Diego).

We present two contributions to the area of volumetric rendering. We develop a novel, comprehensive theory of volumetric radiance estimation that leads to several new insights and includes all previously published estimates as special cases. This theory allows for estimating in-scattered radiance at a point, or accumulated radiance along a camera ray, with the standard photon particle representation used in previous work. Furthermore, we generalize these operations to include a more compact, and more expressive intermediate representation of lighting in participating media, which we call “photon beams.” The combination of these representations and their respective query operations results in a collection of nine distinct volumetric radiance estimates. Our second contribution is a more efficient rendering method for participating media based on photon beams.

[...] organic materials is due to the way these media “participate” in light interactions by emitting, absorbing, or scattering photons. These phenomena are common in the real world but, unfortunately, are incredibly costly to simulate accurately. Because of this, computer graphics has had a long-standing interest in developing more efficient, accurate, and general participating media rendering techniques. We refer the reader to the recent survey by Cerezo et al. [2005] for a comprehensive overview. The most general techniques often use a form of stochastic sampling and Monte Carlo integration. This includes unbiased techniques such as (bidirectional) path tracing [Lafortune and Willems 1993; Veach and Guibas 1994; Lafortune and Willems 1996] or

Ray Differentials and Multiresolution Geometry Caching for Distribution Ray Tracing in Complex Scenes

EUROGRAPHICS 2003 / P. Brunet and D. Fellner (Guest Editors), Volume 22 (2003), Number 3. Ray Differentials and Multiresolution Geometry Caching for Distribution Ray Tracing in Complex Scenes. Per H. Christensen, David M. Laur, Julian Fong, Wayne L. Wooten and Dana Batali, Pixar Animation Studios.

Abstract. When rendering only directly visible objects, ray tracing a few levels of specular reflection from large, low-curvature surfaces, and ray tracing shadows from point-like light sources, the accessed geometry is coherent and a geometry cache performs well. But in many other cases, the accessed geometry is incoherent and a standard geometry cache performs poorly: ray tracing of specular reflection from highly curved surfaces, tracing rays that are many reflection levels deep, and distribution ray tracing for wide glossy reflection, global illumination, wide soft shadows, and ambient occlusion. Fortunately, less geometric accuracy is necessary in the incoherent cases. This observation can be formalized by looking at the ray differentials for different types of scattering: coherent rays have small differentials, while incoherent rays have large differentials. We utilize this observation to obtain efficient multiresolution caching of geometry and textures (including displacement maps) for classic and distribution ray tracing in complex scenes. We use an existing multiresolution caching scheme (originally developed for scanline rendering) for textures and displacement maps, and introduce a multiresolution geometry caching scheme for tessellated surfaces. The multiresolution geometry caching scheme makes it possible to efficiently render scenes that, if fully tessellated, would use 100 times more memory than the geometry cache size.

1. Introduction. Our goal is to render ray tracing and global illumination ef-

[...] effect. For example, due to the fixed resolution of shadow maps, they are not suitable for computing tiny, sharp shadows in large scenes.

A Real-Time Beam Tracer with Application to Exact Soft Shadows

Eurographics Symposium on Rendering (2007), Jan Kautz and Sumanta Pattanaik (Editors). A Real-time Beam Tracer with Application to Exact Soft Shadows. Ryan Overbeck (Columbia University), Ravi Ramamoorthi (Columbia University) and William R. Mark (University of Texas at Austin).

Abstract. Efficiently calculating accurate soft shadows cast by area light sources remains a difficult problem. Ray tracing based approaches are subject to noise or banding, and most other accurate methods either scale poorly with scene geometry or place restrictions on geometry and/or light source size and shape. Beam tracing is one solution which has historically been considered too slow and complicated for most practical rendering applications. Beam tracing’s performance has been hindered by complex geometry intersection tests, and a lack of good acceleration structures with efficient algorithms to traverse them. We introduce fast new algorithms for beam tracing, specifically for beam–triangle intersection and beam–kd-tree traversal. The result is a beam tracer capable of calculating precise primary visibility and point light shadows in real-time. Moreover, beam tracing provides full area elements instead of point samples, which allows us to maintain coherence through to secondary effects and utilize the GPU for high quality antialiasing and shading with minimal extra cost. More importantly, our analysis shows that beam tracing is particularly well suited to soft shadows from area lights, and we generate essentially exact noise-free soft shadows for complex scenes in seconds rather than minutes or hours.

1. Introduction. Soft shadows are valuable for photorealistic rendering. They provide visual depth cues and help to set the mood in an

[...] benefits of these methods are relatively modest for secondary effects such as soft shadows.

Simulation of Radio Wave Propagation by Beam Tracing

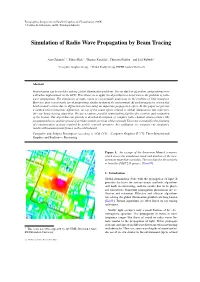

Eurographics Symposium on Parallel Graphics and Visualization (2009), J. Comba, K. Debattista, and D. Weiskopf (Editors). Simulation of Radio Wave Propagation by Beam Tracing. Arne Schmitz∗, Tobias Rick+, Thomas Karolski∗, Thorsten Kuhlen+ and Leif Kobbelt∗ (∗Computer Graphics Group, +Virtual Reality Group, RWTH Aachen University).

Abstract. Beam tracing can be used for solving global illumination problems. It is an efficient algorithm, and performs very well when implemented on the GPU. This allows us to apply the algorithm in a novel way to the problem of radio wave propagation. The simulation of radio waves is conceptually analogous to the problem of light transport. However, their wavelengths are of proportions similar to that of the environment. At such frequencies, waves that bend around corners due to diffraction are becoming an important propagation effect. In this paper we present a method which integrates diffraction, on top of the usual effects related to global illumination like reflection, into our beam tracing algorithm. We use a custom, parallel rasterization pipeline for creation and evaluation of the beams. Our algorithm can provide a detailed description of complex radio channel characteristics like propagation losses and the spread of arriving signals over time (delay spread). Those are essential for the planning of communication systems required by mobile network operators. For validation, we compare our simulation results with measurements from a real world network.

Categories and Subject Descriptors (according to ACM CCS): Computer Graphics [I.3.7]: Three-Dimensional Graphics and Realism: Raytracing.

Figure 1: An excerpt of the downtown Munich scenario, which shows the simulation result and markers of the measurement route that was taken.

Extending Backward Beam Tracing to Glossy Scattering Surfaces

Volume xx (200y), Number z, pp. 1–11. Extending Backward Beam Tracing to Glossy Scattering Surfaces. B. Duvenhage (University of Pretoria, Pretoria, South Africa), K. Bouatouch (University of Rennes 1, Rennes, France) and D. G. Kourie (University of Pretoria, Pretoria, South Africa).

Abstract. From the literature, it is known that backward polygon beam tracing methods, i.e. beam tracing from the light source (L), are well suited to gather path coherency from specular (S) scattering surfaces. These methods are of course useful for modelling and efficiently simulating caustics on diffuse (D) surfaces which are due to LS+D transport paths. This paper generalises backward polygon beam tracing to include a glossy (G) scattering surface. To this end the details of a backward polygon beam tracing model and implementation of L(S|G)D transport paths are presented. A ray tracing forward renderer is used to connect these lumped transport paths to the eye (E). Although we limit the discussion to short transport paths we show that backward beam tracing outperforms photon mapping by an order of magnitude for rendering caustics from glossy and specular surfaces.

Categories and Subject Descriptors (according to ACM CCS): I.3.7 [Computer Graphics]: Three Dimensional Graphics and Realism: Color, shading, shadowing, and texture.

1. Introduction. Watt [Wat90] and others [EAMJ05][BP00] have used backward polygon beam tracing to efficiently simulate transport paths from the light source (L) that contain a sequence of specular (S) surface interactions and a final diffuse (D) interaction. Using Heckbert’s [Hec90] regular expression notation the modelled transport paths are described with the expression LS+D.

A Beam Tracing Method for Interactive Architectural Acoustics

A Beam Tracing Method for Interactive Architectural Acoustics. Thomas Funkhouser (Princeton University); Nicolas Tsingos, Ingrid Carlbom, Gary Elko, Mohan Sondhi, James E. West and Gopal Pingali (Bell Laboratories); Patrick Min and Addy Ngan (Princeton University). Suggested abbreviated title: “Beam Tracing for Interactive Architectural Acoustics”. Received:

Abstract. A difficult challenge in geometrical acoustic modeling is computing propagation paths from sound sources to receivers fast enough for interactive applications. We describe a beam tracing method that enables interactive updates of propagation paths from a stationary source to a moving receiver. During a precomputation phase, we trace convex polyhedral beams from the location of each sound source, constructing a “beam tree” representing the regions of space reachable by potential sequences of transmissions, diffractions, and specular reflections at surfaces of a 3D polygonal model. Then, during an interactive phase, we use the precomputed beam trees to generate propagation paths from the source(s) to any receiver location at interactive rates. The key features of our beam tracing method are: 1) it scales to support large architectural environments, 2) it models propagation due to wedge diffraction, 3) it finds all propagation paths up to a given termination criterion without exhaustive search or risk of under-sampling, and 4) it updates propagation paths at interactive rates. We demonstrate use of this method for interactive acoustic design of architectural environments. PACS numbers: 43.55.Ka 43.58.Ta

1 Introduction. Geometric acoustic modeling tools are commonly used for design and simulation of 3D architectural environments. For example, architects use CAD tools to evaluate the acoustic properties of proposed auditorium designs, factory planners predict the sound levels at different positions on factory floors, and audio engineers optimize arrangements of loudspeakers.
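The abstract above mentions specular reflections at polygonal surfaces. As a small illustration only, the following Python sketch shows the standard mirror-image construction from geometric acoustics: a source is mirrored across a reflecting plane, and the length of the specularly reflected path to a receiver equals the straight-line distance from the mirrored source. The function names are hypothetical, and this is not the paper's beam tree algorithm; the excerpt does not show how the authors build or query their beams.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def mirror_point(p: Vec3, n: Vec3, d: float) -> Vec3:
    """Mirror point p across the plane {x : n . x = d}.

    Assumes n is a unit-length normal.
    """
    signed_dist = sum(pi * ni for pi, ni in zip(p, n)) - d
    return tuple(pi - 2.0 * signed_dist * ni for pi, ni in zip(p, n))


def reflected_path_length(source: Vec3, receiver: Vec3,
                          n: Vec3, d: float) -> float:
    """Length of the single-bounce specular path via the plane.

    Only meaningful when source and receiver lie on the same side of the
    plane and the reflection point falls inside the actual reflecting
    polygon; that visibility check is omitted in this sketch.
    """
    image = mirror_point(source, n, d)
    return math.dist(image, receiver)


if __name__ == "__main__":
    floor_normal, floor_offset = (0.0, 0.0, 1.0), 0.0  # the plane z = 0
    src, rcv = (0.0, 0.0, 1.0), (3.0, 0.0, 3.0)
    print(mirror_point(src, floor_normal, floor_offset))
    print(reflected_path_length(src, rcv, floor_normal, floor_offset))
```

Dividing the path length by the speed of sound gives the arrival delay of that reflection, which is the kind of per-path quantity an interactive acoustic model needs to update as the receiver moves.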