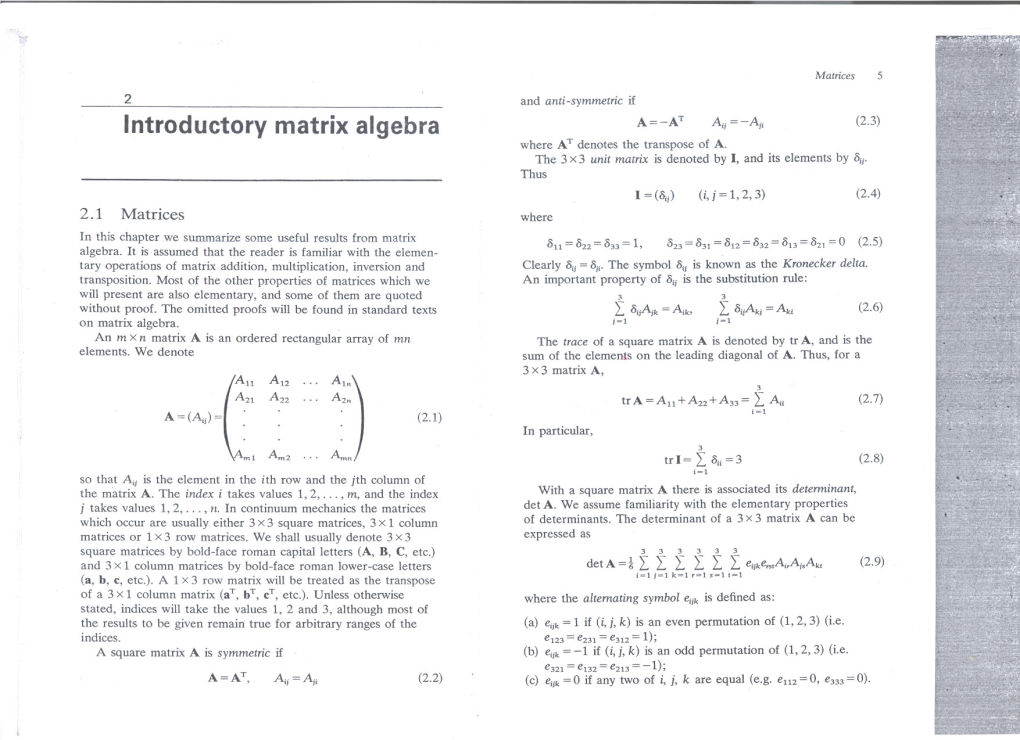

Introductory Matrix Algebra ~~ Where at Denotes the Transpose of A

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


21. Orthonormal Bases

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.
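The properties in this excerpt can be checked numerically. The following is a minimal sketch in plain Python for n = 3; the helper names (`standard_basis`, `inner`, `outer`) are illustrative and not taken from the source. It builds the standard basis, confirms that the inner products reproduce the Kronecker delta, and forms the outer products Π_i.

```python
def standard_basis(n):
    # e_i has a 1 in position i and zeros elsewhere (0-based here).
    return [[1 if r == i else 0 for r in range(n)] for i in range(n)]

def inner(v, w):
    # Inner product v^T w.
    return sum(a * b for a, b in zip(v, w))

def outer(v, w):
    # Outer product v w^T, a square matrix when v and w have equal length.
    return [[a * b for b in w] for a in v]

n = 3
e = standard_basis(n)

# gram[i][j] = e_i^T e_j, which should equal the Kronecker delta.
gram = [[inner(e[i], e[j]) for j in range(n)] for i in range(n)]

# projectors[i] = e_i e_i^T, a single 1 in the i-th diagonal position.
projectors = [outer(e[i], e[i]) for i in range(n)]

# Adding all the projectors entry by entry gives the identity matrix.
total = [[sum(P[r][c] for P in projectors) for c in range(n)]
         for r in range(n)]
```

Here `gram` and `total` both come out as the 3-by-3 identity matrix, which is the statement that the Kronecker delta gives the entries of the identity.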


Arxiv:2012.13347V1 [Physics.Class-Ph] 15 Dec 2020

KPOP E-2020-04

Bra-Ket Representation of the Inertia Tensor

U-Rae Kim, Dohyun Kim, and Jungil Lee∗, KPOPE Collaboration, Department of Physics, Korea University, Seoul 02841, Korea (Dated: June 18, 2021)

Abstract: We employ Dirac's bra-ket notation to define the inertia tensor operator that is independent of the choice of bases or coordinate system. The principal axes and the corresponding principal values for the elliptic plate are determined only based on the geometry. By making use of a general symmetric tensor operator, we develop a method of diagonalization that is convenient and intuitive in determining the eigenvector. We demonstrate that the bra-ket approach greatly simplifies the computation of the inertia tensor with an example of an N-dimensional ellipsoid. The exploitation of the bra-ket notation to compute the inertia tensor in classical mechanics should provide undergraduate students with a strong background necessary to deal with abstract quantum mechanical problems.

PACS numbers: 01.40.Fk, 01.55.+b, 45.20.dc, 45.40.Bb

Keywords: Classical mechanics, Inertia tensor, Bra-ket notation, Diagonalization, Hyperellipsoid

arXiv:2012.13347v1 [physics.class-ph] 15 Dec 2020

∗Electronic address: [email protected]; Director of the Korea Pragmatist Organization for Physics Education (KPOP E)

I. INTRODUCTION

The inertia tensor is one of the essential ingredients in classical mechanics with which one can investigate the rotational properties of rigid-body motion [1]. The symmetric nature of the rank-2 Cartesian tensor guarantees that it is described by three fundamental parameters called the principal moments of inertia $I_i$, each of which is the moment of inertia along a principal axis.
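As a small illustration of a symmetric inertia tensor and its principal moments, the sketch below uses the standard point-mass formula $I_{ij} = \sum m\,(r^2 \delta_{ij} - r_i r_j)$. This formula, the function name `inertia_tensor`, and the sample masses are assumptions for illustration; the paper itself treats continuous bodies with bra-ket notation, which is not reproduced here.

```python
def inertia_tensor(masses, positions):
    # I_ij = sum over particles of m * (r^2 * delta_ij - r_i * r_j).
    I = [[0, 0, 0] for _ in range(3)]
    for m, r in zip(masses, positions):
        r2 = sum(c * c for c in r)
        for i in range(3):
            for j in range(3):
                delta = 1 if i == j else 0
                I[i][j] += m * (r2 * delta - r[i] * r[j])
    return I

# Hypothetical configuration: masses placed symmetrically on the x and y axes,
# so the coordinate axes are already principal axes.
masses = [1, 1, 2, 2]
positions = [(1, 0, 0), (-1, 0, 0), (0, 2, 0), (0, -2, 0)]

I = inertia_tensor(masses, positions)
principal_moments = [I[k][k] for k in range(3)]
```

Because the masses sit on the coordinate axes, the tensor is already diagonal and its diagonal entries are the three principal moments; a general configuration would need an eigenvalue computation instead.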

Abstract Tensor Systems and Diagrammatic Representations

Abstract tensor systems and diagrammatic representations

Jānis Lazovskis, September 28, 2012

Abstract: The diagrammatic tensor calculus used by Roger Penrose (most notably in [7]) is introduced without a solid mathematical grounding. We will attempt to derive the tools of such a system, but in a broader setting. We show that Penrose's work comes from the diagrammisation of the symmetric algebra. Lie algebra representations and their extensions to knot theory are also discussed.

Contents

- 1 Abstract tensors and derived structures
  - 1.1 Abstract tensor notation
  - 1.2 Some basic operations
  - 1.3 Tensor diagrams
- 2 A diagrammised abstract tensor system
  - 2.1 Generation
  - 2.2 Tensor concepts
- 3 Representations of algebras
  - 3.1 The symmetric algebra
  - 3.2 Lie algebras
  - 3.3 The tensor algebra T(g)
  - 3.4 The symmetric Lie algebra S(g)
  - 3.5 The universal enveloping algebra U(g)
  - 3.6 The metrized Lie algebra
    - 3.6.1 Diagrammisation with a bilinear form
    - 3.6.2 Diagrammisation with a symmetric bilinear form
    - 3.6.3 Diagrammisation with a symmetric bilinear form and an orthonormal basis
    - 3.6.4 Diagrammisation under ad-invariance
  - 3.7 The universal enveloping algebra U(g) for a metrized Lie algebra g
- 4 Ensuing connections
- A Appendix

Note: This work relies heavily upon the text of Chapter 12 of a draft of "An Introduction to Quantum and Vassiliev Invariants of Knots," by David M.R. Jackson and Iain Moffatt, a yet-unpublished book at the time of writing.

Vectors, Matrices and Coordinate Transformations

S. Widnall, 16.07 Dynamics, Fall 2009. Lecture notes based on J. Peraire, Version 2.0.

Lecture L3 - Vectors, Matrices and Coordinate Transformations

By using vectors and defining appropriate operations between them, physical laws can often be written in a simple form. Since we will be making extensive use of vectors in Dynamics, we will summarize some of their important properties.

Vectors

For our purposes we will think of a vector as a mathematical representation of a physical entity which has both magnitude and direction in a 3D space. Examples of physical vectors are forces, moments, and velocities. Geometrically, a vector can be represented as an arrow. The length of the arrow represents its magnitude. Unless indicated otherwise, we shall assume that parallel translation does not change a vector, and we shall call the vectors satisfying this property free vectors. Thus, two vectors are equal if and only if they are parallel, point in the same direction, and have equal length.

Vectors are usually typed in boldface and scalar quantities appear in lightface italic type, e.g. the vector quantity $\mathbf{A}$ has magnitude, or modulus, $A = |\mathbf{A}|$. In handwritten text, vectors are often expressed using the arrow or underbar notation, e.g. $\vec{A}$, $\underline{A}$.

Vector Algebra

Here, we introduce a few useful operations which are defined for free vectors.

Multiplication by a scalar

If we multiply a vector $\mathbf{A}$ by a scalar $\alpha$, the result is a vector $\mathbf{B} = \alpha \mathbf{A}$, which has magnitude $B = |\alpha| A$. The vector $\mathbf{B}$ is parallel to $\mathbf{A}$ and points in the same direction if $\alpha > 0$.
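The magnitude rule for scalar multiplication can be checked with a few lines of plain Python. This is a minimal sketch; the helper names `scale`, `magnitude`, and `dot`, and the sample vector, are illustrative choices rather than anything from the lecture notes.

```python
import math

def scale(alpha, A):
    # B = alpha * A, computed component by component.
    return [alpha * a for a in A]

def magnitude(A):
    # |A| = sqrt(A . A)
    return math.sqrt(sum(a * a for a in A))

def dot(A, B):
    return sum(a * b for a, b in zip(A, B))

A = [3.0, 4.0, 0.0]      # magnitude 5
B_pos = scale(2.0, A)    # alpha > 0: same direction as A
B_neg = scale(-2.0, A)   # alpha < 0: opposite direction

mag_A = magnitude(A)
mag_pos = magnitude(B_pos)
mag_neg = magnitude(B_neg)
```

Both scaled vectors have magnitude |α| times 5, that is 10, while the sign of `dot(A, B)` shows whether the direction was preserved or reversed.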

Multilinear Algebra and Applications July 15, 2014

Multilinear Algebra and Applications, July 15, 2014.

Contents

- Chapter 1. Introduction
- Chapter 2. Review of Linear Algebra
  - 2.1. Vector Spaces and Subspaces
  - 2.2. Bases
  - 2.3. The Einstein convention
    - 2.3.1. Change of bases, revisited
    - 2.3.2. The Kronecker delta symbol
  - 2.4. Linear Transformations
    - 2.4.1. Similar matrices
  - 2.5. Eigenbases
- Chapter 3. Multilinear Forms
  - 3.1. Linear Forms
    - 3.1.1. Definition, Examples, Dual and Dual Basis
    - 3.1.2. Transformation of Linear Forms under a Change of Basis
  - 3.2. Bilinear Forms
    - 3.2.1. Definition, Examples and Basis
    - 3.2.2. Tensor product of two linear forms on V
    - 3.2.3. Transformation of Bilinear Forms under a Change of Basis
  - 3.3. Multilinear forms
  - 3.4. Examples
    - 3.4.1. A Bilinear Form
    - 3.4.2. A Trilinear Form
  - 3.5. Basic Operation on Multilinear Forms
- Chapter 4. Inner Products
  - 4.1. Definitions and First Properties
    - 4.1.1. Correspondence Between Inner Products and Symmetric Positive Definite Matrices
      - 4.1.1.1. From Inner Products to Symmetric Positive Definite Matrices
      - 4.1.1.2. From Symmetric Positive Definite Matrices to Inner Products
    - 4.1.2. Orthonormal Basis
  - 4.2. Reciprocal Basis
    - 4.2.1. Properties of Reciprocal Bases
    - 4.2.2. Change of basis from a basis $\mathcal{B}$ to its reciprocal basis $\mathcal{B}^g$
    - 4.2.3.

Geometric-Algebra Adaptive Filters Wilder B

Geometric-Algebra Adaptive Filters

Wilder B. Lopes∗, Member, IEEE, Cassio G. Lopes†, Senior Member, IEEE

Abstract: This paper presents a new class of adaptive filters, namely Geometric-Algebra Adaptive Filters (GAAFs). They are generated by formulating the underlying minimization problem (a deterministic cost function) from the perspective of Geometric Algebra (GA), a comprehensive mathematical language well-suited for the description of geometric transformations. Also, differently from standard adaptive-filtering theory, Geometric Calculus (the extension of GA to differential calculus) allows for applying the same derivation techniques regardless of the type (subalgebra) of the data, i.e., real, complex numbers, quaternions, etc. Relying on those characteristics (among others), a deterministic quadratic cost function is posed, from which the GAAFs are devised, providing a generalization of regular adaptive filters to subalgebras of GA. From the obtained update rule, it is shown how to recover the following least-mean squares (LMS) adaptive filter variants: real-entries LMS, complex LMS, and quaternions LMS. Mean-square analysis and simulations in a system identification scenario are provided, showing very good agreement for different levels of measurement noise.

Index Terms: Adaptive filtering, geometric algebra, quaternions.

Fig. 1 (labels: Faces; Edges (directed lines)). A polyhedron (3-dimensional polytope) can be completely described by the geometric multiplication of its edges (oriented lines, vectors), which generate the faces and hypersurfaces (in the case of a general n-dimensional polytope).

… perform calculus with hypercomplex quantities, i.e., elements that generalize complex numbers for higher dimensions [2]–[10]. GA-based AFs were first introduced in [11], [12], where they were successfully employed to estimate the geometric transformation (rotation and translation) that aligns a pair of

Matrices and Tensors

APPENDIX: MATRICES AND TENSORS

A.1. INTRODUCTION AND RATIONALE

The purpose of this appendix is to present the notation and most of the mathematical techniques that are used in the body of the text. The audience is assumed to have been through several years of college-level mathematics, which included the differential and integral calculus, differential equations, functions of several variables, partial derivatives, and an introduction to linear algebra. Matrices are reviewed briefly, and determinants, vectors, and tensors of order two are described. The application of this linear algebra to material that appears in undergraduate engineering courses on mechanics is illustrated by discussions of concepts like the area and mass moments of inertia, Mohr's circles, and the vector cross and triple scalar products. The notation, as far as possible, will be a matrix notation that is easily entered into existing symbolic computational programs like Maple, Mathematica, Matlab, and Mathcad. The desire to represent the components of three-dimensional fourth-order tensors that appear in anisotropic elasticity as the components of six-dimensional second-order tensors and thus represent these components in matrices of tensor components in six dimensions leads to the nontraditional part of this appendix. This is also one of the nontraditional aspects in the text of the book, but a minor one. This is described in §A.11, along with the rationale for this approach.

A.2. DEFINITION OF SQUARE, COLUMN, AND ROW MATRICES

An r-by-c matrix, M, is a rectangular array of numbers consisting of r rows and c columns:

Chapter 5 ANGULAR MOMENTUM and ROTATIONS

Chapter 5 ANGULAR MOMENTUM AND ROTATIONS

In classical mechanics the total angular momentum $\vec{L}$ of an isolated system about any fixed point is conserved. The existence of a conserved vector $\vec{L}$ associated with such a system is itself a consequence of the fact that the associated Hamiltonian (or Lagrangian) is invariant under rotations, i.e., if the coordinates and momenta of the entire system are rotated "rigidly" about some point, the energy of the system is unchanged and, more importantly, is the same function of the dynamical variables as it was before the rotation. Such a circumstance would not apply, e.g., to a system lying in an externally imposed gravitational field pointing in some specific direction. Thus, the invariance of an isolated system under rotations ultimately arises from the fact that, in the absence of external fields of this sort, space is isotropic; it behaves the same way in all directions.

Not surprisingly, therefore, in quantum mechanics the individual Cartesian components $L_i$ of the total angular momentum operator $\vec{L}$ of an isolated system are also constants of the motion. The different components of $\vec{L}$ are not, however, compatible quantum observables. Indeed, as we will see, the operators representing the components of angular momentum along different directions do not generally commute with one another. Thus, the vector operator $\vec{L}$ is not, strictly speaking, an observable, since it does not have a complete basis of eigenstates (which would have to be simultaneous eigenstates of all of its non-commuting components). This lack of commutativity often seems, at first encounter, somewhat of a nuisance but, in fact, it intimately reflects the underlying structure of the three-dimensional space in which we are immersed, and has its source in the fact that rotations in three dimensions about different axes do not commute with one another.
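The closing remark, that rotations about different axes do not commute, is easy to verify with two quarter-turn rotation matrices. The sketch below is plain Python; the choice of 90° rotations about the x and z axes and the helper name `matmul` are illustrative assumptions, not part of the source text.

```python
def matmul(A, B):
    # Ordinary 3x3 matrix product.
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

# Rotation by 90 degrees about the x axis.
Rx = [[1, 0, 0],
      [0, 0, -1],
      [0, 1, 0]]

# Rotation by 90 degrees about the z axis.
Rz = [[0, -1, 0],
      [1, 0, 0],
      [0, 0, 1]]

x_after_z = matmul(Rx, Rz)  # apply Rz first, then Rx
z_after_x = matmul(Rz, Rx)  # apply Rx first, then Rz

commute = (x_after_z == z_after_x)
```

The two products are different matrices, so `commute` is `False`: the order in which the two rotations are applied matters.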

Solving the Geodesic Equation

Solving the Geodesic Equation

Jeremy Atkins, December 12, 2018

Abstract: We find the general form of the geodesic equation and discuss the closed form relation to find Christoffel symbols. We then show how to use metric independence to find Killing vector fields, which allow us to solve the geodesic equation when there are helpful symmetries. We also discuss a more general way to find Killing vector fields, and some of their properties as a Lie algebra.

1 The Variational Method

We will exploit the following variational principle to characterize motion in general relativity:

The world line of a free test particle between two timelike separated points extremizes the proper time between them.

Here a test particle is one that is not a significant source of spacetime curvature, and a free particle is one that is only under the influence of curved spacetime. Similarly to classical Lagrangian mechanics, we can use this to deduce the equations of motion for a metric. The proper time along a timelike worldline between point A and point B for the metric $g_{\mu\nu}$ is given by

$$\tau_{AB} = \int_A^B d\tau = \int_A^B \left( -g_{\mu\nu}(x)\, dx^\mu\, dx^\nu \right)^{1/2} \tag{1}$$

using the Einstein summation notation, and $\mu, \nu = 0, 1, 2, 3$. We can parameterize the four coordinates with the parameter $\sigma$, where $\sigma = 0$ at A and $\sigma = 1$ at B. This gives us the following equation for the proper time:

$$\tau_{AB} = \int_0^1 d\sigma \left( -g_{\mu\nu}(x)\, \frac{dx^\mu}{d\sigma} \frac{dx^\nu}{d\sigma} \right)^{1/2} \tag{2}$$

We can treat the integrand as a Lagrangian,

$$L = \left( -g_{\mu\nu}(x)\, \frac{dx^\mu}{d\sigma} \frac{dx^\nu}{d\sigma} \right)^{1/2} \tag{3}$$

and it's clear that the world lines extremizing proper time are those that satisfy the Euler-Lagrange equation:

$$\frac{\partial L}{\partial x^\mu} - \frac{d}{d\sigma} \frac{\partial L}{\partial (dx^\mu / d\sigma)} = 0 \tag{4}$$

These four equations together give the equation for the worldline extremizing the proper time.
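Equation (2) can be evaluated numerically for a given worldline. The sketch below is a simple illustration, assuming flat Minkowski spacetime with signature (−, +, +, +) and units in which c = 1; the function name `proper_time`, the segment-summing scheme, and the sample worldline are choices made here, not taken from the paper.

```python
import math

def proper_time(worldline, metric, steps=1000):
    # Approximates the integral in (2) by summing sqrt(-g_mu_nu dx^mu dx^nu)
    # over small straight segments of the parameter sigma in [0, 1].
    total = 0.0
    h = 1.0 / steps
    for k in range(steps):
        a = worldline(k * h)
        b = worldline((k + 1) * h)
        mid = [(p + q) / 2 for p, q in zip(a, b)]
        dx = [q - p for p, q in zip(a, b)]
        g = metric(mid)
        interval = sum(g[m][n] * dx[m] * dx[n]
                       for m in range(4) for n in range(4))
        total += math.sqrt(-interval)  # timelike path: interval < 0
    return total

def minkowski(x):
    # Assumed flat metric diag(-1, 1, 1, 1), independent of position.
    return [[-1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]

def straight_line(sigma):
    # Hypothetical worldline: coordinate time 10, constant speed 0.6 along x.
    return [10.0 * sigma, 6.0 * sigma, 0.0, 0.0]

tau = proper_time(straight_line, minkowski)
```

For this straight worldline the result matches the familiar time-dilation value $10\sqrt{1 - 0.6^2} = 8$ up to floating-point rounding. A curved metric would only require replacing `minkowski` with a position-dependent function.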

Generalized Kronecker and Permanent Deltas, Their Spinor and Tensor Equivalents — Reference Formulae

Generalized Kronecker and permanent deltas, their spinor and tensor equivalents: Reference Formulae

R L Agacy1 L42 Brighton Street, Gulliver, Townsville, QLD 4812, AUSTRALIA

Abstract: The work is essentially divided into two parts. The purpose of the first part, applicable to n-dimensional space, is to (i) introduce a generalized permanent delta (gpd), a symmetrizer, on an equal footing with the generalized Kronecker delta (gKd), (ii) derive combinatorial formulae for each separately, and then in combination, which then leads us to (iii) provide a comprehensive listing of these formulae. For the second part, applicable to spinors in the mathematical language of General Relativity, the purpose is to (i) provide formulae for combined gKd/gpd spinors, (ii) obtain and tabulate spinor equivalents of gKd and gpd tensors and (iii) derive and exhibit tensor equivalents of gKd and gpd spinors.

1 Introduction

The generalized Kronecker delta (gKd) is well known as an alternating function or antisymmetrizer, e.g. $\delta^{abc}_{def} X_{abc} = 3!\, X_{[def]}$. In contrast, although symmetric tensors are seen constantly, there does not appear to be employment of any symmetrizer, e.g. for $X_{(abc)}$, in analogy with the antisymmetrizer. However, it is exactly the combination of both types of symmetrizers, treated equally, that gives us the flexibility to describe any type of tensor symmetry. We define such a 'permanent' symmetrizer as a generalized permanent delta (gpd) below. Our purpose is to restore an imbalance between the gKd and gpd and provide a consolidated reference of combinatorial formulae for them, most of which is not in the literature. The work divides into two Parts: the first applicable to tensors in n-dimensions, the second applicable to 2-component spinors used in the mathematical language of General Relativity.
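To make the antisymmetrizer/symmetrizer contrast concrete, the sketch below evaluates both deltas from ordinary Kronecker deltas. It uses the standard determinant definition of the gKd, and it assumes that the gpd is the corresponding permanent (the same sum over permutations without the signs), as the name suggests; the paper's own definition is not reproduced in this excerpt. The function names are illustrative.

```python
from itertools import permutations

def kron(a, b):
    # Ordinary Kronecker delta.
    return 1 if a == b else 0

def perm_sign(p):
    # Sign of a permutation, found by counting inversions.
    sign = 1
    for i in range(len(p)):
        for j in range(i + 1, len(p)):
            if p[i] > p[j]:
                sign = -sign
    return sign

def gkd(upper, lower):
    # Determinant of the matrix with entries kron(upper[i], lower[j]).
    total = 0
    for s in permutations(range(len(upper))):
        term = perm_sign(s)
        for i in range(len(upper)):
            term *= kron(upper[i], lower[s[i]])
        total += term
    return total

def gpd(upper, lower):
    # Assumed definition: permanent of the same matrix (no signs).
    total = 0
    for s in permutations(range(len(upper))):
        term = 1
        for i in range(len(upper)):
            term *= kron(upper[i], lower[s[i]])
        total += term
    return total

swap_gkd = gkd((1, 2), (2, 1))      # odd permutation of the indices
swap_gpd = gpd((1, 2), (2, 1))      # the permanent ignores the sign
repeat_gkd = gkd((1, 1), (1, 1))    # repeated index kills the determinant
repeat_gpd = gpd((1, 1), (1, 1))    # but not the permanent
```

Swapping two indices flips the sign of the gKd and leaves the gpd unchanged, which is the antisymmetric versus symmetric behaviour the introduction describes.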

Geodetic Position Computations

GEODETIC POSITION COMPUTATIONS

E. J. KRAKIWSKY, D. B. THOMSON

February 1974

TECHNICAL REPORT NO. 217 / LECTURE NOTES NO. 39

PREFACE

In order to make our extensive series of lecture notes more readily available, we have scanned the old master copies and produced electronic versions in Portable Document Format. The quality of the images varies depending on the quality of the originals. The images have not been converted to searchable text.

E.J. Krakiwsky and D.B. Thomson, Department of Geodesy and Geomatics Engineering, University of New Brunswick, P.O. Box 4400, Fredericton, N.B., Canada E3B 5A3. February 1974. Latest Reprinting December 1995.

PREFACE

The purpose of these notes is to give the theory and use of some methods of computing the geodetic positions of points on a reference ellipsoid and on the terrain. Justification for the first three sections of these lecture notes, which are concerned with the classical problem of "computation of geodetic positions on the surface of an ellipsoid", is not easy to come by. It can only be stated that the attempt has been to produce a self-contained package, containing the complete development of some representative methods that exist in the literature. The last section is an introduction to three-dimensional computation methods, and is offered as an alternative to the classical approach. Several problems, and their respective solutions, are presented.

The approach taken herein is to perform complete derivations, thus staying away from the practice of giving a list of formulae to use in the solution of a problem.

1.3 Cartesian Tensors a Second-Order Cartesian Tensor Is Defined As A

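1.3 Cartesian tensors

A second-order Cartesian tensor is defined as a linear combination of dyadic products as

$$\mathbf{T} = T_{ij}\, \mathbf{e}_i \otimes \mathbf{e}_j . \tag{1.3.1}$$

The coefficients $T_{ij}$ are the components of $\mathbf{T}$. A tensor exists independent of any coordinate system. The tensor will have different components in different coordinate systems. The tensor $\mathbf{T}$ has components $T_{ij}$ with respect to basis $\{\mathbf{e}_i\}$ and components $T'_{ij}$ with respect to basis $\{\mathbf{e}'_i\}$, i.e.,

$$\mathbf{T} = T_{pq}\, \mathbf{e}_p \otimes \mathbf{e}_q = T'_{ij}\, \mathbf{e}'_i \otimes \mathbf{e}'_j . \tag{1.3.2}$$

From (1.3.2) and (1.2.4.6),

$$T_{pq}\, \mathbf{e}_p \otimes \mathbf{e}_q = T_{pq} Q_{ip} Q_{jq}\, \mathbf{e}'_i \otimes \mathbf{e}'_j = T'_{ij}\, \mathbf{e}'_i \otimes \mathbf{e}'_j , \tag{1.3.3}$$

$$T'_{ij} = Q_{ip} Q_{jq} T_{pq} . \tag{1.3.4}$$

Similarly, from (1.3.2) and (1.2.4.6),

$$T'_{ij}\, \mathbf{e}'_i \otimes \mathbf{e}'_j = T'_{ij} Q_{ip} Q_{jq}\, \mathbf{e}_p \otimes \mathbf{e}_q = T_{pq}\, \mathbf{e}_p \otimes \mathbf{e}_q , \tag{1.3.5}$$

$$T_{pq} = Q_{ip} Q_{jq} T'_{ij} . \tag{1.3.6}$$

Equations (1.3.4) and (1.3.6) are the transformation rules for changing second-order tensor components under change of basis. In general, Cartesian tensors of higher order can be expressed as

$$\mathbf{T} = T_{ij \ldots n}\, \mathbf{e}_i \otimes \mathbf{e}_j \otimes \cdots \otimes \mathbf{e}_n , \tag{1.3.7}$$

and the components transform according to

$$T'_{ijk\ldots} = Q_{ip} Q_{jq} Q_{kr} \cdots T_{pqr\ldots} , \qquad T_{pqr\ldots} = Q_{ip} Q_{jq} Q_{kr} \cdots T'_{ijk\ldots} . \tag{1.3.8}$$

The tensor product $\mathbf{S} \otimes \mathbf{T}$ of a CT(m) $\mathbf{S}$ and a CT(n) $\mathbf{T}$ is a CT(m+n) such that

$$\mathbf{S} \otimes \mathbf{T} = S_{i_1 i_2 \ldots i_m} T_{j_1 j_2 \ldots j_n}\, \mathbf{e}_{i_1} \otimes \mathbf{e}_{i_2} \otimes \cdots \otimes \mathbf{e}_{i_m} \otimes \mathbf{e}_{j_1} \otimes \mathbf{e}_{j_2} \otimes \cdots \otimes \mathbf{e}_{j_n} .$$

1.3.1 Contraction

Consider the components $T_{i_1 i_2 \ldots i_p \ldots i_q \ldots i_n}$ of a CT(n).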
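The two transformation rules (1.3.4) and (1.3.6) can be exercised on a small example. In the sketch below, components in the second basis are written `T_prime`, and Q is taken to be a 90° rotation about the third axis; the matrices, the function names, and the prime notation are illustrative assumptions, chosen so that all arithmetic stays in integers.

```python
def to_new_basis(Q, T):
    # Rule (1.3.4): T'_ij = Q_ip Q_jq T_pq, summed over p and q.
    n = len(T)
    return [[sum(Q[i][p] * Q[j][q] * T[p][q]
                 for p in range(n) for q in range(n))
             for j in range(n)] for i in range(n)]

def to_old_basis(Q, T_prime):
    # Rule (1.3.6): T_pq = Q_ip Q_jq T'_ij, summed over i and j.
    n = len(T_prime)
    return [[sum(Q[i][p] * Q[j][q] * T_prime[i][j]
                 for i in range(n) for j in range(n))
             for q in range(n)] for p in range(n)]

# Hypothetical orthogonal matrix: a quarter turn about the third axis.
Q = [[0, 1, 0],
     [-1, 0, 0],
     [0, 0, 1]]

# Hypothetical second-order tensor components in the original basis.
T = [[1, 2, 0],
     [0, 3, 0],
     [0, 0, 4]]

T_prime = to_new_basis(Q, T)
T_back = to_old_basis(Q, T_prime)
trace_T = sum(T[k][k] for k in range(3))
trace_T_prime = sum(T_prime[k][k] for k in range(3))
```

Applying (1.3.4) and then (1.3.6) returns the original components, which relies on Q being orthogonal. The trace is the same in both bases, as expected for a quantity that does not depend on the coordinate system.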