Unity, Disunity and Pluralism in Science

Recommended publications

-

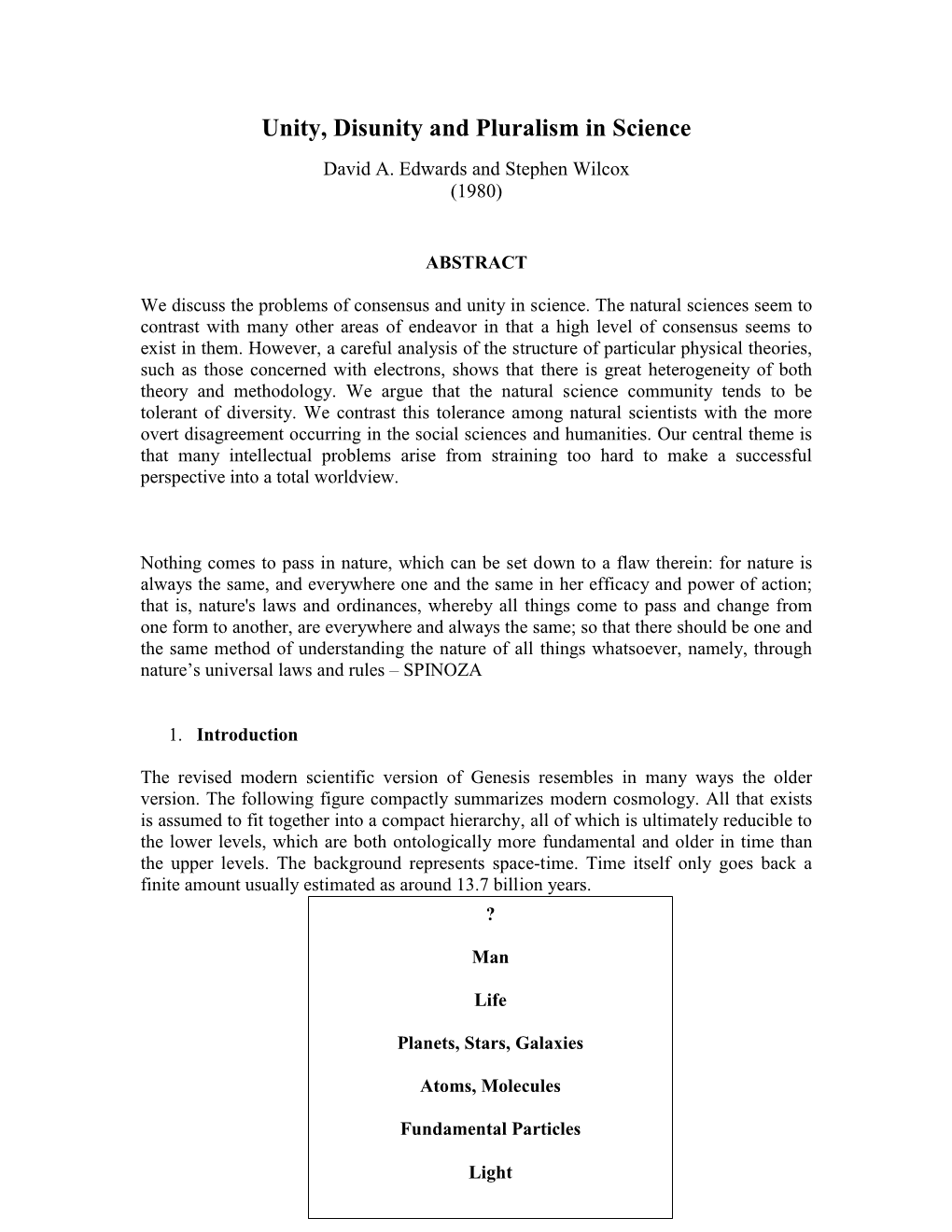

The Epistemology of Evidence in Cognitive Neuroscience

To appear in R. Skipper Jr., C. Allen, R. A. Ankeny, C. F. Craver, L. Darden, G. Mikkelson, and R. Richardson (eds.), Philosophy and the Life Sciences: A Reader. Cambridge, MA: MIT Press. The Epistemology of Evidence in Cognitive Neuroscience. William Bechtel, Department of Philosophy and Science Studies, University of California, San Diego. 1. The Epistemology of Evidence. It is no secret that scientists argue. They argue about theories. But even more, they argue about the evidence for theories. Is the evidence itself trustworthy? This is a bit surprising from the perspective of traditional empiricist accounts of scientific methodology, according to which the evidence for scientific theories stems from observation, especially observation with the naked eye. These accounts portray the testing of scientific theories as a matter of comparing the predictions of the theory with the data generated by these observations, which are taken to provide an objective link to reality. One lesson philosophers of science have learned in the last 40 years is that even observation with the naked eye is not as epistemically straightforward as was once assumed. What one is able to see depends upon one’s training: a novice looking through a microscope may fail to recognize the neuron and its processes (Hanson, 1958; Kuhn, 1962/1970). But a second lesson is only beginning to be appreciated: evidence in science is often procured not through simple observations with the naked eye, but through observations mediated by complex instruments and sophisticated research techniques. What is most important, epistemically, about these techniques is that they often radically alter the phenomena under investigation.

Pluralisms About Truth and Logic

University of Connecticut, OpenCommons@UConn. Doctoral Dissertations, University of Connecticut Graduate School, 8-9-2019. Pluralisms about Truth and Logic. Nathan Kellen, University of Connecticut - Storrs. Recommended Citation: Kellen, Nathan, "Pluralisms about Truth and Logic" (2019). Doctoral Dissertations. 2263. https://opencommons.uconn.edu/dissertations/2263 Pluralisms about Truth and Logic. Nathan Kellen, PhD, University of Connecticut, 2019. Abstract: In this dissertation I analyze two theories, truth pluralism and logical pluralism, as well as the theoretical connections between them, including whether they can be combined into a single, coherent framework. I begin by arguing that truth pluralism is a combination of realist and anti-realist intuitions, and that we should recognize these motivations when categorizing and formulating truth pluralist views. I then introduce logical functionalism, which analyzes logical consequence as a functional concept. I show how one can both build theories from the ground up and analyze existing views within the functionalist framework. One upshot of logical functionalism is a unified account of logical monism, pluralism and nihilism. I conclude with two negative arguments. First, I argue that the most prominent form of logical pluralism faces a serious dilemma: it either must give up on one of the core principles of logical consequence, and thus fail to be a theory of logic at all, or it must give up on pluralism itself. I call this "The Normative Problem for Logical Pluralism", and argue that it is unsolvable for the most prominent form of logical pluralism. Second, I examine an argument given by multiple truth pluralists that purports to show that truth pluralists must also be logical pluralists.

Some Unnoticed Implications of Churchland's Pragmatic Pluralism

Contemporary Pragmatism, Editions Rodopi, Vol. 8, No. 1 (June 2011), 173–189. © 2011. Beyond Eliminative Materialism: Some Unnoticed Implications of Churchland’s Pragmatic Pluralism. Teed Rockwell. Paul Churchland’s epistemology contains a tension between two positions, which I will call pragmatic pluralism and eliminative materialism. Pragmatic pluralism became predominant as his epistemology became more neurocomputationally inspired, which saved him from the skepticism implicit in certain passages of the theory of reduction he outlined in Scientific Realism and the Plasticity of Mind. However, once he replaces eliminativism with a neurologically inspired pragmatic pluralism, Churchland (1) cannot claim that folk psychology might be a false theory, in any significant sense; (2) cannot claim that the concepts of folk psychology might be empty of extension and lack reference; (3) cannot sustain Churchland’s criticism of Dennett’s “intentional stance”; (4) cannot claim to be a form of scientific realism, in the sense of believing that what science describes is somehow realer than what other conceptual systems describe. One of the worst aspects of specialization in Philosophy and the Sciences is that it often inhibits people from asking the questions that could dissolve long-standing controversies. This paper will deal with one of these controversies: Churchland’s proposal that folk psychology is a theory that might be false. Even though one of Churchland’s greatest contributions to philosophy of mind was demonstrating that the issues in philosophy of mind were a subspecies of scientific reduction, still philosophers of psychology have usually defended or critiqued folk psychology without attempting to carefully analyze Churchland’s theory of reduction.

PDF Download Starting with Science Strategies for Introducing Young Children to Inquiry 1st Edition Ebook

STARTING WITH SCIENCE STRATEGIES FOR INTRODUCING YOUNG CHILDREN TO INQUIRY 1ST EDITION PDF, EPUB, EBOOK. Marcia Talhelm Edson | 9781571108074. Starting with Science Strategies for Introducing Young Children to Inquiry 1st edition PDF Book. The presentation of the material is as good as the material utilizing star trek analogies, ancient wisdom and literature and so much more. Using Multivariate Statistics. Michael Gramling examines the impact of policy on practice in early childhood education. Part of a series on. Schauble and colleagues, for example, found that fifth grade students designed better experiments after instruction about the purpose of experimentation. For example, some suggest that learning about NoS enables children to understand the tentative and developmental NoS and science as a human activity, which makes science more interesting for children to learn Abd-El-Khalick a ; Driver et al. Research on teaching and learning of nature of science. The authors begin with theory in a cultural context as a foundation. What makes professional development effective? Frequently, the term NoS is utilised when considering matters about science. This book is a documentary account of a young intern who worked in the Reggio system in Italy and how she brought this pedagogy home to her school in St. Taking Science to School answers such questions as:. The content of the inquiries in science in the professional development programme was based on the different strands of the primary science curriculum, namely Living Things, Energy and Forces, Materials and Environmental Awareness and Care DES Exit interview. Begin to address the necessity of understanding other usually peer positions before they can discuss or comment on those positions.

Some Major Issues and Developments in the Philosophy of Science of Logical Empiricism

HERBERT FEIGL. Some Major Issues and Developments in the Philosophy of Science of Logical Empiricism. About twenty-five years ago a small group of philosophically minded scientists and scientifically trained philosophers in Vienna formulated their declaration of independence from traditional philosophy. The pamphlet Wissenschaftliche Weltauffassung: Der Wiener Kreis (1929) contained the first succinct statement of the outlook which soon after became known as "logical positivism." In the first flush of enthusiasm we Viennese felt we had attained a philosophy to end all philosophies. Schlick spoke of a "Wende der Philosophie" (a decisive turning point of philosophy). Neurath and Frank declared "school philosophy" as obsolete and even suggested that our outlook drop the word "philosophy" altogether, and replace it by "Einheitswissenschaft" or by "scientific empiricism." The notable impact of Alfred Ayer's first book in England, and my own efforts toward a propagation of Logical Positivism in the United States during the early thirties, and then the immigration of Carnap, Frank, von Mises, Reichenbach, Hempel and Bergmann created a powerful movement, but it elicited also sharp opposition and criticism. Through the discussions within the movement and its own production and progressive work, as well as in response to the

NOTE: This essay is a revised and considerably expanded version of a lecture given in plenary session at the International Congress for Philosophy of Science, Zurich, August 25, 1954. It was first published in Proceedings of the Second International Congress of the International Union for the Philosophy of Science (Neuchatel, Switzerland, 1955). In the cordial letter of invitation I received from Professor Ferdinand Gonseth, president of the Congress, he asked me to discuss "l'empirisme logique, ce qu'il fut, et ce qu'il est devenu." Much as I appreciated the honor of this ambitious assignment, I realized of course that the limitations of time would permit me to deal only with some selected topics within this larger frame.

Analysis - Identify Assumptions, Reasons and Claims, and Examine How They Interact in the Formation of Arguments

Analysis - identify assumptions, reasons and claims, and examine how they interact in the formation of arguments. Individuals use analytics to gather information from charts, graphs, diagrams, spoken language and documents. People with strong analytical skills attend to patterns and to details. They identify the elements of a situation and determine how those parts interact. Strong interpretation skills can support high-quality analysis by providing insights into the significance of what a person is saying or what something means. Inference - draw conclusions from reasons and evidence. Inference is used when someone offers thoughtful suggestions and hypotheses. Inference skills indicate the necessary or the very probable consequences of a given set of facts and conditions. Conclusions, hypotheses, recommendations or decisions that are based on faulty analysis, misinformation, bad data or biased evaluations can turn out to be mistaken, even if they have been reached using excellent inference skills. Evaluation - assess the credibility of sources of information and the claims they make, and determine the strengths and weaknesses of arguments. Applying evaluation skills can judge the quality of analysis, interpretations, explanations, inferences, options, opinions, beliefs, ideas, proposals, and decisions. Strong explanation skills can support high-quality evaluation by providing evidence, reasons, methods, criteria, or assumptions behind the claims made and the conclusions reached. Deduction - decision making in precisely defined contexts where rules, operating conditions, core beliefs, values, policies, principles, procedures and terminology completely determine the outcome. Deductive reasoning moves with exacting precision from the assumed truth of a set of beliefs to a conclusion which cannot be false if those beliefs are true. Deductive validity is rigorously logical and clear-cut.

Situational Analysis

Situational Analysis by Kevin D. Hoover. CHOPE Working Paper No. 2016-17, February 2016. Situational Analysis. Kevin D. Hoover, Department of Economics, Department of Philosophy, Duke University. 18 February 2016. Mail: Department of Economics, Duke University, Box 90097, Durham, NC 27708-0097. Tel. (919) 660-1876. Abstract: Situational analysis (also known as situational logic) was popularized by Karl Popper as an appropriate method for the interpretation of history and as a basis for a scientific social science. It seeks an objective positive explanation of behavior through imputing a dominant goal or motive to individuals and then identifying the action that would be objectively appropriate to the situation as the action actually taken. Popper regarded situational analysis as a generalization to all of social science of the prototypical reasoning of economics. Applied to history, situational analysis is largely an interpretive strategy used to understand individual behavior. In social sciences, however, it is applied to many types of behavior or to group behavior (e.g., to markets) and is used to generate testable hypotheses. Popper’s account of situational analysis and some criticisms that have been leveled against it are reviewed. The charge that situational analysis contradicts Popper’s view that falsification is the hallmark of science is examined and rejected: situational analysis is precisely how Popper believes social sciences are able to generate falsifiable, and, therefore, scientific hypotheses. Still, situational analysis is in tension with another of Popper’s central ideas: situational analysis as a method for generating testable conjectures amounts to a logic of scientific discovery, something that Popper argued elsewhere was not possible.

Ohio Rules of Evidence

OHIO RULES OF EVIDENCE Article I GENERAL PROVISIONS Rule 101 Scope of rules: applicability; privileges; exceptions 102 Purpose and construction; supplementary principles 103 Rulings on evidence 104 Preliminary questions 105 Limited admissibility 106 Remainder of or related writings or recorded statements Article II JUDICIAL NOTICE 201 Judicial notice of adjudicative facts Article III PRESUMPTIONS 301 Presumptions in general in civil actions and proceedings 302 [Reserved] Article IV RELEVANCY AND ITS LIMITS 401 Definition of “relevant evidence” 402 Relevant evidence generally admissible; irrelevant evidence inadmissible 403 Exclusion of relevant evidence on grounds of prejudice, confusion, or undue delay 404 Character evidence not admissible to prove conduct; exceptions; other crimes 405 Methods of proving character 406 Habit; routine practice 407 Subsequent remedial measures 408 Compromise and offers to compromise 409 Payment of medical and similar expenses 410 Inadmissibility of pleas, offers of pleas, and related statements 411 Liability insurance Article V PRIVILEGES 501 General rule Article VI WITNESS 601 General rule of competency 602 Lack of personal knowledge 603 Oath or affirmation 604 Interpreters 605 Competency of judge as witness 606 Competency of juror as witness 607 Impeachment 608 Evidence of character and conduct of witness 609 Impeachment by evidence of conviction of crime 610 Religious beliefs or opinions 611 Mode and order of interrogation and presentation 612 Writing used to refresh memory 613 Impeachment by self-contradiction

Recent Work on Ontological Pluralism

Recent Work on Ontological Pluralism. Jason Turner. November 15, 2018. Ontological Pluralism is said in many ways, at least if two counts as ‘many’. On one disambiguation, to be an ‘ontological pluralist’ is to be accommodating about theories with different ontologies. This is the pluralism of Carnap (1950) and Putnam (1987), who grant that there are competing ontological visions of reality but deny that any has an objectively better claim to correctness. We are free to accept an ontology of numbers or not, and there’s no good philosophical debate to be had about whether there really are any numbers. This is the pluralism of the pluralistic society, where opposing ontological visions need to learn to just get along. We will discuss the other disambiguation of ‘Ontological Pluralism’ here. According to it, there are different ways of being. In The Problems of Philosophy, for instance, Bertrand Russell (1912: 90, 98) tells us that, while there are relations as well as people, relations exist in a deeply different way than people do. The way in which the world grants being to relations is radically different, on this picture, than the way in which it grants it to people. Despite its pedigree, during the 20th century analytic philosophers grew suspicious of the notion. Presumably, when the logical positivists tried to kill metaphysics, the idea that things could exist in different ways was supposed to die with it. Quine’s resurrection of metaphysics linked ontology with quantifiers, making it hard to see what ‘things exist in different ways’ could mean. Zoltán Szabó puts it this way: The standard view nowadays is that we can adequately capture the meaning of sentences like ‘There are Fs’, ‘Some things are F’, or ‘F’s exist’ through existential quantification.

"What Is Evidence?" a Philosophical Perspective

"WHAT IS EVIDENCE?" A PHILOSOPHICAL PERSPECTIVE. PRESENTATION SUMMARY. NATIONAL COLLABORATING CENTRES FOR PUBLIC HEALTH 2007 SUMMER INSTITUTE "MAKING SENSE OF IT ALL", BADDECK, NOVA SCOTIA, AUGUST 20-23 2007. Preliminary version, for discussion. NATIONAL COLLABORATING CENTRE FOR HEALTHY PUBLIC POLICY, JANUARY 2010. SPEAKER: Daniel Weinstock, Research Centre on Ethics, University of Montréal. EDITOR: Marianne Jacques, National Collaborating Centre for Healthy Public Policy. LAYOUT: Madalina Burtan, National Collaborating Centre for Healthy Public Policy. DATE: January 2010. The aim of the National Collaborating Centre for Healthy Public Policy (NCCHPP) is to increase the use of knowledge about healthy public policy within the public health community through the development, transfer and exchange of knowledge. The NCCHPP is part of a Canadian network of six centres financed by the Public Health Agency of Canada. Located across Canada, each Collaborating Centre specializes in a specific area, but all share a common mandate to promote knowledge synthesis, transfer and exchange. The production of this document was made possible through financial support from the Public Health Agency of Canada and funding from the National Collaborating Centre for Healthy Public Policy (NCCHPP). The views expressed here do not necessarily reflect the official position of the Public Health Agency of Canada. This document is available in electronic format (PDF) on the web site of the National Collaborating Centre for Healthy Public Policy at www.ncchpp.ca. La version française est disponible sur le site Internet du CCNPPS au www.ccnpps.ca.

The Problem of Induction

The Problem of Induction. Gilbert Harman, Department of Philosophy, Princeton University. Sanjeev R. Kulkarni, Department of Electrical Engineering, Princeton University. July 19, 2005. The Problem. The problem of induction is sometimes motivated via a comparison between rules of induction and rules of deduction. Valid deductive rules are necessarily truth preserving, while inductive rules are not. So, for example, one valid deductive rule might be this: (D) From premises of the form “All F are G” and “a is F,” the corresponding conclusion of the form “a is G” follows. The rule (D) is illustrated in the following depressing argument: (DA) All people are mortal. I am a person. So, I am mortal. The rule here is “valid” in the sense that there is no possible way in which premises satisfying the rule can be true without the corresponding conclusion also being true. A possible inductive rule might be this: (I) From premises of the form “Many many Fs are known to be G,” “There are no known cases of Fs that are not G,” and “a is F,” the corresponding conclusion can be inferred of the form “a is G.” The rule (I) might be illustrated in the following “inductive argument.” (IA) Many many people are known to have been mortal. There are no known cases of people who are not mortal. I am a person. So, I am mortal. The rule (I) is not valid in the way that the deductive rule (D) is valid. The “premises” of the inductive inference (IA) could be true even though its “conclusion” is not true.

Epistemology and Philosophy of Science

Chapter 11. Epistemology and Philosophy of Science. Otávio Bueno. 1 Introduction. It is a sad fact of contemporary epistemology and philosophy of science that there is very little substantial interaction between the two fields. Most epistemological theories are developed largely independently of any significant reflection about science, and several philosophical interpretations of science are articulated largely independently of work done in epistemology. There are occasional exceptions, of course. But the general point stands. This is a missed opportunity. Closer interactions between the two fields would be beneficial to both. Epistemology would gain from a closer contact with the variety of mechanisms of knowledge generation that are produced in scientific research, with attention to sources of bias, the challenges associated with securing truly representative samples, and elaborate collective mechanisms to secure the objectivity of scientific knowledge. It would also benefit from close consideration of the variety of methods, procedures, and devices of knowledge acquisition that shape scientific research. Epistemological theories would then be less idealized and more sensitive to the pluralism and complexities involved in securing knowledge of various features of the world. Thus, philosophy of science would benefit, in turn, from a closer interaction with epistemology, given sophisticated conceptual frameworks elaborated to refine and characterize our understanding of knowledge,