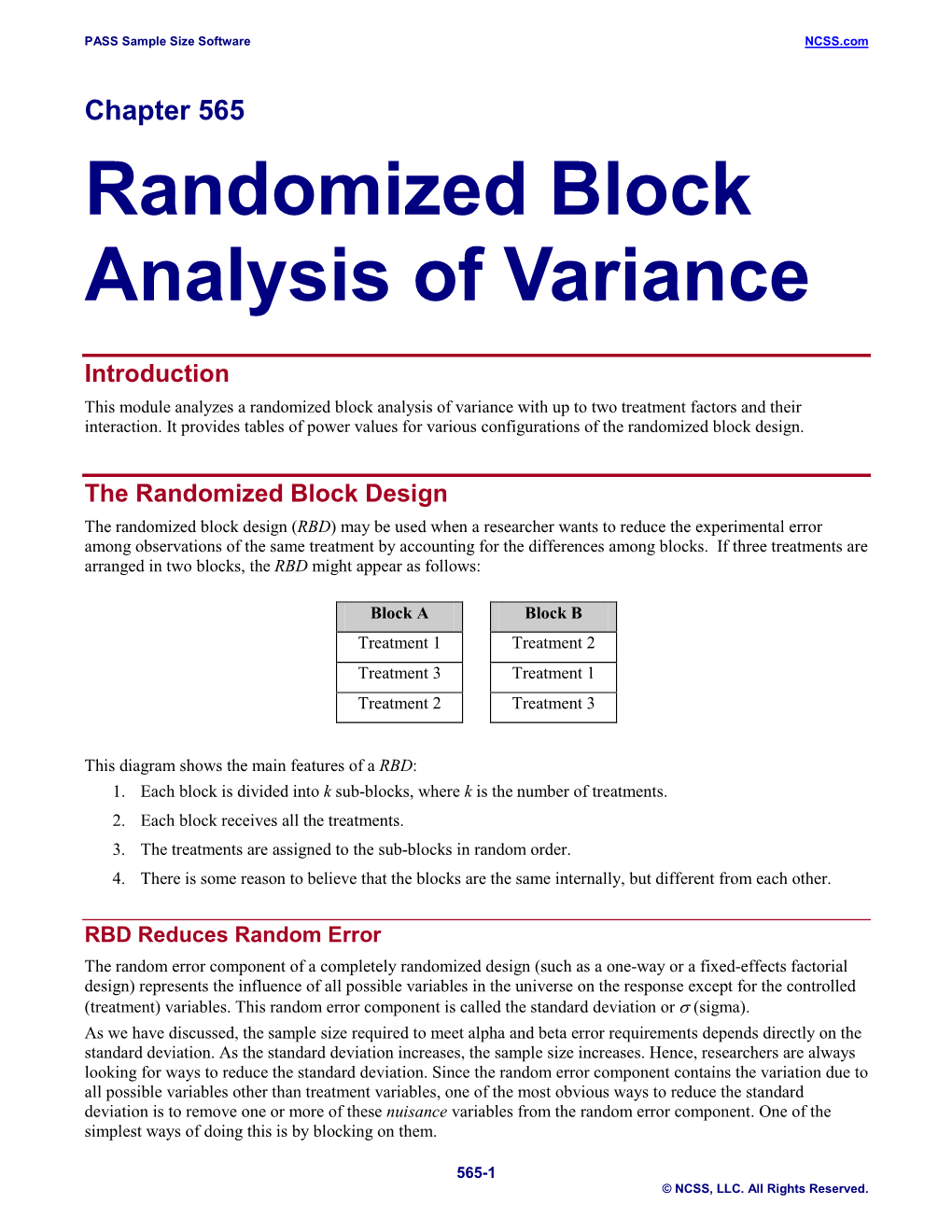

Randomized Block Analysis of Variance

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


When Does Blocking Help?

When Does Blocking Help? Teacher Notes, Part I. The purpose of blocking is frequently described as “reducing variability.” However, this phrase carries little meaning to most beginning students of statistics. This activity, consisting of three rounds of simulation, is designed to illustrate what reducing variability really means in this context. In fact, students should see that a better description than “reducing variability” might be “attributing variability” or “reducing unexplained variability.” The activity can be completed in a single 90-minute class or two classes of at least 45 minutes. For shorter classes you may wish to extend the simulations over two days. It is important that students understand not only what to do but also why they do what they do. Background: Here is the specific problem that will be addressed in this activity. A set of 24 dogs (6 of each of four breeds; 6 from each of four veterinary clinics) has been randomly selected from a population of dogs older than eight years of age whose owners have permitted their inclusion in a study. Each dog will be assigned to exactly one of three treatment groups. Group “Ca” will receive a dietary supplement of calcium, Group “Ex” will receive a dietary supplement of calcium and a daily exercise regimen, and Group “Co” will be a control group that receives no supplement to the ordinary diet and no additional exercise. All dogs will have a bone density evaluation at the beginning and end of the one-year study. (The bone density is measured in Hounsfield units by using a CT scan.) The goals of the study are to determine (i) whether there are different changes in bone density over the year of the study for the dogs in the three treatment groups; and if so, (ii) how much each treatment influences that change in bone density.
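One way the assignment step in this study could be blocked is sketched below. It is only an illustration under stated assumptions: breed is taken as the blocking variable (the excerpt does not say which variable the activity blocks on), the dog identifiers are hypothetical, and the function name `randomized_block_assignment` is invented for this sketch.

```python
import random

TREATMENTS = ["Ca", "Ex", "Co"]  # treatment group labels from the study description


def randomized_block_assignment(blocks, treatments, rng):
    """Within each block, shuffle the units and give every treatment equal replication."""
    assignment = {}
    for block, units in blocks.items():
        if len(units) % len(treatments) != 0:
            raise ValueError(f"block {block!r} cannot be split evenly among treatments")
        shuffled = list(units)
        rng.shuffle(shuffled)  # the randomization happens separately inside each block
        reps = len(units) // len(treatments)
        labels = [t for t in treatments for _ in range(reps)]
        for unit, treatment in zip(shuffled, labels):
            assignment[unit] = (block, treatment)
    return assignment


# Hypothetical identifiers: 4 breeds (assumed blocking variable) with 6 dogs each.
breeds = ["breed_1", "breed_2", "breed_3", "breed_4"]
blocks = {b: [f"{b}_dog_{i}" for i in range(1, 7)] for b in breeds}

assignment = randomized_block_assignment(blocks, TREATMENTS, random.Random(2024))
for dog, (breed, treatment) in sorted(assignment.items()):
    print(dog, breed, treatment)
```

Because every breed contributes two dogs to each treatment group, breed-to-breed differences in bone density change can be attributed to the blocks instead of being left in the unexplained variability.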

Lec 9: Blocking and Confounding for $2^k$ Factorial Design

Lec 9: Blocking and Confounding for $2^k$ Factorial Design. Ying Li, December 2, 2011. $2^k$ factorial design: a special case of the general factorial design; $k$ factors, all at two levels. The two levels are usually called low and high (they could be either quantitative or qualitative). Very widely used in industrial experimentation. Example: Consider an investigation into the effect of the concentration of the reactant and the amount of the catalyst on the conversion in a chemical process. A: reactant concentration, 2 levels; B: catalyst, 2 levels; 3 replicates, 12 runs in total. Treatment totals (levels of A, B): (1) [−, −] = 28 + 25 + 27 = 80; a [+, −] = 36 + 32 + 32 = 100; b [−, +] = 18 + 19 + 23 = 60; ab [+, +] = 31 + 30 + 29 = 90. Effect estimates: $A = \frac{1}{2n}\{[ab - b] + [a - (1)]\}$, $B = \frac{1}{2n}\{[ab - a] + [b - (1)]\}$, $AB = \frac{1}{2n}\{[ab - b] - [a - (1)]\}$. Manual calculation: $\text{Contrast}_A = ab + a - b - (1)$ and $SS_A = \frac{(\text{Contrast}_A)^2}{4n}$. Regression model, for the $2^2 \times 1$ experiment: the least squares estimates of the regression coefficients are exactly half of the “usual” effect estimates. Analysis procedure for a factorial design: estimate factor effects.
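The effect and sum-of-squares formulas in this excerpt can be checked numerically with the quoted treatment totals. The following is a minimal sketch, not the lecture's own code; the variable names (`totals`, `signs`, `contrasts`, `effects`, `sum_sq`) are chosen here for illustration, and the sums of squares for B and AB assume the same $\text{Contrast}^2/(4n)$ form that the excerpt states for A.

```python
n = 3  # replicates per treatment combination

# Treatment totals over the three replicates, as quoted in the excerpt.
totals = {"(1)": 80, "a": 100, "b": 60, "ab": 90}

# Sign of each factor in each treatment combination; AB is the product of A and B.
signs = {
    "A": {"(1)": -1, "a": +1, "b": -1, "ab": +1},
    "B": {"(1)": -1, "a": -1, "b": +1, "ab": +1},
}
signs["AB"] = {t: signs["A"][t] * signs["B"][t] for t in totals}

# Contrast = signed sum of treatment totals, e.g. Contrast_A = ab + a - b - (1).
contrasts = {e: sum(s[t] * totals[t] for t in totals) for e, s in signs.items()}

effects = {e: c / (2 * n) for e, c in contrasts.items()}      # effect = contrast / (2n)
sum_sq = {e: c ** 2 / (4 * n) for e, c in contrasts.items()}  # SS = contrast^2 / (4n)

for e in ("A", "B", "AB"):
    print(f"{e}: contrast={contrasts[e]}, effect={effects[e]:.3f}, SS={sum_sq[e]:.3f}")
```

Writing the contrasts through a sign table keeps the code aligned with the bracketed forms above: expanding $[ab - b] + [a - (1)]$ gives exactly the signed sum used for A.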

Chapter 7 Blocking and Confounding Systems for Two-Level Factorials

Chapter 7: Blocking and Confounding Systems for Two-Level Factorials. Design and Analysis of Experiments (Douglas C. Montgomery). hsuhl (NUK), DAE Chap. 7. Introduction: Sometimes it is impossible to perform all $2^k$ factorial experiments under homogeneous conditions; for example, a batch of raw material may not be large enough for the required runs. Blocking technique: making the treatments equally effective across many situations. Blocking a replicated $2^k$ factorial design: $2^k$ factorial design, $n$ replicates. Example 7.1: chemical process experiment; $2^2$ factorial design (A: concentration; B: catalyst); 4 trials; 3 replicates. With $n$ replicates, a block is each set of nonhomogeneous conditions, and each replicate is run in one of the blocks. $SS_{Blocks} = \sum_{i=1}^{3} \frac{B_i^2}{4} - \frac{y_{\cdots}^2}{12} = 6.50$ (2 d.f.). The block effect is small. Confounding: when the block size is smaller than the number of treatment combinations, it is impossible to perform a complete replicate of a factorial design in one block. Confounding is a design technique for arranging a complete factorial experiment in blocks; it causes information about certain treatment effects (high-order interactions) to be indistinguishable from, or confounded with, blocks. Confounding the $2^k$ factorial design in two blocks: a single replicate of the $2^2$ design; two batches of raw material are required; 2 factors with 2 blocks. $A = \frac{1}{2}[ab + a - b - (1)]$ and $B = \frac{1}{2}[ab + b - a - (1)]$ (any difference between blocks 1 and 2 will cancel out); $AB = \frac{1}{2}[ab + (1) - a - b]$ (the block effect and the AB interaction are identical, so AB is confounded with blocks).
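The block sum of squares quoted in this excerpt can be reproduced with a short calculation. This is a sketch under an assumption: the excerpt does not list the observations, so the values below are taken to be those of the same chemical process example (replicates 28, 25, 27 for (1); 36, 32, 32 for a; 18, 19, 23 for b; 31, 30, 29 for ab), with each replicate run as one block. The variable names are illustrative.

```python
# Observations by treatment combination; position i in each list is replicate (block) i.
# Assumed data: the excerpt itself gives only the formula and the result.
observations = {
    "(1)": [28, 25, 27],
    "a": [36, 32, 32],
    "b": [18, 19, 23],
    "ab": [31, 30, 29],
}

n_blocks = 3
runs_per_block = len(observations)      # 4 treatment combinations per block
total_runs = n_blocks * runs_per_block  # 12 runs in total

# B_i: total of all runs in block i.
block_totals = [sum(obs[i] for obs in observations.values()) for i in range(n_blocks)]
grand_total = sum(block_totals)  # y...

# SS_Blocks = sum_i B_i^2 / 4 - y...^2 / 12, with n_blocks - 1 = 2 degrees of freedom.
ss_blocks = sum(b ** 2 for b in block_totals) / runs_per_block - grand_total ** 2 / total_runs

print("block totals:", block_totals)
print("SS_Blocks:", ss_blocks)
```

Under that assumption the calculation agrees with the 6.50 reported in the slides.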

Introduction to Biostatistics

Introduction to Biostatistics. Jie Yang, Ph.D., Associate Professor, Department of Family, Population and Preventive Medicine; Director, Biostatistical Consulting Core. In collaboration with Clinical Translational Science Center (CTSC) and the Biostatistics and Bioinformatics Shared Resource (BB-SR), Stony Brook Cancer Center (SBCC). OUTLINE: What is biostatistics; what does a biostatistician do (experiment design, clinical trial design; descriptive and inferential analysis; result interpretation); what you should bring while consulting with a biostatistician. WHAT IS BIOSTATISTICS: The science of biostatistics encompasses the design of biological/clinical experiments; the collection, summarization, and analysis of data from those experiments; and the interpretation of, and inference from, the results. How to Lie with Statistics (1954) by Darrell Huff. http://www.youtube.com/watch?v=PbODigCZqL8 GOAL OF STATISTICS [diagram: sampling leads from the population to a sample; probability theory; descriptive statistics; inferential statistics lead from sample statistics ($\bar{X}$, $s$, $\hat{p}$, …) to population parameters ($\mu$, $\sigma$, $\pi$, …)]. PROPERTIES OF A “GOOD” SAMPLE: adequate sample size (statistical power); random selection (representative). Sampling techniques: 1. Simple random sampling 2. Stratified sampling 3. Systematic sampling 4. Cluster sampling 5. Convenience sampling. STUDY DESIGN. EXPERIMENT DESIGN: Completely Randomized Design (CRD): randomly assign the experimental units to the treatments. Design with blocking: deals with a nuisance factor that has some effect on the response but is of no interest to the experimenter; without blocking, large unexplained error leads to less detection power. 1. Randomized Complete Block Design (RCBD): one single blocking factor 2. Latin Square Design (two blocking factors) 3. Crossover Design (each subject = blocking factor) 4. Balanced Incomplete Block Design. EXPERIMENT DESIGN: Factorial Design: similar to the randomized block design, but allows testing the interaction between two treatment effects.

COMBINATORICS, Volume

http://dx.doi.org/10.1090/pspum/019 PROCEEDINGS OF SYMPOSIA IN PURE MATHEMATICS, Volume XIX: COMBINATORICS. AMERICAN MATHEMATICAL SOCIETY, Providence, Rhode Island, 1971. Proceedings of the Symposium in Pure Mathematics of the American Mathematical Society, held at the University of California, Los Angeles, California, March 21-22, 1968. Prepared by the American Mathematical Society under National Science Foundation Grant GP-8436. Edited by Theodore S. Motzkin. AMS 1970 Subject Classifications: Primary 05Axx, 05Bxx, 05Cxx, 10-XX, 15-XX, 50-XX; Secondary 04A20, 05A05, 05A17, 05A20, 05B05, 05B15, 05B20, 05B25, 05B30, 05C15, 05C99, 06A05, 10A45, 10C05, 14-XX, 20Bxx, 20Fxx, 50A20, 55C05, 55J05, 94A20. International Standard Book Number 0-8218-1419-2. Library of Congress Catalog Number 74-153879. Copyright © 1971 by the American Mathematical Society. Printed in the United States of America. All rights reserved except those granted to the United States Government. May not be reproduced in any form without permission of the publishers. Leo Moser (1921-1970) was active and productive in various aspects of combinatorics and of its applications to number theory. He was in close contact with those with whom he had common interests: we will remember his sparkling wit, the universality of his anecdotes, and his stimulating presence. This volume, much of whose content he had enjoyed and appreciated, and which contains the reconstruction of a contribution by him, is dedicated to his memory. CONTENTS: Preface; Modular Forms on Noncongruence Subgroups, by A. O. L. Atkin and H. P. F. Swinnerton-Dyer; Selfconjugate Tetrahedra with Respect to the Hermitian Variety $x_0^3 + x_1^3 + x_2^3 + x_3^3 = 0$ in $PG(3, 2^2)$ and a Representation of $PG(3, 3)$, by R.

Statistical Analysis 8: Two-Way Analysis of Variance (ANOVA)

Statistical Analysis 8: Two-way analysis of variance (ANOVA). Research question type: Explaining a continuous variable with 2 categorical variables. What kind of variables? Continuous (scale/interval/ratio) and 2 independent categorical variables (factors). Common applications: Comparing means of a single variable at different levels of two conditions (factors) in scientific experiments. Example: The effective life (in hours) of batteries is compared by material type (1, 2 or 3) and operating temperature: Low (-10˚C), Medium (20˚C) or High (45˚C). Twelve batteries are randomly selected from each material type and are then randomly allocated to each temperature level. The resulting life of all 36 batteries is shown below:

Table 1: Life (in hours) of batteries by material type and temperature

| Material type | Low (-10˚C) | Medium (20˚C) | High (45˚C) |
|---|---|---|---|
| 1 | 130, 155, 74, 180 | 34, 40, 80, 75 | 20, 70, 82, 58 |
| 2 | 150, 188, 159, 126 | 136, 122, 106, 115 | 25, 70, 58, 45 |
| 3 | 138, 110, 168, 160 | 174, 120, 150, 139 | 96, 104, 82, 60 |

Source: Montgomery (2001)

Research question: Is there a difference in mean life of the batteries for differing material type and operating temperature levels? In analysis of variance we compare the variability between the groups (how far apart are the means?) to the variability within the groups (how much natural variation is there in our measurements?). This is why it is called analysis of variance, abbreviated to ANOVA. This example has two factors (material type and temperature), each with 3 levels. Hypotheses: The 'null hypothesis' might be: H0: There is no difference in mean battery life for different combinations of material type and temperature level. And an 'alternative hypothesis' might be: H1: There is a difference in mean battery life for different combinations of material type and temperature level. If the alternative hypothesis is accepted, further analysis is performed to explore where the individual differences are.
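The between-group versus within-group comparison described above can be made concrete with the battery data from Table 1. The sketch below computes the two-way ANOVA sums of squares and F ratios by hand in plain Python; it is one standard way to do the decomposition for a balanced design, not necessarily the procedure the original handout follows, and the variable names are illustrative. It stops at the F ratios and does not compute p-values.

```python
# Battery life in hours from Table 1, keyed by (material type, temperature level).
life = {
    (1, "Low"): [130, 155, 74, 180],
    (1, "Medium"): [34, 40, 80, 75],
    (1, "High"): [20, 70, 82, 58],
    (2, "Low"): [150, 188, 159, 126],
    (2, "Medium"): [136, 122, 106, 115],
    (2, "High"): [25, 70, 58, 45],
    (3, "Low"): [138, 110, 168, 160],
    (3, "Medium"): [174, 120, 150, 139],
    (3, "High"): [96, 104, 82, 60],
}
materials = [1, 2, 3]
temperatures = ["Low", "Medium", "High"]
n = 4  # batteries per (material, temperature) cell

values = [y for cell in life.values() for y in cell]
N = len(values)
correction = sum(values) ** 2 / N  # grand total squared over N


def level_total(factor_index, level):
    """Total life over all cells at one level of a factor (0 = material, 1 = temperature)."""
    return sum(sum(cell) for key, cell in life.items() if key[factor_index] == level)


ss = {}
ss["material"] = (
    sum(level_total(0, m) ** 2 for m in materials) / (n * len(temperatures)) - correction
)
ss["temperature"] = (
    sum(level_total(1, t) ** 2 for t in temperatures) / (n * len(materials)) - correction
)
ss_cells = sum(sum(cell) ** 2 for cell in life.values()) / n - correction
ss["interaction"] = ss_cells - ss["material"] - ss["temperature"]
ss["error"] = sum(y * y for y in values) - correction - ss_cells  # within-cell variation

df = {
    "material": len(materials) - 1,
    "temperature": len(temperatures) - 1,
    "interaction": (len(materials) - 1) * (len(temperatures) - 1),
    "error": N - len(life),
}
ms = {source: ss[source] / df[source] for source in ss}
f_ratio = {s: ms[s] / ms["error"] for s in ("material", "temperature", "interaction")}

for source in ("material", "temperature", "interaction", "error"):
    line = f"{source:12s} SS={ss[source]:10.2f} df={df[source]:2d} MS={ms[source]:9.2f}"
    if source in f_ratio:
        line += f" F={f_ratio[source]:6.2f}"
    print(line)
```

Each F ratio divides a between-group mean square by the within-cell (error) mean square, which is the comparison of variability between groups to variability within groups that gives ANOVA its name.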

5. Dummy-Variable Regression and Analysis of Variance

Sociology 740, John Fox, Lecture Notes. 5. Dummy-Variable Regression and Analysis of Variance. Copyright © 2014 by John Fox. 1. Introduction: One of the limitations of multiple-regression analysis is that it accommodates only quantitative explanatory variables. Dummy-variable regressors can be used to incorporate qualitative explanatory variables into a linear model, substantially expanding the range of application of regression analysis. 2. Goals: To show how dummy regressors can be used to represent the categories of a qualitative explanatory variable in a regression model. To introduce the concept of interaction between explanatory variables, and to show how interactions can be incorporated into a regression model by forming interaction regressors. To introduce the principle of marginality, which serves as a guide to constructing and testing terms in complex linear models. To show how incremental F-tests are employed to test terms in dummy regression models. To show how analysis-of-variance models can be handled using dummy variables. 3. A Dichotomous Explanatory Variable: The simplest case is one dichotomous and one quantitative explanatory variable. Assumptions: (i) relationships are additive, that is, the partial effect of each explanatory variable is the same regardless of the specific value at which the other explanatory variable is held constant; (ii) the other assumptions of the regression model hold. The motivation for including a qualitative explanatory variable is the same as for including an additional quantitative explanatory variable: to account more fully for the response variable, by making the errors smaller; and to avoid a biased assessment of the impact of an explanatory variable, as a consequence of omitting another explanatory variable that is related to it.

Analysis of Variance and Design of Experiments-I

Analysis of Variance and Design of Experiments-I. MODULE IV, LECTURE 19: EXPERIMENTAL DESIGNS AND THEIR ANALYSIS. Dr. Shalabh, Department of Mathematics and Statistics, Indian Institute of Technology Kanpur. Design of experiment means how to design an experiment, in the sense of how the observations or measurements should be obtained to answer a query in a valid, efficient and economical way. The designing of the experiment and the analysis of the obtained data are inseparable. If the experiment is designed properly, keeping in mind the question, then the data generated are valid and a proper analysis of the data provides valid statistical inferences. If the experiment is not well designed, the statistical inferences are of questionable validity and may be invalid. It is important to understand first the basic terminologies used in experimental design. Experimental unit: For conducting an experiment, the experimental material is divided into smaller parts and each part is referred to as an experimental unit. The experimental unit is randomly assigned to a treatment. The phrase “randomly assigned” is very important in this definition. Experiment: A way of getting an answer to a question which the experimenter wants to know. Treatment: Different objects or procedures which are to be compared in an experiment are called treatments. Sampling unit: The object that is measured in an experiment is called the sampling unit. This may be different from the experimental unit. Factor: A factor is a variable defining a categorization. A factor can be fixed or random in nature. A factor is termed a fixed factor if all the levels of interest are included in the experiment.

Block Designs and Graph Theory*

JOURNAL OF COMBINATORIAL THEORY 1, 132-148 (1966). Block Designs and Graph Theory*. JANE W. DI PAOLA, The City University of New York. Communicated by R. C. Bose. INTRODUCTION: The purpose of this paper is to demonstrate the relation of balanced incomplete block designs to certain concepts of graph theory. The set of blocks of a balanced incomplete block design with $\lambda = 1$ is shown to be related to a maximum internally stable set of vertices of a suitably defined graph. The development yields also an upper bound for the internal stability number of a large subclass of a class of graphs which we call “graphs on binomial coefficients.” In a different but related context every balanced incomplete block design with $\lambda = 1$ is shown to be a solution of a suitably defined irreflexive relation. Some examples of relativizations and extensions of solutions of irreflexive relations (as developed by Richardson [13-15]) are generated as a result of the concepts derived. PRELIMINARY RESULTS: A balanced incomplete block design (BIBD) is a set of $v$ elements arranged in $b$ blocks of $k$ elements each in such a way that each element occurs $r$ times and each unordered pair of distinct elements determines $\lambda$ distinct blocks. The $v, b, r, k, \lambda$ are called the parameters of the design. Following a suggestion implied by Berge [2] we introduce the DEFINITION. A graph on the binomial coefficient $\binom{v}{k}$ with edge parameter $\lambda$, written $G\binom{v}{k}_{\lambda}$, is a graph whose vertices are the $\binom{v}{k}$ possible $k$-tuples which can be formed from $v$ elements and having as adjacent vertices those pairs of vertices which have more than $\lambda$ and less than $k$ elements in common. * Research on which this paper is based supported by the U. S. Army Research Office-Durham under Contract No. DA-31-124-ARO-D-366.

On the Polyhedral Geometry of t-Designs

ON THE POLYHEDRAL GEOMETRY OF t-DESIGNS. A thesis presented to the faculty of San Francisco State University in partial fulfilment of the requirements for the degree Master of Arts in Mathematics, by Steven Collazos. San Francisco, California, August 2013. Copyright by Steven Collazos 2013. CERTIFICATION OF APPROVAL: I certify that I have read ON THE POLYHEDRAL GEOMETRY OF t-DESIGNS by Steven Collazos and that in my opinion this work meets the criteria for approving a thesis submitted in partial fulfillment of the requirements for the degree: Master of Arts in Mathematics at San Francisco State University. Matthias Beck, Associate Professor of Mathematics; Felix Breuer; Federico Ardila, Associate Professor of Mathematics. ON THE POLYHEDRAL GEOMETRY OF t-DESIGNS, Steven Collazos, San Francisco State University, 2013. Lisonek (2007) proved that the number of isomorphism types of $t$-$(v, k, \lambda)$ designs, for fixed $t$, $v$, and $k$, is quasi-polynomial in $\lambda$. We attempt to describe a region in connection with this result. Specifically, we attempt to find a region $F$ of $\mathbb{R}^d$ with the following property: for every $x \in \mathbb{R}^d$, we have that $|F \cap Gx| = 1$, where $Gx$ denotes the $G$-orbit of $x$ under the action of $G$. As an application, we argue that our construction could help lead to a new combinatorial reciprocity theorem for the quasi-polynomial counting isomorphism types of $t$-$(v, k, \lambda)$ designs. I certify that the Abstract is a correct representation of the content of this thesis. Chair, Thesis Committee; Date. ACKNOWLEDGMENTS: I thank my advisors, Dr. Matthias Beck and Dr.

On Doing Geometry in CAYLEY

On doing geometry in CAYLEY. PJC, November 1988. 1 Introduction. These notes were produced in response to a question from John Cannon: what issues are involved in adding facilities to CAYLEY for doing finite geometry? More specifically, what facilities would geometers want? At first sight, the idea of geometry in CAYLEY seems very natural. Groups and geometry have been intimately linked since long before the time of Klein. Many of the groups with which CAYLEY users deal act naturally on geometries. An important point which I’ll touch on briefly later is this: if we’re given a group which happens to be a classical group, many algorithmic questions (e.g. finding particular kinds of subgroups) become much easier once we’ve found and coordinatised the geometry on which the group acts. With further thought, however, we see how opposed in spirit the two areas are. Everyone agrees on what a group is. Moreover, there are only a few ways in which a group is likely to be presented, and CAYLEY has facilities to deal with each of these. In each case, these are basic algorithms of such subtlety as to deter the casual user from implementing them, surely one of the facts that called CAYLEY into being in the first place. On the other hand, the sheer variety of finite geometries daunts the beginner, who meets projective, affine and polar spaces, buildings, generalised polygons, (semi) partial geometries, t-designs, Steiner systems, Latin squares, nets, codes, matroids, permutation geometries, to name just a few. The range of questions asked about them is even more bewildering.

THE ONE-SAMPLE Z TEST

10 THE ONE-SAMPLE z TEST: Only the Lonely. Difficulty Scale ☺ ☺ ☺ (not too hard: this is the first chapter of this kind, but you know more than enough to master it). WHAT YOU WILL LEARN IN THIS CHAPTER: • Deciding when the z test for one sample is appropriate to use • Computing the observed z value • Interpreting the z value • Understanding what the z value means • Understanding what effect size is and how to interpret it. INTRODUCTION TO THE ONE-SAMPLE z TEST: Lack of sleep can cause all kinds of problems, from grouchiness to fatigue and, in rare cases, even death. So, you can imagine health care professionals’ interest in seeing that their patients get enough sleep. This is especially the case for patients who are ill and have a real need for the healing and rejuvenating qualities that sleep brings. Dr. Joseph Cappelleri and his colleagues looked at the sleep difficulties of patients with a particular illness, fibromyalgia, to evaluate the usefulness of the Medical Outcomes Study (MOS) Sleep Scale as a measure of sleep problems. Although other analyses were completed, including one that compared a treatment group and a control group with one another, the important analysis (for our discussion) was the comparison of participants’ MOS scores with national MOS norms. Such a comparison between a sample’s mean score (the MOS score for participants in this study) and a population’s mean score (the norms) necessitates the use of a one-sample z test. Copyright ©2020 by SAGE Publications, Inc. This work may not be reproduced or distributed in any form or by any means without express written permission of the publisher.